
2020 was a very tough year for everyone. It was a year full of emotions, constant adaptation and transformation, both in our private and professional lives. All of this was driven by a nasty piece of RNA that spread around the world and upended our comfortable status quo. Thanks to the enormous work of scientists and professionals all over the world, 2021 is a year of hope: hope that the pandemic will be brought under control, and that we will adapt to the “new normal”, because we will certainly not go back to our pre-pandemic reality.

How does it impact the data management field? The pandemic boosted digitalisation, so data became even more important for companies than it was before. Given that Big Data technologies have evolved significantly over the past two years, we can expect the upcoming year to be pretty interesting.

At GetInData we work with various customers globally, so we can track how data platforms evolve and how data products are perceived in different companies. We are also tech enthusiasts and early adopters of new tech stacks (not to mention our contributions to Open Source), so we had an internal discussion about what to expect in the data management field in the future. Here is a summary of what we think will be the trends in the Big Data ecosystem in 2021.

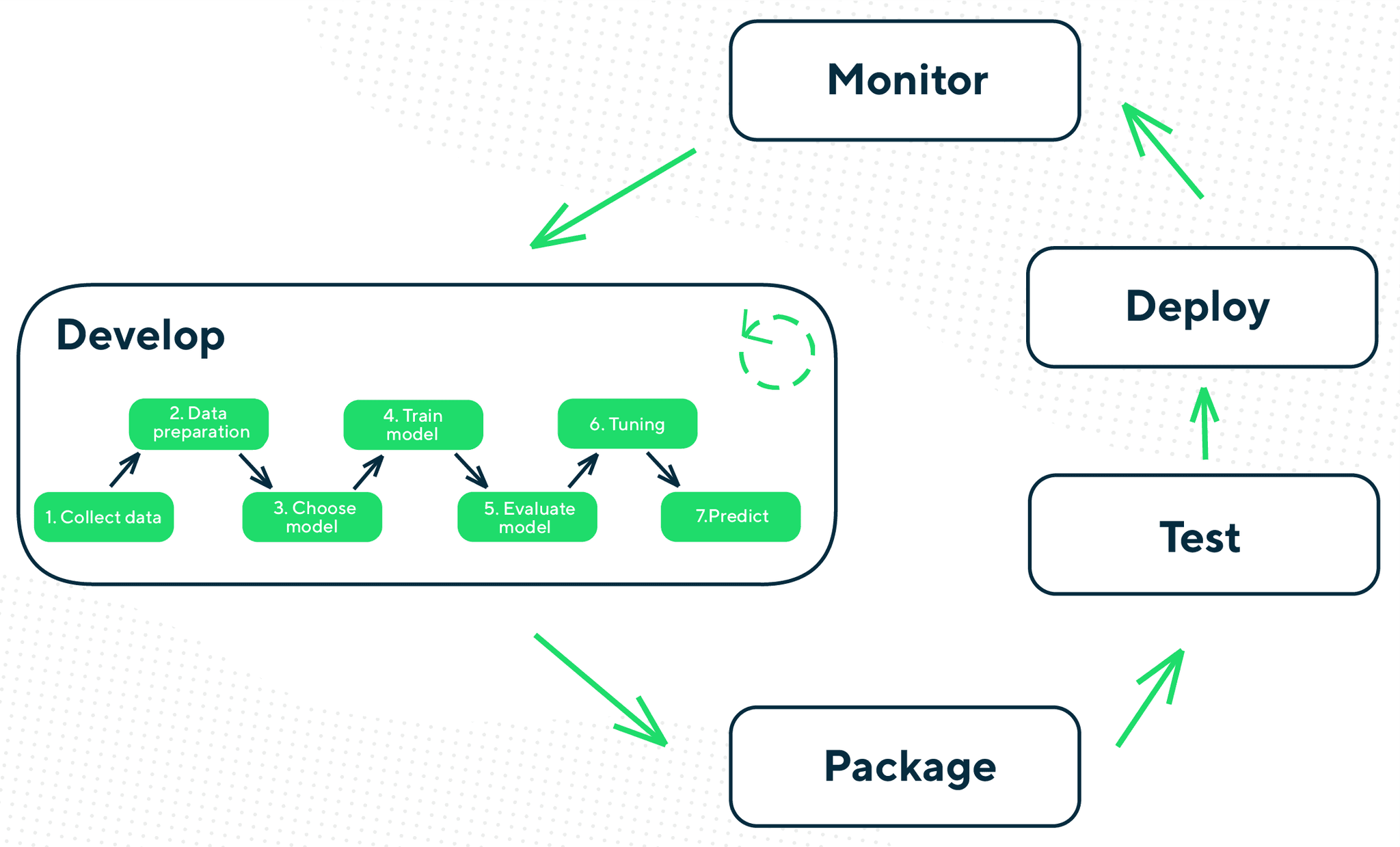

Machine Learning Operations (MLOps) was already a hot topic in 2020, but in our opinion the upcoming year will be the time for wide adoption of this paradigm. Last year many companies hit a wall when scaling up their Machine Learning modelling, and solving this problem became their number one priority. Some of them have already done research or proof-of-concept projects to test out some of the technologies. However, MLOps should not be considered just a technology for automating ML modelling and serving. It is more about setting up a cross-functional process of creating, testing and serving ML models, one that involves different IT capabilities in the organization that did not work closely together before. It is also a way to bridge the knowledge gap between data analytics teams and IT platform teams, as their experience with technologies is usually complementary but hardly overlapping. So if you are just planning to start ML initiatives in your organization, it might be a good idea to shape them as a full MLOps implementation with proper training, knowledge sharing and good practices in place. Rather than spending time finding your own way to do ML efficiently, such a program could put you on the right path from the start.

From a technology stack perspective, we have a few tools that are de facto industry standards in their areas (Jupyter, Pandas), but there are many different ideas about how to tackle this matter. They have been turned into software products, software components and whole platforms, with new names popping up pretty frequently. The next year or two will show us which approach will become an industry standard.

Real-time analytics implemented with stream processing engines has been around for a while already. What is worth noticing, however, is that these engines have gone from being very complicated pieces of software, implemented and maintained only by experienced professionals, to fairly accessible products that even less tech-savvy people can work with. For example, numerous APIs, like the SQL and Python support in Flink, mean that everyone can find something for themselves. Stream processing capabilities available at your fingertips in public clouds make it even easier to start. In fact, in many cases there is little extra cost in switching from batch to real-time processing, and there are benefits to such an approach, as these frameworks already have capabilities in place that make your data ingestion less error-prone and messy. With a gentler learning curve, more use cases can be considered. In many business environments there is an increasing appreciation for having data not only on time but online, available almost instantly, so that you can actually act upon it. As we all know, the value of information decreases over time. Data consumers demand information now, not the next business day. Business stakeholders finally have the opportunity to get something out of it for themselves.

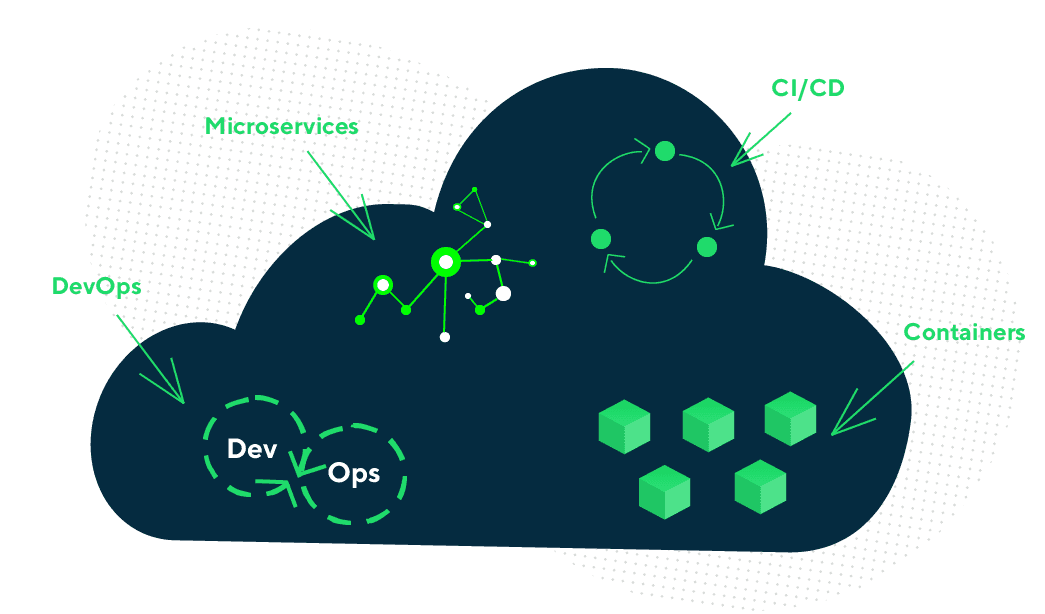

The classic Hadoop environment, as we all know, is a technology in decline. Changes among the vendors that supported it commercially have only hastened the end of its domination in the Big Data ecosystem (not to mention public cloud providers, whose data analytics offerings have become alluring alternatives). The cloud-native movement, with its containerised software running on modern orchestrators, the everything-as-code paradigm and programmatic infrastructure, has changed the way we build and serve applications. The data management world picked that up with a bit of hesitation and legacy baggage, but by now no one is questioning the direction in which our data platforms are going to evolve. Clear separation of data storage from processing and querying is still progressing in Open Source software, but you can already work like that in the public cloud environment. In 2021 everyone is looking for Spark fully supported on Kubernetes. There is still an open question about data storage for on-premise deployments: HDFS seems to be the most solid and performant solution, but there are a few initiatives aimed at solving the storage problem for data-intensive applications, such as Ozone. From the user perspective, query federation engines, like Trino (formerly known as PrestoSQL) or BigQuery, are the next way of working with distributed data sources, and the upcoming year will definitely increase their adoption. There is also the brand new concept of data mesh, with its domain-oriented and decentralised data paradigm, but before we start thinking about the possibilities it opens up and learning about its challenges, we first need to take in the fact that we can easily go beyond our data lake.

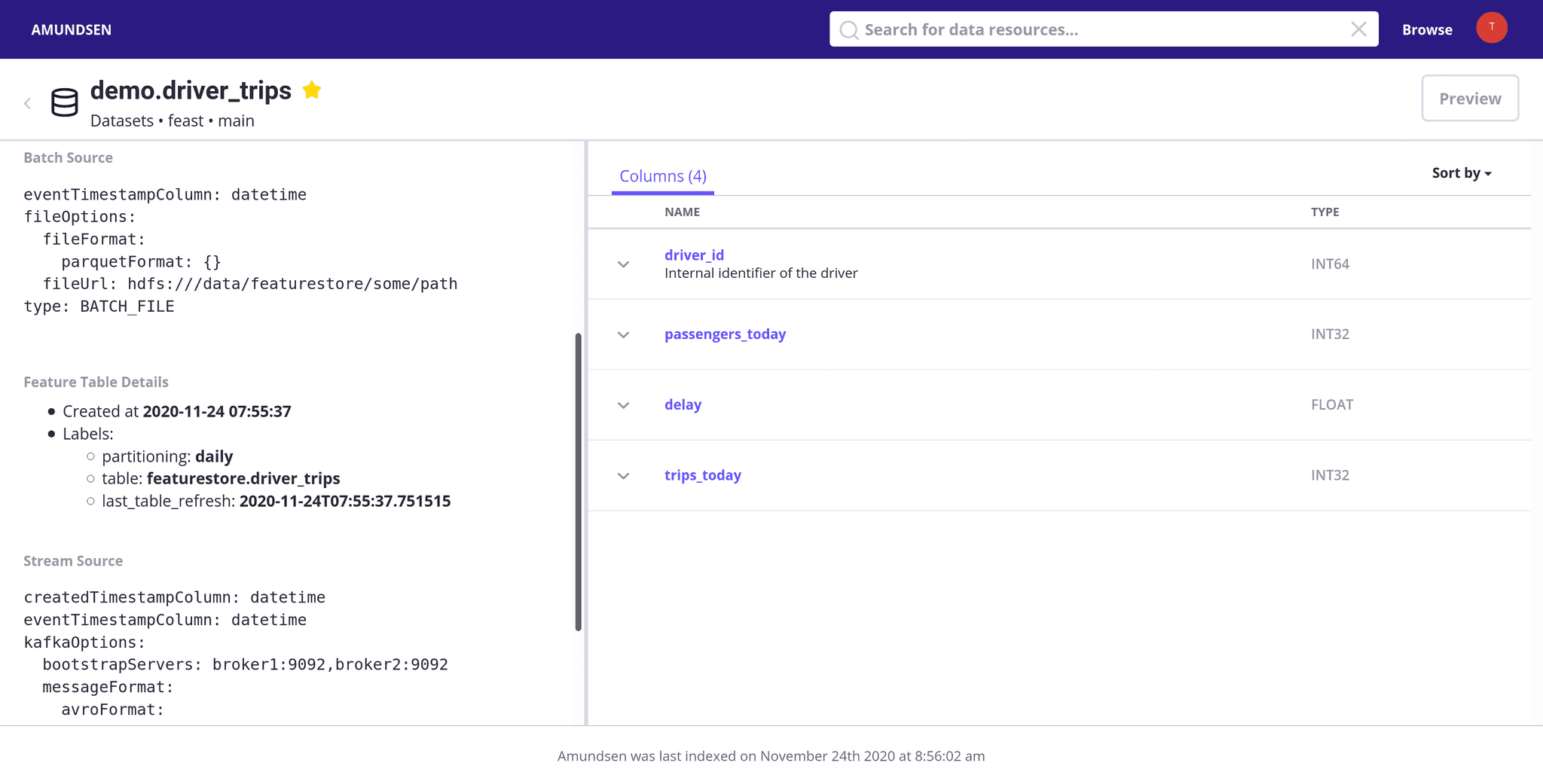

While the idea of having a central catalog of all your data assets is nothing new, adoption has been limited, except perhaps at tech companies, which made it the starting point for their data scientists' work. Data-driven companies, where all data is widely available for everyone to analyse, found it necessary to invest in such a solution. If your data is still maintained in organizational silos, you might not see the value in Data Discovery. However, once you start leaving the well-trodden paths of your analytics, you will face the challenge of maintaining knowledge about your data before your data scientists get flooded with the datasets you make available to them. Such a catalog usually includes not only information about data sources but also metadata such as profiling or quality, so you can consciously pick the data for your analysis. Data Discovery also becomes a sort of knowledge management tool for the organization. However, this is not just a technological problem, as it is closely related to the Data Governance practices implemented in the organization. We see large potential for organizations in efficiently maintaining knowledge about their data.
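To make the idea of profiling metadata a bit more concrete, here is a minimal sketch in Python of what a catalog entry with basic column profiles could look like. It is only an illustration: the function names, the entry layout and the chosen metrics (row count, null ratio, distinct count) are our assumptions, not the format of any particular Data Discovery tool.

```python
def profile_column(values):
    """Compute a few basic profiling metrics for one column (hypothetical metric set)."""
    total = len(values)
    non_null = [v for v in values if v is not None]
    return {
        "row_count": total,
        "null_ratio": (total - len(non_null)) / total if total else 0.0,
        "distinct_count": len(set(non_null)),
    }


def build_catalog_entry(name, owner, rows):
    """Build an illustrative catalog entry: dataset description plus per-column profiles.

    `rows` is a list of dicts; a key missing from a row is treated as a null value.
    """
    columns = sorted({key for row in rows for key in row})
    return {
        "dataset": name,
        "owner": owner,
        "columns": {
            col: profile_column([row.get(col) for row in rows]) for col in columns
        },
    }


if __name__ == "__main__":
    sample = [
        {"id": 1, "country": "PL"},
        {"id": 2, "country": None},
        {"id": 3},
    ]
    entry = build_catalog_entry("customers", "analytics-team", sample)
    for column, stats in entry["columns"].items():
        print(column, stats)
```

With metadata like this attached to every dataset, an analyst can see at a glance that a column is mostly empty before building an analysis on top of it.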

There are two more areas that we think will be growing next year. As today's data pipelines are more likely to be structured as code, the question of maintaining data quality and observability arises. DataOps is nothing more than DevOps adjusted to data processing. While the pipelines currently running may still live in ETL tools with fancy graphical user interfaces, there will probably be far fewer concerns about building new ones purely as code, with rich testing practices and reproducibility.
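As a small illustration of a pipeline built "with just code" and tested like any other software, the sketch below shows a single transformation step and a data quality check in plain Python. The `Order` record, the field names and the two quality rules are hypothetical and chosen only for the example; a real pipeline would typically use a dedicated processing or testing framework.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Order:
    order_id: str
    amount: float
    currency: str


def clean_orders(raw_rows):
    """Pipeline step: parse raw dict rows and drop the ones that fail validation."""
    cleaned = []
    for row in raw_rows:
        try:
            amount = float(row["amount"])
        except (KeyError, TypeError, ValueError):
            continue  # missing or non-numeric amount
        if amount < 0 or not row.get("order_id"):
            continue  # negative amount or empty identifier
        currency = str(row.get("currency", "")).upper()
        cleaned.append(Order(str(row["order_id"]), amount, currency))
    return cleaned


def check_quality(orders):
    """Data quality checks: return a list of human-readable violations."""
    problems = []
    ids = [o.order_id for o in orders]
    if len(ids) != len(set(ids)):
        problems.append("duplicate order_id values")
    if any(len(o.currency) != 3 for o in orders):
        problems.append("currency is not a 3-letter code")
    return problems


if __name__ == "__main__":
    raw = [
        {"order_id": "a1", "amount": "10.5", "currency": "eur"},
        {"order_id": "a2", "amount": "oops", "currency": "usd"},
    ]
    orders = clean_orders(raw)
    print(orders)
    print(check_quality(orders))
```

Because both the step and the checks are ordinary functions, they can run in a CI job on sample data before every deployment, which is the kind of reproducibility that is hard to achieve with a GUI-only ETL tool.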

Last but not least, public cloud offerings for analytics are finally a real alternative for data management. Major vendors not only follow the latest advancements in Big Data technology, but in many cases actively participate in charting its development paths. Since moving to the cloud lets you focus on shaping your data product to support your use cases, instead of managing the complexity of all the moving parts, many companies want to try it out in the upcoming year. This also holds for companies in heavily regulated sectors.

Last year showed us that talking about trends and trying to predict what is going to be hot next year can be really tricky when reality decides to play a game with us and turn everything upside down. The year 2021 seems to be more under control, but still comes with a huge dose of uncertainty. In data management, however, we do not expect revolutions. It is more of a constant evolution, but with more attention from stakeholders, as digitalisation has become the only way forward for many companies.
