Last month, I had the pleasure of speaking at the latest Flink Forward event, organized by Ververica in Seattle. Having been a part of the Flink Forward conference since 2016, I'm continually impressed by how many big data pioneers use Flink and how they set trends that other companies follow.

This year's event was full of great presentations, and I was excited to talk about our Streaming Analytics Platform, powered by Flink, Kafka, dbt and more, which enables organizations to scale their real-time capabilities. If you want to know more about it, please contact us. Here, I would like to share some key takeaways and reflections from the conference. I hope you enjoy them!

Streamhouse - another buzzword or a rising star?

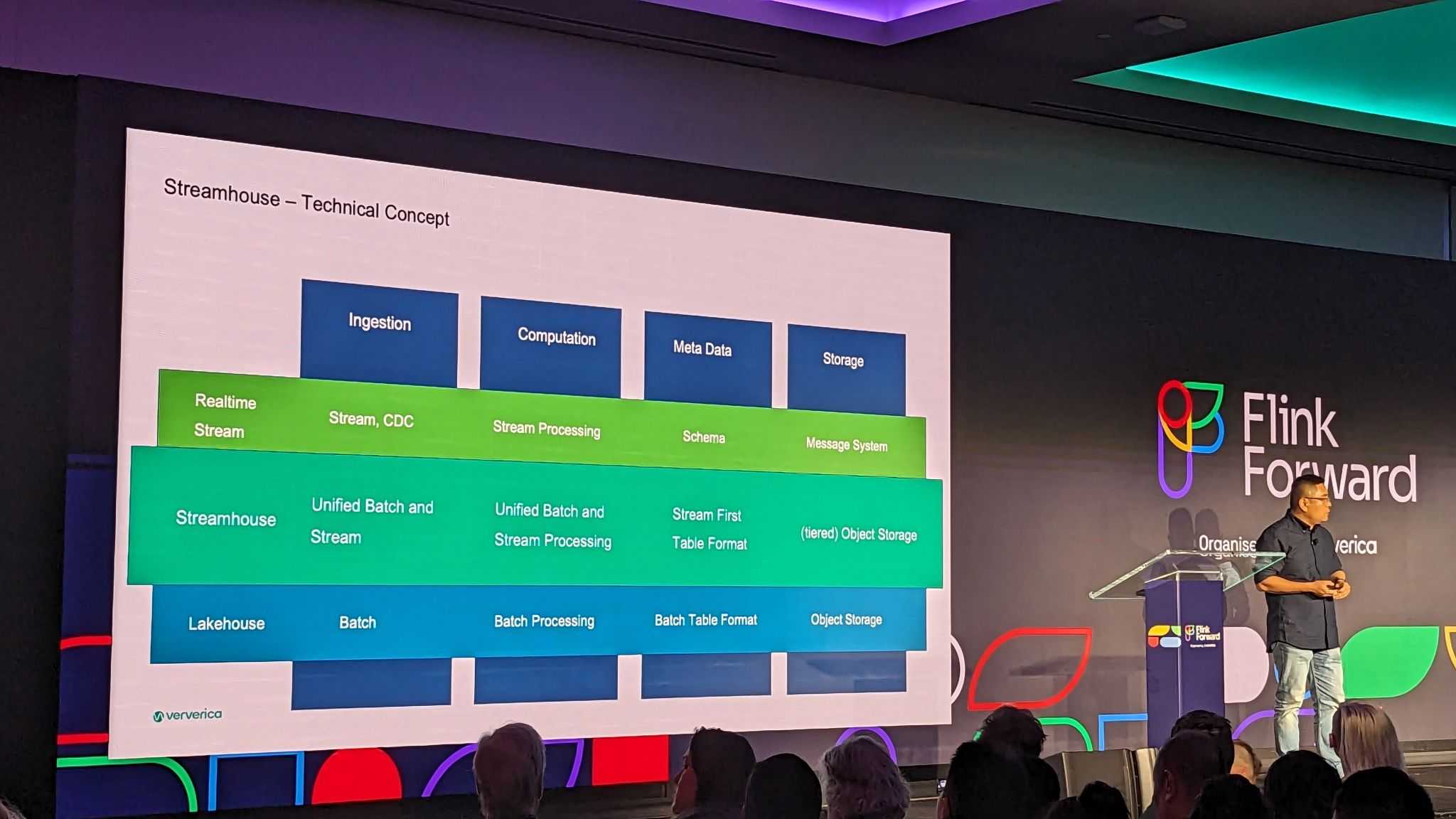

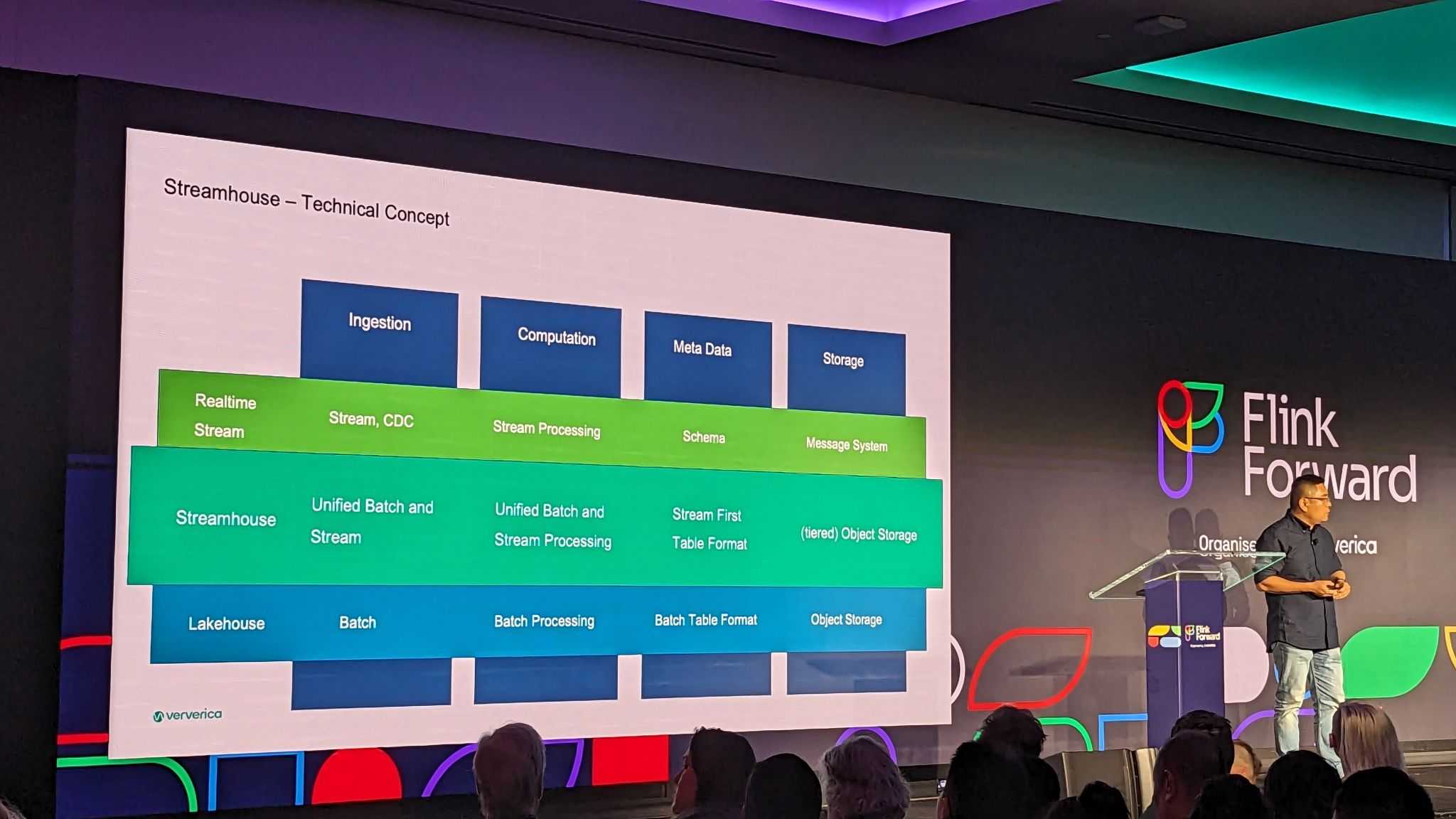

In one of the keynote presentations, Ververica introduced an incredibly interesting concept they called "Streamhouse". A Streamhouse is a Lakehouse that can not only ingest streaming data such as CDC, but also process it and deliver the results as streams, with relatively low data latency.

This concept is enabled by a new table format, Apache Paimon (similar to, e.g., Iceberg or Delta Lake), which has some unique capabilities. It allows engines like Flink and Spark to insert, update and delete data in real time. Additionally, a Streamhouse supports efficient lookup joins and lets you monitor changes within tables.
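To make "inserting, updating and deleting" plus "monitoring changes" more concrete, here is a minimal in-memory sketch of the semantics of a primary-key table that emits a changelog and serves lookup joins. It is not Paimon's API or storage design; the class and function names are hypothetical, and the row-kind labels (+I, -U, +U, -D) borrow Flink's changelog notation.

```python
from dataclasses import dataclass, field
from typing import Any, Dict, Iterable, Iterator, List, Optional, Tuple

# Changelog entry: (row_kind, row). Labels follow Flink's short notation:
# +I insert, -U update-before, +U update-after, -D delete.
Change = Tuple[str, Dict[str, Any]]


@dataclass
class PrimaryKeyTable:
    """Hypothetical in-memory model of a primary-key table with a changelog."""
    key: str
    rows: Dict[Any, Dict[str, Any]] = field(default_factory=dict)
    changelog: List[Change] = field(default_factory=list)

    def upsert(self, row: Dict[str, Any]) -> None:
        k = row[self.key]
        old = self.rows.get(k)
        if old is None:
            self.changelog.append(("+I", row))
        else:
            # An update is emitted as a retraction of the old row plus the new row.
            self.changelog.append(("-U", old))
            self.changelog.append(("+U", row))
        self.rows[k] = row

    def delete(self, k: Any) -> None:
        old = self.rows.pop(k, None)
        if old is not None:
            self.changelog.append(("-D", old))

    def lookup(self, k: Any) -> Optional[Dict[str, Any]]:
        # Point lookup by primary key, which is what a lookup join relies on.
        return self.rows.get(k)


def lookup_join(stream: Iterable[Dict[str, Any]], table: PrimaryKeyTable,
                fk: str) -> Iterator[Dict[str, Any]]:
    """Enrich each streaming event with the table's current row for its key."""
    for event in stream:
        match = table.lookup(event[fk])
        if match is not None:  # inner-join semantics: drop events without a match
            yield {**event, **match}


customers = PrimaryKeyTable(key="customer_id")
customers.upsert({"customer_id": 1, "tier": "gold"})
customers.upsert({"customer_id": 1, "tier": "platinum"})
customers.upsert({"customer_id": 2, "tier": "silver"})
customers.delete(2)
print([kind for kind, _ in customers.changelog])  # ['+I', '-U', '+U', '+I', '-D']

orders = [{"order_id": 10, "customer_id": 1}, {"order_id": 11, "customer_id": 2}]
joined = list(lookup_join(orders, customers, "customer_id"))
# Only order 10 is enriched; customer 2 was deleted before the orders arrived.
```

The changelog is what downstream streaming jobs would consume to "monitor changes", while the lookup path serves enrichment; a real table format persists both durably instead of keeping them in memory.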

The Streamhouse concept sits in the middle ground between non-streaming Lakehouses built with Iceberg or Delta Lake and fully streaming architectures built on Kafka. When companies want to lower their data latency, they usually consider rebuilding their pipelines to work on Kafka topics (e.g. Kafka -> Flink -> Kafka). However, that's quite an investment. A Streamhouse can be much simpler to work with, while still providing low enough data latency. Building a Lakehouse is also a goal in itself, so it can be built with streaming capabilities from the start.

In my opinion, Streamhouse is a serious open-source contender to proprietary features like Snowflake's Dynamic Tables and Databricks' Delta Live Tables. It could be more efficient in terms of resources and cost, while also being more powerful for analytics. It certainly avoids lock-in to proprietary software and gives the freedom of choice that many companies look for. Nevertheless, this has yet to be proven. I can't wait to read some comparisons or, more likely, run them myself!

Lakehouse, especially Apache Iceberg, is king

I have already mentioned Apache Iceberg a few times, and it's not a coincidence, because it was a very hot topic at the conference: three presentations from Apple and one from Pinterest. They presented several achievements of their data platforms, such as robust schema evolution, leveraging the Iceberg REST catalog, ingesting CDC into Iceberg and even (competing with Paimon's main strength) building streaming pipelines on Iceberg!

In my experience, Apache Iceberg is currently the Lakehouse format favored by the Flink community and, together with Paimon, it can become the backbone of the Streamhouse concept.

Iceberg is not the only format used to build Lakehouses, though. DoorDash bets on Delta Lake and showed how they use Flink to ingest data into it, from where it is pulled further into Snowflake in near real time.

When a company taps into digital data such as events and usage logs, adopting a Lakehouse is an inevitable step. It fits well into the architecture next to (or in front of) the data warehouse, supporting efficient big data workloads, ML use cases and much more.

Flink SQL as the foundation of self-service platforms

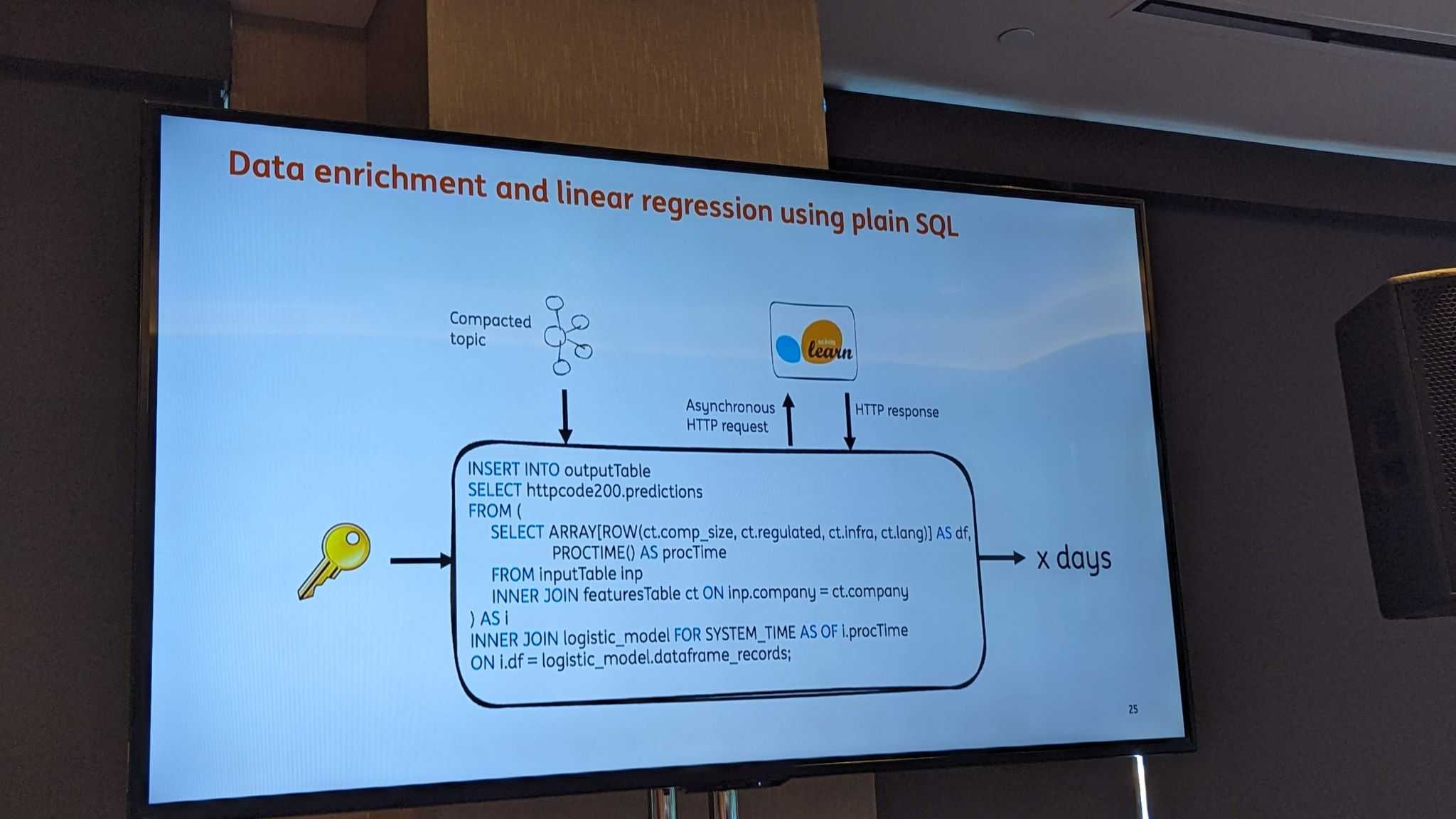

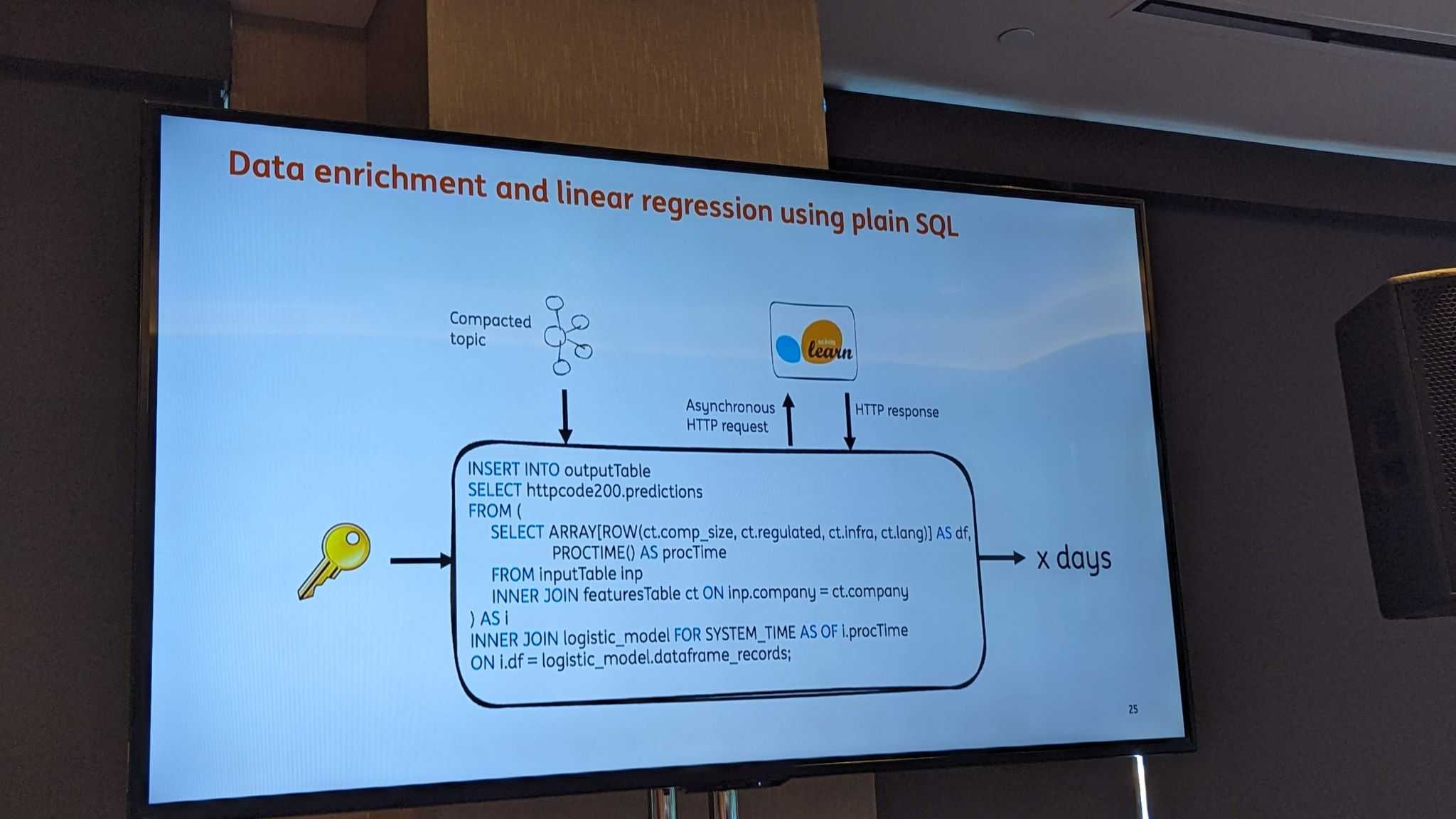

Using Flink in its raw form, through the Java/Python APIs, is still not a trivial task. That's why companies invest in building platforms where Flink SQL is the main interface; that's their key to lowering the barrier to entry and democratizing streaming. During the conference, LinkedIn showed how their semi-technical engineers build Flink SQL apps for critical use cases (like Search).

Another company, DoorDash, explained how their product engineers can monitor their products' key metrics in real time by defining those metrics in Flink SQL. Then there was ING's presentation, where they showed how their data scientists use Flink SQL to build streaming apps that perform ML model inference, using an HTTP connector (check out our Flink HTTP connector).
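A real-time product metric defined in Flink SQL often boils down to a windowed aggregation, for example counting events per one-minute tumbling window. The plain-Python sketch below shows what such a metric computes; it is not DoorDash's setup or Flink code, the event fields (`ts` in seconds, `status`) and the 60-second window are illustrative assumptions, and it ignores watermarks and late events, which Flink handles when deciding that a window is complete.

```python
from collections import defaultdict
from typing import Dict, Iterable, Tuple

WINDOW_SECONDS = 60  # assumed window size, analogous to a 1-minute TUMBLE window


def tumbling_counts(events: Iterable[dict],
                    size: int = WINDOW_SECONDS) -> Dict[Tuple[int, str], int]:
    """Count events per (window_start, status) in fixed, non-overlapping windows."""
    counts: Dict[Tuple[int, str], int] = defaultdict(int)
    for e in events:
        window_start = e["ts"] - e["ts"] % size  # align event time to its window
        counts[(window_start, e["status"])] += 1
    return dict(counts)


events = [
    {"ts": 5, "status": "delivered"},
    {"ts": 42, "status": "cancelled"},
    {"ts": 59, "status": "delivered"},
    {"ts": 61, "status": "delivered"},
]
metrics = tumbling_counts(events)
print(metrics)  # {(0, 'delivered'): 2, (0, 'cancelled'): 1, (60, 'delivered'): 1}
```

The appeal of the SQL interface is that a product engineer only has to declare the grouping and the window; the engine takes care of state, time and fault tolerance.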

Finally, it's worth mentioning my own presentation here, in which I demonstrated how we build streaming platforms for clients across many industries, with Flink SQL as the interface for ETL, analytics and delivery in real time. This way, we enable a wider audience of semi-technical users to build critical real-time features of their products or services, which is much needed in today's competitive markets.

What's more, Decodable, Ververica, Aiven and IBM all build their managed services with SQL as the main interface.

Everyone is CDC-ing

What was surprising at Flink Forward is that Change Data Capture (CDC) from operational databases is the fundamental capability people build with Flink. It's the foundation of LinkedIn's, Apple's, Alibaba's and others' platforms. It's also one of the main use cases for Decodable and Ververica. Now, with solid Debezium-based CDC connectors, it's possible to stream to (and through) Lakehouses and into data warehouses like Snowflake, so people use CDC for things like real-time BI, streaming applications, ML feature engineering and ML applications.
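Debezium change events carry an `op` code (`c` create, `u` update, `d` delete, `r` snapshot read) together with `before` and `after` row images. The sketch below replays such events into an in-memory replica keyed by primary key, which is essentially what a CDC pipeline does when it keeps a copy of an operational table up to date. The events are simplified to `op`, `before` and `after` (the real envelope also carries `source` metadata and timestamps), and the `id` key column and `apply_change` helper are hypothetical.

```python
import json
from typing import Any, Dict, Optional


def apply_change(replica: Dict[Any, dict], event: Optional[dict], key: str = "id") -> None:
    """Apply one simplified Debezium-style change event to a keyed replica."""
    if event is None:
        return  # Kafka tombstone that follows a delete; nothing to apply
    op = event["op"]
    if op in ("c", "r", "u"):
        row = event["after"]
        replica[row[key]] = row  # insert or overwrite with the new row image
    elif op == "d":
        replica.pop(event["before"][key], None)  # delete using the old image's key
    else:
        raise ValueError(f"unsupported op: {op!r}")


raw_events = [
    '{"op": "r", "before": null, "after": {"id": 1, "email": "a@example.com"}}',
    '{"op": "c", "before": null, "after": {"id": 2, "email": "b@example.com"}}',
    '{"op": "u", "before": {"id": 1, "email": "a@example.com"},'
    ' "after": {"id": 1, "email": "a2@example.com"}}',
    '{"op": "d", "before": {"id": 2, "email": "b@example.com"}, "after": null}',
    'null',
]

replica: Dict[Any, dict] = {}
for line in raw_events:
    apply_change(replica, json.loads(line))
print(replica)  # {1: {'id': 1, 'email': 'a2@example.com'}}
```

In a real deployment the replica would be a Lakehouse or warehouse table rather than a dict, and ordering per key is what makes this simple replay correct.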

Batch and Stream processing unified... It's a journey.

Improving Flink's batch engine is still one of the Flink community's highest priorities. During Flink Forward, the largest players showed that it is already solid.

Alibaba is especially strong here: its engineers showed how they use the batch engine and how they improved it to handle their use cases at Alibaba scale. To be honest, if it works at their scale, at whose scale might it not work? :)

Another company, ByteDance, presented how they do data ingestion with a framework they implemented on Flink, in both streaming and batch modes. LinkedIn also underlined how important a single interface for batch and streaming was to them, so that people don't need to learn two systems or maintain the code base twice. It's worth mentioning that they took a different path, leveraging Apache Beam to run on Flink for streaming and on Spark for batch, because they already have lots of pipelines on Spark. Who knows, maybe they will also go for Flink for new or strategic batch pipelines?

There are lots of FLIPs (Flink Improvement Proposals) underway that aim to make the batch and streaming engines work together as seamlessly as possible: smart switching between them, mixing them in a single job, and automatically using the best engine for the task. The future is really promising.
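The promise of one interface for batch and streaming is that logic written once yields the same result whether it runs over a bounded dataset in one go or incrementally over an unbounded stream. The toy sketch below illustrates that property for a per-key sum; it is not Flink's (or Beam's) API, and all function names are hypothetical.

```python
from typing import Dict, Iterable, Iterator, Tuple

Record = Tuple[str, int]


def combine(acc: int, value: int) -> int:
    """The business logic, written once: here, a running sum."""
    return acc + value


def run_batch(records: Iterable[Record]) -> Dict[str, int]:
    """Bounded mode: consume all input, then emit the final result once."""
    totals: Dict[str, int] = {}
    for k, v in records:
        totals[k] = combine(totals.get(k, 0), v)
    return totals


def run_streaming(records: Iterable[Record]) -> Iterator[Tuple[str, int]]:
    """Unbounded mode: keep per-key state and emit an updated result per record."""
    state: Dict[str, int] = {}
    for k, v in records:
        state[k] = combine(state.get(k, 0), v)
        yield k, state[k]


records = [("a", 1), ("b", 2), ("a", 3)]
updates = list(run_streaming(records))  # [('a', 1), ('b', 2), ('a', 4)]
final_from_stream = dict(updates)       # the last update per key wins
print(final_from_stream == run_batch(records))  # True
```

The batch path can skip intermediate updates and per-record state bookkeeping, which is one reason engines try to pick the best execution mode automatically for the same logic.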

More Flink-based cloud services

There are now many options for running Flink or Flink-based workloads in the cloud: Ververica, Confluent, Aiven, Decodable, AWS, Cloudera, Alibaba and, most recently, IBM all offer them. This shows that the market is adopting Flink widely and that adoption is growing quickly, with many companies keen to invest in it.

Apache Flink 2.0 is on the horizon!

Last but not least, during the conference we heard more details about Flink 2.0. For those awaiting a big revolution, the main theme of the release might be disappointing, because it's about... removing deprecated APIs and features. That's the point of bumping the major version.

Of course, that's not all; a few of the goodies are genuinely interesting. The one I found most exciting is disaggregated state management. Flink originally kept state on local disks, which is extremely efficient but operationally... problematic. In version 2.0, Flink will use local disks (RocksDB) for caching only, while the primary copy of the data will be kept in remote storage. That should significantly simplify operations such as failure recovery and autoscaling.
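The core idea, a small local cache in front of a remote primary copy, can be sketched in a few lines. This is a conceptual model only: the class names are hypothetical, a dict stands in for remote storage, and the write-through policy is a simplification chosen for clarity; Flink 2.0's actual design differs, for example in how and when data reaches remote storage.

```python
from collections import OrderedDict
from typing import Any, Dict, Optional


class RemoteStore:
    """Stand-in for durable remote storage that holds the primary copy of state."""

    def __init__(self) -> None:
        self.data: Dict[str, Any] = {}
        self.reads = 0  # counts remote round-trips, i.e. local cache misses

    def get(self, key: str) -> Optional[Any]:
        self.reads += 1
        return self.data.get(key)

    def put(self, key: str, value: Any) -> None:
        self.data[key] = value


class DisaggregatedState:
    """Local LRU cache in front of remote storage (write-through, for simplicity)."""

    def __init__(self, remote: RemoteStore, cache_size: int = 2) -> None:
        self.remote = remote
        self.cache: "OrderedDict[str, Any]" = OrderedDict()
        self.cache_size = cache_size

    def _remember(self, key: str, value: Any) -> None:
        self.cache[key] = value
        self.cache.move_to_end(key)
        if len(self.cache) > self.cache_size:
            self.cache.popitem(last=False)  # evict the least recently used entry

    def get(self, key: str) -> Optional[Any]:
        if key in self.cache:  # fast path: served from local disk/memory
            self.cache.move_to_end(key)
            return self.cache[key]
        value = self.remote.get(key)  # slow path: fetch from the primary copy
        if value is not None:
            self._remember(key, value)
        return value

    def put(self, key: str, value: Any) -> None:
        self.remote.put(key, value)  # the primary copy always lives remotely
        self._remember(key, value)


remote = RemoteStore()
state = DisaggregatedState(remote, cache_size=2)
state.put("a", 1)
state.put("b", 2)
state.put("c", 3)  # "a" is evicted from the local cache, but not lost
state.get("c")     # local hit, no remote read
state.get("a")     # local miss, one remote read

# Simulate a failure or rescale: a fresh instance starts with an empty cache
# yet can still read every key, because nothing lived only on the local disk.
restored = DisaggregatedState(remote)
print(restored.get("b"))  # 2
```

This is why failure handling and autoscaling get simpler: a new or restarted worker does not need to download a full copy of its state before it can start processing.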

Summary

The Flink Forward conference has been organized by Ververica for a few years now and it was great to see how influential this event really is. The latest event in Seattle showcased the growing significance of real-time streaming analytics based on Apache Flink. From the innovative Streamhouse concept to the dominance of Lakehouses and the empowering role of Flink SQL, the future of Apache Flink looks promising. With the unification of batch and stream processing, together with the growing ecosystem of Flink-based cloud services, the appetite for investment in this dynamic technology is stronger than ever.

This is especially true for the BFSI (banking, financial services and insurance) industry, where streaming data is becoming increasingly necessary.

To sum up, the Flink Forward 2023 conference not only celebrated current achievements, but also showed that the future of real-time streaming analytics is brimming with possibilities.
