A Review of the Presentations at the DataMass Gdańsk Summit 2023

The Data Mass Gdańsk Summit is behind us. So, the time has come to review and summarize the 2023 edition. In this blog post, we will give you a review…

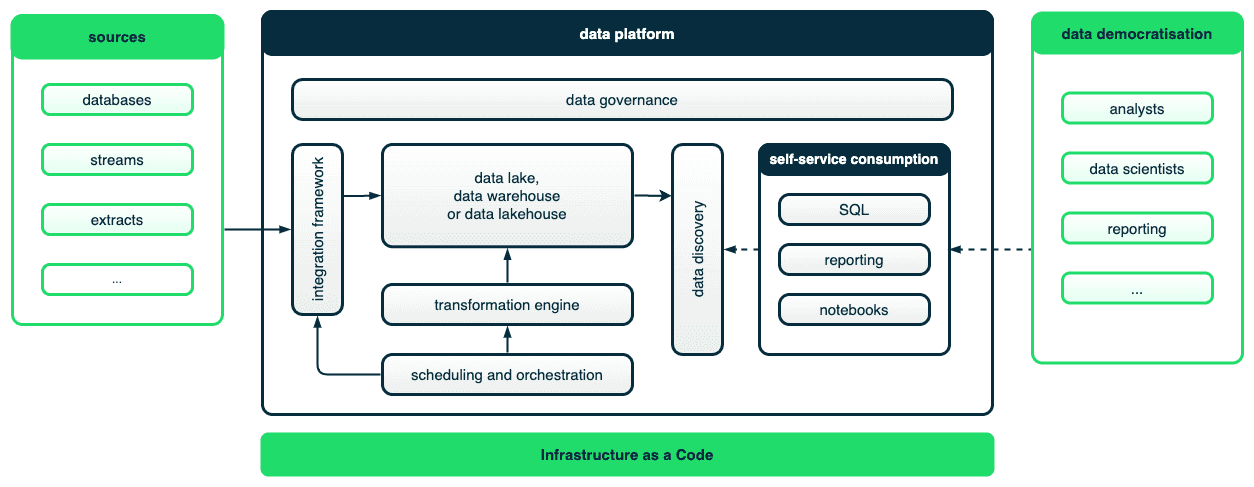

The Modern Data Platform (or Modern Data Stack) is on the lips of basically everyone in the data world right now. For some time now, we have observed in many of our clients the need for a more self-service approach to developing data-driven insights. One of the most frequently observed pain points was the lengthy time-to-value caused by complex, unstandardised data projects and pipelines that require a lot of DevOps and engineering effort. Data quality issues and the lack of a consistent, holistic approach to data testing only added to this frustration. Interestingly, this was a common theme in all types of business - from FinTech start-ups to big telco enterprises.

This is why we took a closer look at this topic (read our previous blog post: Modern Data Platform - the what's, why's and how's. Demystifying the buzzword) and outlined what new things the modern data stack brings to the industry and how that is even possible. We understood that there was a need to change the way data teams were organized and data-driven insights were produced, so we drew on our long industry experience and quickly turned this pattern into a repeatable solution. We also recognized that platform usability could not be fully realized with a bag of unintegrated tools, so we supplemented the design with our own tools to build a seamless analyst’s workflow.

Now we are ready to announce that we rolled up our sleeves and evolved this blurrily-defined concept into a solution - the GID Modern Data Platform, developed in our R&D Labs. In this article you will learn what it is all about - both from a functional and technical perspective. As usual - be prepared for a nicely cooked data meal containing best-in-class ingredients with an open source flavor (and no black-boxed recipes!).

If you’re new to the topic of the Modern Data Platform and have no clue what all the fuss is about - you can quickly get up to speed by listening to our Radio DaTa podcast recorded by Jakub Pieprzyk.

Every market trend has a reason - it is no different with the Modern Data Stack.

More and more products are being built around data, which means that data literacy is basically a must nowadays if you’d like to stay afloat in this rapidly changing market. Expectations for democratized data access are higher than ever across industries. This is why there is such a big need for self-service data platforms with a low-code approach, which make it possible for users - analysts or analytics engineers - to independently design, develop and maintain their data pipelines using best engineering practices. When you think about a Modern Data Stack, you probably instantly imagine a cloud data warehouse fed by modern data integration tools (e.g. Airbyte or Fivetran), data transformation using SQL (like in dbt) and interactive reports generated in a self-service business intelligence tool (like Looker) as the icing on top of the data product cake. These tools are definitely the key building blocks of every data platform, but they alone are not enough to build a seamless user experience. Some of the challenges analysts stumble upon include:

How to define data ingestion themselves without the help of data engineers?

How to work on their analysis without installing heavy tooling on their workstation?

How to transfer ingestion, transformation or BI code from development to production reliably?

How to make the results of data pipelines available in BI tools?

How to make a data catalog common across all these tools?

How to start a new project? Is there a template to start with?

The data infrastructure engineers are also troubled with some of the following questions:

Below you will discover how we addressed these challenges with our platform design. But before we dive into the design, let’s first define the target we are aiming at.

Let’s start from a definition of what we feel a Modern Data Platform should offer. This is a handful of key values which we had in mind when designing our platform. We divided them into three different perspectives in order to address all the points of view that need to be taken into consideration. Some of these functionalities are an inherent part of the core products we use in our stack (e.g. cloud data warehouses), but in order to achieve others (like simplicity or standardization), we had to come up with some dedicated components.

This is the most important point of view because it defines the main functional reasons behind the solution. This encapsulates the value proposition of the product.

Every product team should be independent across the whole span of the data project - including data ingestion, discovery, exploration, transformation, deployment and reporting. It shouldn’t need a data or software engineer to understand its data. You can probably recall situations when your gut feeling told you it was time to act, but you lacked the data engineering resources to support your business decision, because the data was stored in sophisticated structures and required technical skills to make sense of it. As a result, you missed a business opportunity. It’d be great if a data platform could allow all SQL-savvy analysts to act independently, wouldn’t it? Think of this solution as Google Maps arriving in the times when you had to hire a guide so as not to get lost in an unknown city. Now you can be your own guide in the data world and make data-driven decisions.

This comes down to an easy-to-use user interface, provided by a web-based workbench app for communication with the platform, and low-code, SQL-based data transformations for defining business logic, so that it can be easily understood by product teams. There is no need to depend on data engineers and their heavy, sophisticated toolset, or to waste a lot of energy on repetitive translations from business to technical language. Just sign in to your workbench - delivering value from your data will be within your reach.

Data quality should be guaranteed as early in the pipeline as possible via integrated and automated data testing. This fosters trust in data and reduces the risk of making business decisions based on faulty information. It is also supported by data monitoring and timely alerting on discrepancies. A data catalog with data lineage helps the user to understand data provenance. Forget about the times when you had to double check every figure in order to make sure that it’s reliable and that the data you base your decisions upon is fresh and consistent. Thanks to the mechanisms that enforce data quality control, you’re informed when your data deviates from expectations. Data lineage, on the other hand, supports the troubleshooting process by helping to diagnose where data faults come from.
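As a rough illustration of the kind of automated checks described above, the sketch below runs three common data tests (not-null, uniqueness and freshness) over a small in-memory table. The column names `order_id` and `loaded_at`, the helper names and the 30-minute threshold are all assumptions made for this example and are not part of the platform; in a dbt-style stack such tests are usually declared alongside the SQL models rather than hand-written.

```python
from datetime import datetime, timedelta, timezone


def check_not_null(rows, column):
    """Return the rows where `column` is missing."""
    return [r for r in rows if r.get(column) is None]


def check_unique(rows, column):
    """Return the non-null values of `column` that occur more than once."""
    seen, duplicates = set(), set()
    for r in rows:
        value = r.get(column)
        if value is None:
            continue  # nulls are reported by check_not_null instead
        if value in seen:
            duplicates.add(value)
        seen.add(value)
    return sorted(duplicates)


def check_fresh(rows, column, max_age, now):
    """Return True if the newest timestamp in `column` is at most `max_age` old."""
    timestamps = [r[column] for r in rows if r.get(column) is not None]
    return bool(timestamps) and now - max(timestamps) <= max_age


def run_checks(rows, now, max_age=timedelta(minutes=30)):
    """Run all checks and return a list of human-readable failures."""
    failures = []
    if check_not_null(rows, "order_id"):
        failures.append("order_id contains nulls")
    duplicates = check_unique(rows, "order_id")
    if duplicates:
        failures.append(f"order_id has duplicates: {duplicates}")
    if not check_fresh(rows, "loaded_at", max_age, now):
        failures.append("loaded_at is stale")
    return failures


# Hypothetical sample data with a fixed "now" so the result is reproducible.
now = datetime(2023, 1, 1, 12, 0, tzinfo=timezone.utc)
rows = [
    {"order_id": 1, "loaded_at": now - timedelta(hours=1)},
    {"order_id": 1, "loaded_at": now - timedelta(hours=2)},
    {"order_id": None, "loaded_at": now - timedelta(hours=3)},
]
failures = run_checks(rows, now)
for failure in failures:
    print("ALERT:", failure)
```

In a real pipeline the list of failures would feed the monitoring and alerting layer instead of being printed.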

A data catalog is a self-service single source of truth for data and its associated metadata, and an incentive for collaboration and good data management practices. It was definitely frustrating when the definitions of your data assets were spread all over your organization, incomplete or outdated. People often had to look for support on Slack or other communication channels. Now, with the emergence of modern data catalogs, you can get a “Google search” for your data, with useful context and other metadata insights on top.

Keeping everything intact and ready for scaling includes templating similar projects in standardized structures, following market best practices for project structure and using a well-thought-out naming convention. Having one framework for data project templates helps to organize the data assets in your company. This addresses the common problem of the long and steep learning curve that a new person faces when being onboarded to the team, and prevents having to reinvent the wheel every time a new data product is about to be created. This also makes time-to-market significantly shorter.
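To make the idea of a project template more concrete, here is a minimal sketch of a scaffolding helper that creates a standardized directory layout and enforces a naming convention. The layout, the snake_case rule and the function name `scaffold_project` are hypothetical choices for illustration only; they do not describe the platform's actual template.

```python
from __future__ import annotations

import re
from pathlib import Path

# Assumed naming convention: lowercase snake_case, starting with a letter.
NAME_PATTERN = re.compile(r"^[a-z][a-z0-9]*(_[a-z0-9]+)*$")

# Hypothetical standard layout shared by every new data project.
TEMPLATE_FILES = {
    "README.md": "# {project}\n\nOwner: {owner}\n",
    "models/staging/.gitkeep": "",
    "models/marts/.gitkeep": "",
    "tests/.gitkeep": "",
}


def scaffold_project(root: Path, project: str, owner: str) -> list[Path]:
    """Create the standard layout for `project` under `root`."""
    if not NAME_PATTERN.match(project):
        raise ValueError(f"project name {project!r} must be snake_case")
    created = []
    for relative, content in TEMPLATE_FILES.items():
        path = root / project / relative
        path.parent.mkdir(parents=True, exist_ok=True)
        path.write_text(content.format(project=project, owner=owner))
        created.append(path)
    return created
```

Calling `scaffold_project(Path("."), "sales_orders", "analytics")` would create a `sales_orders` directory with the same structure as every other project, while a name such as `Sales-Orders` is rejected before anything is written.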

Finally, all the most crucial data architecture decisions and assumptions are gathered in one place.

The solution is deployable in any environment (local, dev or production), on any popular public cloud (GCP, AWS, Azure) and with any storage facility, while the analyst workflow stays the same in all of those configurations. This highly adaptable and configurable framework is achieved mostly thanks to the consistent abstraction and logical architecture of the whole solution. Unlike most proprietary enterprise platforms, the majority of the components are interchangeable and extensible thanks to a strong open source focus.

Consistent (defined at the source) and easily maintainable data & metadata access control policies are common for all the data clients in the stack.

Support for organizations with data teams that would like to incorporate all the data mesh principles (such as data as a product, domain oriented data ownership & architecture, self-serve data infrastructure as a platform and federated decentralized data governance). This readiness is granted automatically when all previously mentioned features in the platform are provided.

This section covers all the assumptions on what it takes to provision and maintain the underlying infrastructure and core components in order to have a good understanding of the associated complexity and costs.

No lengthy experimentation and development. The stack is provisioned with Infrastructure as Code (IaC), which is also used for all the necessary upgrades. The setup also addresses scalability issues with the support of native cloud configurations.

Pipeline automation via CI/CD, with all the engineering best practices in place, hides deployment complexity from the analytics engineers and other users.

No up-front payments or costly licensing fees: you only pay for what you use.

Most of the components are interchangeable, hence there is negligible risk of getting locked in with a certain solution.

Unlocking the potential of data is something that we’ve always been passionate about. We strongly believe that a well-designed Modern Data Platform is a powerful weapon for product teams to make informed, data-driven decisions. We put our almost decade-long data industry expertise and experience, together with our client stories, into one pot and seasoned it with our passion for delivering value. We already know that it tasted really good to some of our clients (subscribe to our newsletter to stay tuned). If you want to learn more about our ingredients and how we serve our data meal to our customers, check out ‘GetInData Modern Data Platform - features & tools’! And if you are interested in the Modern Data Platform for your company, sign up for a full live demo here.
