In the dynamic world of e-commerce, providing exceptional customer service is no longer an option: it's a necessity. The rise of online shopping has intensified the demand for personalized shopping experiences and round-the-clock support. This is where chatbots, powered by Large Language Models (LLMs), step in. In this blog post, we'll explore how to create an efficient e-commerce shopping assistant using LLMs, examine why such chatbots are essential and delve into the intricacies of their development, all while shedding light on the capabilities and challenges of these powerful language models.

With the rapid growth of e-commerce, the need for customer shopping assistants has become more important than ever. Customers expect personalized experiences and recommendations while shopping online, and companies need to keep up with this demand to stay competitive.

What's more, chatbots provide 24/7 availability and reduce the workload of your staff. Round-the-clock availability means customers can get help outside business hours. By handling routine, repetitive tasks such as answering frequently asked questions, chatbots also free up employees to focus on more challenging work.

Overall, implementing a chatbot can help e-commerce stores provide better customer service, streamline operations and increase revenue.

Recent advances in language modeling, particularly the emergence of large language models, have opened up brand new possibilities in many different fields. Chatbots that can understand natural language and respond to customer questions in a more human-like manner are reshaping the landscape of online shopping, providing tailored product recommendations and assisting customers throughout their purchasing journey.

This ability has made LLMs useful in developing conversational interfaces that can mimic human-like conversation. By leveraging language models, customer shopping assistants can have natural and seamless conversations with customers, which can lead to a better shopping experience.

For example, an e-commerce store could use an LLM-powered chatbot to help customers find products by asking questions about their preferences or suggesting similar items. The chatbot could also answer questions about product details, pricing and shipping information. LLMs can also serve as a natural language summarization tool built on top of a recommendation system, which can enhance customer satisfaction and drive sales.
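To make the summarization idea concrete, here is a minimal sketch that turns a recommender's output into a prompt for the LLM. The `Recommendation` fields and the prompt wording are assumptions for illustration, not a fixed schema, and the actual LLM call is left out.

```python
from typing import TypedDict


class Recommendation(TypedDict):
    # One item returned by a (hypothetical) recommender system
    product_id: str
    name: str
    price: float


def build_summary_prompt(customer_query: str, recs: list[Recommendation]) -> str:
    """Turn raw recommender output into a prompt asking an LLM to explain it."""
    # One line per item, so the model sees clean, structured data
    lines = [f"- {r['name']} (id: {r['product_id']}, price: {r['price']:.2f})" for r in recs]
    return (
        "You are a shopping assistant. The customer asked:\n"
        f"{customer_query}\n\n"
        "Our recommender system returned these products:\n"
        + "\n".join(lines)
        + "\n\nIn at most 3 sentences, explain why these products fit the request. "
        "Only mention the products listed above."
    )


prompt = build_summary_prompt(
    "I need running shoes for trail races",
    [{"product_id": "101", "name": "Trail Runner X", "price": 89.9}],
)
print(prompt)
```

Restricting the model to the listed products keeps the summary grounded in what the recommender actually returned.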

Overall, LLMs have made it possible to build more sophisticated and intelligent customer shopping assistants that can provide a more natural and personalized experience for customers.

When building a customer shopping assistant with LLMs, there are several key considerations that must be taken into account, including the potential challenges and limitations of using LLMs.

One of the main challenges of using LLMs is that they can hallucinate, i.e. produce responses that sound plausible but are factually incorrect or not grounded in any source. For example, GPT-3.5 may still generate a confident answer when asked about events that occurred after its training data cutoff in September 2021, even though it has no access to up-to-date information. This can lead to a frustrating user experience.

Another consideration is the stochasticity of LLMs. LLMs have shown great potential in generating human-like text, but their outputs are sampled, so the same input does not necessarily yield the same output. This makes results hard to reproduce and raises concerns about the reliability, trustworthiness and dependability of LLM-based applications.
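To see where this comes from, here is a toy sketch of temperature sampling, the decoding step most LLM APIs expose through a `temperature` parameter. The tokens and scores are made up for illustration; a real model scores its whole vocabulary at every step. With a temperature above zero, the next token is drawn at random, so repeated runs can differ. A temperature of zero reduces to greedy decoding, which is deterministic in this toy, although hosted APIs may still show small variations in practice.

```python
import math
import random


def softmax_with_temperature(logits: list[float], temperature: float) -> list[float]:
    """Convert raw next-token scores into probabilities; lower temperature sharpens them."""
    scaled = [x / temperature for x in logits]
    m = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(x - m) for x in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def sample_next_token(tokens: list[str], logits: list[float], temperature: float, rng: random.Random) -> str:
    """Sample one token; temperature 0 falls back to greedy (argmax) decoding."""
    if temperature == 0:
        return tokens[max(range(len(logits)), key=logits.__getitem__)]
    probs = softmax_with_temperature(logits, temperature)
    return rng.choices(tokens, weights=probs, k=1)[0]


tokens = ["great", "good", "fine"]
logits = [2.0, 1.5, 0.5]  # toy scores, for illustration only
rng = random.Random()
print([sample_next_token(tokens, logits, 1.0, rng) for _ in range(5)])  # varies between runs
print([sample_next_token(tokens, logits, 0, rng) for _ in range(5)])    # always "great"
```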

Deployment of machine learning (ML) models, including LLMs, also requires careful consideration. It is important to ensure that the model is deployed in a secure and compliant manner, protecting sensitive customer information and maintaining data privacy. For more on this, I encourage you to read this article on how to run your first private Large Language Model (LLM) on Google Cloud Platform.

Developing LLM-based solutions is significantly different from solving standard ML problems. If you want to use LLMs in your products, it may be best to first conduct a feasibility study on a few handpicked examples to understand whether the project is viable. It's recommended to start with paid APIs from providers such as OpenAI, Google or Cohere to take advantage of their best pre-trained models and well-documented APIs.

Once you have confirmed that your project is feasible, you can try switching to either open-source models or cheaper paid APIs. Running LLMs on infrastructure you own can reduce expenses and help ensure privacy, and your solution will no longer rely on external APIs. Keep in mind, though, that open-source models may require more customization (e.g. fine-tuning) and may not be as powerful as paid solutions.

But how do you know if you are making progress? LLMs are notoriously difficult to evaluate, and it can be hard to tell whether one language model is better than another. Public benchmarks are available, but they may not measure what matters for your specific task: in some cases, state-of-the-art out-of-domain models perform worse than models that have been specifically trained on domain knowledge. Relying solely on public benchmarks can therefore give an inaccurate picture of a model's capabilities, and reliable, task-specific evaluation is essential for real progress. By prioritizing evaluation, developers can better understand the strengths and weaknesses of LLMs and work towards more effective solutions.
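Here is a minimal sketch of such an evaluation, assuming a small set of handpicked, labeled examples from your own domain. `model_fn` stands for whatever calls your model. A hypothetical keyword rule is used here only so that the harness runs end to end. Running two different models through the same harness and comparing their scores is the simplest way to tell whether a change is actually progress.

```python
from typing import Callable


def evaluate(model_fn: Callable[[str], str], examples: list[tuple[str, str]]) -> float:
    """Return the share of examples where the model's normalized answer matches the label."""
    correct = 0
    for prompt, expected in examples:
        answer = model_fn(prompt).strip().upper()  # tolerate case and whitespace differences
        if answer == expected:
            correct += 1
    return correct / len(examples)


# A handful of handpicked, domain-specific examples (illustrative only)
examples = [
    ("Love these headphones!", "POSITIVE"),
    ("Broke after two days.", "NEGATIVE"),
    ("Great value, fast delivery.", "POSITIVE"),
]


def keyword_baseline(review: str) -> str:
    """Stand-in for a real LLM call, used only so the harness can run."""
    return "NEGATIVE" if "broke" in review.lower() else "POSITIVE"


print(evaluate(keyword_baseline, examples))  # 1.0
```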

When putting your solutions into production, it's vital to monitor edge cases and have good guardrails in place to ensure that the LLM performs as intended and avoids inappropriate responses. There are also significant benefits to a human-in-the-loop approach. With human supervision in place, you can check that language models are performing as expected and, where they are not, humans can step in to make the necessary corrections. Humans can also provide feedback that helps improve the system in subsequent iterations. This iterative process, with humans and LLMs working together, can result in more robust and effective solutions.
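As an illustration, here is a minimal rule-based guardrail with a human fallback. It assumes the assistant returns reply text plus an optional product ID, and that you have the set of valid catalog IDs. The specific checks and the hand-off message are hypothetical examples, and a production system would likely add more checks (content moderation, topic filters and so on).

```python
from dataclasses import dataclass


@dataclass
class GuardrailResult:
    ok: bool
    reason: str = ""


def check_reply(reply_text: str, product_id: str, catalog_ids: set[str], max_chars: int = 1000) -> GuardrailResult:
    """Run simple, rule-based checks on an LLM reply before it reaches the customer."""
    if product_id and product_id not in catalog_ids:
        # The model referenced a product we do not sell: likely a hallucination
        return GuardrailResult(False, f"unknown product id {product_id!r}")
    if not reply_text.strip():
        return GuardrailResult(False, "empty reply")
    if len(reply_text) > max_chars:
        return GuardrailResult(False, "reply too long")
    return GuardrailResult(True)


def route(reply_text: str, product_id: str, catalog_ids: set[str], review_queue: list[tuple[str, str]]) -> str:
    """Send safe replies to the customer; queue everything else for a human agent."""
    result = check_reply(reply_text, product_id, catalog_ids)
    if result.ok:
        return reply_text
    review_queue.append((reply_text, result.reason))  # human-in-the-loop review
    return "Let me connect you with one of our team members."


catalog_ids = {"13618", "28666", "13647"}
review_queue: list[tuple[str, str]] = []
ok_reply = route("The RB3479 folds for easy storage.", "28666", catalog_ids, review_queue)
bad_reply = route("Try our RB9999 model!", "99999", catalog_ids, review_queue)
print(ok_reply, bad_reply, review_queue, sep="\n")
```

The queued items double as feedback data: each one records what the model said and why it was blocked.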

Ok, so you've decided to give LLMs a try and wonder how to start making your prompts better. You may have heard of the emerging role of prompt engineer, but how can you improve your prompts in the meantime? Prompting is highly experimental, and sometimes adding a single sentence can completely alter the results.

However, there are many techniques that can help improve LLM responses. The first approach is few-shot prompting, in which you provide a few examples of the task to the model in a prompt. Thanks to that, the language model is able to adapt to the underlying patterns and generalize to new examples better. Let's say you're trying to do sentiment analysis on customer reviews. You might want to give examples like this:

```
What is the sentiment of the following product review? Give your answer as a SINGLE WORD, either "POSITIVE" or "NEGATIVE".

Here are some examples:

Review: Love these headphones! Great sound quality and comfortable to wear.
Sentiment: POSITIVE

Review: Terrible product and customer service, don't waste your money.
Sentiment: NEGATIVE

Review: {{insert_review}}
Sentiment:
```

Few-shot prompting can result in a significant improvement in model performance.
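In code, filling the template and checking the answer might look like the sketch below. It assumes the model's reply arrives as plain text, and `build_prompt` and `parse_sentiment` are hypothetical helper names. The sketch fills the `{{insert_review}}` placeholder with `str.replace` rather than `str.format`, so braces in the template or in the review are never interpreted as format fields. Rejecting anything other than the two allowed labels makes malformed answers easy to detect and retry.

```python
from typing import Optional

FEW_SHOT_TEMPLATE = """What is the sentiment of the following product review? Give your answer as a SINGLE WORD, either "POSITIVE" or "NEGATIVE".

Here are some examples:

Review: Love these headphones! Great sound quality and comfortable to wear.
Sentiment: POSITIVE

Review: Terrible product and customer service, don't waste your money.
Sentiment: NEGATIVE

Review: {{insert_review}}
Sentiment:"""

ALLOWED = {"POSITIVE", "NEGATIVE"}


def build_prompt(review: str) -> str:
    """Insert the customer review into the few-shot template."""
    return FEW_SHOT_TEMPLATE.replace("{{insert_review}}", review.strip())


def parse_sentiment(completion: str) -> Optional[str]:
    """Accept only the two allowed labels; anything else counts as a failed answer."""
    words = completion.split()
    if not words:
        return None
    label = words[0].strip('."').upper()  # tolerate trailing dots, quotes and lowercase
    return label if label in ALLOWED else None


print(build_prompt("Arrived broken."))
print(parse_sentiment(" Positive."))  # POSITIVE
```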

A general rule of thumb is to write clear and specific instructions and ask for structured output, such as `JSON` or `HTML`, which can be easily parsed later. There are also tricks that can help, such as limiting the output (e.g. "Use at most 2 sentences") or asking the model to focus on specific aspects of the task (e.g. "Description is intended for retailers, so should be technical"). If you want to learn more about effective prompting techniques, I highly recommend taking Andrew Ng's course, which provides comprehensive insights and strategies for optimizing instructions when working with large language models.
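Asking for JSON only pays off if the parsing side is defensive, because models occasionally wrap the JSON in extra prose or omit fields. Here is a minimal sketch, assuming the reply should be a single JSON object with some required keys. The key names in the usage example are hypothetical.

```python
import json
from typing import Any, Optional


def parse_json_reply(raw: str, required_keys: set[str]) -> Optional[dict[str, Any]]:
    """Parse a model reply that was asked to be JSON; return None if it is unusable."""
    # Models sometimes add text around the JSON, so keep only the outermost {...} span
    start, end = raw.find("{"), raw.rfind("}")
    if start == -1 or end < start:
        return None
    try:
        data = json.loads(raw[start : end + 1])
    except json.JSONDecodeError:
        return None
    if not isinstance(data, dict) or not required_keys.issubset(data):
        return None  # valid JSON, but not the shape we asked for
    return data


reply = 'Sure! {"description": "Waterproof jacket", "audience": "retailers"} Hope this helps.'
print(parse_json_reply(reply, {"description"}))
```

Returning `None` instead of raising makes it easy to retry the request or fall back to a default answer.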

When it comes to LLM-based product development, it's important to embrace the probabilistic nature of these models. Unlike in traditional software engineering, you no longer have the luxury of knowing exactly what output a given input will produce. It's therefore best to start with LLMs in applications where an occasional wrong answer won't damage your product. Such use cases are a great starting point for exploring the capabilities of LLMs while minimizing the risk to your business or customers, and they help you learn how to leverage these models to their fullest potential.

Now that we have acquired a solid understanding of the basics, it is time to harness this knowledge to construct a powerful chatbot assistant.

In order to ensure accuracy and reliability, it is advisable to equip the chatbot with the ability to access an external database for information, rather than relying solely on its internal knowledge. This is where the ReAct framework comes into play.

The ReAct framework enables large language models (LLMs) to engage with external tools, allowing them to retrieve additional information. This interaction with external tools enhances the chatbot's responses, making them more reliable and factually grounded.

So, how does ReAct work? Let's illustrate this with an example. LLMs are known to struggle with arithmetic, so let's teach the LLM how to use a calculator through the ReAct framework.

```python
react_prompt = """
You run in a loop of Thought, Action, PAUSE, Observation.
At the end of the loop, you output an Answer.
Use Thought to describe your thoughts about the question you have been asked. Use Action to run one of the available actions - then return PAUSE.
Observation will be the result of running those actions.

Your available actions are:

calculate:
e.g. calculate: 4 * 7 / 3 - runs a calculation and returns the number

Example session:

Question: What is 5 * 2 / 5 ^ 2
Thought: I should use the calculator for calculation.
Action: calculate: 5 * 2 / 5 ^ 2
PAUSE

Observation: 5 * 2 / 5 ^ 2 = 0.4

Answer: The output of the calculation is 0.4
"""
```

This is a simple demonstration of the ReAct pattern. You can capture an action with a regular expression such as `re.compile(r'^Action: (\w+): (.*)$')` (add the `re.MULTILINE` flag if you search the whole reply rather than individual lines). The Question represents the user's query, while Thought and Action correspond to the internal thinking process of the LLM, and PAUSE marks the point where the model stops so that the action can be run. The Observation is the result of running the requested action. Finally, the Answer is the final response generated by the LLM.
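To show how the pieces fit together, here is a minimal sketch of the loop that would drive a prompt like `react_prompt`. It rests on a few assumptions:

- The LLM is any function that takes a list of role/content messages and returns text. `fake_llm` is a hypothetical scripted stand-in.
- Observations are fed back to the model as user messages.
- `^` is treated as exponentiation, which reproduces the 0.4 from the example session.

The calculator walks the expression's syntax tree instead of calling `eval()`, so model output is never executed as arbitrary Python.

```python
import ast
import operator
import re
from typing import Callable

# MULTILINE makes ^ and $ match at every line of a multi-line LLM reply
ACTION_RE = re.compile(r"^Action: (\w+): (.*)$", re.MULTILINE)

# Arithmetic operators the calculator is allowed to evaluate
_OPS = {
    ast.Add: operator.add,
    ast.Sub: operator.sub,
    ast.Mult: operator.mul,
    ast.Div: operator.truediv,
    ast.Pow: operator.pow,
    ast.USub: operator.neg,
}


def calculate(expression: str) -> float:
    """Evaluate +, -, *, / and ^ (as power, matching the prompt) without calling eval()."""
    def _eval(node: ast.AST) -> float:
        if isinstance(node, ast.Constant) and isinstance(node.value, (int, float)):
            return node.value
        if isinstance(node, ast.BinOp) and type(node.op) in _OPS:
            return _OPS[type(node.op)](_eval(node.left), _eval(node.right))
        if isinstance(node, ast.UnaryOp) and type(node.op) in _OPS:
            return _OPS[type(node.op)](_eval(node.operand))
        raise ValueError(f"unsupported expression: {expression!r}")

    # In Python ^ is bitwise XOR, so translate it to ** before parsing
    return _eval(ast.parse(expression.replace("^", "**"), mode="eval").body)


TOOLS: dict[str, Callable[[str], float]] = {"calculate": calculate}


def react_loop(llm: Callable[[list[dict]], str], question: str, system_prompt: str, max_turns: int = 5) -> str:
    """Alternate LLM calls and tool runs until the model replies without an Action."""
    messages = [
        {"role": "system", "content": system_prompt},
        {"role": "user", "content": f"Question: {question}"},
    ]
    for _ in range(max_turns):
        reply = llm(messages)
        messages.append({"role": "assistant", "content": reply})
        match = ACTION_RE.search(reply)
        if match is None:
            return reply  # no Action requested: treat this reply as the final Answer
        tool, arg = match.groups()
        if tool not in TOOLS:
            observation = f"Unknown action: {tool}"
        else:
            try:
                observation = f"{arg} = {TOOLS[tool](arg)}"
            except (ValueError, SyntaxError, ZeroDivisionError) as exc:
                observation = f"Error: {exc}"
        messages.append({"role": "user", "content": f"Observation: {observation}"})
    return "Sorry, I could not finish this request."


def fake_llm(messages: list[dict]) -> str:
    """Scripted stand-in for a real model: ask for the calculator once, then answer."""
    if not messages[-1]["content"].startswith("Observation"):
        return "Thought: I should use the calculator for calculation.\nAction: calculate: 5 * 2 / 5 ^ 2\nPAUSE"
    return "Answer: The output of the calculation is 0.4"


print(react_loop(fake_llm, "What is 5 * 2 / 5 ^ 2", system_prompt="<react_prompt goes here>"))
```

In a real deployment, `system_prompt` would be `react_prompt`, and `max_turns` caps how many tool calls a single question may trigger.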

By employing this pattern, the chatbot can dynamically engage with various tools, allowing it to expand its capabilities beyond its initial knowledge base.

So, what tools can a chatbot assistant find useful? Consider, for example, a chatbot designed to enhance an online shopping experience. Customers would benefit from personalized recommendations generated based on their shopping history, which we can enable by integrating a recommender system into our chatbot. To help customers discover new items, our chatbot could employ a robust semantic search function to search the internal database for appealing products. Moreover, many purchasing decisions require a nuanced conversation – a single query often isn't enough to settle on the ideal product. This calls for the ability to facilitate multi-turn dialogues to glean more detailed preferences from customers before delivering tailored recommendations!
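For multi-turn dialogue, the model has to see the earlier turns of the conversation. The sketch below assumes your LLM API accepts a list of role/content messages, as common chat-completion APIs do. It keeps the system prompt fixed and retains only the most recent messages. Trimming by message count is the simplest policy; trimming by token count or summarizing older turns are alternatives. `ConversationMemory` is a hypothetical helper, not part of any library.

```python
from collections import deque


class ConversationMemory:
    """Keep the system prompt plus the most recent messages so the context stays bounded."""

    def __init__(self, system_prompt: str, max_messages: int = 20):
        self.system_prompt = system_prompt
        self.history: deque = deque(maxlen=max_messages)  # oldest messages drop off automatically

    def add(self, role: str, content: str) -> None:
        self.history.append({"role": role, "content": content})

    def as_messages(self) -> list[dict[str, str]]:
        """Messages in the role/content shape used by common chat-completion APIs."""
        return [{"role": "system", "content": self.system_prompt}, *self.history]


memory = ConversationMemory("You are a shopping assistant.", max_messages=4)
for i in range(3):
    memory.add("user", f"question {i}")
    memory.add("assistant", f"answer {i}")
msgs = memory.as_messages()
print(msgs)
```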

Here's an excerpt of the prompt fed to the large language model (LLM):

```python
shopping_assistant_prompt = """
The commands to seek information are:

| Command | Arguments | Description |
| --- | --- | --- |
| semantic_search | text | Runs a semantic search query against a database to find particular articles |
| recommendation | text | Runs a personalized recommendation engine, and returns few articles |
| chat | text | Chats with a customer |

Use the `semantic_search` command when you know what you are searching for.
Use the `recommendation` command when you want a random recommendation.
Use the `chat` command to either ask customers about details of what they are interested in, present recommendations to a customer, or just chat with them in general.

Your response will be in JSON and will include a "Thought" + "Action" to retrieve data or recommend a product. When data has been retrieved, it will be included as an "Observation". You will continue generating thoughts and actions until you get to an answer, or conclude that you can't.

Please remember to only write about products that are provided to you.

When you recommend a specific product to a customer, include the Product_id in the JSON answer. If the product id is not known, leave that field empty.

Your JSON response should be formatted as follows: {"thought": Thought, "action": {"command": Command, "text": Text, "id": Product_id}}
"""
```

The actions described here serve distinct purposes:

- The `semantic_search` command is used when the customer already knows what they're looking for.

- The `recommendation` command is perfect for those times when customers want a spontaneous suggestion.

- The `chat` command handles interaction with customers, whether it's asking for more information, presenting product recommendations, or just having a casual conversation.
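The three commands map naturally onto a dispatch table. The sketch below assumes the model returns exactly the JSON format requested in `shopping_assistant_prompt`. The `semantic_search` and `recommendation` functions here are hypothetical placeholders for your own search index and recommender.

```python
import json
from typing import Callable


# Hypothetical tool backends: replace with your real search index and recommender
def semantic_search(text: str) -> str:
    return f"(search results for {text!r})"


def recommendation(text: str) -> str:
    return f"(personalized picks for {text!r})"


SHOP_TOOLS: dict[str, Callable[[str], str]] = {
    "semantic_search": semantic_search,
    "recommendation": recommendation,
}


def handle_llm_reply(raw: str) -> dict:
    """Decide what to do with one JSON reply from the shopping assistant."""
    action = json.loads(raw)["action"]
    command, text = action["command"], action.get("text", "")
    if command == "chat":
        # Show the text to the customer, plus a product card if an id is set
        return {"type": "message", "text": text, "product_id": action.get("id") or None}
    if command in SHOP_TOOLS:
        # Feed the tool output back to the LLM as the next Observation
        return {"type": "observation", "text": f"Observation: {SHOP_TOOLS[command](text)}"}
    return {"type": "observation", "text": f"Observation: unknown command {command!r}"}


search = handle_llm_reply('{"thought": "t", "action": {"command": "semantic_search", "text": "Ray-Ban sunglasses", "id": ""}}')
reply = handle_llm_reply('{"thought": "t", "action": {"command": "chat", "text": "Here you go", "id": "28666"}}')
print(search, reply, sep="\n")
```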

We have used a Markdown table to describe the available actions because large language models tend to work best with well-formatted data. By enforcing a JSON response format, we can easily parse the output and integrate seamlessly with downstream applications, such as cart functionality or product image display, through the product ID returned in the `id` field of the response.
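The same idea applies to data passed back to the model: tool results such as product metadata can be rendered as a Markdown table string before being inserted as an Observation. Here is a minimal sketch. The column names are assumptions about your catalog schema, and the example rows reuse product names and IDs from the conversation in this post.

```python
def to_markdown_table(items: list[dict[str, object]], columns: list[str]) -> str:
    """Render product metadata as a Markdown table string to pass back as an Observation."""
    def cell(value: object) -> str:
        # Pipes and newlines would break the table layout, so neutralize them
        return str(value).replace("|", "\\|").replace("\n", " ")

    header = "| " + " | ".join(columns) + " |"
    separator = "| " + " | ".join("---" for _ in columns) + " |"
    rows = ["| " + " | ".join(cell(item.get(c, "")) for c in columns) + " |" for item in items]
    return "\n".join([header, separator, *rows])


items = [
    {"product_id": 13618, "name": "Ray-Ban RB4147P Flatop Boyfriend Polarized Sunglasses"},
    {"product_id": 28666, "name": "Ray Ban RB3479 Folding Aviator Sunglasses"},
]
table = to_markdown_table(items, ["product_id", "name"])
print(table)
```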

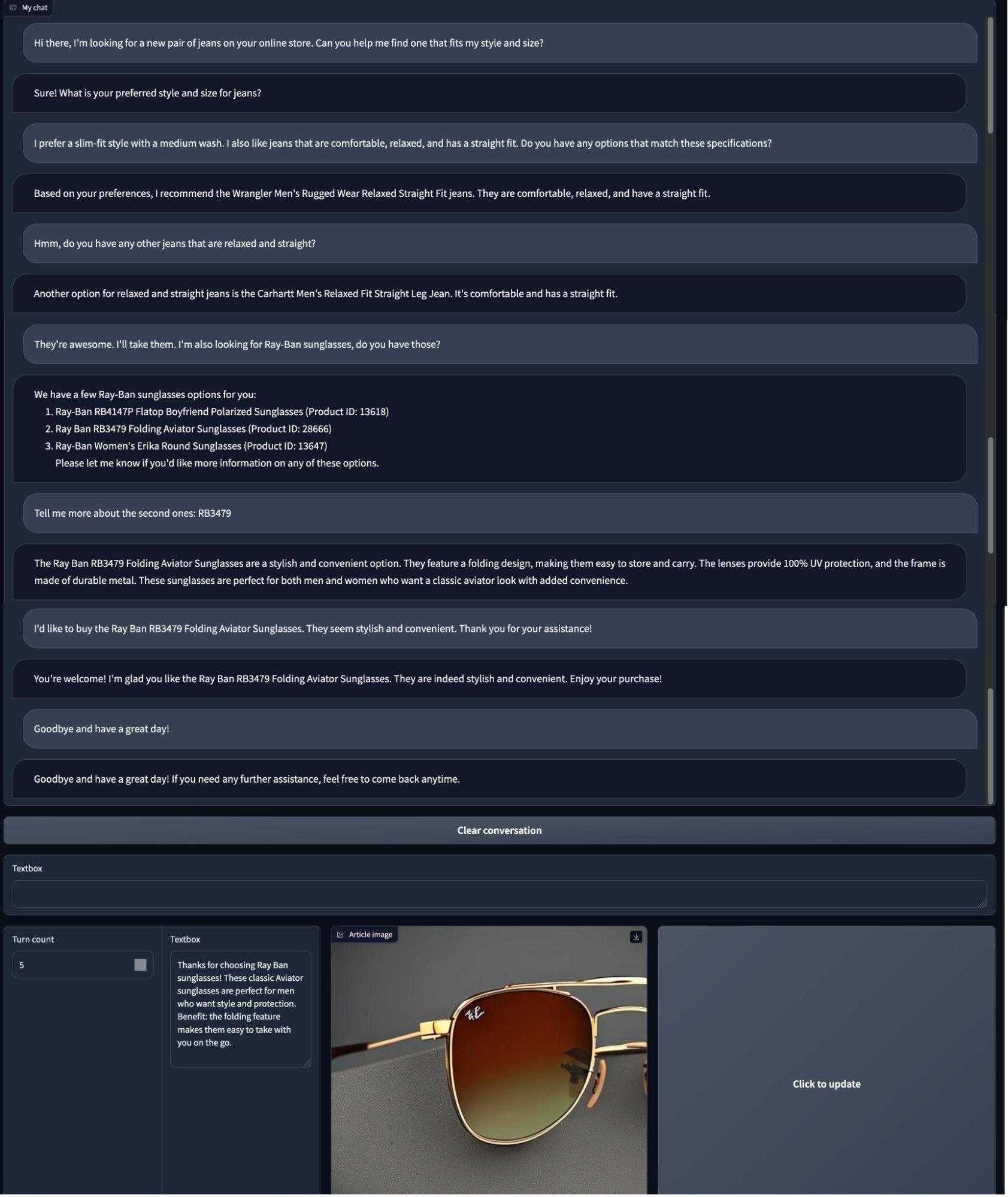

This is how a typical conversation with a customer might look:

Our demo uses a Gradio frontend. The large language model is equipped with a range of tools to gather information about products, which helps it provide accurate and helpful responses. When discussing a specific product, the LLM can generate a JSON containing the product ID, which you can conveniently display on your website along with the product metadata. This integration enhances the customer experience and simplifies the delivery of relevant information.

Here is what an interaction between a customer and the assistant looks like under the hood:

Customer: They're awesome. I'll take them. I'm also looking for Ray-Ban sunglasses, do you have those?

LLM Customer Assistant:

{
  "thought": "I should search the internal database against Ray-Ban sunglasses",
  "action": {
    "command": "semantic_search",
    "text": "Ray-Ban sunglasses",
    "id": ""
  }
}

Observation:

`# relevant item metadata are presented here in a Markdown table as a string`

LLM Customer Assistant:

{
  "thought": "Given items from Observation, I should present the Ray-Ban sunglasses options",
  "action": {
    "command": "chat",
    "text": "We have a few Ray-Ban sunglasses options for you:
1. Ray-Ban RB4147P Flatop Boyfriend Polarized Sunglasses (Product ID: 13618)
2. Ray Ban RB3479 Folding Aviator Sunglasses (Product ID: 28666)
3. Ray-Ban Women's Erika Round Sunglasses (Product ID: 13647)
Please let me know if you'd like more information on any of these options.",
    "id": ""
  }
}

Customer: Tell me more about the second ones: RB3479

LLM Customer Assistant:

{
  "thought": "I should provide more information about Ray Ban RB3479 Folding Aviator Sunglasses",
  "action": {
    "command": "chat",
    "text": "The Ray Ban RB3479 Folding Aviator Sunglasses are a stylish and convenient option. They feature a folding design, making them easy to store and carry. The lenses provide 100% UV protection, and the frame is made of durable metal. These sunglasses are perfect for both men and women who want a classic aviator look with added convenience.",
    "id": "28666"
  }
}

Customer: I'd like to buy the Ray Ban RB3479 Folding Aviator Sunglasses. They seem stylish and convenient. Thank you for your assistance!

As it stands, a few barriers still prevent these chatbots from being fully integrated into production environments, chief among them latency, reliability and prompt injection. The `ReAct` pattern has significantly improved reliability but hasn't wholly eliminated the issue. As the chatbot's functionality expands (more tools), a more powerful model is needed to execute these complex tasks, and this in turn increases latency further.

To overcome these hurdles, we're exploring fine-tuning smaller models on chat interactions. This looks like a promising direction, and we're excited to delve deeper into fine-tuning open-source large language models in our upcoming Advanced Analytics Labs initiatives. So stay tuned, and subscribe to our newsletter!
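A first step in that direction is turning logged conversations into training data. The sketch below assumes a chat-style JSONL format with one `{"messages": [...]}` object per line, a convention used by several fine-tuning tools. Check the exact schema your chosen tool or model expects. In practice, the system prompt would be the full `shopping_assistant_prompt`, and the assistant turns would be the model's JSON replies.

```python
import json


def conversation_to_record(system_prompt: str, turns: list[tuple[str, str]]) -> str:
    """Serialize one logged conversation as a single JSON line of role/content messages."""
    messages = [{"role": "system", "content": system_prompt}]
    for role, content in turns:
        if role not in {"user", "assistant"}:
            raise ValueError(f"unexpected role: {role}")
        messages.append({"role": role, "content": content})
    return json.dumps({"messages": messages}, ensure_ascii=False)


logged_turns = [
    ("user", "I'm also looking for Ray-Ban sunglasses, do you have those?"),
    ("assistant", json.dumps({
        "thought": "I should search the internal database against Ray-Ban sunglasses",
        "action": {"command": "semantic_search", "text": "Ray-Ban sunglasses", "id": ""},
    })),
]
line = conversation_to_record("You are a shopping assistant.", logged_turns)
print(line)  # append one such line per conversation to a .jsonl file
```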
