
In this blog post, you will learn what Medallion Architecture is, the characteristics of each layer of this pattern and how it differs from the classic data warehouse layers.

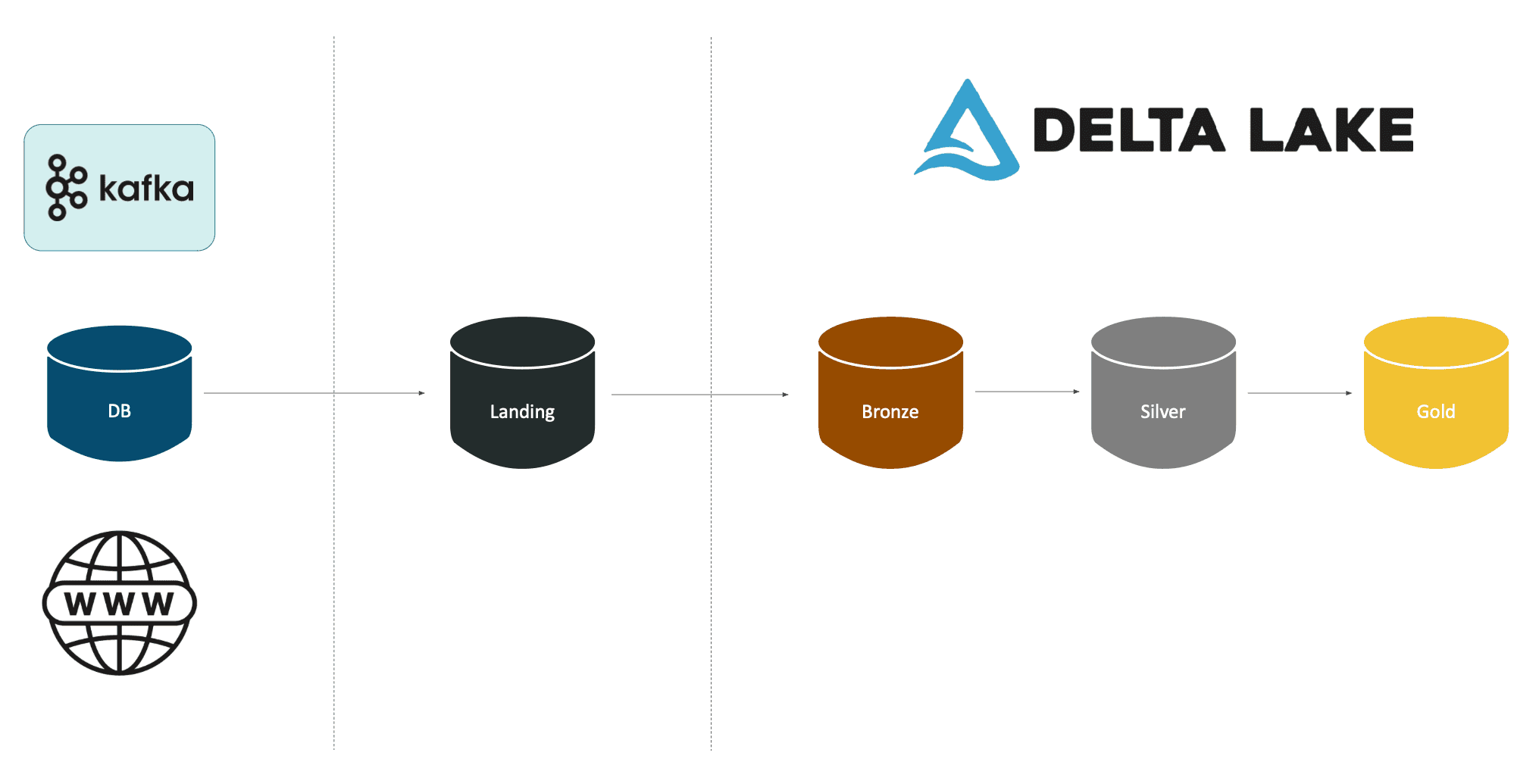

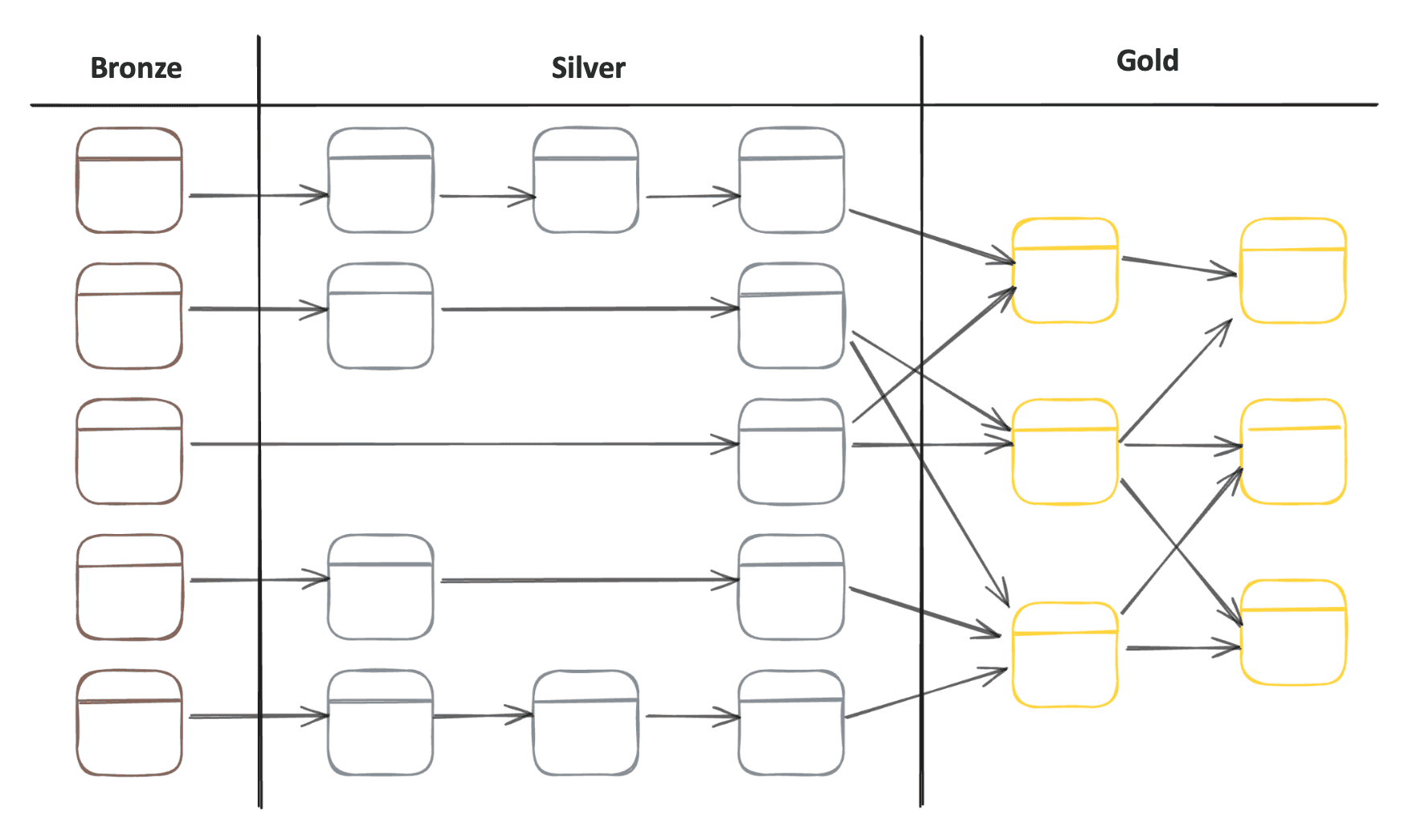

Medallion Architecture (also known as multi-hop architecture) is a data design pattern that has gained popularity over the last couple of years. Its main focus is to logically organize data in a lakehouse so that data quality improves gradually as the data progresses through the layers, while taking advantage of the benefits a lakehouse offers.

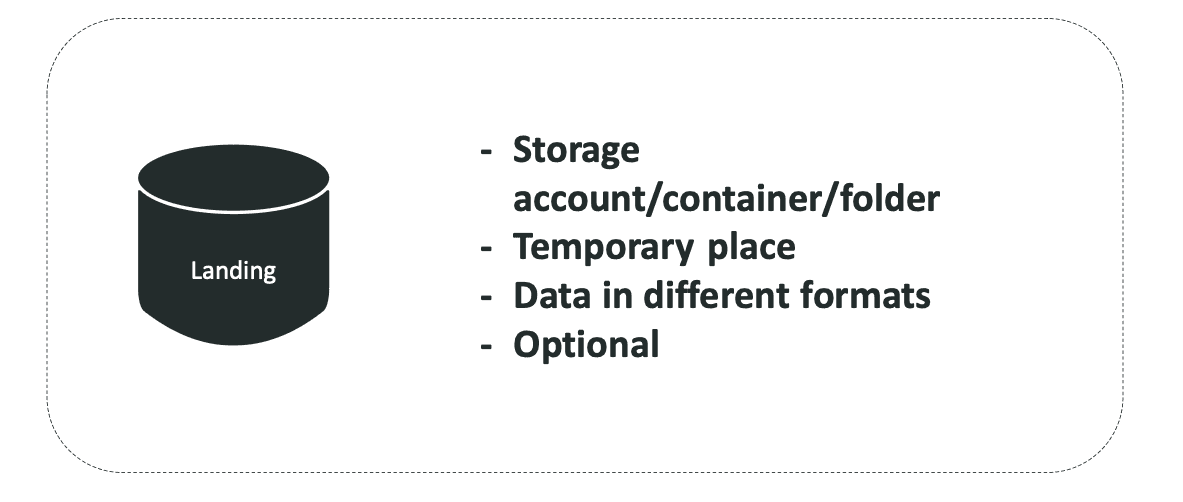

The first layer, often called the landing zone, is optional because it is not officially part of Medallion Architecture, but it is frequently necessary and often overlooked when sketching the architecture. It is simply a storage account, bucket, container or folder that temporarily stores raw files extracted from the source systems in their various formats.

It is optional because data extraction tools can also push the data directly to bronze (e.g. streaming data).

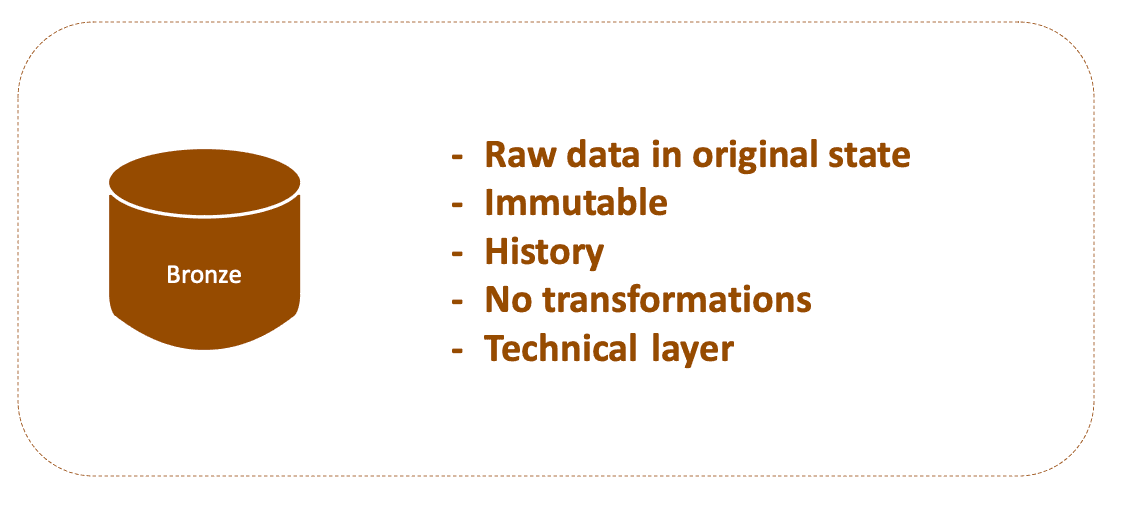

The bronze layer, also known as the raw layer, is where we store the data in its original state (both batch and streaming). This means the data should be immutable (however, if the data is fully reloaded every day, some deletion might occur).

Its main purpose is to keep the complete history of the data in one place, making it possible to reprocess the data if needed without rereading it from the source system.

No data transformations are allowed here; the only permitted change is adding metadata columns (such as input file name, ingestion date, etc.).
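To make the "no transformations, only metadata" rule concrete, here is a minimal plain-Python sketch of an append-only bronze load. It is an illustration under stated assumptions, not a lakehouse implementation: a real pipeline would typically write to a Spark/Delta (or similar) table, whereas here the bronze table is just a Python list. The function name `ingest_to_bronze` and the metadata column names `_input_file_name` and `_ingestion_date` are hypothetical.

```python
from __future__ import annotations

from datetime import date
from typing import Any


def ingest_to_bronze(
    raw_records: list[dict[str, Any]],
    input_file_name: str,
    ingestion_date: date,
    bronze_table: list[dict[str, Any]],
) -> None:
    """Append raw records to a (hypothetical, in-memory) bronze table.

    Source values are stored exactly as received; the only additions are
    metadata columns, prefixed with "_" to keep them apart from source columns.
    """
    for record in raw_records:
        row = dict(record)  # copy so the caller's record is not mutated
        row["_input_file_name"] = input_file_name
        row["_ingestion_date"] = ingestion_date
        bronze_table.append(row)  # append-only: earlier rows are never updated


# Usage: two daily loads of the same order are both kept as history.
bronze: list[dict[str, Any]] = []
ingest_to_bronze([{"order_id": "1", "amount": "10.50"}], "orders_2024-01-01.csv", date(2024, 1, 1), bronze)
ingest_to_bronze([{"order_id": "1", "amount": "12.00"}], "orders_2024-01-02.csv", date(2024, 1, 2), bronze)
```

Note that `amount` stays a string: casting it to a number would already be a transformation, which belongs in the silver layer. Because every load is kept, the data can later be reprocessed without going back to the source system.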

This layer serves mostly as a technical layer. However, I've encountered situations where business users benefit from the history of the data, especially when they can compare the state of the source tables at different points in time.
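As an illustration of that kind of comparison, the following plain-Python sketch diffs two daily states of a source table stored in bronze. It assumes (hypothetically) that the source is fully reloaded every day, so all rows with a given `_ingestion_date` form a complete snapshot; the function names and the metadata column names are illustrative, not part of any library.

```python
from __future__ import annotations

from datetime import date
from typing import Any

# Hypothetical metadata columns added during bronze ingestion.
METADATA_COLUMNS = {"_input_file_name", "_ingestion_date"}


def strip_metadata(row: dict[str, Any]) -> dict[str, Any]:
    """Keep only the source columns of a bronze row."""
    return {k: v for k, v in row.items() if k not in METADATA_COLUMNS}


def snapshot(bronze_table: list[dict[str, Any]], as_of: date, key: str) -> dict[Any, dict[str, Any]]:
    """State of the source table as loaded on `as_of` (assumes one full load per day)."""
    return {row[key]: strip_metadata(row) for row in bronze_table if row["_ingestion_date"] == as_of}


def compare_snapshots(
    bronze_table: list[dict[str, Any]], day_a: date, day_b: date, key: str
) -> dict[str, list[Any]]:
    """List keys added, removed or changed between two daily snapshots."""
    before = snapshot(bronze_table, day_a, key)
    after = snapshot(bronze_table, day_b, key)
    return {
        "added": sorted(after.keys() - before.keys()),
        "removed": sorted(before.keys() - after.keys()),
        # Only source columns are compared, so a different file name alone is not a change.
        "changed": sorted(k for k in before.keys() & after.keys() if before[k] != after[k]),
    }
```

If the source were loaded incrementally instead, a snapshot would have to be rebuilt from all loads up to the chosen date, which is a different query.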

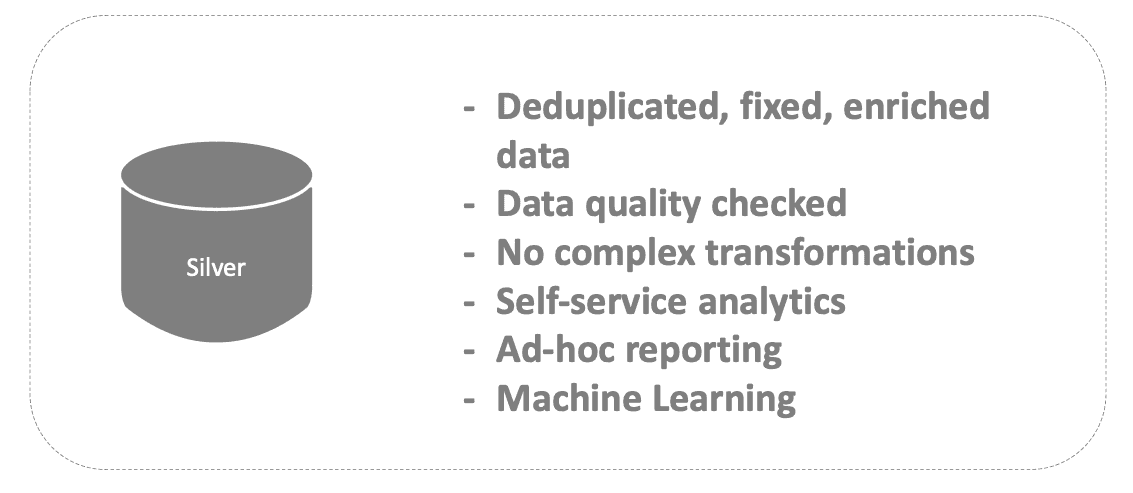

Before the data is ingested into the silver layer, it should be deduplicated, corrected and converted to the correct format. This is also the place where data quality rules can be applied.

In simple words, the silver layer contains cleansed and conformed data that is ready to be consumed by operational, analytical and machine learning workloads.
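A minimal plain-Python sketch of a bronze-to-silver step follows, showing the three operations mentioned above: casting to the correct type, applying data quality rules and deduplicating. The schema (`order_id`, `amount`, `_ingestion_date`), the specific quality rules and the "latest ingestion wins" deduplication policy are all assumptions chosen for illustration; a production pipeline would usually express the same logic in Spark SQL or a DataFrame API.

```python
from __future__ import annotations

from datetime import date
from decimal import Decimal, InvalidOperation
from typing import Any


def parse_amount(value: Any) -> Decimal | None:
    """Cast a raw amount to Decimal; return None if it is unparseable or not finite."""
    try:
        amount = Decimal(str(value))
    except InvalidOperation:
        return None
    return amount if amount.is_finite() else None


def bronze_to_silver(
    bronze_rows: list[dict[str, Any]],
) -> tuple[list[dict[str, Any]], list[dict[str, Any]]]:
    """Return (silver_rows, rejected_rows) for a hypothetical orders table."""
    latest: dict[str, dict[str, Any]] = {}
    rejected: list[dict[str, Any]] = []
    for row in bronze_rows:
        order_id = row.get("order_id")
        amount = parse_amount(row.get("amount"))
        # Example quality rules: a key must be present and the amount must be non-negative.
        if not order_id or amount is None or amount < 0:
            rejected.append(row)
            continue
        clean = {"order_id": order_id, "amount": amount, "_ingestion_date": row["_ingestion_date"]}
        current = latest.get(order_id)
        # Deduplicate by key: a later (or equal) ingestion date replaces the earlier version.
        if current is None or clean["_ingestion_date"] >= current["_ingestion_date"]:
            latest[order_id] = clean
    return list(latest.values()), rejected
```

Rejected rows are returned rather than silently dropped, so they can be inspected or routed to a quarantine table; whether to do that is a design choice, not part of the pattern itself.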

A few points to keep in mind when thinking about the silver layer:

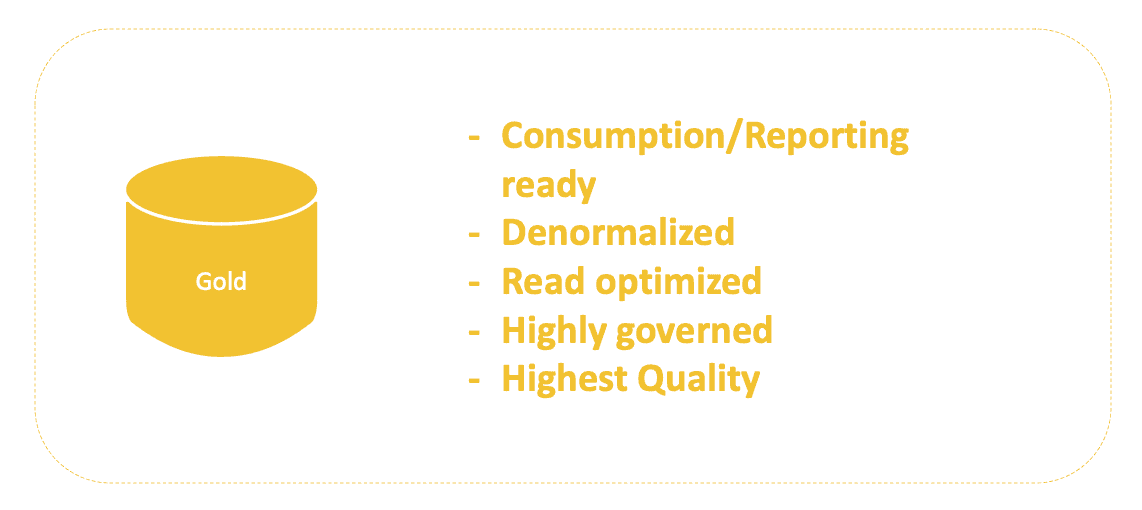

In the gold layer, the data should be consumption-ready for specific business and analytics use cases.

It is prepared for reporting and uses denormalized, read-optimized models, and it has the highest level of quality, usability, governance and documentation.
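The sketch below illustrates what "denormalized and read-optimized" can mean in practice: silver orders are joined with silver customers once, and the result is pre-aggregated into a small report-ready table, so a dashboard does not have to repeat the join. The table shapes (`customer_id`, `country`, `amount`) and the function name are hypothetical, and plain Python stands in for the SQL or Spark job that would normally build such a table.

```python
from __future__ import annotations

from collections import defaultdict
from decimal import Decimal
from typing import Any


def build_gold_revenue_by_country(
    orders: list[dict[str, Any]], customers: list[dict[str, Any]]
) -> list[dict[str, Any]]:
    """Join orders to customers and pre-aggregate revenue per country."""
    country_by_customer = {c["customer_id"]: c["country"] for c in customers}
    totals: defaultdict[str, Decimal] = defaultdict(Decimal)  # Decimal() == Decimal("0")
    counts: defaultdict[str, int] = defaultdict(int)
    for order in orders:
        # Orders whose customer is unknown are kept under an explicit placeholder.
        country = country_by_customer.get(order["customer_id"], "UNKNOWN")
        totals[country] += order["amount"]
        counts[country] += 1
    # The country name is copied into each row (denormalized), sorted for stable output.
    return [{"country": c, "order_count": counts[c], "revenue": totals[c]} for c in sorted(totals)]
```

Each gold row answers a reporting question directly, which is the trade-off of this layer: some redundancy in exchange for simple, fast reads.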

Now let's take a step back and look at what the classic data warehouse layers looked like. They also had three (sometimes more) layers of data, such as Staging (STG), Operational Data Store (ODS) and Data Warehouse (DWH), each with its own purpose, very similar to Medallion Architecture. Does that ring a bell? To me, Medallion Architecture is simply another iteration of a concept we've already seen in the data world, adapted to modern data warehousing solutions.

While reading all of these dos and don'ts, you might think: "This does not fit my scenario; I have to do it differently, with different naming or more layers." But can you actually do that? Definitely.

All data design patterns were invented to propose some kind of standard way of doing things (without one there would be chaos), but it is impossible to invent something perfect. It is mostly up to you to decide which way to go with your data warehouse design pattern, keeping the benefits and risks in mind and treating Medallion Architecture as a starting point for a modern data warehouse. Most importantly, treat the chosen pattern as an organization-wide set of standards and good practices.
