Multi-tenant architecture, also known as multi-tenancy, is a software architecture in which a single instance of software runs on a server and serves multiple tenants. Isolation between customers is built into the application design, so customers neither share nor see each other's data. For years this has been a common pattern that helps cloud providers reduce infrastructure costs.

In contrast, single-tenancy is an architecture in which one instance of the application and its infrastructure serves a single customer. This provides stronger isolation, but often leaves resources underutilized.

Cloud costs now come mostly from on-demand usage (in BigQuery, most costs are generated by data storage and bytes read), and serverless solutions such as Cloud Run and Cloud Functions do not require provisioning any machines. Tenant architecture designed for on-premise environments should therefore be adapted to the cloud.

In this article I use the words “client” and “tenant” interchangeably.

This article presents high-level architecture design proposals together with the trade-offs they bring.

Suppose you have been asked to design the cloud architecture of an entire startup that specializes in, and sells as its product, firmographic data analysis and forecasting. The first thing you need is data, preferably firmographic data from your clients. However, you are not sure what kind of data will be used, or whether it will be the same across clients. Because of this uncertainty, you need an easy way to analyze the data.

As an experienced data engineer, you know that data has to be organized (and preferably cataloged).

You would also like to be prepared for client churn, even if you are sure that your product will revolutionize the data analysis world and churn will be marginal. For a long time client churn was not even considered a technical issue, but with GDPR, CCPA and other legislation that may follow, a thoughtful data architecture has to support data deletion after churn.

“Zero trust security” and “least-privilege access” are not just buzzwords, and not only mature organizations should follow these principles. You have also been asked to build fine-grained identity and access management (IAM) into the architecture, preferably with a way to grant the analytics team temporary access to the data in the future.

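Summing up, the tenant architecture needs to solve these issues:

- easy data analysis,
- data organization,
- data deletion after client churn,
- fine-grained IAM.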

Luckily for the company, the CTO chose Google Cloud Platform (GCP) as the cloud provider.

Let's now take a look at two possible tenant architectures and consider to what extent they address the above issues.

The first approach that could work well is a “separate BigQuery dataset per client”. This design does not isolate data completely: cross-tenant separation is achieved through proper IAM settings, as all data stays in a single GCP project.

The idea behind it is fairly simple. When a new client registers for the product, a unique identifier is generated (e.g. clever_beaver, sweet_borg) and a new dataset is created in the one GCP project chosen to store tenant data (let's call it “tenants-production”). This can be done with a single command:

```bash
bq --location=eu mk --dataset --label=tenant:clever_beaver --description="clever_beaver tenant dataset" tenants-production:clever_beaver
```
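The registration step can be sketched in a few lines. This is a minimal sketch, assuming Python and hypothetical word lists: it picks a free adjective_noun tenant id, checks that it is a valid BigQuery dataset id (letters, digits and underscores only), and assembles the same `bq mk` invocation as an argument list without running it.

```python
import random
import re

# Hypothetical word lists; a real system would use much larger ones.
ADJECTIVES = ["clever", "sweet", "zen", "brave", "calm"]
NOUNS = ["beaver", "borg", "wing", "otter", "falcon"]

# BigQuery dataset ids may contain only letters, digits and underscores.
DATASET_ID_RE = re.compile(r"^[A-Za-z0-9_]{1,1024}$")


def new_tenant_id(existing: set[str], rng: random.Random) -> str:
    """Pick a random adjective_noun id that is not already taken."""
    free = [f"{a}_{n}" for a in ADJECTIVES for n in NOUNS if f"{a}_{n}" not in existing]
    if not free:
        raise RuntimeError("all adjective_noun combinations are taken")
    return rng.choice(free)


def bq_mk_dataset_argv(tenant_id: str, project: str = "tenants-production",
                       location: str = "eu") -> list[str]:
    """Build (but do not run) the bq command that creates the tenant dataset."""
    if not DATASET_ID_RE.match(tenant_id):
        raise ValueError(f"invalid dataset id: {tenant_id!r}")
    return [
        "bq", f"--location={location}", "mk", "--dataset",
        f"--label=tenant:{tenant_id}",
        # Passed as one argv element, so no shell quoting is needed.
        f"--description={tenant_id} tenant dataset",
        f"{project}:{tenant_id}",
    ]


if __name__ == "__main__":
    rng = random.Random(42)
    tenant = new_tenant_id({"clever_beaver"}, rng)
    # subprocess.run(bq_mk_dataset_argv(tenant), check=True) would execute it.
    print(bq_mk_dataset_argv(tenant))
```

Keeping the command as a list rather than a shell string avoids quoting problems with the description.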

Storing data in BigQuery makes it easy to analyze with standard SQL, geospatial analysis and BigQuery ML, and to present it with BigQuery BI Engine.

Separating tenants by BigQuery dataset does not seem the best way to organize data. Imagine a client that uses tens of sources, each with dozens of individual resources (e.g. Google Ads has resources such as “campaigns”, “users” and “ads”). That results in hundreds of tables in one dataset, not even counting data curation, report and forecast tables.

Data ingestion tools such as Fivetran or Airbyte produce lots of tables, and even when they are set up correctly, name conflicts can occur.
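To see how such a conflict can arise, consider a sketch that assumes a hypothetical naming scheme: because everything lands in one dataset, the source must be encoded in the table name, here by joining source and resource with an underscore. Two different (source, resource) pairs can then map to the same table name; the pair `("google", "ads_campaigns")` is invented for illustration.

```python
from collections import defaultdict


def flat_table_name(source: str, resource: str) -> str:
    # Hypothetical scheme: one shared dataset, so the source is
    # prefixed onto the table name.
    return f"{source}_{resource}"


def find_collisions(pairs: list[tuple[str, str]]) -> dict[str, list[tuple[str, str]]]:
    """Group (source, resource) pairs by table name; keep names used more than once."""
    by_name: dict[str, list[tuple[str, str]]] = defaultdict(list)
    for source, resource in pairs:
        by_name[flat_table_name(source, resource)].append((source, resource))
    return {name: group for name, group in by_name.items() if len(group) > 1}


pairs = [
    ("google_ads", "campaigns"),
    ("google", "ads_campaigns"),  # hypothetical second source
    ("paypal", "transactions"),
]
print(find_collisions(pairs))
# {'google_ads_campaigns': [('google_ads', 'campaigns'), ('google', 'ads_campaigns')]}
```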

Is it easy to delete a client's data after churn? At first glance the answer seems positive: just delete the dataset and that's all.

BUT! A common approach to loading data into BigQuery goes through Google Cloud Storage (GCS), and if something goes wrong in the loading process, files in GCS may never be cleaned up.

OK! Then simply set a lifecycle policy on the GCS buckets to delete files after a given time. BUT! The platform also has to store client secrets for the systems the data is collected from.

OK! The mitigation here is to use Google Secret Manager and, in case of client churn, remove all secrets that belong to the tenant…

The point here is not to list every place where data can be missed during deletion, but to highlight that it is hard to remember every part of a system that stores client data, and to delete that data completely without accidentally deleting another client's data.
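The problem can be sketched with a toy inventory. This assumes, hypothetically, that every tenant-owned resource carries a `tenant` label like the one set by the `bq mk` command above, and that cleanup selects resources by that label. Anything created without the label, such as a file left behind by a failed load, is silently missed. The model is simplified: datasets and secrets support labels, while for GCS objects the equivalent would be custom metadata.

```python
from dataclasses import dataclass, field


@dataclass
class Resource:
    service: str  # "bigquery", "gcs" or "secretmanager"
    name: str
    # Simplification: stands in for labels (datasets, secrets)
    # or custom metadata (GCS objects).
    labels: dict[str, str] = field(default_factory=dict)


def deletion_plan(inventory: list[Resource], tenant_id: str) -> list[Resource]:
    """Everything explicitly labelled as belonging to the tenant."""
    return [r for r in inventory if r.labels.get("tenant") == tenant_id]


def unlabelled(inventory: list[Resource]) -> list[Resource]:
    """Resources that no label-based cleanup will ever find."""
    return [r for r in inventory if "tenant" not in r.labels]


# Hypothetical resource names.
inventory = [
    Resource("bigquery", "tenants-production:clever_beaver", {"tenant": "clever_beaver"}),
    Resource("secretmanager", "clever_beaver-paypal", {"tenant": "clever_beaver"}),
    Resource("gcs", "gs://landing/clever_beaver/load-0001.csv"),  # left by a failed load
    Resource("bigquery", "tenants-production:sweet_borg", {"tenant": "sweet_borg"}),
]

for r in deletion_plan(inventory, "clever_beaver"):
    print("delete:", r.service, r.name)
for r in unlabelled(inventory):
    print("review manually:", r.service, r.name)
```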

BigQuery provides IAM at the dataset and table level. As the business grows and the number of tables and datasets reaches thousands, IAM management becomes very problematic. A proper infrastructure-as-code (IaC) setup solves this, but requires additional effort (you can read about IaC in our other article).

What's more, tools like Fivetran or Airbyte need read/write access to the whole dataset. This is problematic, as in theory raw data could then be written to curated or user-facing tables.
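A back-of-the-envelope sketch, with hypothetical tenant counts, table counts and group addresses, shows why fine-grained IAM in a shared project grows quickly: every group needs a binding on every protected unit. Dataset-level grants keep the count low, but, as noted above, they give an ingestion tool's `roles/bigquery.dataEditor` write access to every table in the dataset, curated ones included.

```python
from itertools import product


def dataset_bindings(tenants: list[str], grants: dict[str, str]) -> list[tuple[str, str, str]]:
    """One (dataset, role, member) binding per tenant dataset and group."""
    return [
        (f"tenants-production.{tenant}", role, f"group:{group}")
        for tenant in tenants
        for group, role in grants.items()
    ]


def table_bindings(tenants: list[str], tables: list[str],
                   grants: dict[str, str]) -> list[tuple[str, str, str]]:
    """One (table, role, member) binding per tenant table and group."""
    return [
        (f"tenants-production.{tenant}.{table}", role, f"group:{group}")
        for tenant, table, (group, role) in product(tenants, tables, grants.items())
    ]


# Hypothetical scale: 300 tenants with 200 tables each, 3 groups.
tenants = [f"tenant_{i}" for i in range(300)]
tables = [f"table_{j}" for j in range(200)]
grants = {
    "ingestion@example.com": "roles/bigquery.dataEditor",
    "analytics@example.com": "roles/bigquery.dataViewer",
    "engineering@example.com": "roles/bigquery.dataOwner",
}
print(len(dataset_bindings(tenants, grants)))        # 900
print(len(table_bindings(tenants, tables, grants)))  # 180000
```

Generating these lists from code, as IaC would, is manageable; maintaining them by hand is not.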

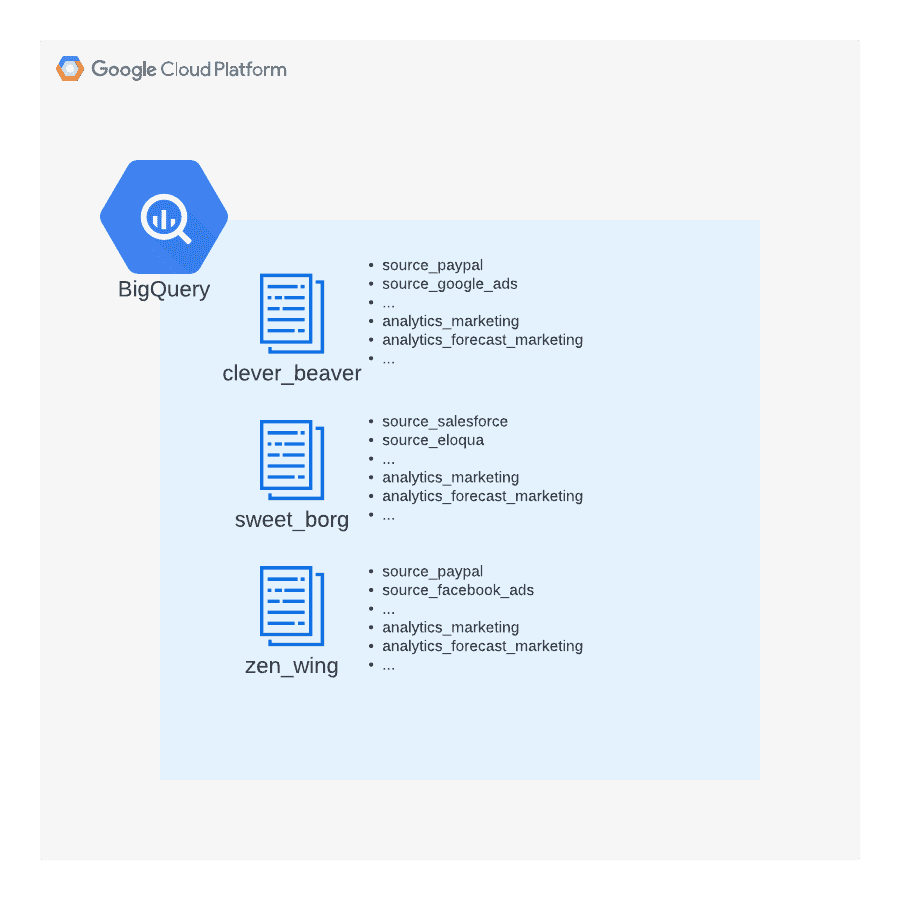

Another approach, and one we strongly recommend, is separating tenants per GCP project. In this approach a separate GCP project has to be created for each client. The image below shows an example of what the infrastructure of three tenants (clever_beaver, sweet_borg, zen_wing) could look like.

As you can see, the clever_beaver tenant has the unique_prefix_clever_beaver GCP project with four datasets: source_paypal, source_google_ads, analytics and analytics_forecast. Any of these datasets can hold an unlimited number of tables, and each GCP project can hold an unlimited number of datasets.

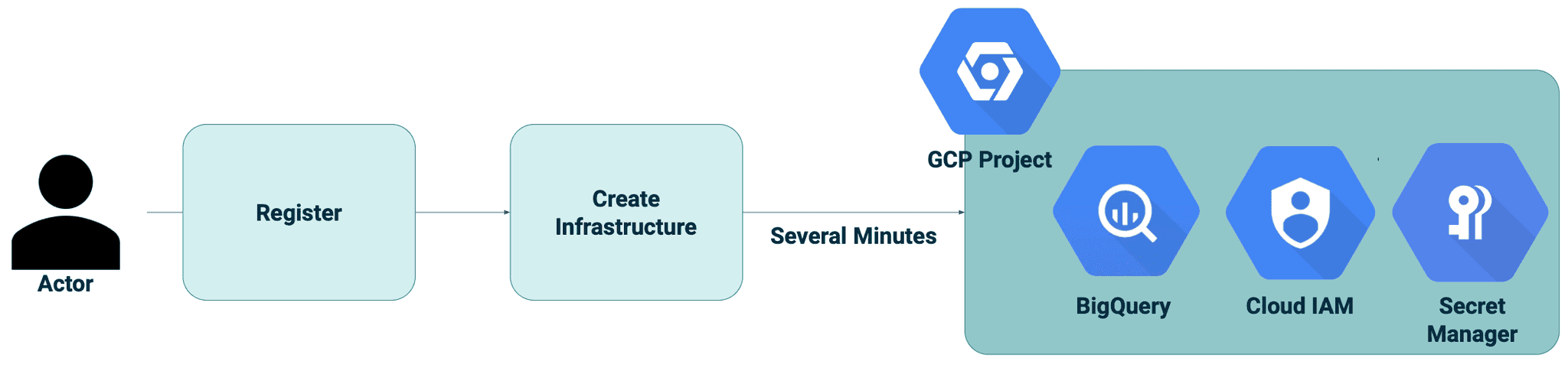
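One practical detail: GCP project IDs may contain only lowercase letters, digits and hyphens, must be 6 to 30 characters long, must start with a letter and cannot end with a hyphen. An underscore-based name like unique_prefix_clever_beaver therefore cannot be used verbatim as a project ID. A minimal sketch, assuming a hypothetical prefix `acme`, of deriving a valid ID from the tenant id:

```python
import re

# Project id rules: 6-30 chars, lowercase letters, digits and hyphens,
# starts with a letter, does not end with a hyphen.
PROJECT_ID_RE = re.compile(r"^[a-z][a-z0-9-]{4,28}[a-z0-9]$")


def tenant_project_id(tenant_id: str, prefix: str = "acme") -> str:
    """Map a tenant id like clever_beaver to a project id like acme-clever-beaver."""
    candidate = f"{prefix}-{tenant_id}".lower().replace("_", "-")
    if not PROJECT_ID_RE.match(candidate):
        raise ValueError(f"{candidate!r} is not a valid GCP project id")
    return candidate


print(tenant_project_id("clever_beaver"))  # acme-clever-beaver
```

Project IDs are also globally unique across GCP, which is one more reason to use a company-specific prefix.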

On the other hand, this approach requires more upfront work, and the user registration process becomes much more complicated.

Creating the required infrastructure is not a fast process and can take several minutes.

Note that this approach can work with thousands of tenants, but it requires direct contact with GCP support to raise the project quota. Support is pleasant to work with, but they may ask questions about the business and its estimated scale. Cloud quota management is an incremental process, and it is highly unlikely that in the first iteration GCP support will set the quota to a value sufficient for the startup's entire life cycle.

To build a system that works like this, you need an automated way of creating, modifying and deleting infrastructure. IaC addresses these problems, though not directly.

Let's assume there is a Terraform module that contains all the required, properly named resources: google_project, google_secret_manager_secret, google_service_account etc. Then the only configuration that needs to be passed to the module is the tenant id, e.g. clever_beaver.

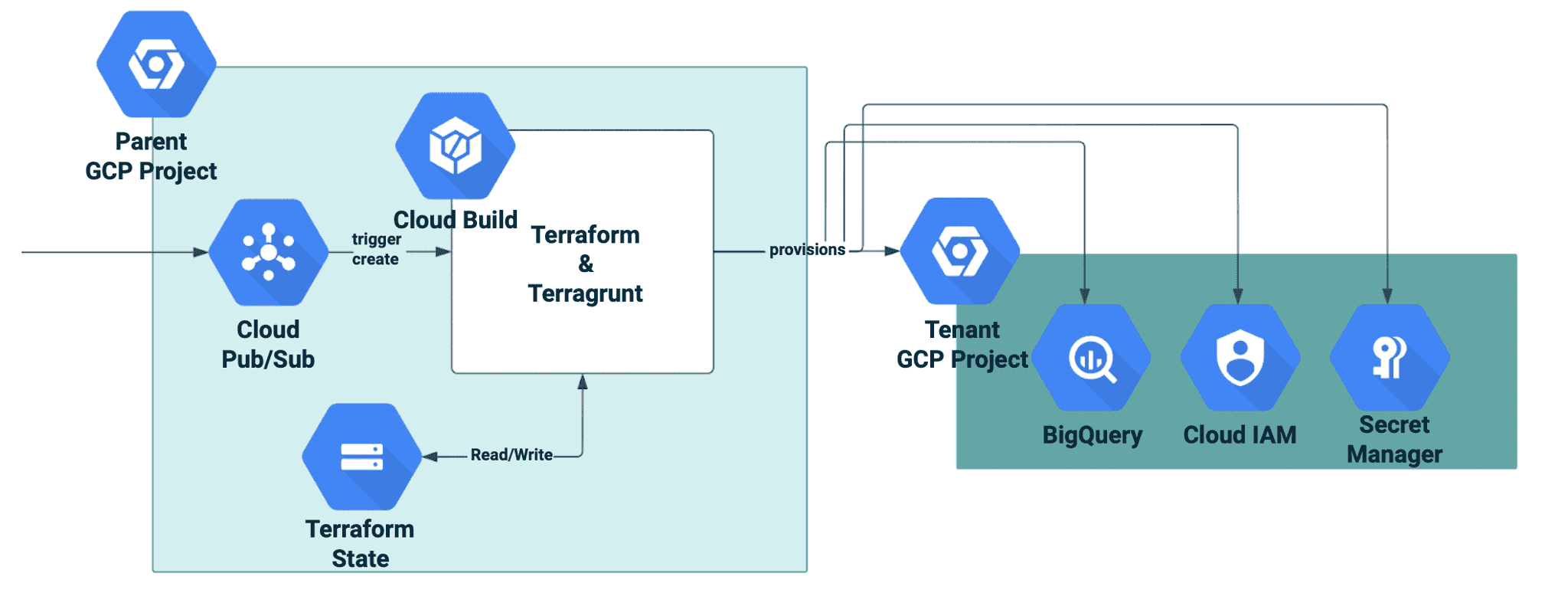

The Terraform module with the required resources has to be executed against real infrastructure, and there are multiple ways to do so. On the diagram below you can see one that uses the Cloud Build service. After receiving a Pub/Sub message with the tenant id in its body, a Cloud Build trigger executes a job responsible for running Terraform (or Terragrunt, if separation of state is required).
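The first step of such a job can be sketched as follows. This assumes, hypothetically, that the message body is JSON like `{"tenant_id": "clever_beaver"}` and models a generic Pub/Sub push envelope, in which `message.data` is base64-encoded; how Cloud Build itself exposes the payload to the job may differ. The module variable name `tenant_id` is also an assumption, while `-auto-approve` and `-var` are standard `terraform apply` flags.

```python
import base64
import json
import re

# Hypothetical convention: tenant ids look like adjective_noun.
TENANT_ID_RE = re.compile(r"^[a-z]+_[a-z]+$")


def tenant_id_from_push(envelope: dict) -> str:
    """Extract and validate the tenant id from a Pub/Sub push request body."""
    raw = base64.b64decode(envelope["message"]["data"])
    tenant_id = json.loads(raw)["tenant_id"]
    if not TENANT_ID_RE.match(tenant_id):
        raise ValueError(f"unexpected tenant id: {tenant_id!r}")
    return tenant_id


def terraform_apply_argv(tenant_id: str) -> list[str]:
    # Non-interactive apply; the tenant id is the module's only input.
    return ["terraform", "apply", "-auto-approve", f"-var=tenant_id={tenant_id}"]


# Example push body in the shape Pub/Sub delivers (names are hypothetical).
body = {
    "message": {
        "data": base64.b64encode(json.dumps({"tenant_id": "clever_beaver"}).encode()).decode(),
        "messageId": "1",
    },
    "subscription": "projects/ops/subscriptions/tenant-provisioning",
}
print(terraform_apply_argv(tenant_id_from_push(body)))
```

Validating the id before it reaches Terraform keeps a malformed message from creating oddly named infrastructure.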

An extension of plain google_project resources worth investigating is GCP's native tenancy support, which currently supports creating a project for each tenant.

When designing such an architecture, you also need to consider centralized projects. Separate projects could host Cloud Monitoring collecting metrics across tenant projects, Artifact Registry storing custom Docker images or packages, and Cloud Build for CI/CD. Separate, special-purpose parent GCP projects are more than welcome.

When it comes to data analysis, there is no difference compared with the previous BigQuery dataset tenancy approach.

Separation by GCP project is great for data organization, as it gives two levels for categorizing data: datasets and tables. For example, the dataset `source_paypal` could hold tables such as paypal_transactions and paypal_balances. Common tools like Fivetran or Airbyte also generate many intermediate tables alongside the resulting data; kept in a single dataset, those intermediate tables would only add clutter.
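A short sketch of the resulting layout, assuming a hypothetical project id `acme-clever-beaver` and the `source_` dataset prefix from the example above: the source becomes the dataset and the resource becomes the table, so fully qualified `project.dataset.table` ids from different sources cannot clash even when their concatenated names would.

```python
def table_ref(project_id: str, source: str, table: str) -> str:
    # Each source gets its own dataset inside the tenant's project.
    return f"{project_id}.source_{source}.{table}"


project = "acme-clever-beaver"  # hypothetical tenant project id
refs = {
    table_ref(project, source, table)
    for source, table in [
        ("paypal", "paypal_transactions"),
        ("paypal", "paypal_balances"),
        ("google_ads", "campaigns"),
        ("google", "ads_campaigns"),  # hypothetical source; no clash
    ]
}
for ref in sorted(refs):
    print(ref)
```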

There is only one rule that needs to be followed: if there is tenant data, it must be stored in a tenant-specific GCP project and nowhere else. In case of churn, it is enough to delete the whole project. Note that this is a soft delete, which means the deletion can be rolled back within 30 days.
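With this rule in place, offboarding reduces to one command. A minimal sketch, assuming a hypothetical project id: `gcloud projects delete` schedules the project for deletion, `gcloud projects undelete` restores it while it is still in the soft-delete window, and the last day for a rollback is simple date arithmetic based on the roughly 30-day window.

```python
from datetime import date, timedelta

# Deleted projects stay restorable for about 30 days.
SOFT_DELETE_DAYS = 30


def delete_argv(project_id: str) -> list[str]:
    # Shuts the project down and schedules it for deletion, without a prompt.
    return ["gcloud", "projects", "delete", project_id, "--quiet"]


def undelete_argv(project_id: str) -> list[str]:
    # Restores a project that is still within the soft-delete window.
    return ["gcloud", "projects", "undelete", project_id]


def restore_deadline(deleted_on: date) -> date:
    return deleted_on + timedelta(days=SOFT_DELETE_DAYS)


project = "acme-clever-beaver"  # hypothetical tenant project id
print(delete_argv(project))
print(restore_deadline(date(2024, 3, 1)))  # 2024-03-31
```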

IAM can be managed at the level of the whole project. Assuming projects are created inside a GCP folder, it is fairly easy to grant permissions to a specific Google group. For example, the data analytics team could have the BigQuery Data Viewer role on the whole folder, while the engineering team could have permissions to run Compute Engine. Permissions granted at folder level are inherited by the projects in it.
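Inheritance can be illustrated with a toy model (not the IAM API): the effective roles of a member on a project are the union of bindings on the project and on all its ancestors. Folder names, project ids and group addresses are hypothetical; `roles/bigquery.dataViewer`, `roles/bigquery.jobUser` and `roles/compute.instanceAdmin.v1` are real predefined roles.

```python
from dataclasses import dataclass, field
from typing import Optional


@dataclass
class Node:
    """A folder or project in the resource hierarchy."""
    name: str
    parent: Optional["Node"] = None
    bindings: dict[str, set[str]] = field(default_factory=dict)  # member -> roles

    def grant(self, member: str, role: str) -> None:
        self.bindings.setdefault(member, set()).add(role)

    def effective_roles(self, member: str) -> set[str]:
        """Union of roles granted on this node and every ancestor."""
        roles: set[str] = set()
        node: Optional[Node] = self
        while node is not None:
            roles |= node.bindings.get(member, set())
            node = node.parent
        return roles


tenants = Node("folders/tenants")
tenants.grant("group:analytics@example.com", "roles/bigquery.dataViewer")
tenants.grant("group:engineering@example.com", "roles/compute.instanceAdmin.v1")

clever = Node("projects/acme-clever-beaver", parent=tenants)
sweet = Node("projects/acme-sweet-borg", parent=tenants)
sweet.grant("group:analytics@example.com", "roles/bigquery.jobUser")  # project-only grant

print(sorted(clever.effective_roles("group:analytics@example.com")))
print(sorted(sweet.effective_roles("group:analytics@example.com")))
```

Granting at folder level means a new tenant project gets the right permissions the moment it is created, with no per-tenant IAM work.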

This article presents two approaches to organizing data in a multi-tenant environment with BigQuery. Both bring trade-offs that have to be understood and considered. Our client case study shows that the GCP project tenancy approach scales better once the initial investment is made. It also helped us move fast at the beginning, as experimenting leaves no leftovers, unlike the BigQuery dataset tenancy approach.

The current state of cloud computing has changed the way applications are designed. Nowadays there is no strict boundary between single- and multi-tenancy, as load management for serverless services is the cloud provider's concern and cloud users can focus on solving business problems. That's why hybrid tenancy, which blends these approaches, is currently a common pattern.
