Data Mesh as an answer

In organizations with more complex Data Lakes, I usually encounter the following problems, which make data usage very inefficient:

- Teams organized horizontally – there are separate teams: data engineers responsible for pipelines and data ingestion, analysts responsible for creating reports, data scientists responsible for creating ML models, and teams responsible for modeling data. Depending on the organization, these teams may appear in different configurations, but the common problem is the dependencies between them. These dependencies make management hard and introduce delays. Usually, the biggest problem is the dependency on Data Engineers, who sooner or later become a bottleneck.

- A single centralized place to store and process data from the whole organization – this is one of the main concepts of Data Lakes, but it fails at scale. I have seen a lot of solutions where raw data was mixed with cleaned, enriched and modeled data. That increases complexity and, past a certain point, makes the data almost impossible to manage.

- Lack of standards – every person in an organization has their own experience and habits, which leads to storing data differently and using different names, conventions and tools to process it. Now let’s imagine a Data Scientist who is trying to use data from multiple sources, prepared in different ways, without knowing what to expect. In the optimistic case, that means hours spent trying to understand the conventions. In the pessimistic case, it may take days or even weeks.

- Difficulty in finding data – a very common situation: even in smaller companies, sometimes you don’t know where the data is. Usually, to find what you are looking for, you need to go from one person to another. Another problem, strongly connected to this one, is the lack of strong ownership. Even if you find the data, you still don’t know who created it, how often it is updated, what its source is, who is using it, how to extend it, etc. This causes headaches and usually ends with the whole process being duplicated with small modifications.

- Lack of trust – this is connected with the previous problem but definitely worth mentioning separately. Data that is available and already in use is often of low quality, incomplete or in need of additional cleaning. It is often said that data scientists spend 80% of their time cleaning data, and that work is usually repeated by different data scientists. Why not do it once?

All these problems grow with the scale of the organization. The more data is processed and the more areas Data Analysts touch, the more difficult it gets. Data Mesh tries to address these problems. All assumptions of the approach can be divided into three pillars:

Team organization and responsibilities

The most important Data Mesh assumption is the decomposition of data into domains. There is no single rule on how to approach it; it strongly depends on the core business and on how the company operates on a daily basis. To illustrate what a domain is, imagine a store. For this kind of business, we could extract the following domains: customer, supplier, product and order.

Teams should be organized around the selected domains and should have strong ownership of the data assigned to them. They should be responsible end to end for all processes: collecting data, transformation, cleaning, enrichment and modeling. To achieve that, teams should also be organized vertically, which means they should include all the roles required to deliver data. This usually includes some of the following roles: DataOps, Data Engineers, Data Scientists, Analysts and Domain Experts. Not every role has to be full-time in a team. Sometimes there is not enough work to fill a full-time (FTE) Data Engineer position, so it is acceptable for technical people to work in a data team part-time. The rest of the time they can be part of a centralized technical team responsible for supporting and maintaining the platform. In this setup, it is important that their highest priority is meeting the data team’s needs.

Even though we work hard on isolating teams, we don’t want to create data silos. Each team should be responsible for providing its prepared datasets to any downstream teams. Teams can still use data from other domains for their own purposes. Some data duplication is also acceptable.

In my opinion, the second most important rule of the Data Mesh approach is treating data as a product. Data that concerns one domain is a product, and every team is responsible for delivering one or a few products. The product’s interface (its API/UI, so to speak) is the data itself, but not all of it. A very important aspect is hiding the internals of a product. Raw data and intermediate state shouldn’t be available outside. Only a presentation layer that contains modeled, enriched and clean data should be visible outside. Managing everything via code repositories also lets other teams propose and contribute adjustments to the presentation layer, but the owning team is always responsible for verifying the changes and making sure they are consistent with its direction. The owning team is responsible for the vision of how the data is delivered and how it should be used from the perspective of its domain.
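To make the split between internals and the presentation layer more concrete, here is a minimal Python sketch. It is only an illustration: the class names, the layer labels (`raw`, `intermediate`, `presentation`) and the dataset names are my assumptions, not part of any Data Mesh standard or tool.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass(frozen=True)
class Dataset:
    name: str
    layer: str  # assumed labels: "raw", "intermediate" or "presentation"


@dataclass
class DataProduct:
    domain: str
    owner_team: str
    datasets: List[Dataset] = field(default_factory=list)

    def public_datasets(self) -> List[str]:
        """Names visible outside the owning team: presentation layer only."""
        return [d.name for d in self.datasets if d.layer == "presentation"]


# Hypothetical product for the "order" domain of the store example.
orders = DataProduct(
    domain="order",
    owner_team="order-team",
    datasets=[
        Dataset("orders_raw_events", "raw"),
        Dataset("orders_deduplicated", "intermediate"),
        Dataset("orders_daily", "presentation"),
    ],
)

print(orders.public_datasets())
```

In this sketch, only `orders_daily` is exposed to other teams. The raw and intermediate datasets stay an implementation detail that the owning team can change freely.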

Standardization and global rules

The implementation of data processing pipelines and the storage of intermediate data are internals of a product. Because of that, theoretically, they could be implemented in any way. In practice, we try to unify tools and infrastructure across the organization. That makes it easier to provide support, move people between projects and onboard new team members. I will write more about the technical aspects of the common platform in the next section. The presentation layer of each product, on the other hand, is available to the whole organization, so it is crucial to introduce strict rules on how data is stored and made available to everyone.

Every team wants its product to be used but also wants to spend as little time as possible on supporting it. That’s why it is very important to introduce a standard approach to data governance. In particular, data should be easily discoverable by anyone who is looking for it. By collecting metadata and introducing self-describing semantics and syntax, it is possible to make data independently discoverable, understandable and consumable. Another important aspect is trustworthiness and truthfulness. No one wants to use data that they can’t trust. Just as we write tests for applications, data must be checked automatically by processes that assure its quality.
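As an illustration of such automated checks, below is a minimal sketch in plain Python. The check functions, column names and sample rows are hypothetical. A real platform would more likely rely on a dedicated data quality tool, but the idea is the same: checks are declared once and run automatically on every delivery.

```python
from typing import Callable, Dict, List

Row = Dict[str, object]
Check = Callable[[List[Row]], List[str]]


def check_not_null(column: str) -> Check:
    """Build a check that reports rows where `column` is missing or None."""
    def check(rows: List[Row]) -> List[str]:
        bad = sum(1 for row in rows if row.get(column) is None)
        return [f"{column}: {bad} null value(s)"] if bad else []
    return check


def check_unique(column: str) -> Check:
    """Build a check that reports duplicated values in `column`."""
    def check(rows: List[Row]) -> List[str]:
        values = [row.get(column) for row in rows]
        duplicates = len(values) - len(set(values))
        return [f"{column}: {duplicates} duplicate value(s)"] if duplicates else []
    return check


def run_checks(rows: List[Row], checks: List[Check]) -> List[str]:
    """Run every check and collect all failure messages."""
    failures: List[str] = []
    for check in checks:
        failures.extend(check(rows))
    return failures


# Hypothetical sample of a presentation-layer dataset with two defects.
rows = [
    {"order_id": 1, "customer_id": 10},
    {"order_id": 2, "customer_id": None},
    {"order_id": 2, "customer_id": 12},
]
failures = run_checks(rows, [check_not_null("customer_id"), check_unique("order_id")])
for message in failures:
    print(message)
```

A pipeline could refuse to publish a new version of the presentation layer whenever `run_checks` returns a non-empty list, in the same way a failing test blocks an application release.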

Even though teams are separated, they still use the same infrastructure under the hood. That’s why proper management of roles and permissions in the data ecosystem is very important. Roles and permissions should separate team responsibilities and hide each team’s internals. A rule from backend engineering applies here: a database is like a toothbrush, it should be used by only one application. The internals of a data product should be available only inside the product, and everyone else should have only read permissions for the presentation layer.
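The permission rule described above can be sketched as a small function. This is a simplified assumption of mine, not the permission model of any specific platform: the team names, layer labels and actions are hypothetical.

```python
def can_access(team: str, owner_team: str, layer: str, action: str) -> bool:
    """Return True if `team` may perform `action` on a dataset in `layer`.

    Assumed rule: the owning team has full access to its own product,
    everyone else may only read the presentation layer.
    """
    if team == owner_team:
        return True
    return layer == "presentation" and action == "read"


# The owning team can write to its own raw data.
print(can_access("order-team", "order-team", "raw", "write"))
# Another team can read the presentation layer...
print(can_access("customer-team", "order-team", "presentation", "read"))
# ...but cannot read internals or modify the presentation layer.
print(can_access("customer-team", "order-team", "raw", "read"))
print(can_access("customer-team", "order-team", "presentation", "write"))
```

In practice such a rule would be expressed with the roles and grants of the underlying infrastructure rather than in application code, but the logic stays this simple.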

Self-serve data platform

To follow the rules described above, we need to build a platform that serves these needs. We need to select tools, integrate them and deliver them in a way that less technical people can use. The goal of Data Mesh is to provide a platform that all data teams can use with minimal or no support from other dedicated teams. Although processing pipelines are part of the internal complexity of data domains and are implemented by the data teams themselves, the platform should provide tools and frameworks that make this as easy as possible. We want teams to be self-sufficient, and we want to minimize Data Engineers’ involvement in the daily work of Analysts and Data Scientists.

A very important aspect of creating such an environment is automation. All possible actions, such as deployments or compilations, should be automated transparently, out of the box.

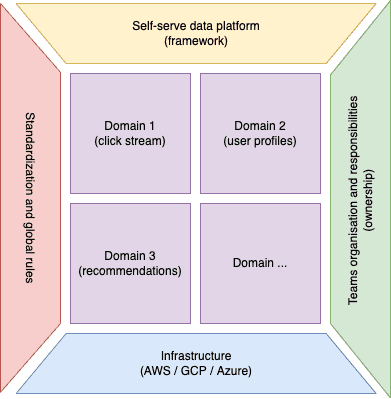

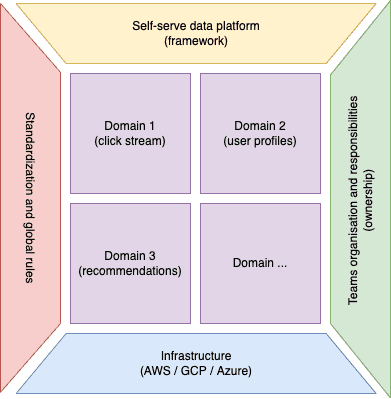

The picture above illustrates the Data Mesh approach from a high-level perspective.

To sum up, at the center we have identified, selected and well-defined bounded contexts, around which teams are formed. The teams, with strong ownership, follow standards and global organization rules when creating and delivering solutions (data products). Teams use a common platform with a set of tools that make their work easier. The platform is deployed on infrastructure that can be either on-premise or cloud-based.

Data Mesh and Microservices

Most Data Engineers, including me, originally come from backend development or are at least familiar with the Microservices concept. For these people, I think Microservices is the best analogy to explain Data Mesh. About 10 years ago, the only widely known approach to building systems was the monolith. Time went by, applications grew and monoliths became unmanageable. It is very similar in the data world. Data lakes, still popular today, have reached critical mass and are no longer manageable. They are like monoliths, and the next step in the evolution is Data Mesh. As with Microservices, Data Mesh promotes the separation of data into bounded contexts. Communication between bounded contexts should be based on rules and contracts (the presentation layer). To manage multiple separate products, automation is necessary. Microservices usually share the same infrastructure: they are deployed either on Kubernetes or on any other system that supports containers. In the Data Mesh approach, Data Products also share the same infrastructure. It can be the old and well-known Hadoop, the cloud-based Google BigQuery or the platform-agnostic Snowflake.
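To show what a contract on the presentation layer could look like, here is a minimal Python sketch, similar in spirit to checking an API for backward compatibility. The schema representation (column name mapped to a type name) and the column names are my assumptions, made only for illustration.

```python
from typing import Dict, List

Schema = Dict[str, str]  # column name -> type name (assumed representation)


def breaking_changes(published: Schema, proposed: Schema) -> List[str]:
    """List changes that would break consumers of the published schema.

    Adding a new column is treated as safe. Removing a column or
    changing its type is treated as a breaking change.
    """
    problems: List[str] = []
    for column, column_type in published.items():
        if column not in proposed:
            problems.append(f"removed column: {column}")
        elif proposed[column] != column_type:
            problems.append(
                f"type changed: {column} {column_type} -> {proposed[column]}"
            )
    return problems


# Hypothetical contract of the "order" product and a proposed new version.
published = {"order_id": "int", "customer_id": "int", "total": "decimal"}
proposed = {"order_id": "int", "total": "float", "currency": "string"}

for problem in breaking_changes(published, proposed):
    print(problem)
```

A check like this could run automatically on every proposed change to the presentation layer, so the owning team sees right away whether a contribution would break downstream consumers.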