
The LinkedIn Engineering blog is a great resource for technical posts about building and using large-scale data pipelines with Kafka and its “ecosystem” of tools. In this post, I provide several pictures and diagrams (including quotes) that summarise how the data pipeline has evolved at LinkedIn over the years. The actual content is based on LinkedIn’s articles and presentations, which transparently describe the pros and cons of their data infrastructure (thanks, LinkedIn, for sharing!).

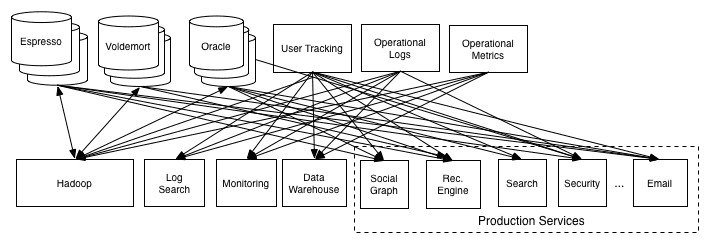

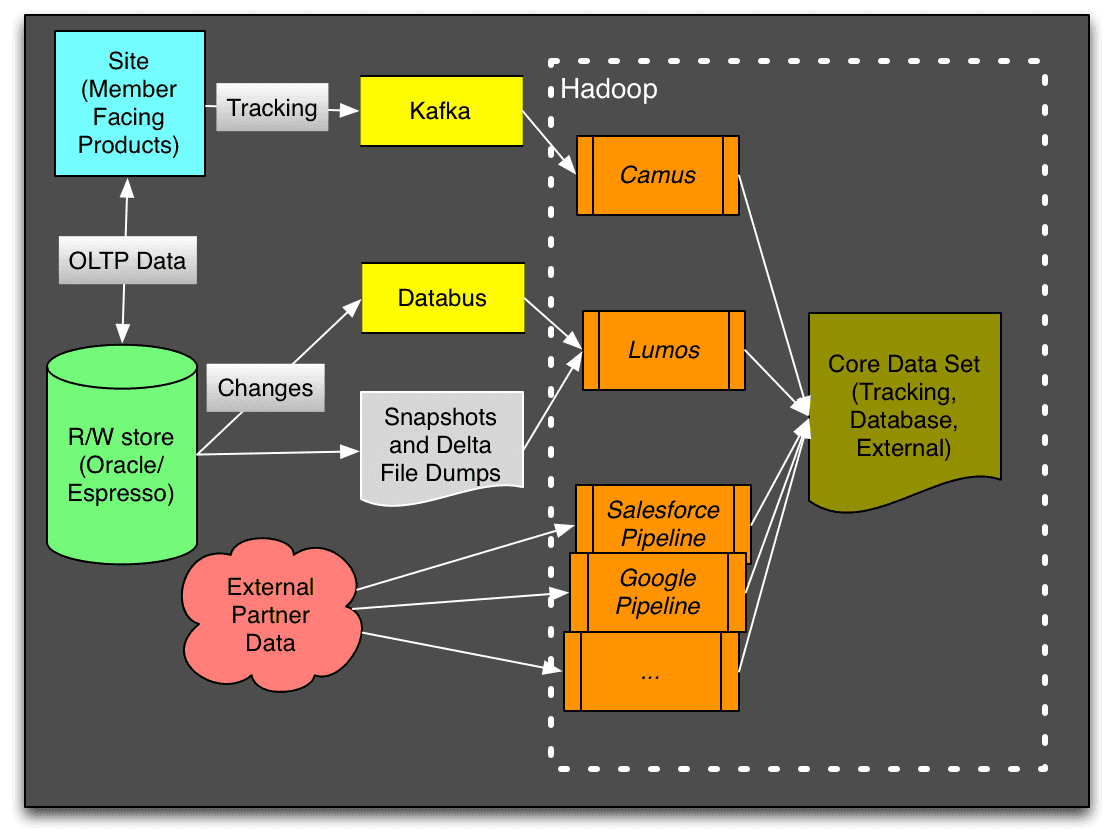

“We had dozens of data systems and data repositories. Connecting all of these would have led to building custom piping between each pair of systems, something like this:”

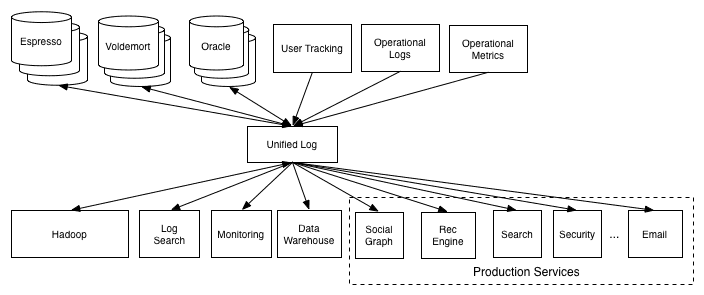

“Instead, we needed something generic like this:”

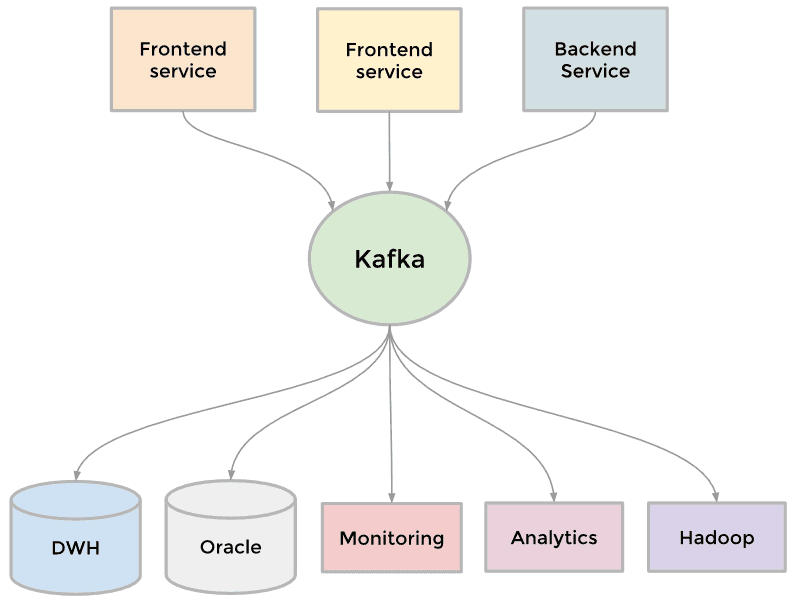
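The difference between the two diagrams is largely arithmetic. As a rough sketch, assume the worst case in which every system both sends data to and receives data from every other system: point-to-point integration then needs one custom pipeline per ordered pair of systems, n(n-1), while routing everything through a central log needs only one pipeline in and one pipeline out per system, 2n. The function names below are purely illustrative.

```python
def point_to_point_pipelines(n: int) -> int:
    """One-way custom pipelines needed if every system exchanges data with every other."""
    # Each ordered (source, destination) pair gets its own pipeline.
    return n * (n - 1)


def hub_pipelines(n: int) -> int:
    """Pipelines needed if every system talks only to a central log such as Kafka."""
    # One pipeline into the hub and one out of it per system.
    return 2 * n


if __name__ == "__main__":
    for n in (5, 10, 30):
        print(f"{n:>3} systems: point-to-point={point_to_point_pipelines(n):>4}, hub={hub_pipelines(n):>3}")
```

For 30 systems (“dozens”), that is 870 point-to-point pipelines versus 60 through the hub: quadratic versus linear growth.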

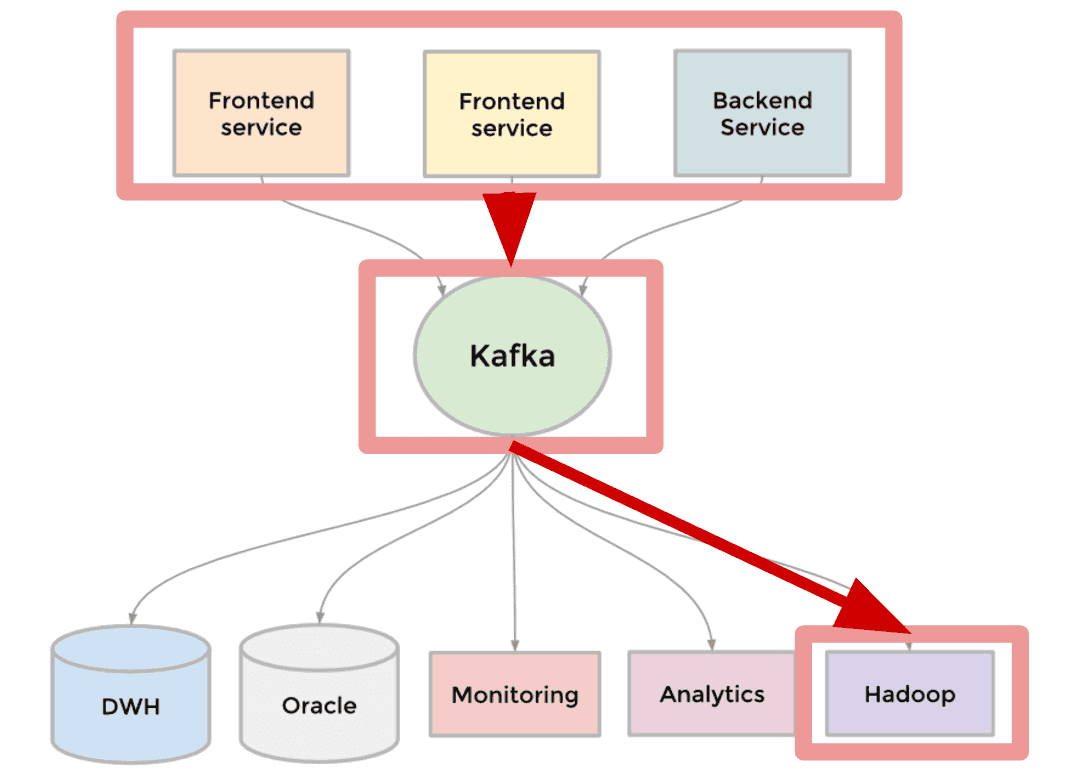

“Kafka became a universal pipeline (…) It enabled near real-time access to any data source, empowered our Hadoop jobs, allowed us to build real-time analytics, vastly improved our site monitoring and alerting capability, and enabled us to visualize and track our call graphs.”
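What makes Kafka usable as a “universal pipeline” is its core abstraction: an ordered, append-only log in which producers append records and each consumer tracks its own read position (offset). Because of that, a real-time monitoring job and a periodic Hadoop load can read the same stream independently, each at its own pace. The toy model below illustrates only that idea; the class names and in-memory storage are hypothetical, it is not Kafka's client API, and it ignores partitions, replication and retention.

```python
from dataclasses import dataclass, field
from typing import Any


@dataclass
class Topic:
    """Toy append-only log (an illustration of the concept, not Kafka's API)."""
    records: list[Any] = field(default_factory=list)

    def append(self, record: Any) -> int:
        """Append a record and return its offset, i.e. its position in the log."""
        self.records.append(record)
        return len(self.records) - 1


@dataclass
class Consumer:
    """Reads a topic at its own pace by remembering the next offset to read."""
    topic: Topic
    offset: int = 0

    def poll(self, max_records: int = 10) -> list[Any]:
        # Return up to max_records records starting at this consumer's offset.
        batch = self.topic.records[self.offset:self.offset + max_records]
        self.offset += len(batch)
        return batch


if __name__ == "__main__":
    page_views = Topic()
    for user in ("alice", "bob", "carol"):
        page_views.append({"user": user, "page": "/feed"})

    monitoring = Consumer(page_views)     # e.g. a real-time alerting job
    hadoop_loader = Consumer(page_views)  # e.g. a periodic batch load

    print(len(monitoring.poll()))                   # 3: reads everything available
    print(len(hadoop_loader.poll(max_records=2)))   # 2: reads at its own pace
    print(hadoop_loader.offset, monitoring.offset)  # 2 3
```

Neither consumer affects the other: adding a new destination means adding a new consumer, not a new pipeline from every source.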

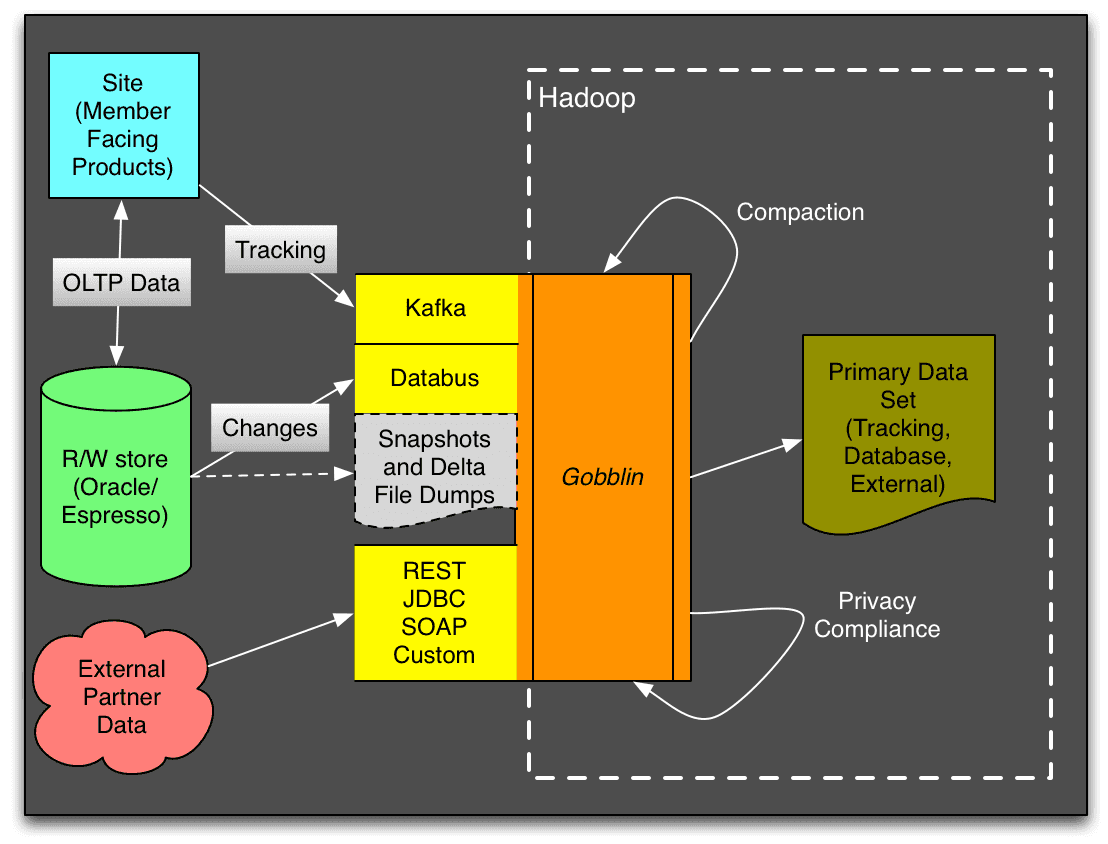

As simple as the picture below:

“The figure shows the complexity of the data pipelines. Some of the solutions like our Kafka-etl (Camus), Oracle-etl (Lumos) and Databus-etl pipelines were more generic and could carry different kinds of datasets, others like our Salesforce pipeline were very specific. At one point, we were running more than 15 types of data ingestion pipelines and we were struggling to keep them all functioning at the same level of data quality, features and operability.”

A quote from Jay Kreps, written several years ago:

“Note that data often flows in both directions, as many systems (databases, Hadoop) are both sources and destinations for data transfer. This meant we would end up building two pipelines per system: one to get data in and one to get data out.”

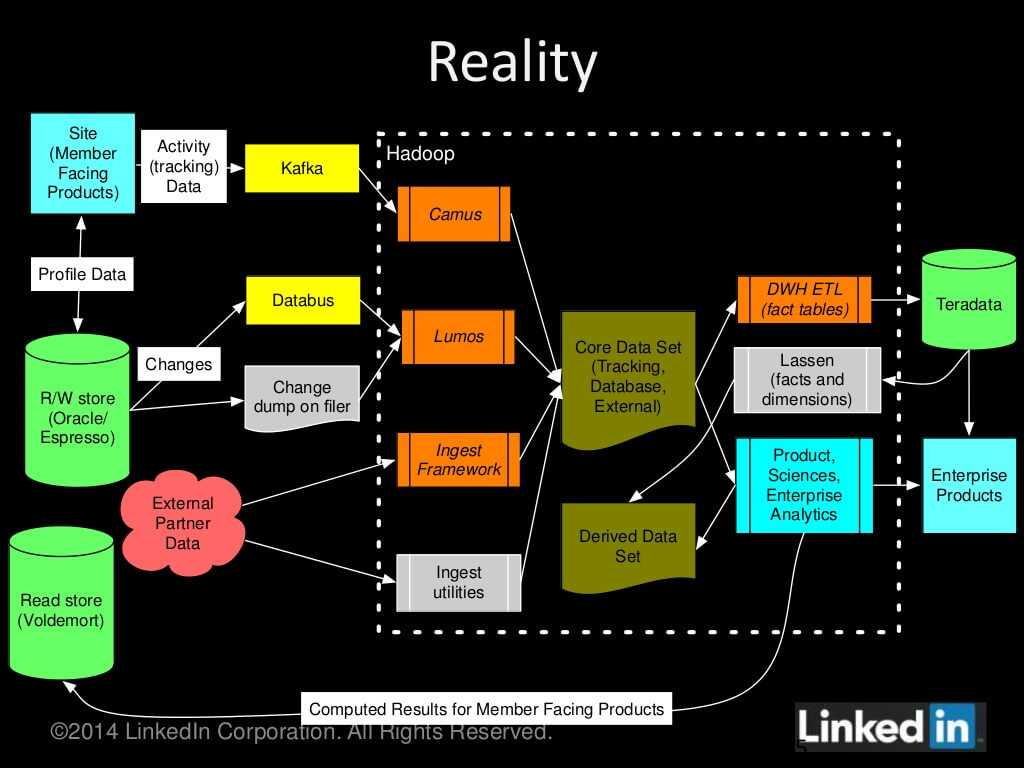

It seems the advice above didn’t save LinkedIn from building a complex system like the one below:

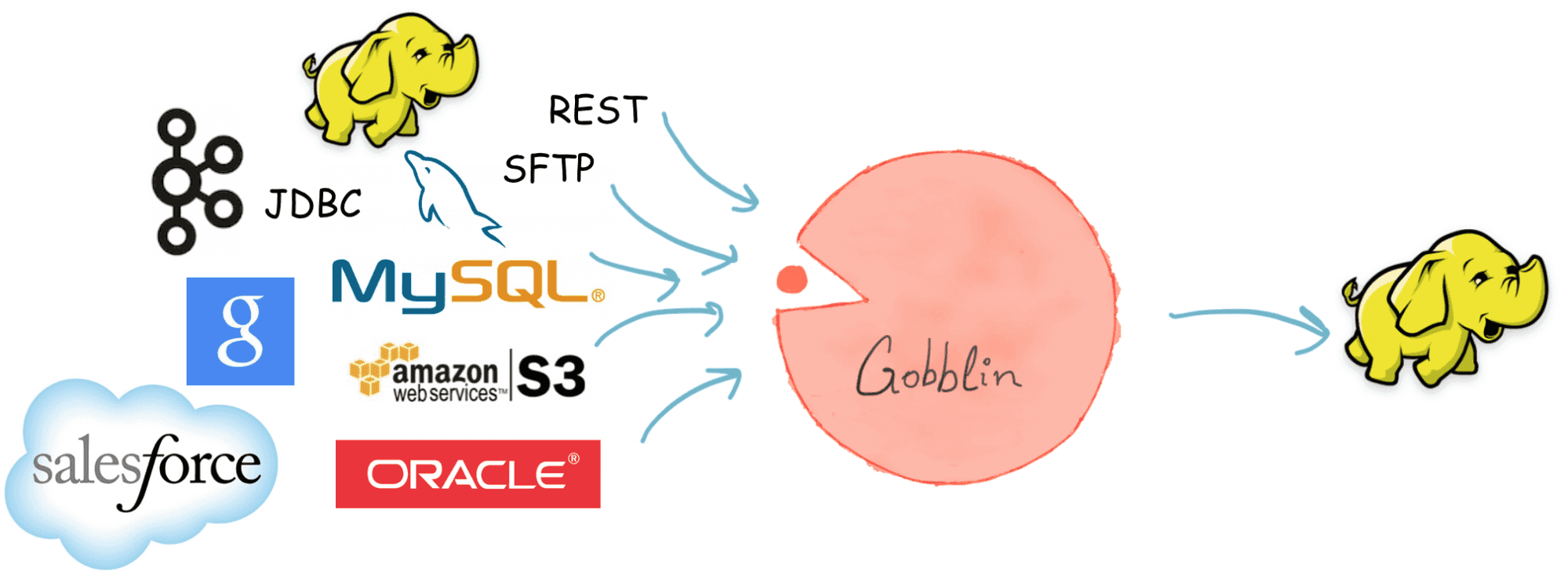

“Late last year (2013), we took stock of the situation and tried to categorize the diversity of our integrations a little better. (…) We also realized there were some common patterns and requirements. (…) We’ve brought these demands together to form the basis for our uber-ingestion framework Gobblin. As the figure below shows, Gobblin is targeted at ‘gobbling in’ all of LinkedIn’s internal and external datasets through a single framework.”

“Our motivations for building Gobblin stemmed from our operational challenges in building and maintaining disparate pipelines for different data sources across batch and streaming ecosystems. (…) Our first target sink was Hadoop’s ubiquitous HDFS storage system and that has been our focus for most of last year. (…) At LinkedIn, Gobblin is currently integrated with more than a dozen data sources including Salesforce, Google Analytics, Amazon S3, Oracle, LinkedIn Espresso, MySQL, SQL Server, SFTP, Apache Kafka, patent and publication sources, CommonCrawl, etc.”
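How can a single framework serve more than a dozen very different sources? As we understand Gobblin's documentation, each ingestion job is a chain of pluggable stages: a source that splits the work into work units, extractors that pull records, converters that transform them, quality checkers that accept or reject them, writers that write to a staging area, and a publisher that commits the output. Only the source-specific stages change from one dataset to the next. The Python sketch below compresses that chain into one hypothetical function to show the pattern; the names are illustrative and are not Gobblin's Java interfaces.

```python
from typing import Callable, Iterable

Record = dict


def run_ingestion(
    extract: Callable[[], Iterable[Record]],
    converters: list[Callable[[Record], Record]],
    quality_checks: list[Callable[[Record], bool]],
    write: Callable[[list[Record]], None],
) -> tuple[int, int]:
    """Run one ingestion task: extract -> convert -> check quality -> write.

    Returns (records_written, records_rejected).
    """
    accepted: list[Record] = []
    rejected = 0
    for record in extract():
        # Apply every converter in order (e.g. schema mapping, normalization).
        for convert in converters:
            record = convert(record)
        # Keep the record only if it passes every quality check.
        if all(check(record) for check in quality_checks):
            accepted.append(record)
        else:
            rejected += 1
    write(accepted)  # a separate publish step would then commit the staged output
    return len(accepted), rejected


if __name__ == "__main__":
    staging: list[Record] = []
    rows = [{"id": 1, "email": "A@Example.com"}, {"id": None, "email": "b@example.com"}]
    written, rejected = run_ingestion(
        extract=lambda: rows,                                          # source-specific
        converters=[lambda r: {**r, "email": r["email"].lower()}],     # reusable
        quality_checks=[lambda r: r["id"] is not None],                # reusable
        write=staging.extend,                                          # sink-specific
    )
    print(written, rejected, staging)  # 1 1 [{'id': 1, 'email': 'a@example.com'}]
```

Swapping the `extract` callable for, say, a Salesforce or SFTP reader leaves the rest of the chain untouched, which is the reuse the quote above describes.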

Sooner or later, Gobblin will also be integrated with sinks other than Hadoop, such as real-time stream processing frameworks (e.g. Samza, Storm, Flink Streaming, Spark Streaming).

“The ideal deployment scenario is where we can deploy Gobblin in continuous ingestion mode. (…) This will bring further latency reductions in our ingestion from streaming sources, enable resource utilization efficiencies and allow us to integrate with streaming sinks seamlessly.”

Even though Gobblin is probably the most recent data-ingestion innovation at LinkedIn, there is one more brand-new project that might make a difference soon.

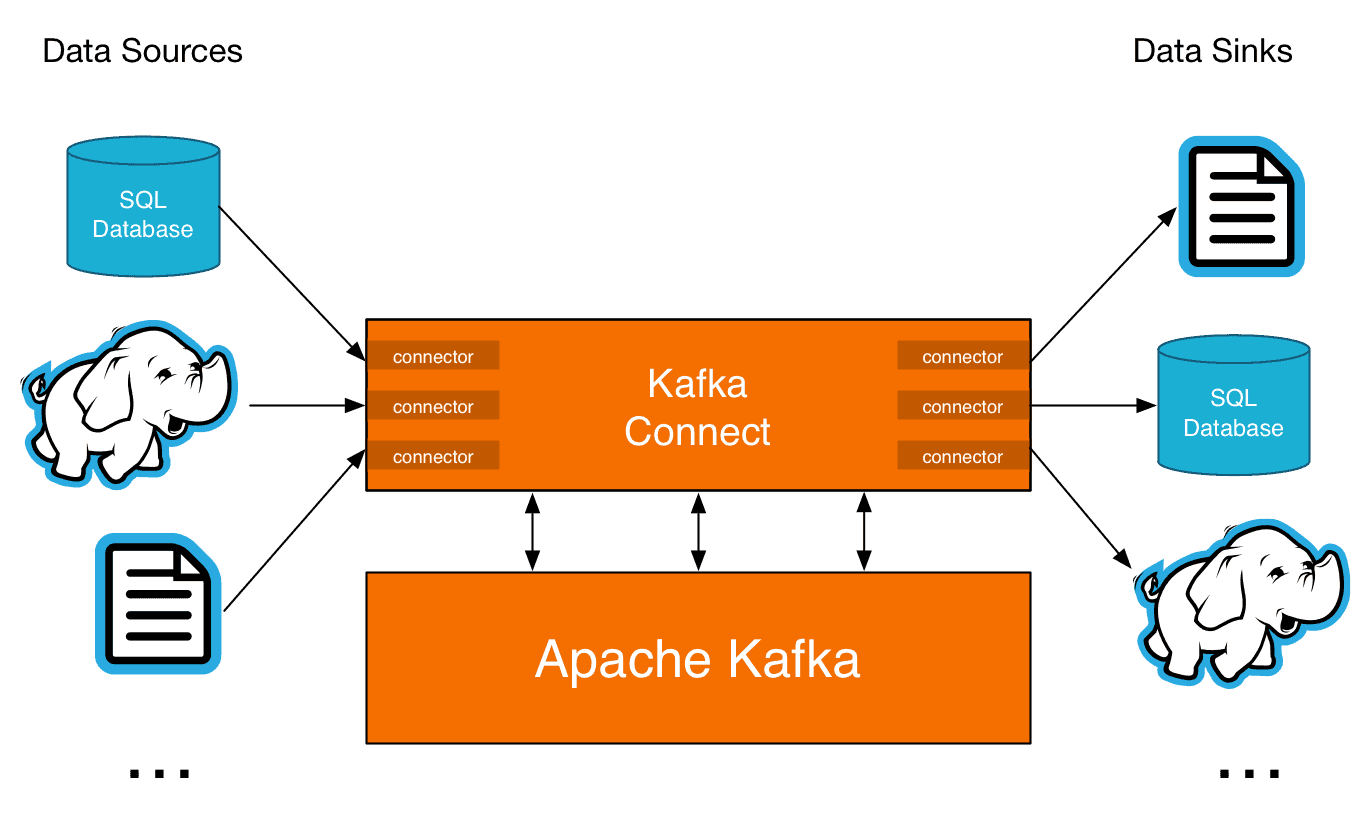

“Kafka Connect is a tool for copying data between Kafka and a variety of other systems, ranging from relational databases to logs and metrics, to Hadoop and data warehouses, to NoSQL data stores, to search indexes, and more.”
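With Kafka Connect, an integration is declared as configuration rather than written as a custom pipeline: you submit a connector definition (a name plus a `config` map) to a Connect worker's REST API with `POST /connectors`. The sketch below builds such a request for the `FileStreamSourceConnector` example connector that ships with Apache Kafka, which streams lines of a text file into a topic. The file path, topic name and the worker URL (`localhost` on the default REST port 8083) are assumptions, and in recent Kafka releases the file connectors may first need to be added to the worker's plugin path. The code only prepares the request; it does not send it.

```python
import json
import urllib.request

# Assumption: a Kafka Connect worker listening on the default REST port.
CONNECT_URL = "http://localhost:8083/connectors"


def file_source_connector(name: str, path: str, topic: str) -> dict:
    """Build a connector definition that copies lines of a text file into a Kafka topic."""
    return {
        "name": name,
        "config": {
            # Example connector bundled with Apache Kafka.
            "connector.class": "org.apache.kafka.connect.file.FileStreamSourceConnector",
            "tasks.max": "1",
            "file": path,
            "topic": topic,
        },
    }


def build_request(definition: dict) -> urllib.request.Request:
    """Prepare (but do not send) the POST /connectors call that creates the connector."""
    return urllib.request.Request(
        CONNECT_URL,
        data=json.dumps(definition).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


if __name__ == "__main__":
    request = build_request(file_source_connector("local-file-source", "/tmp/test.txt", "connect-test"))
    print(request.get_method(), request.full_url)
    print(request.data.decode("utf-8"))
    # urllib.request.urlopen(request) would submit it to a running Connect worker.
```

Moving the same data to a different system would mean swapping the connector class and its settings, not writing a new pipeline.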

Although it’s great to see new open-source world-class tools that simplify Big Data ingestion, the reality is much more complex than the vision.

A picture is not always worth a thousand words; sometimes a picture needs a thousand words of explanation.
