In one of our recent blog posts, Announcing the GetInData Modern Data Platform - a self-service solution for Analytics Engineers, we shared our vision of the Modern Data Platform. We intended to create a workspace every analytics engineer would love to use, so we placed dbt at the center of our framework. dbt allows us to build data pipelines quickly, efficiently and with great attention to data quality - the only skill required of users is proficiency in SQL.

Much has already been said about dbt's capabilities for transforming data within a data warehouse for reporting purposes. But what if we would like to use this powerful tool for something more advanced, like ML pipelines? Working on ML models usually requires not only a strong mathematical background, but also proficiency in a programming language such as Python, which means that it often takes a lot of time and money to generate business value from advanced analytics solutions. This is not the case with BigQuery ML, where feature engineering, model training and tuning can be done within the data warehouse without moving the data and, very conveniently, using SQL only.

This means that work on the model can be undertaken not only by a data scientist, but also by a data analyst or analytics engineer. BigQuery ML may prove to be a solution that delivers sufficient results without the need to build an entire Data Science team. It can also be a starting point for more advanced Data Science challenges. But how can we combine all these data transformations and feature engineering steps into one consistent and manageable pipeline that follows best practices from the engineering world? This is where dbt and BQML join forces!

In this tutorial we will show you that it is possible, and easier than you might think. For this purpose, we will use the dbt_ml package, which allows you to create BigQuery ML models using dbt. Everything is done directly on the MDP platform, so we get not only a model but also a pipeline for training it, without any additional infrastructure work.

As part of testing BigQuery ML on our platform, we created a simple customer segmentation model. For this purpose, we used a transaction dataset from Kaggle. It contains household-level transactions over two years from a group of 2,500 households that are frequent customers of a retail chain. It includes all of each household's purchases, not just those from a limited set of categories.

The data contains information about where and when each transaction took place, its value, and details related to promotional campaigns.

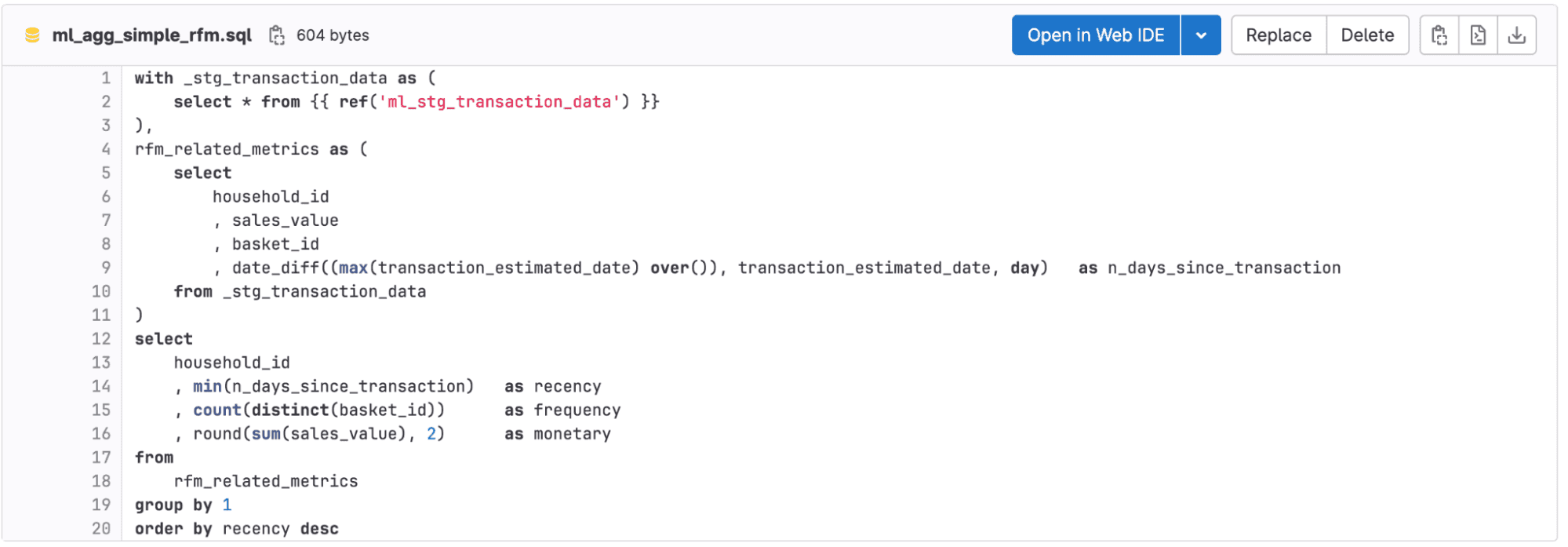

Before we move on to creating a model, we need to look at the data and check its quality, e.g. how many nulls occur and what the individual statistics and dependencies look like. For this purpose, we did an exploratory data analysis, of which we show here only the results: the input variables to the model. Such an analysis can be performed in R, SQL or Python. We prepared the input table for our model in the dbt project using SQL. It contains features on a household level:

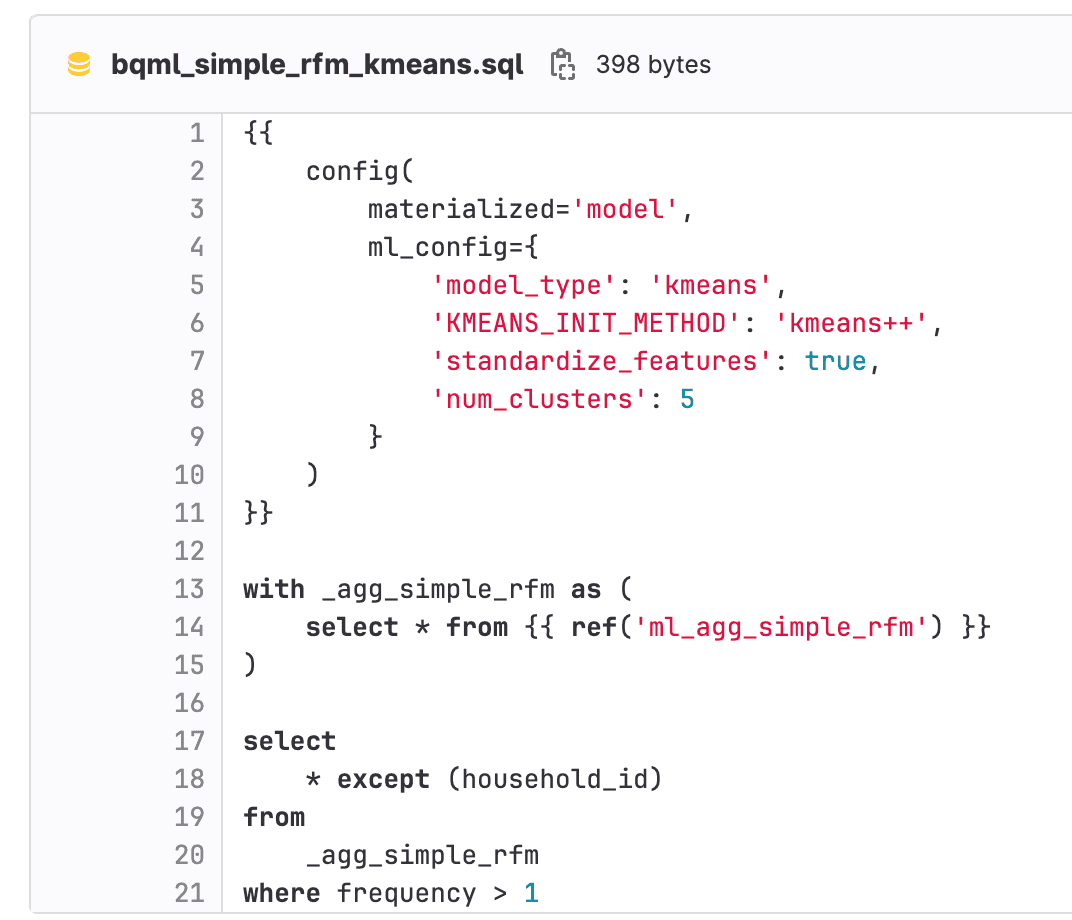
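The input table itself was built in SQL inside the dbt project. Purely as an illustration of the kind of aggregation involved, here is a minimal Python sketch that rolls transaction rows up to one record per household. The row layout and the three features (visits, average basket value, days since last purchase) are assumptions chosen to match the segment descriptions later in this post, not the exact columns shown in the image.

```python
from collections import defaultdict
from datetime import date

# Hypothetical transaction rows: (household_id, basket_id, purchase_date, sales_value).
transactions = [
    (1, "b1", date(2023, 1, 5), 20.0),
    (1, "b1", date(2023, 1, 5), 10.0),
    (1, "b2", date(2023, 3, 1), 50.0),
    (2, "b3", date(2023, 1, 20), 15.0),
]


def household_features(rows, as_of):
    """Aggregate transaction rows into one feature record per household."""
    baskets = defaultdict(lambda: defaultdict(float))  # household -> basket -> value
    last_purchase = {}
    for household_id, basket_id, purchase_date, value in rows:
        baskets[household_id][basket_id] += value
        previous = last_purchase.get(household_id)
        if previous is None or purchase_date > previous:
            last_purchase[household_id] = purchase_date

    features = {}
    for household_id, by_basket in baskets.items():
        features[household_id] = {
            # Number of distinct baskets, used here as a proxy for store visits.
            "visits": len(by_basket),
            "avg_basket_value": sum(by_basket.values()) / len(by_basket),
            "days_since_last_purchase": (as_of - last_purchase[household_id]).days,
        }
    return features


features = household_features(transactions, as_of=date(2023, 3, 31))
```

In SQL the same result would typically come from a `GROUP BY` on the household identifier, which is what makes it a natural fit for a dbt model.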

Not only the data preparation but also the model definition is simple, because everything is created using SQL. We used the k-means++ algorithm to create five clusters. In this variant the initial centroids are picked at random, but points that lie far from the centroids already chosen are more likely to be picked than nearby ones. The differences between the various k-means algorithms are explained in an interesting way in the article Understanding K-Means, K-Means++ and, K-Medoids Clustering Algorithms.
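To make the k-means++ idea more concrete, here is a minimal pure-Python sketch of the seeding step only. It is not how BigQuery ML implements it internally; the function names and the sample points are hypothetical. The first centroid is drawn uniformly at random, and each subsequent one is drawn with probability proportional to its squared distance from the nearest centroid already chosen.

```python
import random


def squared_distance(a, b):
    """Squared Euclidean distance between two equal-length tuples."""
    return sum((x - y) ** 2 for x, y in zip(a, b))


def kmeans_pp_init(points, k, seed=0):
    """Pick k initial centroids from points using k-means++ seeding."""
    if k > len(set(points)):
        raise ValueError("k must not exceed the number of distinct points")
    rng = random.Random(seed)
    # The first centroid is chosen uniformly at random.
    centroids = [rng.choice(points)]
    while len(centroids) < k:
        # Weight each point by the squared distance to its nearest chosen centroid,
        # so far-away points are favoured and already chosen points get weight 0.
        weights = [min(squared_distance(p, c) for c in centroids) for p in points]
        centroids.append(rng.choices(points, weights=weights, k=1)[0])
    return centroids


# Hypothetical two-dimensional feature vectors forming three loose groups.
points = [(0.0, 0.0), (0.1, 0.0), (5.0, 5.0), (5.1, 5.0), (10.0, 0.0), (10.0, 0.2)]
initial_centroids = kmeans_pp_init(points, k=3)
```

After seeding, the usual k-means iterations (assign points to the nearest centroid, recompute centroids) proceed as normal; the seeding only affects where they start.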

A simple syntax allows you to define the model parameters. This information is passed to BigQuery, which performs the calculations and writes the results to our BigQuery dataset.

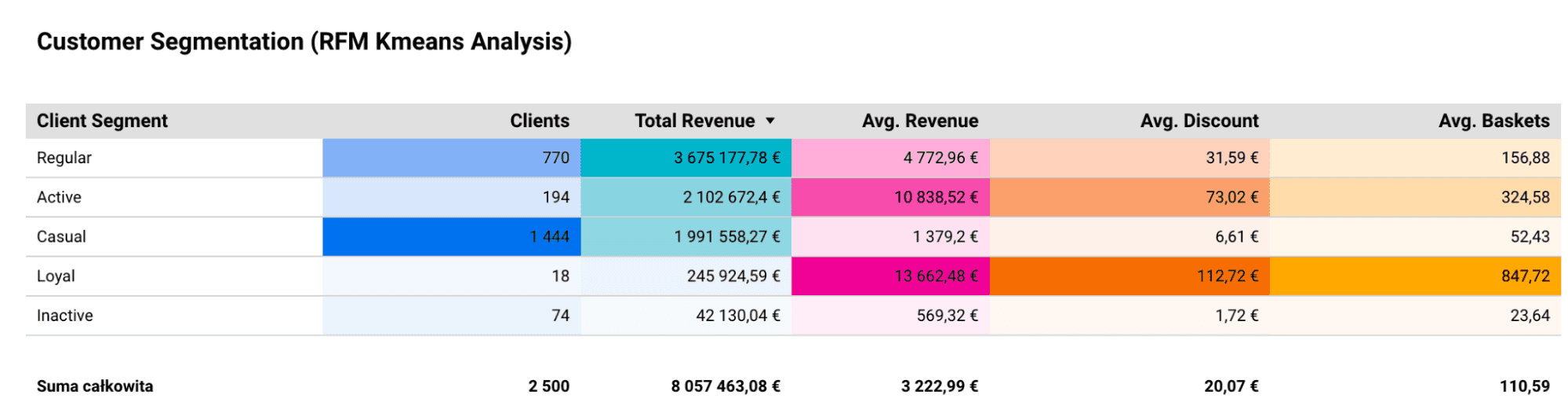
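The model definition we used is the one in the image above. As a rough illustration of what BigQuery ends up running, BigQuery ML trains a model through a `CREATE MODEL` statement, and the sketch below renders such a statement as a string in Python. The model name, table name and feature columns are hypothetical, and the exact SQL generated by dbt_ml may differ.

```python
def render_create_model(model_name, source_table, feature_columns, num_clusters=5):
    """Render a BigQuery ML CREATE MODEL statement for k-means clustering."""
    # Every selected column is treated as a feature, so identifiers such as
    # the household id should be left out of feature_columns.
    columns = ",\n  ".join(feature_columns)
    return (
        f"CREATE OR REPLACE MODEL `{model_name}`\n"
        "OPTIONS (\n"
        "  model_type = 'KMEANS',\n"
        f"  num_clusters = {num_clusters},\n"
        "  kmeans_init_method = 'KMEANS++'\n"
        ")\n"
        "AS\n"
        "SELECT\n"
        f"  {columns}\n"
        f"FROM `{source_table}`"
    )


# Hypothetical names, for illustration only.
statement = render_create_model(
    model_name="analytics.customer_segments",
    source_table="analytics.household_features",
    feature_columns=["visits", "avg_basket_value", "days_since_last_purchase"],
)
print(statement)
```

With dbt_ml the same parameters are declared in the dbt model's configuration instead, so the statement never has to be written by hand.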

The output of the model is a customer segment for each household. The result is five customer groups. The first consists of people who probably no longer shop in the observed stores. Cluster number two seems to be the group of the best customers, because they visit the store often and their baskets have a high average value. The third group consists of prospective customers worth fighting for, as they have not made a transaction for a long time. The other two groups are those who visit the chain of stores to a greater or lesser extent. When creating the final tables, we added the predicted segments to the transactional table to make the visualization work easier.

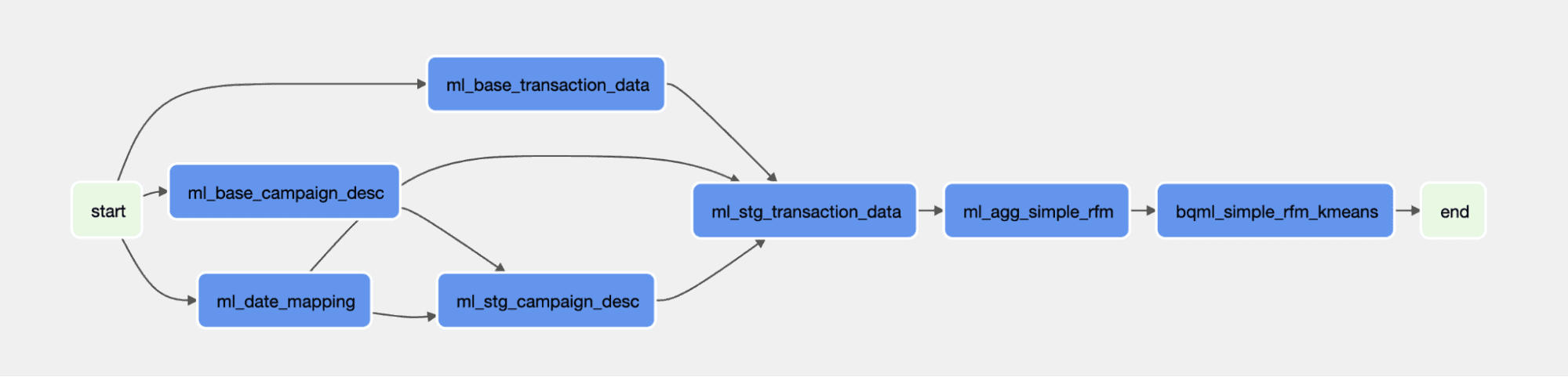

The advantage of using our tool is a ready-made pipeline, which is created as part of the project. CI/CD checks the code and passes it to Airflow, then Composer passes the information to BigQuery ML to run the model. The platform plays the role of an orchestrator. Thanks to this, we are relieved of the work related to MLOps.

The created model can be used to segment new customers. Thanks to the pipeline, the CI/CD process feeds new data into the model and maps it to the appropriate segments. The data is loaded directly into the table, so the report built on top of it is updated as well.
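Conceptually, segmenting a new customer with a trained k-means model means finding the closest centroid. In BigQuery ML this is handled by `ML.PREDICT`; the minimal Python sketch below only illustrates the idea, with hypothetical centroid values and feature vectors that are assumed to be on a common scale already.

```python
def assign_segment(feature_vector, centroids):
    """Return the id of the centroid closest to feature_vector."""
    def squared_distance(centroid_id):
        return sum((f - c) ** 2 for f, c in zip(feature_vector, centroids[centroid_id]))

    return min(centroids, key=squared_distance)


# Hypothetical centroids keyed by segment id.
centroids = {1: (0.0, 0.0), 2: (10.0, 10.0)}

# Hypothetical new households, already expressed as feature vectors.
new_households = {"h-101": (1.0, 2.0), "h-102": (9.0, 8.0)}
segments = {hid: assign_segment(vec, centroids) for hid, vec in new_households.items()}
```

Because the centroids stay fixed after training, new households can be scored on every pipeline run without retraining the model.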

To prepare the visualization, we used Looker Studio, which is integrated with our platform. The table contains some basic metrics such as revenue, average basket value and discounts.

In this tutorial we demonstrated how to define an end-to-end data pipeline using dbt and BigQuery ML, and we discussed the pros and cons of such a solution. A complete step-by-step implementation of BQML was also presented in one of our posts, A Step-by-Step Guide to Training a Machine Learning Model using BigQuery ML (BQML). The combination of dbt and BigQuery ML allows for the creation of machine learning models without the need for a dedicated project or a different language like Python. By leveraging these models, it is possible to build analyses that address more advanced business problems, such as customer segmentation. The ease of use is undoubtedly a great advantage. Models are defined in SQL, and with the dbt_ml package the syntax will already be familiar to dbt users.

The output of the model is stored in BigQuery, where it can be easily analyzed directly or with other visualization tools. The dbt_ml package also allows everything to be maintained within the dbt project, providing version control and keeping the analytical layer in one place. This is a significant convenience, because without it model creation would happen outside the ETL process and would be completely separate.

Such a solution offers great possibilities as it makes machine learning more accessible and can lead to better insights. For companies familiar with ML, this combination can be treated as a reconnaissance stage for larger projects with a low time investment. It's worth mentioning that the ready-made implementation of algorithms offers convenience but comes with a trade-off of limited customization. When implementing an algorithm from scratch, we have a greater sense of control and predictability. However, this drawback is not unique to this type of solution, as similar considerations can be observed when using packages in Python or R.

It turns out that dbt is not only about batch transformations in reporting workloads. It also supports the development of ML models, and quite soon its use cases in real-time analytics will be very common as well. At GetInData, we recently created a dbt-flink adapter that allows pipelines defined in SQL in a dbt project to run on Apache Flink. You can read about it here.

If you want to discuss this or any other topic, feel free to sign up for a free 30-minute consultation with our experts.
