Deploying applications based on Large Language Models (LLMs) can present numerous challenges, particularly when it comes to privacy, reliability and ease of deployment (see: Finding your way through the Large Language Models Hype - GetInData). While one option is to deploy LLMs on your own infrastructure, this often involves intricate setup and maintenance, making it a daunting task for many developers. Additionally, relying solely on public APIs for LLMs may not be feasible due to privacy constraints imposed by companies, which restrict the usage of external services and/or process valuable data that includes PII (see: Run your first, private Large Language Model (LLM) on Google Cloud Platform - GetInData). Furthermore, public APIs often lack the service level agreements (SLAs) required to build robust and dependable applications on top of LLMs. In this blog post, we will guide you through the deployment of Falcon LLM within the secure confines of your private Google Kubernetes Engine (GKE) Autopilot cluster using the text-generation-inference library from Hugging Face. The library is built with high-performance deployments in mind and is used by Hugging Face themselves in production to power the HF Model Hub widgets.

Falcon LLM itself is one of the popular Open Source Large Language Models, which recently took the OSS community by storm. Check out the Open LLM Leaderboard to compare the different models. This guide focuses on deploying the Falcon-7B-Instruct version; however, the same approach can be applied to other models, including Falcon-40B, depending on the available hardware and budget.

Before diving into the deployment process of Falcon LLM in your private GKE Autopilot cluster, there are a few prerequisites you need to have in place:

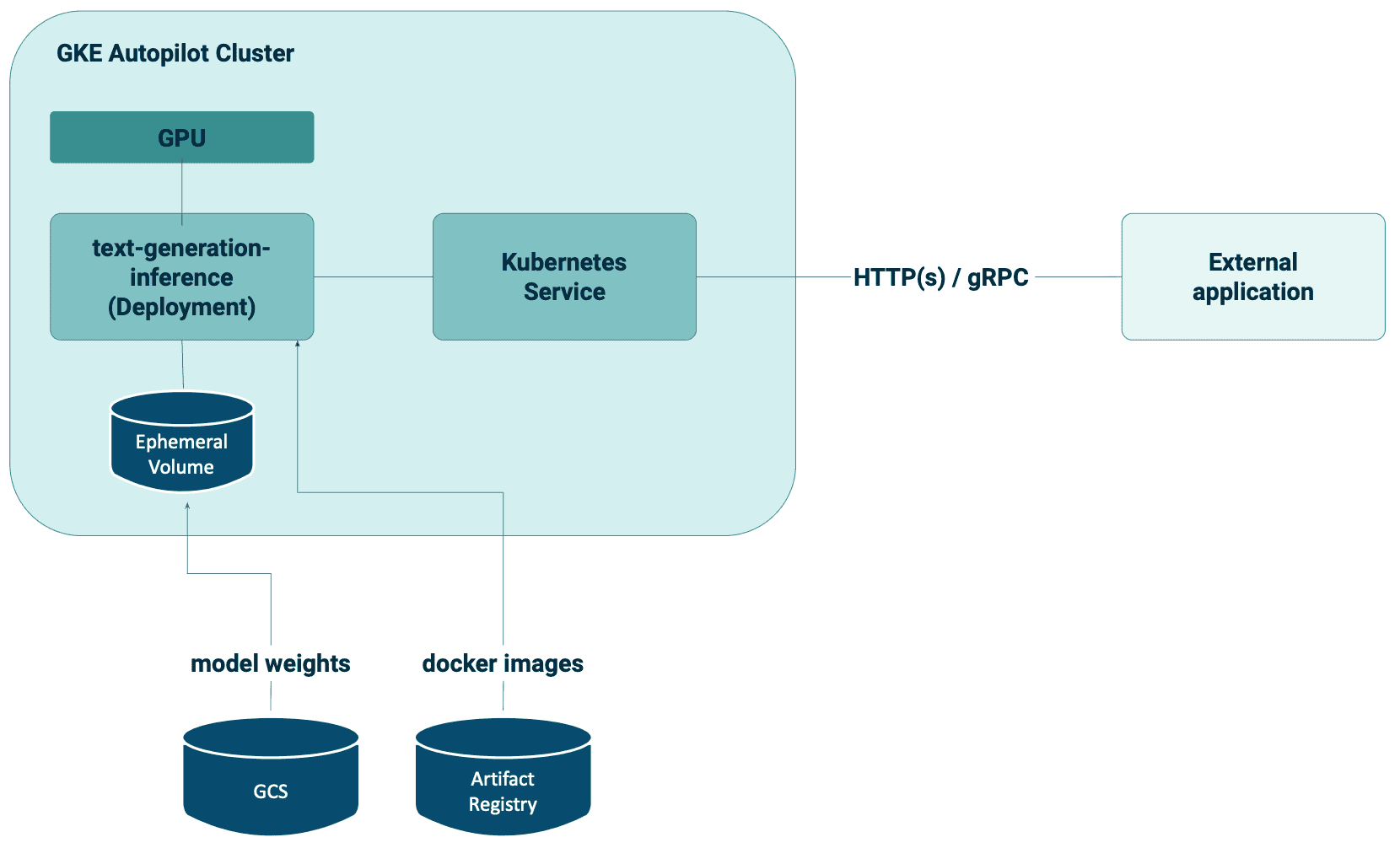

The following diagram shows the target infrastructure that you will obtain after following this blog post.

In order to make deployments private and reliable, you first have to download the model’s files (weights, configuration and miscellaneous files) and store them in GCS.

You have 3 options for that:

Git LFS Clone: Alternatively, you can use Git LFS to clone the model repository. Run the following command:

    git lfs clone --depth=1 https://huggingface.co/tiiuae/falcon-7b-instruct

Note that the disk size required for cloning is typically double the model size due to the git overhead.
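As a rough pre-flight check for the note above, the following sketch compares the free disk space with twice the model size. The 2x factor comes from the note itself; the 13.5 GB model size is an assumption obtained by adding up the two weight shards (9.3G + 4.2G) from the file listing in this post, and the helper name `has_room_for_clone` is hypothetical.

```python
import shutil

GIB = 1024 ** 3


def has_room_for_clone(model_bytes: int, free_bytes: int, overhead_factor: float = 2.0) -> bool:
    """Return True if free_bytes covers the model size times the git overhead factor."""
    return free_bytes >= model_bytes * overhead_factor


if __name__ == "__main__":
    # Assumption: ~13.5 GB of weights (9.3G + 4.2G shards from the listing).
    model_bytes = int(13.5 * GIB)
    # Free space on the filesystem that holds the current directory.
    free_bytes = shutil.disk_usage(".").free
    if has_room_for_clone(model_bytes, free_bytes):
        print("Enough free space for the clone.")
    else:
        print("Not enough free space, consider another disk.")
```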

After downloading the model files, you should have the following structure on your local disk:

    falcon-7b-instruct
    ├── [9.6K] README.md
    ├── [ 667] config.json
    ├── [2.5K] configuration_RW.py
    ├── [ 111] generation_config.json
    ├── [1.1K] handler.py
    ├── [ 46K] modelling_RW.py
    ├── [9.3G] pytorch_model-00001-of-00002.bin
    ├── [4.2G] pytorch_model-00002-of-00002.bin
    ├── [ 17K] pytorch_model.bin.index.json
    ├── [ 281] special_tokens_map.json
    ├── [2.6M] tokenizer.json
    └── [ 220] tokenizer_config.json

Now, upload the files to Google Cloud Storage (GCS):

    gsutil -m rsync -rd falcon-7b-instruct gs://<your bucket name>/llm/deployment/falcon-7b-instruct

Hugging Face’s text-generation-inference comes with a pre-built Docker image that is ready to use for deployments and can be pulled from https://ghcr.io/huggingface/text-generation-inference. For private and secure deployments, however, it’s better not to rely on an external container registry: pull this image and store it securely in the Google Artifact Registry. The compressed image size is approx. 4GB.

    docker pull ghcr.io/huggingface/text-generation-inference:latest
    docker tag ghcr.io/huggingface/text-generation-inference:latest <region>-docker.pkg.dev/<project id>/<name of the artifact registry>/huggingface/text-generation-inference:latest
    docker push <region>-docker.pkg.dev/<project id>/<name of the artifact registry>/huggingface/text-generation-inference:latest

You should also perform the same actions (pull/tag/push) for the google/cloud-sdk:slim image, which will be used to download model files in the initContainer of the deployment.
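Since the same pull/tag/push sequence is repeated for both images, it can be generated rather than typed by hand. The sketch below only prints the commands and does not run Docker. The helper names (`registry_image`, `mirror_commands`) and the values `europe-west4`, `my-project` and `my-registry` are hypothetical placeholders, and the target path chosen for the cloud-sdk image is an assumption.

```python
import shlex


def registry_image(region: str, project_id: str, repository: str, image: str) -> str:
    """Build an Artifact Registry reference in the format used in this post."""
    return f"{region}-docker.pkg.dev/{project_id}/{repository}/{image}"


def mirror_commands(source: str, target: str) -> list:
    """Return the pull/tag/push commands that copy `source` to `target`."""
    return [
        ["docker", "pull", source],
        ["docker", "tag", source, target],
        ["docker", "push", target],
    ]


# Source image -> path inside the private registry (target paths are assumptions).
IMAGES = {
    "ghcr.io/huggingface/text-generation-inference:latest": "huggingface/text-generation-inference:latest",
    "google/cloud-sdk:slim": "google/cloud-sdk:slim",
}

if __name__ == "__main__":
    for source, name in IMAGES.items():
        # Hypothetical region, project and registry names; replace with your own.
        target = registry_image("europe-west4", "my-project", "my-registry", name)
        for command in mirror_commands(source, target):
            print(shlex.join(command))
```

If you mirror the cloud-sdk image this way, the init container in the Deployment manifest would need to reference the mirrored path instead of google/cloud-sdk:slim for the pull to stay inside your registry.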

GKE Autopilot clusters use Workload Identity by default. Follow the official documentation to set up:

storage.yaml)Begin by creating a Storage Class that utilizes fast SSD drives for volumes. This will ensure optimal performance for the Falcon LLM deployment - you want to copy the model files from GCS and load them as fast as possible.

apiVersion: storage.k8s.io/v1

kind: StorageClass

metadata:

name: ssd

provisioner: pd.csi.storage.gke.io

volumeBindingMode: WaitForFirstConsumer

allowVolumeExpansion: true

parameters:

type: pd-ssddeployment.yaml)

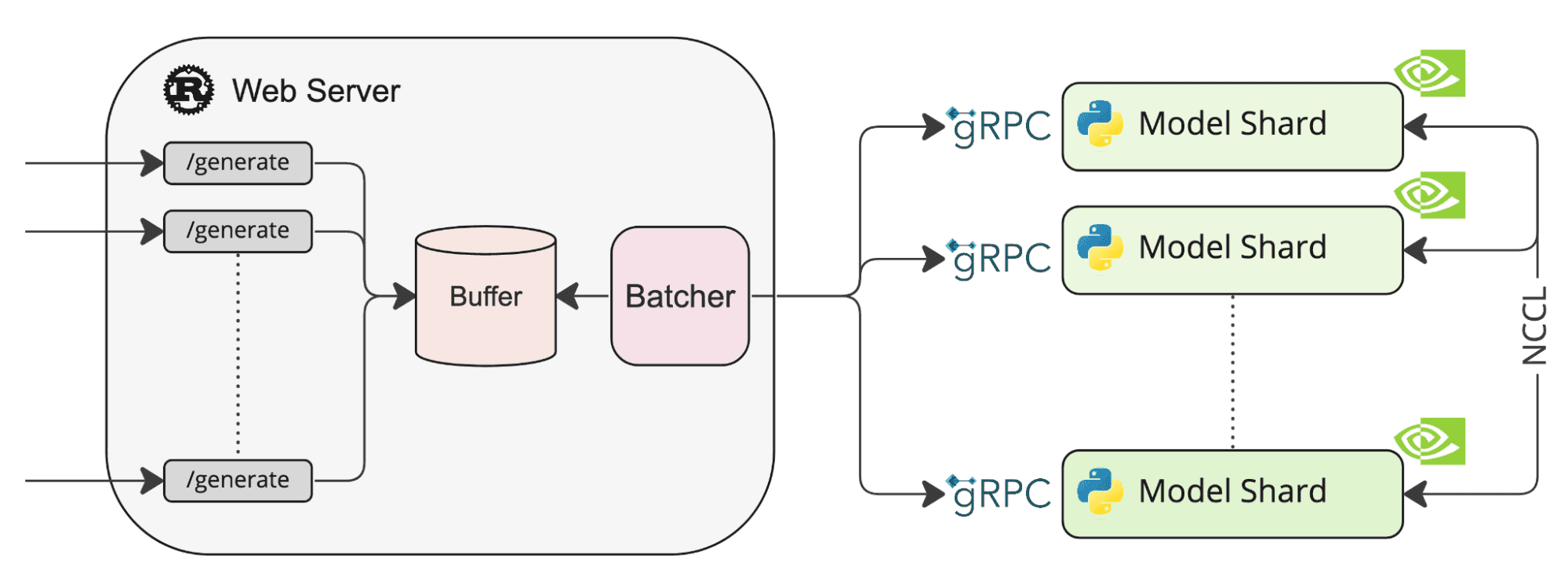

The Deployment manifest is responsible for deploying the text-generation-inference-based model, which exposes APIs as shown in the diagram above. Additionally, it includes an initContainer, which pulls the necessary Falcon LLM files from GCS into an ephemeral Persistent Volume Claim (PVC) for the main container to load.

    apiVersion: apps/v1
    kind: Deployment
    metadata:
      name: falcon-7b-instruct
    spec:
      replicas: 1
      selector:
        matchLabels:
          app: falcon-7b-instruct
      template:
        metadata:
          labels:
            app: falcon-7b-instruct
        spec:
          serviceAccountName: marcin-autopilot-sa
          nodeSelector:
            iam.gke.io/gke-metadata-server-enabled: "true"
            cloud.google.com/gke-accelerator: nvidia-tesla-t4
          volumes:
            - name: model-storage
              ephemeral:
                volumeClaimTemplate:
                  spec:
                    accessModes: [ "ReadWriteOnce" ]
                    storageClassName: "ssd"
                    resources:
                      requests:
                        storage: 64Gi
          initContainers:
            - name: init
              image: google/cloud-sdk:slim
              command: ["sh", "-c", "gcloud alpha storage cp -r gs://<bucket name>/llm/deployment/falcon-7b-instruct /data"]
              volumeMounts:
                - name: model-storage
                  mountPath: /data
              resources:
                requests:
                  cpu: 7.0
          containers:
            - name: model
              image: <region>-docker.pkg.dev/<project id>/<artifact registry name>/huggingface/text-generation-inference:latest
              command: ["text-generation-launcher", "--model-id", "tiiuae/falcon-7b-instruct", "--quantize", "bitsandbytes", "--num-shard", "1", "--huggingface-hub-cache", "/usr/src/falcon-7b-instruct", "--weights-cache-override", "/usr/src/falcon-7b-instruct"]
              env:
                - name: HUGGINGFACE_OFFLINE
                  value: "1"
                - name: PORT
                  value: "8080"
              volumeMounts:
                - name: model-storage
                  mountPath: /usr/src/
              resources:
                requests:
                  cpu: 7.0
                  memory: 32Gi
                limits:
                  nvidia.com/gpu: 1
              ports:
                - containerPort: 8080

The most important bits in the manifest are:

    nodeSelector:
      iam.gke.io/gke-metadata-server-enabled: "true"
      cloud.google.com/gke-accelerator: nvidia-tesla-t4

The node selectors instruct GKE Autopilot to enable the metadata server for the deployment (to use Workload Identity) and to attach an nvidia-tesla-t4 GPU to the node that will execute the deployment. You can find out more about GPU-based workloads in GKE Autopilot clusters in the official GKE documentation.
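To get a feel for whether a model fits on the selected GPU, a back-of-the-envelope estimate of the memory taken by the weights alone can help. The sketch below is only an approximation under stated assumptions: the parameter counts are taken from the model names (7 and 40 billion), the T4 is assumed to offer 16 GB of memory, and activations and other runtime overhead are ignored. The function name `weights_memory_gb` is hypothetical.

```python
T4_MEMORY_GB = 16  # Assumption: an NVIDIA T4 provides 16 GB of GPU memory.


def weights_memory_gb(num_params: float, bits_per_param: int) -> float:
    """Approximate memory needed for the weights only, in GB (10^9 bytes)."""
    return num_params * bits_per_param / 8 / 1e9


if __name__ == "__main__":
    # Parameter counts are rough values derived from the model names.
    models = [("Falcon-7B", 7e9), ("Falcon-40B", 40e9)]
    for name, params in models:
        for bits in (16, 8):
            needed = weights_memory_gb(params, bits)
            verdict = "fits" if needed < T4_MEMORY_GB else "does not fit"
            print(f"{name} at {bits}-bit: ~{needed:.0f} GB of weights, {verdict} on a single T4")
```

Under these assumptions, the 16-bit weights of a 7B model leave very little headroom on a T4, which is one reason the manifest above passes --quantize bitsandbytes to the launcher.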

    initContainers:
      - name: init
        image: google/cloud-sdk:slim
        command: ["sh", "-c", "gcloud alpha storage cp -r gs://<bucket name>/llm/deployment/falcon-7b-instruct /data"]

The init container copies the data from GCS into the local SSD-backed PVC. Note that we’re using the gcloud alpha storage command, the new, throughput-optimized CLI for Google Cloud Storage, which allows you to achieve a throughput of approximately 550MB/s (this might vary depending on the zone, cluster etc.).
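The quoted throughput translates directly into the time the init container needs before the main container can start. The sketch below is simple arithmetic under stated assumptions: the model size is the sum of the two weight shards from the file listing (9.3G + 4.2G = 13.5G), 1 GB is treated as 1000 MB, and the throughput is assumed to be sustained for the whole copy. The function name `estimate_copy_seconds` is hypothetical.

```python
def estimate_copy_seconds(size_gb: float, throughput_mb_s: float) -> float:
    """Estimate the copy time in seconds, treating 1 GB as 1000 MB."""
    if throughput_mb_s <= 0:
        raise ValueError("throughput must be positive")
    return size_gb * 1000 / throughput_mb_s


if __name__ == "__main__":
    # Assumption: ~13.5 GB of weights copied at the ~550MB/s quoted above.
    seconds = estimate_copy_seconds(13.5, 550)
    print(f"Estimated copy time: {seconds:.1f} s")
```

With these numbers the copy takes about 25 seconds, so the download is a small part of the pod's total startup time.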

    containers:
      - name: model
        image: <region>-docker.pkg.dev/<project id>/<artifact registry name>/huggingface/text-generation-inference:latest
        command: ["text-generation-launcher", "--model-id", "tiiuae/falcon-7b-instruct", "--quantize", "bitsandbytes", "--num-shard", "1", "--huggingface-hub-cache", "/usr/src/falcon-7b-instruct", "--weights-cache-override", "/usr/src/falcon-7b-instruct"]
        env:
          - name: HUGGINGFACE_OFFLINE
            value: "1"
          - name: PORT
            value: "8080"

The main container (model) starts the text-generation-launcher with the quantized model (8-bit quantization) and, most importantly, with the --weights-cache-override and --huggingface-hub-cache parameters overridden. Together with the HUGGINGFACE_OFFLINE environment variable, they prevent the container from downloading anything from the Hugging Face Hub directly, giving you full control over the deployment.
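Because the container cannot fall back to the Hub, an incomplete model directory will only show up as a failure at startup. A small check of the local copy before uploading it can catch this earlier. The sketch below is an illustration, not part of text-generation-inference: the function name `missing_model_files` and the `REQUIRED_FILES` list are assumptions based on the file listing in this post, and the shard names are read from the `weight_map` entry of pytorch_model.bin.index.json.

```python
import json
from pathlib import Path

# Assumption: files treated as mandatory here, based on the listing in this post.
REQUIRED_FILES = ("config.json", "tokenizer.json", "pytorch_model.bin.index.json")


def missing_model_files(model_dir) -> list:
    """Return the names of required files and weight shards absent from model_dir."""
    root = Path(model_dir)
    missing = [name for name in REQUIRED_FILES if not (root / name).is_file()]

    index_path = root / "pytorch_model.bin.index.json"
    if index_path.is_file():
        # The index maps every parameter name to the shard file that stores it.
        weight_map = json.loads(index_path.read_text())["weight_map"]
        for shard in sorted(set(weight_map.values())):
            if not (root / shard).is_file():
                missing.append(shard)
    return missing


if __name__ == "__main__":
    problems = missing_model_files("falcon-7b-instruct")
    print("Model directory is complete." if not problems else f"Missing: {problems}")
```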

The last piece of the puzzle is a simple Kubernetes Service (service.yaml) to expose the deployment:

    apiVersion: v1
    kind: Service
    metadata:
      name: falcon-7b-instruct-service
    spec:
      selector:
        app: falcon-7b-instruct
      type: ClusterIP
      ports:
        - name: http
          port: 8080
          targetPort: 8080

Once the manifests are ready, apply them:

    kubectl apply -f storage.yaml
    kubectl apply -f deployment.yaml
    kubectl apply -f service.yaml

After a few minutes, the deployment should be ready:

    kubectl get pods -l app=falcon-7b-instruct
    NAME                                  READY   STATUS    RESTARTS   AGE
    falcon-7b-instruct-68d7b85f56-vbcsv   1/1     Running   0          8m

You can now connect to the model!

Now that the model is deployed, you can connect to it from within the cluster or from your local machine by creating a port-forward to the service:

    kubectl port-forward svc/falcon-7b-instruct-service 8080:8080

To query the model, you can use the HTTP API directly:

    curl 127.0.0.1:8080/generate \
      -X POST \
      -d '{"inputs":"Who is James Hetfield?","parameters":{"max_new_tokens":17}}' \
      -H 'Content-Type: application/json'

Response:

    {"generated_text":"\nJames Hetfield is a guitarist and singer for the American heavy metal band Metallica"}

Or using Python (the example shows zero-shot text classification / sentiment analysis):

    from text_generation import Client

    client = Client("http://127.0.0.1:8080")

    query = """
    Answer the question using ONLY the provided context between triple backticks, and if the answer is not contained within the context, answer: "I don't know".
    Only focus on the information within the context. Do NOT use any outside information. If the question is not about the context, answer: "I don't know".
    Context:
    ```
    I really like the new Metallica 72 Seasons album! I can't wait to hear the next one.
    ```
    Question: What is the sentiment of the text? Answer should be one of the following words: "positive" / "negative" / "unknown".
    """

    text = ""
    for response in client.generate_stream(query, max_new_tokens=128):
        if not response.token.special:
            text += response.token.text
            print(response.token.text, end="")
    print("")

Result:

    > python query.py
    Answer: "positive"

Full documentation of the service’s API is available in the text-generation-inference project documentation.
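If you prefer not to install an extra client package, the curl call shown earlier can be reproduced with the Python standard library alone. The sketch below is a minimal illustration rather than an official client: the helper names `build_generate_request` and `generate` are hypothetical, and the endpoint path and JSON payload simply mirror the curl example from this post.

```python
import json
import urllib.request


def build_generate_request(base_url: str, prompt: str, max_new_tokens: int = 17) -> urllib.request.Request:
    """Build the POST request for the /generate endpoint used in the curl example."""
    payload = {"inputs": prompt, "parameters": {"max_new_tokens": max_new_tokens}}
    return urllib.request.Request(
        url=f"{base_url.rstrip('/')}/generate",
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def generate(base_url: str, prompt: str, max_new_tokens: int = 17, timeout: float = 60.0) -> str:
    """Send the request and return the generated_text field of the JSON response."""
    request = build_generate_request(base_url, prompt, max_new_tokens)
    with urllib.request.urlopen(request, timeout=timeout) as response:
        return json.loads(response.read())["generated_text"]
```

With the port-forward from the previous step running, a call would look like `print(generate("http://127.0.0.1:8080", "Who is James Hetfield?"))`.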

By following the steps in this blog post, you can seamlessly deploy Falcon LLM (or other OSS large language models) within a secure, private GKE Autopilot cluster. This approach allows you to maintain privacy and control over your data while leveraging the scalability of a managed Kubernetes environment.

Embracing the power of Open Source LLMs empowers you to build reliable, efficient and privacy-focused applications that harness the capabilities of state-of-the-art language models.
