
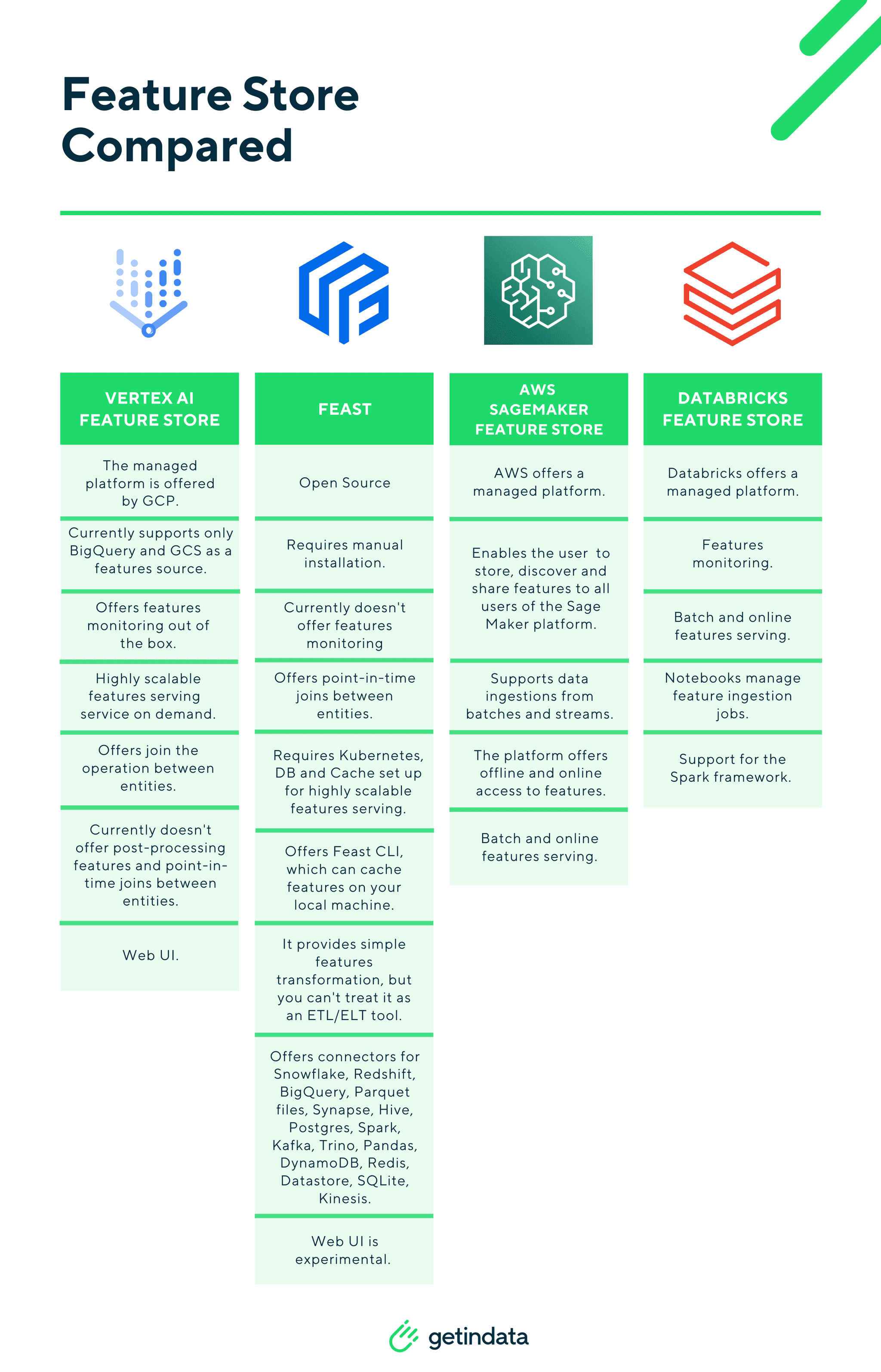

In this blog post, we will clearly demonstrate the differences between four popular feature stores: Vertex AI Feature Store, FEAST, AWS SageMaker Feature Store, and Databricks Feature Store. We compare their functions, capabilities, and specifics on a single refcart. Which feature store should you choose for your project's needs? This comparison will make that decision much easier. But first, what is a feature store?

A feature store is a data storage facility that enables you to keep features, labels, and metadata together in one place. You can use it both for training models and for serving predictions in the production environment. Each feature is stored along with its metadata. This is extremely helpful when working on a project, as every change can be tracked from start to finish, and any feature can be quickly recovered if needed.
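To make the idea of tracking and recovering changes more concrete, here is a minimal, in-memory Python sketch. It assumes that a feature's history is kept as an append-only list of versions, each stored with a little metadata. The names `FeatureHistory`, `FeatureVersion`, `source` and `description` are hypothetical and do not come from any of the products discussed here.

```python
from dataclasses import dataclass
from datetime import datetime, timezone
from typing import Any, List, Optional


@dataclass(frozen=True)
class FeatureVersion:
    """One recorded value of a feature, stored together with its metadata."""
    value: Any
    written_at: datetime
    source: str  # hypothetical metadata: which pipeline wrote the value
    description: str = ""  # hypothetical metadata: free-text note


class FeatureHistory:
    """Append-only history of one feature: old values are never overwritten."""

    def __init__(self, name: str) -> None:
        self.name = name
        self._versions: List[FeatureVersion] = []

    def write(self, value: Any, written_at: datetime, source: str, description: str = "") -> None:
        self._versions.append(FeatureVersion(value, written_at, source, description))
        # Keep versions in time order, even if writes arrive out of order.
        self._versions.sort(key=lambda v: v.written_at)

    def as_of(self, when: datetime) -> Optional[FeatureVersion]:
        """Recover the version that was current at `when` (None if nothing existed yet)."""
        current = None
        for version in self._versions:
            if version.written_at > when:
                break
            current = version
        return current

    def changes(self) -> List[FeatureVersion]:
        """Full audit trail of the feature, oldest first."""
        return list(self._versions)


if __name__ == "__main__":
    history = FeatureHistory("avg_basket_value")
    history.write(42.0, datetime(2023, 1, 1, tzinfo=timezone.utc), source="daily_batch")
    history.write(45.5, datetime(2023, 2, 1, tzinfo=timezone.utc), source="daily_batch",
                  description="recomputed after a bug fix")
    print(history.as_of(datetime(2023, 1, 15, tzinfo=timezone.utc)).value)  # 42.0
```

Because nothing is overwritten, the full change log is always available, and an earlier value can be recovered simply by asking what was current at a given time.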

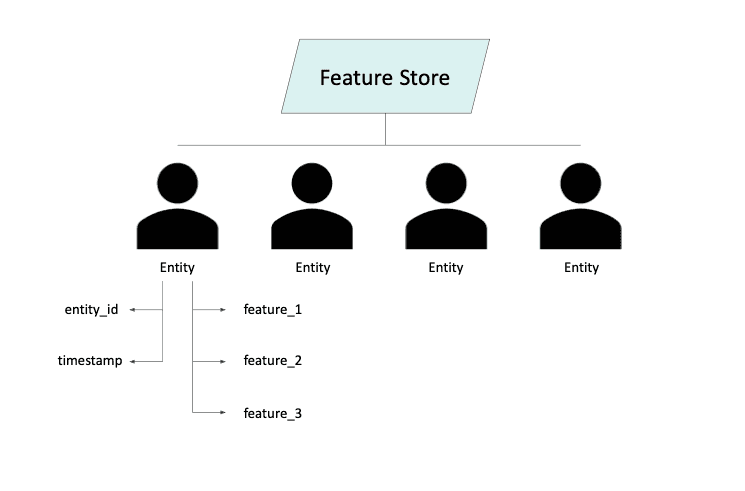

Before we go any further, let's look at the Feature Store data model in the diagram below.

A Feature Store contains a set of entities of a specified entity type. Each entity type defines fields such as "entity_id" and "timestamp", as well as a list of features such as "feature_1", "feature_2", and so on.

So we can think of a Feature Store as a centralized set of entities from across the whole organization.
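The data model described above can be sketched in Python as follows. This is a toy, in-memory illustration under our own assumptions: `EntityType`, `FeatureStore`, `register` and `ingest` are hypothetical names, and "customer" is only an example entity type. Real feature stores persist this data in managed storage rather than in Python dictionaries.

```python
from dataclasses import dataclass, field
from datetime import datetime
from typing import Any, Dict, List


@dataclass
class EntityType:
    """An entity type (e.g. a hypothetical "customer") and the features it defines."""
    name: str
    feature_names: List[str]

    def validate(self, row: Dict[str, Any]) -> None:
        """Check that a row has every field this entity type defines."""
        required = {"entity_id", "timestamp", *self.feature_names}
        missing = required - set(row)
        if missing:
            raise ValueError(f"{self.name}: missing fields {sorted(missing)}")
        if not isinstance(row["timestamp"], datetime):
            raise TypeError(f"{self.name}: timestamp must be a datetime")


@dataclass
class FeatureStore:
    """Centralized set of entity types, and their rows, for the whole organization."""
    entity_types: Dict[str, EntityType] = field(default_factory=dict)
    rows: Dict[str, List[Dict[str, Any]]] = field(default_factory=dict)

    def register(self, entity_type: EntityType) -> None:
        # One shared definition per entity type, so every team reuses the same schema.
        if entity_type.name in self.entity_types:
            raise ValueError(f"entity type {entity_type.name!r} already registered")
        self.entity_types[entity_type.name] = entity_type
        self.rows[entity_type.name] = []

    def ingest(self, entity_type_name: str, row: Dict[str, Any]) -> None:
        # Reject rows that do not match the registered entity type.
        self.entity_types[entity_type_name].validate(row)
        self.rows[entity_type_name].append(row)


if __name__ == "__main__":
    store = FeatureStore()
    store.register(EntityType("customer", ["feature_1", "feature_2"]))
    store.ingest("customer", {"entity_id": "c1", "timestamp": datetime(2023, 1, 1),
                              "feature_1": 3, "feature_2": 0.5})
    print(len(store.rows["customer"]))  # 1
```

Keeping a single registry of entity types is what makes the store "centralized": every team validates its rows against the same shared definitions.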

A machine learning platform needs to access these features at scale when your models run in production.
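A common way to keep serving lookups fast is to hold only the latest value of each feature per entity in a key-value structure. The sketch below assumes that approach and uses a plain Python dict as a stand-in for a low-latency database. `OnlineStore`, `upsert` and `get_feature_vector` are hypothetical names, not the API of any product mentioned in this post.

```python
from datetime import datetime
from typing import Any, Dict, List, Optional, Tuple


class OnlineStore:
    """Keeps only the latest feature values per entity for low-latency serving."""

    def __init__(self) -> None:
        # entity_id -> (timestamp of the latest write, feature values)
        self._latest: Dict[str, Tuple[datetime, Dict[str, Any]]] = {}

    def upsert(self, entity_id: str, timestamp: datetime, features: Dict[str, Any]) -> None:
        current = self._latest.get(entity_id)
        # Ignore late-arriving rows that are older than what is already being served.
        if current is None or timestamp >= current[0]:
            self._latest[entity_id] = (timestamp, dict(features))

    def get_feature_vector(self, entity_id: str, feature_names: List[str]) -> Dict[str, Optional[Any]]:
        """A single dictionary lookup per request; features never written come back as None."""
        _, features = self._latest.get(entity_id, (None, {}))
        return {name: features.get(name) for name in feature_names}


if __name__ == "__main__":
    online = OnlineStore()
    online.upsert("c1", datetime(2023, 1, 2), {"feature_1": 7})
    online.upsert("c1", datetime(2023, 1, 1), {"feature_1": 3})  # older row, ignored
    print(online.get_feature_vector("c1", ["feature_1", "feature_2"]))
```

The design trades history for speed: the full history lives elsewhere (for training), while the serving path only ever needs the most recent values.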

A Feature Store can solve the business problems I described in the article "MLOps 5 Machine Learning problems resulting in ineffective use of data".

But before going into that, I would like to briefly introduce the solutions available on the market.

In the refcart below, you will find a comparison of the basic differences between the four most popular feature stores: Vertex AI Feature Store, FEAST, AWS SageMaker Feature Store, and Databricks Feature Store.

An internal feature store for managing and deploying features across different machine learning systems is a key MLOps practice. Feature stores help you develop, deploy, manage, and monitor machine learning models. They improve your model development lifecycle as well as the flexibility and scalability of your machine learning infrastructure. You can also use a feature store to provide a unified interface for accessing features across different environments, such as training and serving.
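One way to picture such a unified interface is a single lookup function shared by both environments: serving asks for the latest values, while training asks for the values that were valid at the moment each label was observed. This point-in-time lookup keeps future data from leaking into the training set. The following Python sketch assumes plain lists of dict rows; `get_features` and `build_training_set` are hypothetical helpers, not the API of any of the four products compared here.

```python
from datetime import datetime
from typing import Any, Dict, List, Optional, Tuple

# Hypothetical feature row: "entity_id", "timestamp" and feature columns.
FeatureRow = Dict[str, Any]


def get_features(rows: List[FeatureRow], entity_id: str,
                 as_of: Optional[datetime] = None) -> Optional[FeatureRow]:
    """Single lookup used by both environments.

    Serving: as_of=None returns the most recent row for the entity.
    Training: as_of=<label time> returns the most recent row at or before
    that time, so no value from the future leaks into a training example.
    """
    candidates = [
        r for r in rows
        if r["entity_id"] == entity_id and (as_of is None or r["timestamp"] <= as_of)
    ]
    return max(candidates, key=lambda r: r["timestamp"], default=None)


def build_training_set(rows: List[FeatureRow],
                       labels: List[Tuple[str, datetime, Any]]) -> List[Dict[str, Any]]:
    """Point-in-time join: attach to each (entity_id, label_time, label) the features valid then."""
    examples = []
    for entity_id, label_time, label in labels:
        features = get_features(rows, entity_id, as_of=label_time)
        if features is not None:  # skip labels observed before any feature existed
            examples.append({**features, "label": label})
    return examples
```

Using the same function on both paths is the point of the sketch: training and serving cannot silently diverge in how they read a feature.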

We are finishing an ebook that will show you, step by step, how to build a feature store from scratch using the Vertex AI platform and how to resolve business problems that can occur in the machine learning process. We will also point out the differences between BigQuery and Snowflake, two cloud-native data warehouses. Furthermore, we will demonstrate how to use dbt to build highly scalable ELT pipelines in minutes.

If you have any questions or concerns in the area of machine learning and MLOps, we encourage you to contact us. We have experience implementing and optimizing machine learning and MLOps processes, and we have also developed original solutions in niche areas. We will be happy to share our expertise with you.

Interested in ML and MLOps solutions? Want to know how to improve ML processes and scale project deliverability? Watch our MLOps demo and sign up for a free consultation.
