
The coronavirus is spreading through the world. At the time of writing this post (26 March 2020), over 475k people have been infected and over 21k people have died (Source: https://coronavirus.jhu.edu/map.html). As East Asian examples have shown, fighting the outbreak effectively means tracking down individual cases and thoroughly separating infected people from healthy ones. The mechanics behind this are well described in the popular article Coronavirus - act today or people will die:

“Containment is making sure all the cases are identified, controlled, and isolated. It’s what Singapore, Hong Kong, Japan or Taiwan are doing so well: They very quickly limit people coming in, identify the sick, immediately isolate them, use heavy protective gear to protect their health workers, track all their contacts, quarantine them… This works extremely well when you’re prepared and you do it early on, and don’t need to grind your economy to a halt to make it happen.”

People around the world are taking initiatives to fight COVID-19. One of them is the Hack The Crisis series of hackathons. My friends and I decided to take part in the Polish edition. Our goal was to build a system for quarantine tracking.

The South Korean system for quarantined people works very effectively. Once users start quarantine, they are instructed to stay at their home location for at least 2 weeks, in isolation from others, and to report their health condition. By the time the system was introduced, 30k people nationwide were self-isolating, and the limited human resources available to track each quarantined user were a serious issue. The solution was a mobile application that tracks the device's location. Once the user leaves the “home area”, an alert is raised to the case officer. In the South Korean version, there is no additional verification (check). The Ministry is aware of flaws in GPS tracking, but the exact algorithm for checking whether a user is outside the “home area” is not disclosed.

Coronavirus is spreading fast. If things go wrong, we will count active cases in millions. To operate smoothly, geofencing apps that monitor people under quarantine need to include AI-based features that decide whether a user remains in place and that prevent fraudulent activity. The whole system should work in real time to deliver notifications and reports immediately. Finally, security, reliability, and scalability cannot be ignored, as the solution may need to cover a large part of the population.

Notice: The system described in this article is an attempt to build a prototype and not a production-grade system.

Briefly, let’s enumerate the most important use-cases that our system should address:

We are going to build a quarantine tracking system. The system should scale to support a growing number of users. It should be fast and deliver up-to-date data and insights. Equally important is time-to-market - the sooner the solution can be introduced, the more effective quarantine will be.

Considering the functional and non-functional requirements, using a public cloud with an emphasis on serverless offerings seems to be a good start. Serverless favors writing loosely coupled microservices.

From a high-level perspective we can distinguish three main areas of the system:


Overview of the system from the point of view of services. Arrows indicate dataflows. Services are grouped by use-case.

Users who start their quarantine agree to share their current location every 15 minutes. This event is published in the background and no further action from the user is needed. Our system can provide a public endpoint that handles incoming location events. Once an event is received, the function behind this endpoint publishes a message to a Cloud Pub/Sub topic.

A sample event could look like this:

    {
      "created_at": "2020-03-23T14:11:50Z",
      "coordinate": {
        "latitude": 50.123,
        "longitude": 21.123,
        "accuracy": 20
      },
      "user_id": "KjaGj71NlrHO5lH0G6b7"
    }

On the other side, we can subscribe to this topic from Cloud Dataflow. Cloud Dataflow is a managed batch and streaming data processing service that runs pipelines written with the Apache Beam framework. The pipeline reads events, joins them with the definition of the user's quarantine area and decides whether the user is inside or outside. When the user is outside, it publishes an event. Finally, we can stream all events into BigQuery for further data analytics.
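The inside/outside decision can be illustrated with a small, self-contained sketch. It is not the pipeline's actual code: it assumes the quarantine area is a circle (a centre point plus a radius), that `accuracy` is expressed in metres, and that a user is reported as outside only when the distance exceeds the radius even after subtracting the GPS accuracy. `QuarantineArea`, `haversine_m` and `is_outside` are hypothetical names introduced for illustration.

```python
import json
import math
from dataclasses import dataclass

EARTH_RADIUS_M = 6_371_000  # mean Earth radius in metres


@dataclass
class QuarantineArea:
    """Hypothetical circular quarantine area: a centre point plus a radius."""
    latitude: float
    longitude: float
    radius_m: float


def haversine_m(lat1, lon1, lat2, lon2):
    """Great-circle distance in metres between two points given in degrees."""
    phi1, phi2 = math.radians(lat1), math.radians(lat2)
    d_phi = phi2 - phi1
    d_lambda = math.radians(lon2 - lon1)
    a = (math.sin(d_phi / 2) ** 2
         + math.cos(phi1) * math.cos(phi2) * math.sin(d_lambda / 2) ** 2)
    return 2 * EARTH_RADIUS_M * math.asin(math.sqrt(a))


def is_outside(event, area):
    """True only if the user is outside the area even after allowing
    for the reported GPS accuracy (assumed to be in metres)."""
    coord = event["coordinate"]
    distance = haversine_m(coord["latitude"], coord["longitude"],
                           area.latitude, area.longitude)
    return distance - coord.get("accuracy", 0) > area.radius_m


# The sample location event from the text above.
SAMPLE_EVENT = json.loads("""
{
  "created_at": "2020-03-23T14:11:50Z",
  "coordinate": {"latitude": 50.123, "longitude": 21.123, "accuracy": 20},
  "user_id": "KjaGj71NlrHO5lH0G6b7"
}
""")
```

In a Beam pipeline, a function like `is_outside` could be applied to each event after the join with the user's area. Subtracting the accuracy is one possible design choice that trades missed violations for fewer false alarms; a production system would need to tune it.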

Possible improvements here are:

Remember the event published when a user is outside the quarantine area? Now we can use this event to trigger a push notification to users and case officers! The number of such events will be significantly lower than the number of incoming location events.

A Cloud Function with a Cloud Pub/Sub trigger will do the job. This function obtains the user's fcmToken from Firestore and sends a push notification through Firebase Cloud Messaging.

Quarantine works only when its rules are obeyed. Subjects cannot interact with others - period. A focus on precise verification will help keep people isolated.

Our idea is to give a simple task to a user that will prove their identity and location as the quarantine subject.

We can base the verification process on:

At the beginning of their quarantine, users will be asked to make a reference verification. They will need to memorize it, namely what was in the picture and how the picture was taken.

Users will receive verification requests at random times, or when being outside of their quarantine area. Here we can use a mix of Cloud Tasks, Cloud Scheduler and Cloud Functions.
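As a rough illustration of the "random times" part, the sketch below draws a few distinct, minute-aligned timestamps inside a daily window. The resulting timestamps could then be used as schedule times for Cloud Tasks; that wiring is not shown. The function name, the window and the number of checks per day are all assumptions made for this example.

```python
import random
from datetime import datetime, timedelta, timezone


def random_verification_times(window_start, window_end, count, rng=None):
    """Pick `count` distinct, minute-aligned times in [window_start, window_end),
    returned in chronological order."""
    rng = rng or random.Random()
    total_minutes = int((window_end - window_start).total_seconds() // 60)
    if count > total_minutes:
        raise ValueError("window too short for the requested number of checks")
    # Sampling without replacement guarantees that no two checks coincide.
    offsets = sorted(rng.sample(range(total_minutes), count))
    return [window_start + timedelta(minutes=m) for m in offsets]


# Hypothetical setup: three checks between 08:00 and 22:00 UTC.
start = datetime(2020, 3, 23, 8, 0, tzinfo=timezone.utc)
end = datetime(2020, 3, 23, 22, 0, tzinfo=timezone.utc)
times = random_verification_times(start, end, count=3)
```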

Once verification is triggered, the user will be asked to mimic the reference verification as closely as possible. This method is much harder to cheat, because the user would have to take fake photos, set fake GPS coordinates and even fake the orientation of the device in order to spoof the verification. Hopefully, the effort required to cheat the procedure will be enough to discourage people from breaking the quarantine rules.

Now, we need to assess whether the submitted verification matches the reference. We have five main input features: a photo of an object, a photo of the user's face, gyroscope measurements, GPS coordinates and timestamps.

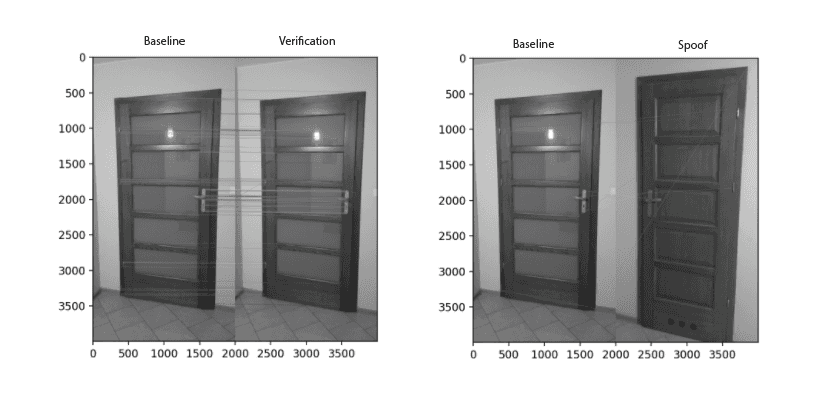

In the preprocessing step, we need to compute features that quantify the differences between the reference and the actual verification. For the object photo, we need a method of comparing two images that is robust to lighting, shadows and simple distortions.

Accelerated KAZE is described as a “novel and fast multiscale feature detection and description approach that exploits the benefits of nonlinear scale spaces”. Scary name, but it’s already implemented in OpenCV. Essentially, it assigns keypoints to each image and tries to find matching pairs.

For face photos, we can use well-known face matching algorithms based on face keypoints and pre-trained models. Alternatively, there are commercial APIs like Azure Cognitive Services Face Verification.

With numerical features in hand, we can use heuristics or train binary classification models. For the prototype, we'll be fine with a simple heuristic that calculates a weighted average and returns a boolean decision based on a specified threshold.
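A minimal sketch of such a heuristic is shown below. It assumes that preprocessing has already reduced each of the five inputs to a similarity score between 0 and 1, where 1 means identical to the reference. The weights, the threshold and all names are hypothetical values chosen for illustration, not the ones used in the prototype.

```python
# Hypothetical weights for the five inputs; each similarity is in [0, 1].
DEFAULT_WEIGHTS = {
    "object_photo": 0.30,
    "face_photo": 0.40,
    "gyroscope": 0.10,
    "gps": 0.15,
    "timestamp": 0.05,
}


def verification_score(similarities, weights=DEFAULT_WEIGHTS):
    """Weighted average of per-feature similarity scores."""
    missing = set(weights) - set(similarities)
    if missing:
        raise ValueError(f"missing features: {sorted(missing)}")
    total_weight = sum(weights.values())
    weighted_sum = sum(weights[name] * similarities[name] for name in weights)
    return weighted_sum / total_weight


def is_verified(similarities, weights=DEFAULT_WEIGHTS, threshold=0.7):
    """Boolean decision: accept when the weighted average reaches the threshold."""
    return verification_score(similarities, weights) >= threshold


# Example submission with made-up similarity scores.
example = {
    "object_photo": 0.9,
    "face_photo": 0.95,
    "gyroscope": 0.6,
    "gps": 1.0,
    "timestamp": 0.8,
}
accepted = is_verified(example)
```

Dividing by the total weight keeps the score in the 0 to 1 range even if the weights do not sum to one, which makes the threshold easier to reason about when weights are adjusted.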

GCP has many services to support machine learning solutions. For example, the model can be deployed as a Cloud Run service or using AI Platform in the future.

Finally, the result is saved back to the verification document in Firestore and returned to the user.

Location data is streamed to BigQuery. We can also capture the history of user data stored in Firestore documents using the out-of-the-box Firebase Extension: Firestore BigQuery Export.

Now, we have all our data in a single place (locations, user profile, verification results). This gives us a detailed view of the current state of the system and how it has changed over time.

Example analyses that we can perform on such a data model are:

BigQuery comes with GIS and machine learning features. Using BQML we can train classification, regression and clustering models with simple SQL statements.

BigQuery integrates seamlessly with BI tools like Data Studio, Tableau or Looker. Such dashboards can serve as simple yet powerful monitoring tools summarizing key metrics and providing useful insights.

The core of the system is ready. We have fulfilled the proposed use-cases. The functionality of the system can be extended in multiple ways:

In this post, we’ve addressed a real problem: a solution like this can significantly improve quarantine tracking and directly contribute to reducing the number of SARS-CoV-2 victims.

We used the Google Cloud Platform offering to collect, process and store data and to host services. Managed, serverless services like BigQuery, Cloud Functions or AI Platform can significantly reduce time-to-market and still provide enough scalability, reliability and security, which makes them a good fit for such a use-case.

We’ve seen countries like South Korea using such technology before. It has a significant impact on decreasing the growth rate and avoiding “super-spreaders”. All the technology needed to save lives is ready; what remains is to glue it together!

Final note

The described solution was designed and prototyped for https://www.hackcrisis.com/, a hackathon supported by the Polish government. The project was selected for the final TOP30 out of over 180 submissions. The solution is the result of the equally hard work of a team made up of me and my friends from outside of GetInData: Łukasz Niedziałek, Bartłomiej Margas, Wojciech Pyrak, Katarzyna Rode, Jakub Wyszomierski and Aleksandra.

I would also like to thank the GetInData team for their support and for the opportunity to share the solution.
