Have you worked with Flink SQL or the Flink Table API? Do you find it frustrating to manage sources and sinks, along with all their table properties, across different projects or repositories? How do you avoid duplicating table definitions? Platforms like Ververica offer metadata catalogs, but what if you don't have access to them?

Here's a viable alternative: the good old Hive Metastore Service (HMS). I will walk you through the entire process and show you how to set up HMS and use it with Flink. If you're interested, read on!

Set up HMS

The Hive Metastore is a standalone service that manages metadata, including table definitions, partitions, properties and statistics. While it's well known in the Spark community, it can also be valuable for Flink developers.

By default, the Hive Metastore uses Apache Derby as its storage backend, but it can be configured to use any JDBC-compatible database. For this example, I've opted for PostgreSQL.
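With PostgreSQL as the backend, the database connection is configured in metastore-site.xml through the standard javax.jdo.option.* properties. The sketch below renders a minimal version of that file; the JDBC URL, user and password are placeholders, and a real deployment will usually need further settings.

```python
import xml.etree.ElementTree as ET

def metastore_site_xml(jdbc_url: str, user: str, password: str) -> str:
    """Render a minimal metastore-site.xml that points HMS at PostgreSQL."""
    props = {
        # JDO connection settings the metastore uses to reach its database.
        "javax.jdo.option.ConnectionURL": jdbc_url,
        "javax.jdo.option.ConnectionDriverName": "org.postgresql.Driver",
        "javax.jdo.option.ConnectionUserName": user,
        "javax.jdo.option.ConnectionPassword": password,
    }
    root = ET.Element("configuration")
    for name, value in props.items():
        prop = ET.SubElement(root, "property")
        ET.SubElement(prop, "name").text = name
        ET.SubElement(prop, "value").text = value
    return ET.tostring(root, encoding="unicode")

if __name__ == "__main__":
    # Placeholder connection details; replace them with your own.
    print(metastore_site_xml(
        "jdbc:postgresql://postgres.example.internal:5432/metastore",
        "hive",
        "change-me",
    ))
```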

To install the Hive Metastore, follow these steps:

- Install the Hadoop package.

- Install the standalone Hive Metastore.

- Install the JDBC driver for your chosen database.
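Once the three pieces are installed, a small pre-flight check can catch the most common mistakes before the first start. This is a sketch under two assumptions: that the metastore scripts locate Hadoop through the HADOOP_HOME environment variable, and that the PostgreSQL driver jar (matching postgresql-*.jar) is placed in the metastore's lib/ directory. Adjust both to your installation; the preflight function is hypothetical.

```python
import os
from pathlib import Path
from typing import Mapping, Optional

def preflight(metastore_home: str, env: Optional[Mapping[str, str]] = None) -> list:
    """Return a list of detected problems; an empty list means all checks passed."""
    env = os.environ if env is None else env
    problems = []
    # Assumption: the metastore launcher scripts locate Hadoop via HADOOP_HOME.
    hadoop_home = env.get("HADOOP_HOME")
    if not hadoop_home or not Path(hadoop_home).is_dir():
        problems.append("HADOOP_HOME is not set or does not point to a directory")
    # Assumption: the JDBC driver jar lives in the metastore's lib/ directory.
    lib_dir = Path(metastore_home) / "lib"
    if not (lib_dir.is_dir() and any(lib_dir.glob("postgresql-*.jar"))):
        problems.append(f"no PostgreSQL JDBC driver (postgresql-*.jar) in {lib_dir}")
    return problems

if __name__ == "__main__":
    for problem in preflight("/opt/hive-metastore"):
        print("WARNING:", problem)
```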

Configuration is provided via the hive-site.xml and metastore-site.xml files. Before the metastore is started for the first time, its schema has to be created in the database with schematool:

```shell
/opt/hive-metastore/bin/schematool -dbType postgres -initSchema
```

On later runs, use the -validate flag instead of -initSchema to check the existing database schema. The service itself is then started with the start-metastore script from the same bin directory.
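If the metastore is started from a script or a container entrypoint, the choice between -initSchema and -validate can be automated. A minimal sketch: the helper names are hypothetical, the schematool path matches the command above, and deciding whether this is the first run (the first_run flag) is left to the caller.

```python
import subprocess
from typing import List

SCHEMATOOL = "/opt/hive-metastore/bin/schematool"

def schematool_args(first_run: bool, db_type: str = "postgres") -> List[str]:
    """Build the schematool command: create the schema once, validate it afterwards."""
    action = "-initSchema" if first_run else "-validate"
    return [SCHEMATOOL, "-dbType", db_type, action]

def prepare_schema(first_run: bool) -> None:
    # check=True raises CalledProcessError if schematool exits with a non-zero code.
    subprocess.run(schematool_args(first_run), check=True)
```

Calling prepare_schema(first_run=True) once and prepare_schema(first_run=False) on every later start keeps the entrypoint from trying to recreate an existing schema.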

The full example is available as a Docker image here. You can deploy it with the Helm chart published here (remember to set up the PostgreSQL database first).

Flink dependencies

Combining Flink and Hadoop frequently leads to dependency conflicts. This problem can be solved by shading the conflicting packages.

What worked for me was adding the following to the Flink libraries:

The next step is the hive-site.xml configuration file. I created it in the /home/maciej/hive directory.

```xml
<configuration>
  <property>
    <name>hive.metastore.uris</name>
    <value>thrift://hive-metastore.hms.svc.cluster.local:9083</value>
    <description>IP address (or fully-qualified domain name) and port of the metastore host</description>
  </property>
  <property>
    <name>hive.metastore.schema.verification</name>
    <value>true</value>
  </property>
</configuration>
```
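A typo in hive.metastore.uris typically only surfaces when a job fails to reach the catalog. A quick sanity check can parse the file and extract the metastore endpoints; the function below is a hypothetical helper that assumes the value is one or more comma-separated thrift://host:port URIs.

```python
import xml.etree.ElementTree as ET
from typing import List, Tuple
from urllib.parse import urlsplit

def metastore_endpoints(hive_site_xml: str) -> List[Tuple[str, int]]:
    """Extract (host, port) pairs from hive.metastore.uris in a hive-site.xml string."""
    root = ET.fromstring(hive_site_xml)
    for prop in root.findall("property"):
        if prop.findtext("name", "").strip() != "hive.metastore.uris":
            continue
        endpoints = []
        # The value may list several comma-separated thrift:// URIs.
        for uri in prop.findtext("value", "").split(","):
            parts = urlsplit(uri.strip())
            if parts.scheme != "thrift" or parts.hostname is None or parts.port is None:
                raise ValueError(f"unexpected metastore URI: {uri!r}")
            endpoints.append((parts.hostname, parts.port))
        return endpoints
    raise KeyError("hive.metastore.uris not found")
```

For the file above, metastore_endpoints(Path("/home/maciej/hive/hive-site.xml").read_text()) returns [("hive-metastore.hms.svc.cluster.local", 9083)].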

Flink Table API/SQL

To use HMS, define a Hive catalog in Flink (pointing it to the directory containing hive-site.xml) and switch to it:

```sql
CREATE CATALOG hms_example WITH (
  'type' = 'hive',
  -- 'default-database' = 'default',
  'hive-conf-dir' = '/home/maciej/hive'
);

USE CATALOG hms_example;
```
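Since every job needs the same catalog definition, it can help to generate it in one place instead of copying the DDL between projects. The sketch below renders the two statements from parameters; the function name is hypothetical, and the statements are returned without trailing semicolons so they can be submitted one at a time (for example with PyFlink's TableEnvironment.execute_sql). In the SQL client, each statement still needs a terminating semicolon.

```python
from typing import List, Optional

def catalog_statements(name: str, hive_conf_dir: str,
                       default_database: Optional[str] = None) -> List[str]:
    """Return the CREATE CATALOG and USE CATALOG statements for a Hive catalog."""
    def literal(value: str) -> str:
        # SQL string literals escape an embedded single quote by doubling it.
        return "'" + value.replace("'", "''") + "'"

    options = {"type": "hive", "hive-conf-dir": hive_conf_dir}
    if default_database is not None:
        options["default-database"] = default_database
    body = ",\n".join(f"  {literal(k)} = {literal(v)}" for k, v in options.items())
    # Backticks quote the catalog name as a Flink SQL identifier.
    create = f"CREATE CATALOG `{name}` WITH (\n{body}\n)"
    return [create, f"USE CATALOG `{name}`"]

if __name__ == "__main__":
    for statement in catalog_statements("hms_example", "/home/maciej/hive"):
        print(statement + ";")
```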

Now you have access to all table definitions stored in HMS (you can list them with SHOW TABLES, for example). If you create a new table, it is added to HMS and becomes available to other Flink jobs that use the same catalog.

```sql
-- Job 1
CREATE TABLE table_a (
  id INT,
  description STRING
) WITH (
  'connector' = 'datagen'
);
```

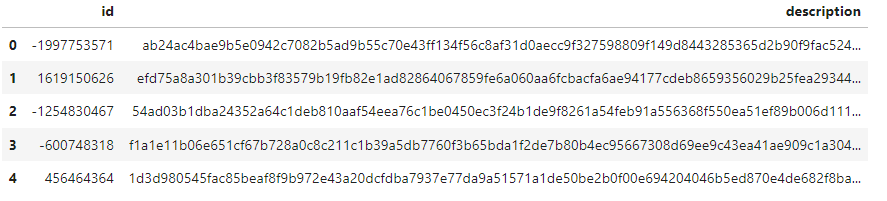

```sql
-- Job 2
SHOW TABLES;

SELECT * FROM table_a LIMIT 5;
```

Security

HiveServer2 supports multiple security mechanisms to control access to data. It can be integrated with Kerberos or LDAP to provide Single Sign-On (SSO) authentication. Access to tables can be controlled with Storage-Based Authorization (access to databases, tables and partitions based on the privileges defined in the storage), SQL Standards-Based Authorization (fine-grained access granted via SQL) or Apache Ranger.

SQL Standards-Based Authorization, Ranger and Hive's default authorization model are all enforced by HiveServer2 at query compilation time. This happens outside HMS, so none of them apply to Flink, which talks to HMS directly rather than through HiveServer2.

Only Storage-Based Authorization is HMS's responsibility and could be used together with Flink, although its usefulness is limited: it derives permissions from the underlying storage, which says little about tables backed by systems such as Kafka.

HMS is built on top of an RDBMS, so you can also create database users with different privileges, for example one with read-write (RW) access and another with read-only (RO) access. This way, you can protect your metadata from accidental modifications.
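A sketch of the database side, assuming the HMS tables live in PostgreSQL's default public schema and that two login roles (the hypothetical hms_rw and hms_ro) already exist. It only generates the GRANT statements; running them as an administrator, for example with psql, is up to you. GRANT ... ON ALL TABLES applies only to tables that exist at that moment, so run it after schematool has created the schema. Whether every HMS operation you rely on works when the metastore connects with the RO account is worth testing for your Hive version.

```python
import re
from typing import List

# Hypothetical restriction: plain lowercase identifiers only, so nothing needs quoting.
_IDENTIFIER = re.compile(r"[a-z_][a-z0-9_]*")

def postgres_role_statements(database: str, rw_user: str, ro_user: str) -> List[str]:
    """Build PostgreSQL GRANT statements for a read-write and a read-only HMS account."""
    for name in (database, rw_user, ro_user):
        if not _IDENTIFIER.fullmatch(name):
            raise ValueError(f"unsupported identifier: {name!r}")
    return [
        # Both accounts may connect and see the schema holding the HMS tables.
        f"GRANT CONNECT ON DATABASE {database} TO {rw_user}, {ro_user};",
        f"GRANT USAGE ON SCHEMA public TO {rw_user}, {ro_user};",
        # RW account: may modify metadata rows.
        f"GRANT SELECT, INSERT, UPDATE, DELETE ON ALL TABLES IN SCHEMA public TO {rw_user};",
        # RO account: may only read metadata rows.
        f"GRANT SELECT ON ALL TABLES IN SCHEMA public TO {ro_user};",
    ]
```

The RO account is then the one to put into javax.jdo.option.ConnectionUserName for a metastore instance that should only serve reads.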

Note that all table properties are visible to every job, so any credentials stored in them can be read easily. Granting access to HMS effectively grants access to every password stored in the table definitions.
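To make this concrete: connector options such as the JDBC connector's 'password' or a Kafka table's 'properties.sasl.jaas.config' end up in HMS as ordinary table properties. The hypothetical helper below flags option keys that look like credentials, which can serve as a quick audit before a definition is registered in a shared catalog. The key pattern is only a heuristic, not an exhaustive list.

```python
import re
from typing import Dict, List

# Heuristic: option keys that commonly carry credentials in connector configs.
SECRET_KEY_PATTERN = re.compile(r"password|secret|token|jaas|credential", re.IGNORECASE)

def exposed_secrets(table_options: Dict[str, str]) -> List[str]:
    """Return the option keys of a table definition that look like they hold credentials."""
    return sorted(key for key in table_options if SECRET_KEY_PATTERN.search(key))

if __name__ == "__main__":
    # Example options of a JDBC-backed table; values are placeholders.
    options = {
        "connector": "jdbc",
        "url": "jdbc:postgresql://db.example.internal/app",
        "username": "app",
        "password": "placeholder",
    }
    print(exposed_secrets(options))
```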

That’s all

I hope you find it easy to set up HMS and use it with Flink. It helps you manage table definitions across Flink jobs, projects and repositories, and it keeps the configuration separate from the SQL code. Need help with Flink? Sign up for a free consultation with one of our experts.