In the first part of this blog post, we presented our kedro-snowflake plugin, which enables you to run your Kedro pipelines on the Snowflake Data Cloud in 3 simple steps. This time we are going to demonstrate how you can implement an end-to-end MLOps platform on top of Snowflake, powered by Kedro and MLflow. Inspired by blog posts 1 and 2, in this article we present a novel approach that aims to address the problem of missing External Internet Access in Snowpark.

This feature would be an absolute game changer for implementing any integration with third-party tools not natively available in the Snowflake ecosystem, and, to the best of our knowledge, it is on the Snowflake roadmap. So let's dive into the MLOps platform for the Snowflake Data Cloud.

What is Kedro? The MLOps Framework

Kedro is a widely adopted MLOps framework in Python that brings engineering back to the data science world to help productionize machine learning code seamlessly. Kedro lets you build machine learning pipelines that can run on cloud, edge or on-premises platforms. It is open source and offers tools for data scientists and engineers to create, share and collaborate on machine learning workflows. Additionally, Kedro allows you to track the entire machine learning lifecycle, from data preparation to model deployment.

Kedro can make your machine learning projects more scalable and flexible while keeping things simple. It runs on any platform, whether in the cloud or at the edge, so data scientists and engineers can build machine learning workflows that work across platforms without worrying about scalability. Kedro also offers flexibility in how those workflows are built, supporting various data sources, models and deployment targets. This makes it easier for teams to experiment with new technologies and techniques while maintaining a consistent pipeline.
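The core idea behind a Kedro pipeline is that each step is a plain Python function (a node) that declares the named datasets it reads and writes, and the framework works out the execution order from those names. The toy sketch below illustrates that idea in plain Python only; `Node` and `run_pipeline` are hypothetical names, not Kedro's API (real Kedro projects use `node()` and `pipeline()` from `kedro.pipeline` together with a `DataCatalog`).

```python
from dataclasses import dataclass
from typing import Any, Callable, Dict, List


@dataclass
class Node:
    """Hypothetical toy node: a pure function plus the dataset names it reads and writes."""
    func: Callable[..., Any]
    inputs: List[str]
    output: str


def run_pipeline(nodes: List[Node], catalog: Dict[str, Any]) -> Dict[str, Any]:
    """Run nodes in dependency order, resolving inputs by name from the catalog."""
    data = dict(catalog)  # copy so the caller's catalog is not mutated
    pending = list(nodes)
    while pending:
        # A node is ready once every dataset it needs has been produced or loaded.
        ready = [n for n in pending if all(name in data for name in n.inputs)]
        if not ready:
            missing = {name for n in pending for name in n.inputs if name not in data}
            raise ValueError(f"unresolvable inputs: {sorted(missing)}")
        for n in ready:
            data[n.output] = n.func(*(data[name] for name in n.inputs))
            pending.remove(n)
    return data


def clean(raw):
    return [x for x in raw if x is not None]


def train(rows):
    return sum(rows) / len(rows)  # stand-in "model": just the mean


# Declaration order does not matter; dependencies are resolved by dataset name.
toy_pipeline = [
    Node(train, ["clean_rows"], "model"),
    Node(clean, ["raw_rows"], "clean_rows"),
]
result = run_pipeline(toy_pipeline, {"raw_rows": [1, None, 2, 3]})
print(result["model"])  # 2.0
```

Because nodes only refer to dataset names, the same pipeline definition can be pointed at different storage or execution backends, which is what makes plugins such as kedro-snowflake possible.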

MLflow is an open source platform that manages the entire lifecycle of machine learning models. It offers tools to track, manage and visualize workflows, from data preparation to model deployment. Additionally, MLflow promotes collaboration between data scientists and engineers by providing a shared language and understanding of the machine learning process.
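Besides its Python client, the MLflow tracking server exposes a REST API, which is what makes it reachable from environments that can only forward HTTP calls through a proxy. The sketch below builds, but does not send, a request to MLflow's documented `runs/log-metric` endpoint using only the standard library. The helper name, host and run ID are hypothetical placeholders, and this is not claimed to be how the kedro-snowflake plugin talks to MLflow.

```python
import json
import time
import urllib.request
from typing import Optional


def build_log_metric_request(
    tracking_uri: str,
    run_id: str,
    key: str,
    value: float,
    step: int = 0,
    timestamp_ms: Optional[int] = None,
) -> urllib.request.Request:
    """Hypothetical helper: build a POST for MLflow's runs/log-metric REST endpoint."""
    if timestamp_ms is None:
        timestamp_ms = int(time.time() * 1000)  # MLflow expects milliseconds since epoch
    payload = {
        "run_id": run_id,
        "key": key,
        "value": value,
        "timestamp": timestamp_ms,
        "step": step,
    }
    return urllib.request.Request(
        url=tracking_uri.rstrip("/") + "/api/2.0/mlflow/runs/log-metric",
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


# Placeholder host and run ID; urllib.request.urlopen(req) would send it to a live server.
req = build_log_metric_request(
    "http://mlflow.example.com", "abc123", "rmse", 0.42, step=1, timestamp_ms=0
)
print(req.get_method(), req.full_url)
```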

Why should you consider MLflow for your MLOps toolbox? Here are the three main benefits MLflow introduces:

Both Kedro and MLflow are projects supported by the Linux Foundation.

Terraform is an open-source tool that enables the infrastructure as code (IaC) approach in cloud computing, network automation and security. It lets you manage your infrastructure through simple, declarative configuration files instead of complex, error-prone manual configuration or scripts. Terraform helps you automate provisioning, updating and deleting resources across multiple cloud providers such as AWS, Azure and Google Cloud Platform (GCP).
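The declarative approach means you describe the desired end state and the tool computes which changes are needed to get there. The toy Python sketch below shows only that diffing step, loosely analogous to `terraform plan`; it ignores dependencies, replacement and provider logic, and the resource addresses and attributes are hypothetical.

```python
from typing import Dict, List, Tuple

Resources = Dict[str, Dict[str, str]]  # resource address -> attributes


def plan(desired: Resources, actual: Resources) -> List[Tuple[str, str]]:
    """Toy diff of desired vs. actual state into (action, address) pairs."""
    actions = []
    for address in sorted(desired.keys() | actual.keys()):
        if address not in actual:
            actions.append(("create", address))
        elif address not in desired:
            actions.append(("delete", address))
        elif desired[address] != actual[address]:
            actions.append(("update", address))
    return actions


# Hypothetical resources, not real Snowflake provider resource definitions.
desired = {
    "api_integration.mlflow_proxy": {"enabled": "true"},
    "external_function.log_metric": {"proxy_url": "https://gateway.example.com/log-metric"},
}
actual = {
    "api_integration.mlflow_proxy": {"enabled": "false"},
    "external_function.legacy": {"proxy_url": "https://gateway.example.com/old"},
}
print(plan(desired, actual))
# [('update', 'api_integration.mlflow_proxy'), ('delete', 'external_function.legacy'), ('create', 'external_function.log_metric')]
```

Applying the same configuration twice yields an empty plan, which is what makes declarative infrastructure safe to re-run.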

GetInData is also an active contributor to the official Snowflake Terraform Provider; in particular, we have recently added support for external function translators, which was required for the MLflow integration presented here.
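Because Snowpark code cannot reach the internet directly, calls to a third-party service go through a Snowflake external function that invokes a proxy such as an API gateway. Snowflake sends each batch as an HTTP POST whose JSON body has a `data` array of rows, each row starting with its row number, and expects the same shape back with the row numbers echoed. Request and response translators can reshape this format inside Snowflake; the sketch below instead handles the native format directly in the proxy. It assumes AWS API Gateway with Lambda proxy integration (request body as a string in `event["body"]`, response with `statusCode` and `body`); `make_handler` and `echo_metric` are hypothetical, not the plugin's implementation.

```python
import json
from typing import Any, Callable, Dict, List


def make_handler(row_fn: Callable[..., Any]) -> Callable[..., Dict[str, Any]]:
    """Hypothetical: wrap a per-row function as a Lambda handler for a Snowflake external function."""

    def handler(event: Dict[str, Any], context: Any = None) -> Dict[str, Any]:
        try:
            rows: List[List[Any]] = json.loads(event["body"])["data"]
            # Each row is [row_number, arg1, arg2, ...]; the row number must be echoed back.
            results = [[row[0], row_fn(*row[1:])] for row in rows]
            return {"statusCode": 200, "body": json.dumps({"data": results})}
        except Exception as err:  # malformed batch: report a client error to Snowflake
            return {"statusCode": 400, "body": json.dumps({"error": str(err)})}

    return handler


def echo_metric(run_id, key, value):
    # Hypothetical row function; a real proxy would forward this to the MLflow server.
    return f"{run_id}:{key}={value}"


handler = make_handler(echo_metric)
event = {"body": json.dumps({"data": [[0, "run-1", "rmse", 0.42], [1, "run-1", "mae", 0.3]]})}
print(handler(event)["body"])
# {"data": [[0, "run-1:rmse=0.42"], [1, "run-1:mae=0.3"]]}
```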

In the recent release of our kedro-snowflake plugin, we added beta support for MLflow integration.

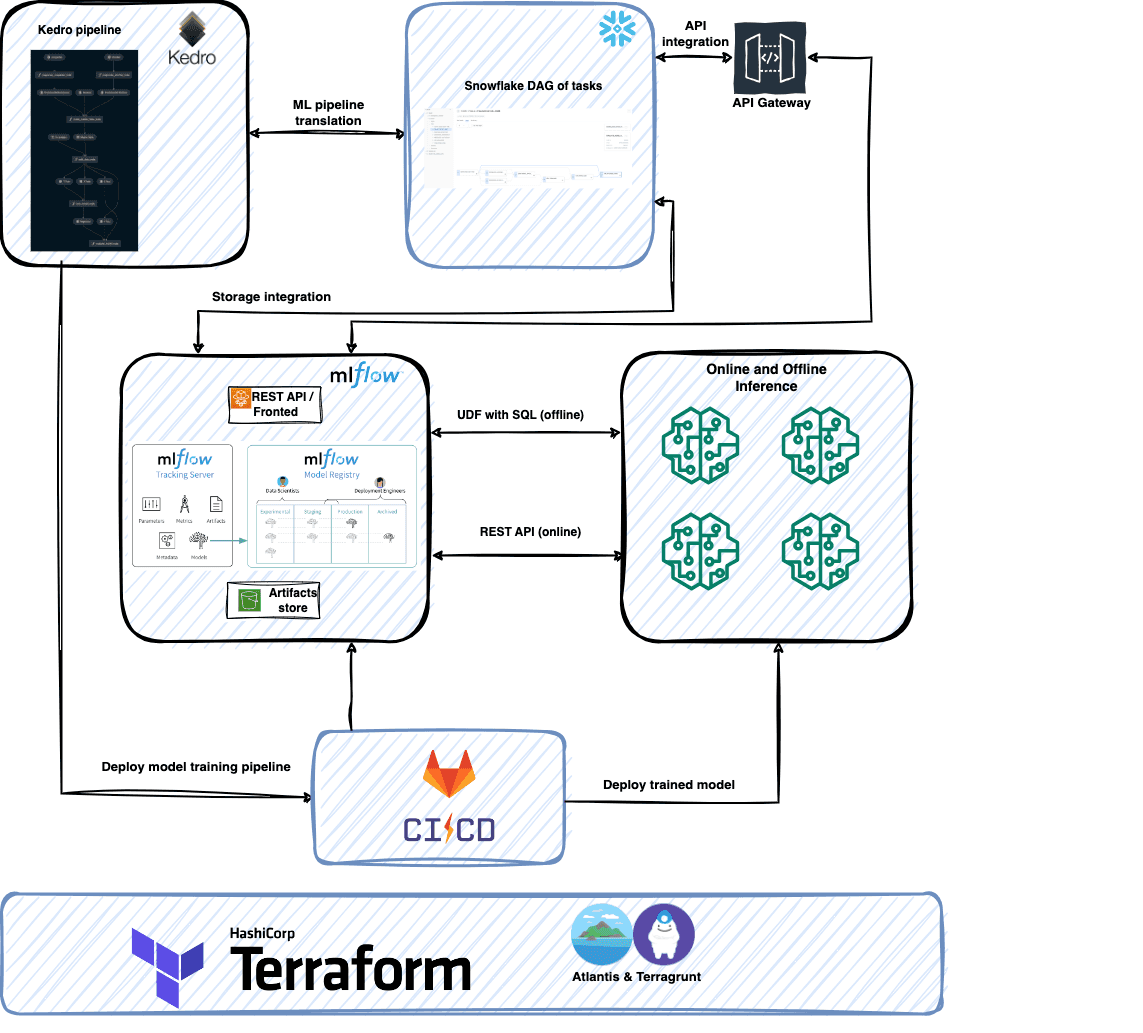

The diagram below presents the proposed MLOps platform architecture for the AWS cloud.

The GCP and Azure deployment scenarios are very similar, except for:

The kedro-snowflake <-> MLflow integration is based on the following concepts:

Our MLOps platform assumes a fully native integration with the Snowflake ecosystem and leverages it for data access, pipeline orchestration and model training, as well as model deployment and inference. Such an architecture has a number of advantages, to name just a few:

This is, of course, not a one-size-fits-all architecture, and there are shortcomings that have not yet been addressed in the Snowflake Data Cloud, namely:

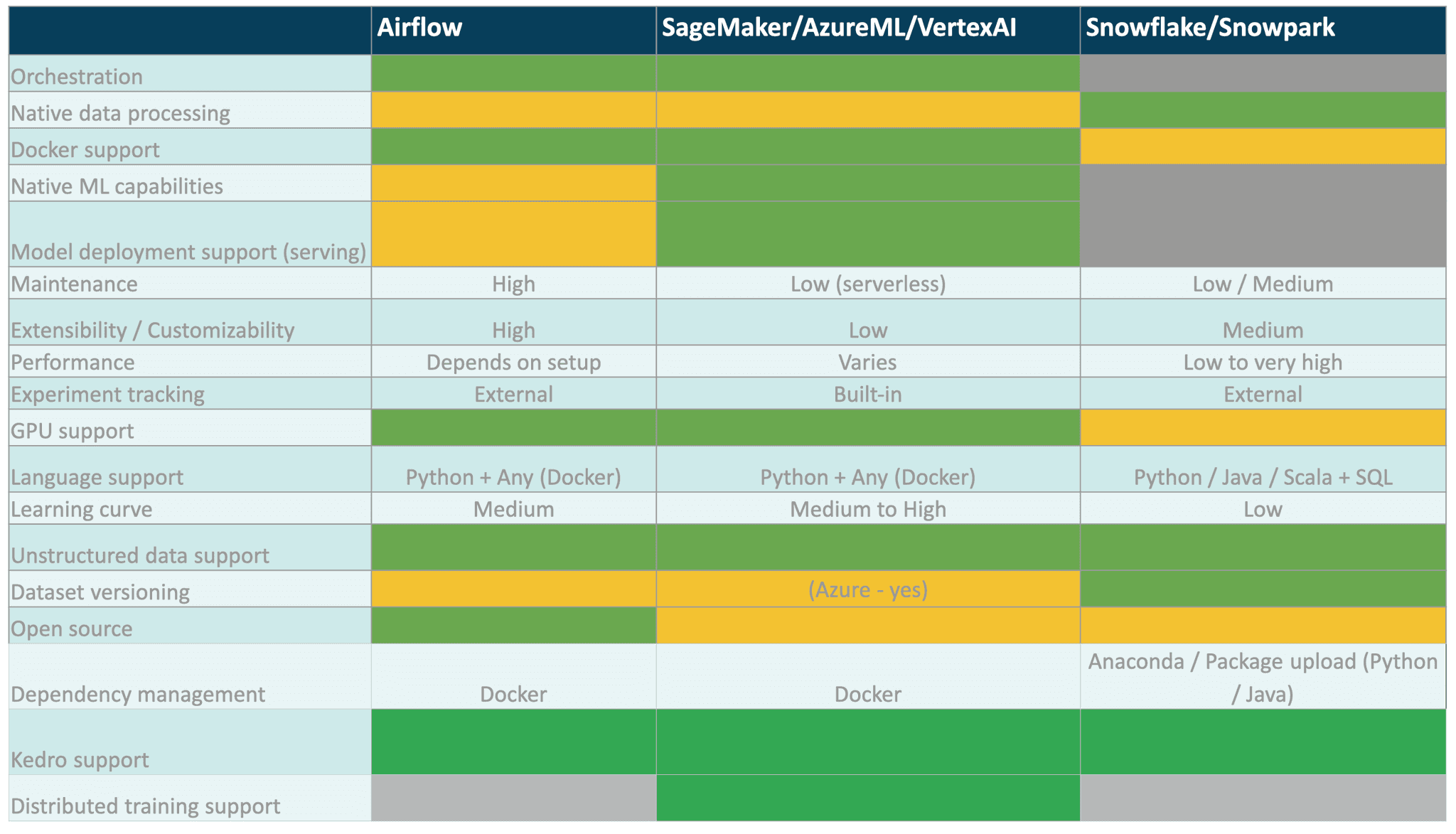

Alternatively, one can use Snowflake only in a data-centric way and offload machine learning pipeline orchestration and training to external systems such as Azure ML, Vertex AI or SageMaker. Doing so introduces a trade-off: some of the ML/AI-native capabilities of those external services can be leveraged, but data transfer and cross-cloud connection and setup costs may appear. Such an approach may, however, be desirable when an organization needs to reuse an external pipeline orchestrator it already runs, such as Apache Airflow.

By building your core ML project and delivering business value on top of the Kedro framework, you can later migrate and switch between those two approaches with minimal effort. So far, we've open-sourced 6 major plugins for Kedro: Kedro-Snowflake, Kedro-AzureML, Kedro-VertexAI, Kedro-SageMaker, Kedro-Airflow and Kedro-Kubeflow (check our GitHub repos).

The table below summarizes the pros and cons of the different approaches:

In this condensed blog post, we presented our approach to architecting a cloud-agnostic MLOps platform on top of the Snowflake Data Cloud, based on three building blocks: Kedro, MLflow and Terraform. The proposed solution addresses the missing external access to third-party services in the Snowflake ecosystem. Once that feature is implemented, it would simplify the overall architecture by removing the need for external function wrappers and API gateways.

But it's not the end of the story. Stay tuned for the third part of this blog post, in which we are going to present how to extend this platform even further to support Large Language Models (LLMs).

Interested in ML and MLOps solutions, or in how to improve ML processes and scale project deliverability? Watch our MLOps demo and sign up for a free consultation.
