The Modern Data Stack has been around for some time already. Both the tools and the integration patterns have become more mature and battle-tested. We shared our solution blueprints a couple of months ago: GetInData Modern Data Platform - features & tools. Since then we’ve received plenty of questions about our platform and the technologies it uses. One of the most common was: why don’t we start quick & small and go for a lightweight managed orchestrator? This was a clear indication that we needed to prepare alternatives to Apache Airflow and Cloud Composer. This is how we incorporated GCP Workflows as a fully-fledged element of our stack. Below you'll learn what its typical use cases are and how we approached integrating GCP Workflows with the other components.

Part 1: Introduction

Before we deep dive into data pipelines and different ways they can be orchestrated, let’s start with some fundamentals.

What is dbt?

dbt (Data Build Tool) is an open-source command-line tool that enables data analysts and engineers to transform, test and document their data pipelines.

dbt allows users to build, test and deploy data models and transformations in a streamlined and repeatable manner.

dbt is a very attractive tool because of its modularity and version controlled approach to data transformation and modeling. It also provides automated testing, documentation and flexibility to work with a variety of databases and data warehouses.

dbt is free and open-source, meaning that users can use, modify and distribute the software without incurring additional costs. This makes dbt an accessible and cost-effective option for companies of all sizes, especially smaller startups or organizations with limited budgets.

Orchestration with Apache Airflow

Defining data transformations and their dependencies is a core function of a data pipeline. However, a stack is not fully automated until it is properly orchestrated. Initially we integrated our solution with Apache Airflow, one of the most commonly used tools on the market.

As a part of this integration, at GetInData we developed the dbt-airflow-factory package, which combines dbt artifacts with Airflow by transforming the dbt-generated manifest file on-the-fly into a Directed Acyclic Graph (DAG) with a manageable graphical representation of the data pipeline. When we shifted our attention to GCP Workflows, it became obvious that we would need a similar automation, and this is where the idea of dbt-workflows-factory originated.
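To make the manifest-to-DAG idea concrete, here is a minimal sketch of how model dependencies can be read from a dbt manifest and put into execution order. It is not the code of dbt-airflow-factory or dbt-workflows-factory. It only relies on the `nodes`, `resource_type` and `depends_on.nodes` fields of `manifest.json`, and the project and model names are made up for illustration.

```python
import json
from graphlib import TopologicalSorter


def model_dependencies(manifest: dict) -> dict[str, set[str]]:
    """Map each dbt model to the set of models it depends on."""
    nodes = manifest["nodes"]
    models = {uid for uid, node in nodes.items() if node["resource_type"] == "model"}
    # Keep only model-to-model edges; sources and tests are ignored here.
    return {
        uid: {dep for dep in nodes[uid]["depends_on"]["nodes"] if dep in models}
        for uid in models
    }


def execution_order(manifest: dict) -> list[str]:
    """Return the models in an order that respects their dependencies."""
    return list(TopologicalSorter(model_dependencies(manifest)).static_order())


# Tiny hand-written manifest fragment (hypothetical project and model names).
manifest = json.loads("""
{
  "nodes": {
    "model.shop.stg_orders": {
      "resource_type": "model",
      "depends_on": {"nodes": ["source.shop.raw.orders"]}
    },
    "model.shop.orders": {
      "resource_type": "model",
      "depends_on": {"nodes": ["model.shop.stg_orders"]}
    },
    "test.shop.not_null_orders_id": {
      "resource_type": "test",
      "depends_on": {"nodes": ["model.shop.orders"]}
    }
  }
}
""")

order = execution_order(manifest)
print(order)
```

An orchestrator integration then turns each entry of such an ordering into one task, with edges taken from the same dependency map.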

What are GCP Workflows & Cloud Batch?

GCP Workflows is a managed workflow orchestration service. Provided by GCP, it allows the user to automate, manage and analyze complex workflows across multiple services provided by Google.

One of the primary use cases for Workflows is automating data processing pipelines, so that data can be ingested from various sources, transformed and exported to other systems or applications.

GCP Workflows is a powerful platform that offers ease of use, seamless integration with other GCP components and cost-effectiveness. With a simple and intuitive interface, users can quickly build and execute complex workflows across GCP services. GCP Workflows charges based on a pay-as-you-go model, with free tier and pricing plans that include discounts for sustained usage. Overall, GCP Workflows is a versatile and cost-effective platform that can help organizations streamline their workflows and save time and money.

GCP Batch is a managed batch processing service that is also provided by Google, enabling users to process large volumes of data using distributed computing techniques. It doesn’t require the user to manage the underlying infrastructure.

It was designed to work seamlessly with other Google Cloud services, allowing users to define their batch jobs using Docker containers. It also provides features for job scheduling, monitoring and management.

GCP Batch also charges based on a pay-as-you-go model, with pricing determined by the number of virtual machine instances used and the duration of their use. There is also a free tier available.

When comparing GCP Batch to Apache Airflow, it's important to note that Airflow is not a serverless platform and requires infrastructure management.

However, Airflow does offer more flexibility and control over your workflows, as well as a more mature ecosystem of plugins and integrations. Additionally, Airflow is free and open-source, although you will need to manage the infrastructure it runs on yourself.

Running GCP Batch jobs orchestrated from GCP Workflows offers multiple benefits, but may not always be so easy. While the process is described below, let's focus on the pros it provides:

- scalability - Batch jobs are great at processing large volumes of data quickly and efficiently, while Workflows easily manages the processes.

- automation - GCP Workflows allows users to automate the process from A to Z: triggering jobs, monitoring progress and handling any errors.

- flexibility - Workflows can orchestrate batch jobs that utilize various GCP services, which gives users plenty of room to explore.

This is why we would now like to introduce you to dbt-workflows-factory, a Python library that lets users convert dbt tasks into workflows and then orchestrate and run them with GCP tools.

Part 2: Running the flow

How can you run a dbt pipeline on Cloud Batch using GCP Workflows?

To run the dbt workflow on Cloud Batch, you can create a simple GCP Workflow that triggers all of the jobs automatically.

All it takes is a single .yaml file that defines the workflow.

You can generate this .yaml file with the new dbt-workflows-factory.
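To give a feel for what such a workflow definition contains, the sketch below builds one Workflows step per dbt model, each submitting a Cloud Batch job through the `googleapis.batch.v1.projects.locations.jobs.create` connector. This is an illustration only, not the output of dbt-workflows-factory: the step names, job ID prefix and model names are hypothetical, and the model name is assumed to be appended to the command after `--select`. The definition is printed as JSON (which Workflows accepts alongside YAML) so that only the standard library is needed.

```python
import json
import shlex


def batch_job_step(model: str, image_uri: str, region: str, full_command: str) -> dict:
    """Build one Workflows step that submits a Batch job running dbt for one model."""
    # Assumption: the model name is appended to the command, e.g. "dbt run --select orders".
    argv = shlex.split(f"{full_command} {model}")
    # Batch job IDs allow lowercase letters, digits and hyphens, so swap the underscores.
    job_prefix = "dbt-" + model.replace("_", "-") + "-"
    return {
        f"run_{model}": {
            "call": "googleapis.batch.v1.projects.locations.jobs.create",
            "args": {
                # Workflows expression: the project ID is resolved when the workflow runs.
                "parent": '${"projects/" + sys.get_env("GOOGLE_CLOUD_PROJECT_ID") + "/locations/'
                + region
                + '"}',
                # A timestamp suffix keeps job IDs unique across executions.
                "jobId": '${"' + job_prefix + '" + string(int(sys.now()))}',
                "body": {
                    "taskGroups": [
                        {
                            "taskSpec": {
                                "runnables": [
                                    {
                                        "container": {
                                            "imageUri": image_uri,
                                            "entrypoint": argv[0],
                                            "commands": argv[1:],
                                        }
                                    }
                                ]
                            }
                        }
                    ],
                    "logsPolicy": {"destination": "CLOUD_LOGGING"},
                },
            },
        }
    }


def workflow_definition(models: list[str], image_uri: str, region: str, full_command: str) -> dict:
    """Chain the per-model steps into a single sequential workflow."""
    steps = [batch_job_step(m, image_uri, region, full_command) for m in models]
    return {"main": {"steps": steps}}


definition = workflow_definition(
    ["stg_orders", "orders"], "my_image_url", "us-central1", "dbt run --select"
)
print(json.dumps(definition, indent=2))
```

The steps here run one after another in the order given. A real converter would take that order, and any opportunities for parallelism, from the dependencies in the dbt manifest.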

Creating configuration

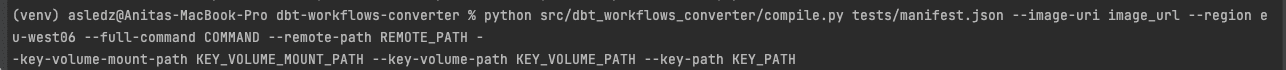

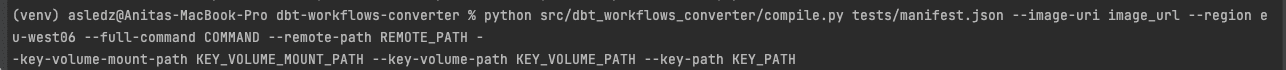

How can you use the dbt-workflows-factory from CLI?

To run from CLI, in the library you will simply need to call

Where you specify the arguments as follows:

- image_uri: URL address of the image

- region: the location where the workflow executes on GCP (example: us-central1 or europe-west1)

- full_command: full command executed on the image (example: "dbt --no-write-json run --target env_execution --project-dir /dbt --profiles-dir /root/.dbt --select ")

- remote_path: GCS mount path (example: "/mnt/disks/var")

- key_volume_mount_path: path for mounting the volume containing the key (example: /mnt/disks/var/keyfile_name.json)

- key_volume_path: path for mounting (example: ["/mnt/disks/var/:/mnt/disks/var/:rw"])

- key_path: remote path of the bucket containing the key to be mounted

How can you use the dbt-workflows-factory from python?

Specify the parameters and run the converter to create a workflow.yaml file from manifest.json:

from dbt_workflows_converter import DbtWorkflowsConverter, Params

params = Params(image_uri='my_image_url', region='us-central1', full_command='dbt run', remote_path='/mnt/disks/var', key_volume_mount_path='/mnt/disks/var/keyfile_name.json', key_volume_path='/mnt/disks/var/:/mnt/disks/var/:rw', key_path='bucketname')

converter = DbtWorkflowsConverter(params)

converter.convert() # writes to file workflow.yaml

How can you run the configuration from GCP?

When you have your .yaml ready, and the secrets are in the correct bucket, go to GCP Workflows.

You have two options:

- Run the job from the GCP page

Click +, set up the region and then paste your .yaml file.

- Run from GCP CLI

Log in to GCP:

gcloud auth login

gcloud config set project [YOUR_PROJECT_ID]

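And then deploy the workflow from the .yaml file, execute it and check the execution:

gcloud workflows deploy [WORKFLOW_NAME] --source=[WORKFLOW_FILE] --location=[REGION]

gcloud workflows execute [WORKFLOW_NAME] --location=[REGION]

gcloud workflows executions describe [EXECUTION_ID] --workflow=[WORKFLOW_NAME] --location=[REGION]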
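If you prefer to script the CLI route, the steps can be wrapped in a few lines of Python. This is a minimal sketch, assuming `gcloud` is installed and authenticated. It uses `gcloud workflows deploy` (the command that accepts `--source`) followed by `gcloud workflows execute`. The workflow name `dbt-workflow` and the helper functions are hypothetical, and the default dry-run mode only prints the commands.

```python
import shlex
import subprocess


def gcloud_commands(workflow_name: str, workflow_file: str, region: str) -> list[list[str]]:
    """Return the gcloud invocations that deploy a workflow and then execute it."""
    return [
        ["gcloud", "workflows", "deploy", workflow_name,
         f"--source={workflow_file}", f"--location={region}"],
        ["gcloud", "workflows", "execute", workflow_name, f"--location={region}"],
    ]


def deploy_and_execute(workflow_name: str, workflow_file: str, region: str,
                       dry_run: bool = True) -> None:
    """Print each command and, unless dry_run is set, run it and stop on the first failure."""
    for cmd in gcloud_commands(workflow_name, workflow_file, region):
        print(shlex.join(cmd))
        if not dry_run:
            subprocess.run(cmd, check=True)


# Dry run: shows what would be executed without calling gcloud.
deploy_and_execute("dbt-workflow", "workflow.yaml", "us-central1")
```

Passing `dry_run=False` runs the commands for real, and `check=True` stops the script if the deployment fails so that a stale workflow is not executed.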

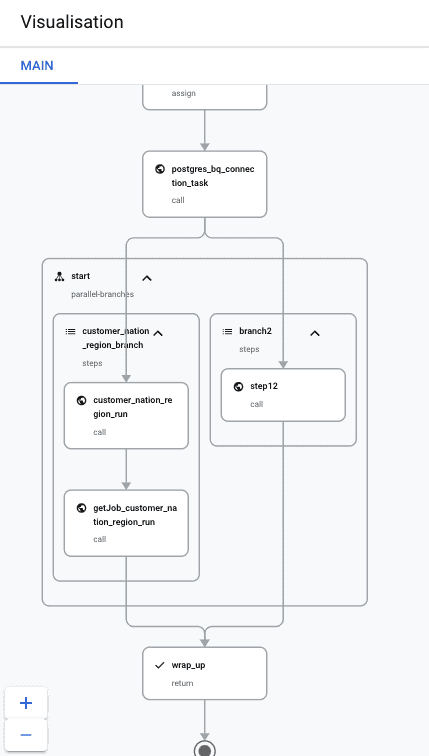

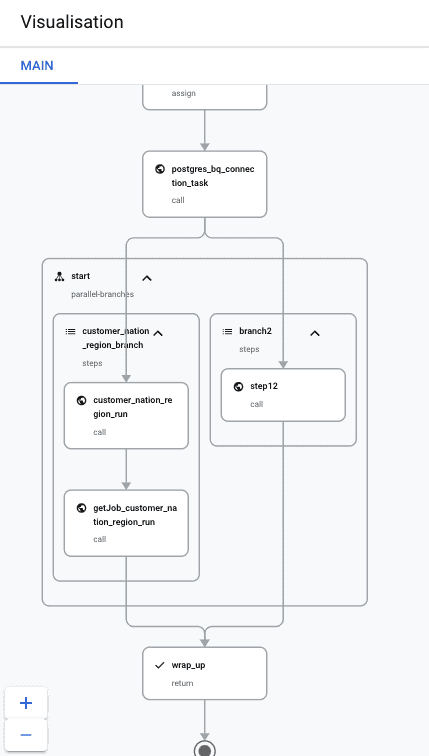

Either way, after doing so, you should see your flow in GCP:

The separate jobs should also be visible in GCP Batch:

And that's it!

Part 3: About the library

About

The library streamlines the process of converting dbt tasks into GCP Workflows, making it easier for developers to manage data pipelines on GCP.

This library is available on GitHub at https://github.com/getindata/dbt-workflows-converter

The first version of the library is currently only capable of processing the run and model dbt tasks. However, future development plans include parsing more complicated tasks, making it easier to automate even more complex data pipelines.

When to use GCP Workflows instead of Airflow

When deciding between GCP Workflows and Airflow for workflow orchestration, it's important to consider the unique strengths and weaknesses of each platform.

GCP Workflows is a fully managed, serverless platform that is ideal for running workflows that require integration with other GCP services. It is particularly well-suited for building data pipelines that process data stored in GCP storage services, such as BigQuery or Cloud Storage.

On the other hand, Airflow is a powerful open-source platform that provides a wider range of customization options and supports a larger number of third-party integrations.

Airflow is an excellent choice for complex workflows that require extensive customization and configuration. Airflow can also run on-premises or in any cloud environment, making it a more flexible option than GCP Workflows.

In summary, if your workflow primarily involves GCP services and requires integration with other GCP tools, GCP Workflows is likely the best choice.

However, if you require more flexibility, control and third-party integrations, Airflow may be the better option.

Ultimately, the choice between these two platforms will depend on the specific needs of your project, so it's important to evaluate both options carefully before making a decision.

Plans for future development

In the future, the library will be integrated as a plugin for the DP framework, making it more easily accessible for developers.

Additionally, our Modern Data Platform Framework will be extended to include the possibility of deploying on GCP Workflows, further streamlining the process of automating data pipelines.

The library will also support scheduling jobs in GCP Workflows with Cloud Scheduler, making it possible to run data pipelines automatically at regular intervals.

Finally, the library will extend the configurability of workflow jobs, making it possible to fine-tune data pipelines to fit specific needs.

These and more future developments will make it even easier for developers to manage data pipelines on GCP, simplifying and streamlining the data engineering process.

Conclusion

We look forward to seeing the impact that this new package will have and to continue our mission of providing innovative solutions to the challenges faced by our community.

We would like to take this opportunity to encourage everyone to contribute to the further development of this package in its GitHub repository. Your feedback and suggestions are invaluable to us and we welcome any contributions you may have.

If you would like to learn more about the Modern Data Platform or have any questions or comments, please do not hesitate to contact us. You can also schedule a FREE CONSULTATION with our specialist. We look forward to hearing from you and working together to continue improving our tools and resources.