Real-time ingestion to Iceberg with Kafka Connect - Apache Iceberg Sink

What is Apache Iceberg? Apache Iceberg is an open table format for huge analytics datasets which can be used with commonly-used big data processing…

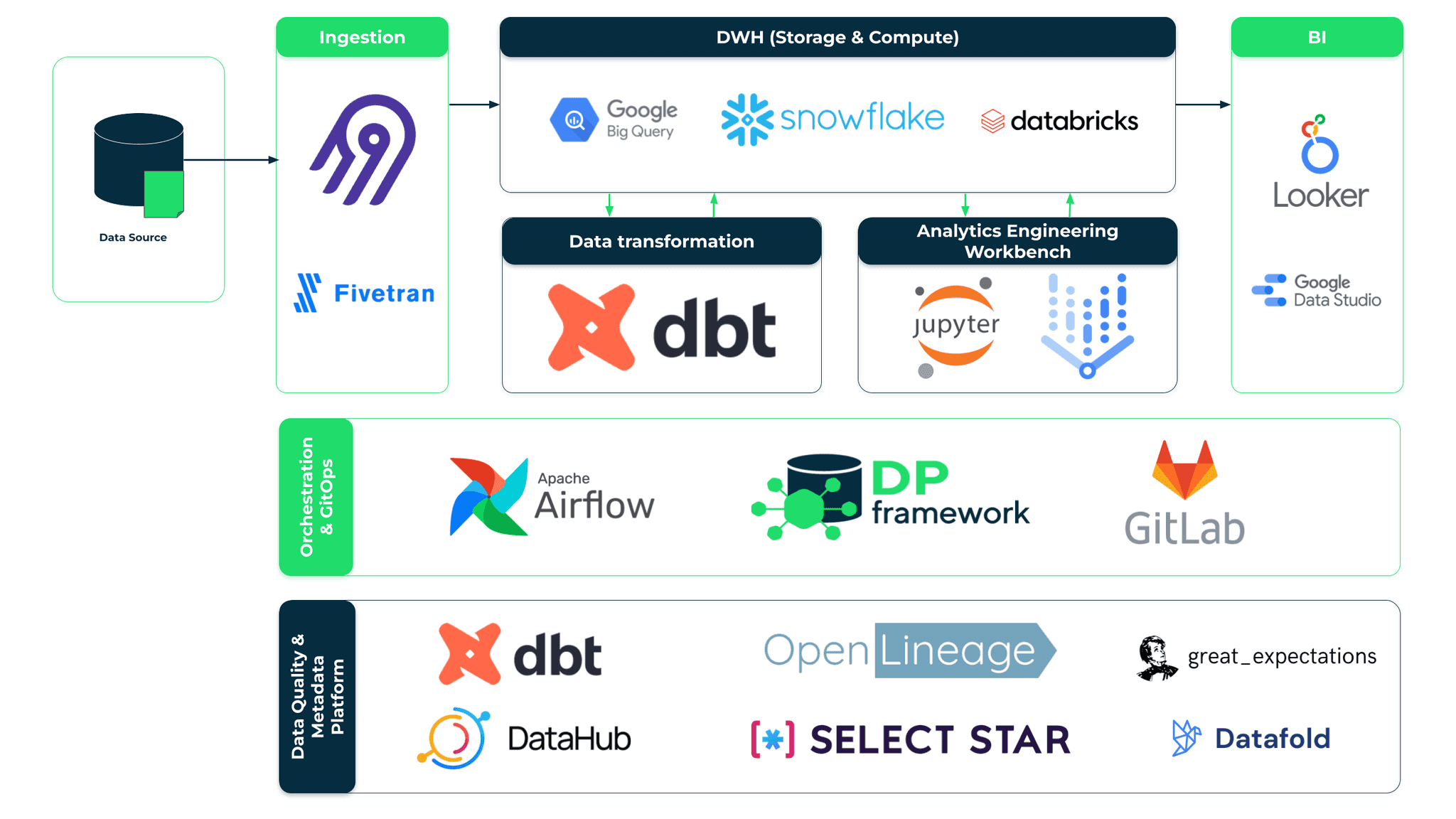

In our previous article, you learned what our take on the Modern Data Platform is and that we have taken steps to make it something tangible that delivers value to our clients. I believe your appetite has now been whetted and you're ready for the main course, namely the main features and components of our solution. For dessert, you'll also learn what it takes to introduce our platform to your company. So, let's get ready for a deep dive!

Note: all the diagrams and examples below are based on GCP, but we support all three of the most popular public clouds (GCP, AWS and Azure).

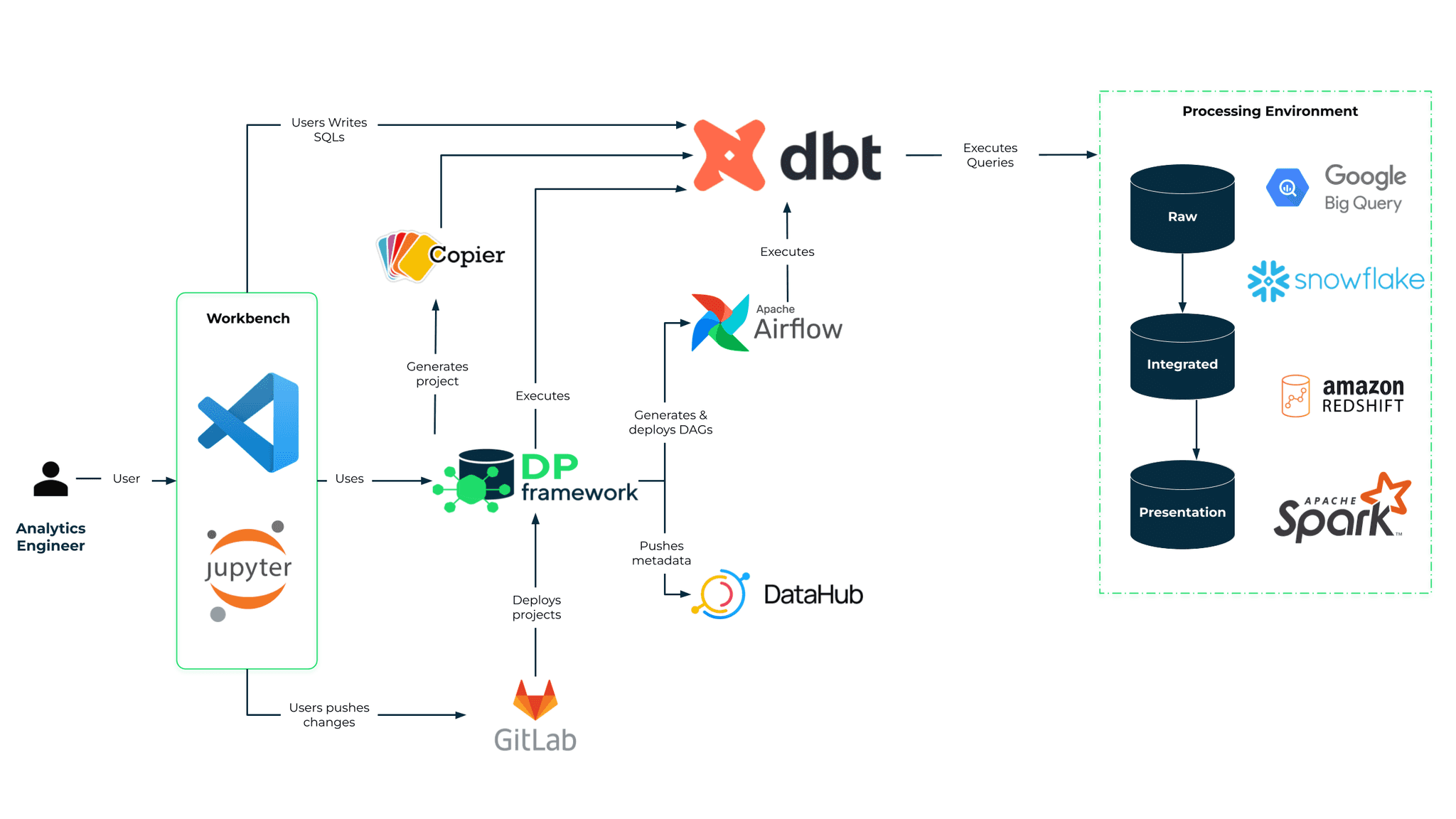

Now that the key goals have been defined, it's time to take a closer look at how we address them within our platform. As mentioned earlier, we believe that the stack itself is not just a bunch of popular tools. It is a solid foundation, but in fact only one part of a successful modern data platform rollout. What we have added on top of it is something we call the DP Framework. This term covers not only our artifacts (integration packages, configurations and other accelerators) but also the data architecture and engineering best practices that we have gathered over years of experience in the data world.

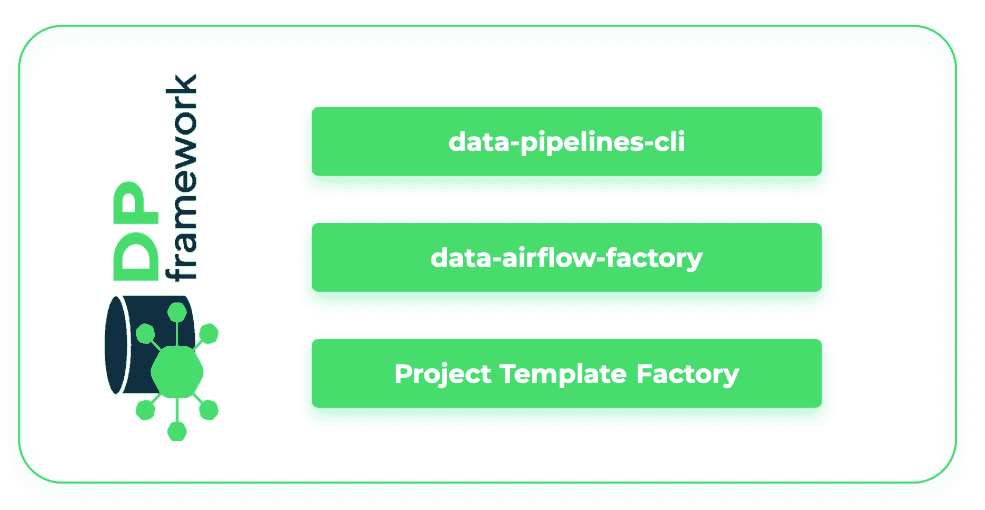

To deliver all the above-mentioned functionalities, we came up with a framework consisting of tools, configurations, standards and integration packages that ensures seamless integration of the components of our stack and provides a user-friendly interface. One of our key framework components is the data-pipelines-CLI package, which hides the technical complexities of data pipeline management behind a user-friendly interface. It also simplifies the automation of transformations, deployments and communication between different tools in a modern data stack. Another useful package is dbt-airflow-factory, which integrates transformation and scheduling: dbt and Airflow artifacts are automatically translated at runtime, so the user has a single place to define the data processing logic. Both packages were built and open sourced in our R&D DataOps Labs. Feedback and contributions are very welcome!
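To give a feel for what translating dbt artifacts into scheduler tasks involves, here is a minimal sketch; it is not dbt-airflow-factory's actual implementation. It assumes a heavily trimmed dictionary shaped like the `nodes`/`depends_on` section of dbt's `manifest.json` (the model and test names are hypothetical) and derives a valid run order with Python's standard `graphlib`. That dependency information is, roughly, what a scheduler such as Airflow needs in order to wire up its tasks.

```python
from graphlib import TopologicalSorter

# Hypothetical, heavily trimmed excerpt shaped like dbt's manifest.json:
# each node lists the upstream nodes it depends on.
MANIFEST = {
    "nodes": {
        "model.shop.stg_orders": {"depends_on": {"nodes": []}},
        "model.shop.stg_customers": {"depends_on": {"nodes": []}},
        "model.shop.orders_enriched": {
            "depends_on": {
                "nodes": ["model.shop.stg_orders", "model.shop.stg_customers"]
            }
        },
        "test.shop.not_null_orders_enriched_id": {
            "depends_on": {"nodes": ["model.shop.orders_enriched"]}
        },
    }
}


def task_graph(manifest: dict) -> dict[str, set[str]]:
    """Map every dbt node to the set of upstream nodes it must wait for."""
    return {
        name: set(node["depends_on"]["nodes"])
        for name, node in manifest["nodes"].items()
    }


def execution_order(manifest: dict) -> list[str]:
    """Return one valid run order; a scheduler would turn each entry into a task."""
    return list(TopologicalSorter(task_graph(manifest)).static_order())


if __name__ == "__main__":
    for step, node in enumerate(execution_order(MANIFEST), start=1):
        print(step, node)
```

Independent nodes (here the two staging models) may appear in either order; only the upstream-before-downstream constraint is guaranteed.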

We have already mentioned that incorporating best practices from the software engineering world is one of the cornerstones of the modern data stack. However, we understand that analytics engineers and analysts, our main users, might come from a different background. That's why we encapsulated and automated some of the most sophisticated steps in CI/CD pipelines. We have also prepared an infrastructure-as-code (IaC) setup so that the solution can be deployed in any environment and cloud in a scalable way.

We also take data security and access control standards very seriously. Hence, we provide, and encourage the use of, guidelines on how to define all the necessary setup at the source and propagate roles and permissions to the remaining components of the stack. This ensures that users have the same, well-synchronized access to the data regardless of which client they use.
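The idea of defining permissions once at the source and propagating them everywhere can be sketched as follows. This is a hypothetical illustration, not our framework's code: the roles, users, datasets and the two downstream "clients" are made up, and a real setup would push grants through each tool's own API rather than build in-memory access lists.

```python
from dataclasses import dataclass

# Hypothetical single source of truth: role -> datasets it may read.
SOURCE_GRANTS = {
    "analyst": {"sales.orders", "sales.customers"},
    "finance": {"sales.orders", "finance.invoices"},
}

# Hypothetical user -> role assignments, also defined once at the source.
USER_ROLES = {"alice": "analyst", "bob": "finance"}


@dataclass
class ClientTool:
    """Stand-in for a downstream client (SQL IDE, BI tool, notebook...)."""

    name: str
    acl: dict[str, set[str]]

    def can_read(self, user: str, dataset: str) -> bool:
        return dataset in self.acl.get(user, set())


def propagate(tool_names: list[str]) -> list[ClientTool]:
    """Derive every tool's access list from the same source grants."""
    acl = {user: set(SOURCE_GRANTS[role]) for user, role in USER_ROLES.items()}
    # Each tool gets its own copy, but all copies come from one definition.
    return [
        ClientTool(name, {user: set(ds) for user, ds in acl.items()})
        for name in tool_names
    ]


def consistent(tools: list[ClientTool], user: str, dataset: str) -> bool:
    """True when every client gives the same answer for this user and dataset."""
    return len({tool.can_read(user, dataset) for tool in tools}) == 1
```

Because every client's access list is derived from `SOURCE_GRANTS`, a permission change is made in one place and cannot drift between tools.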

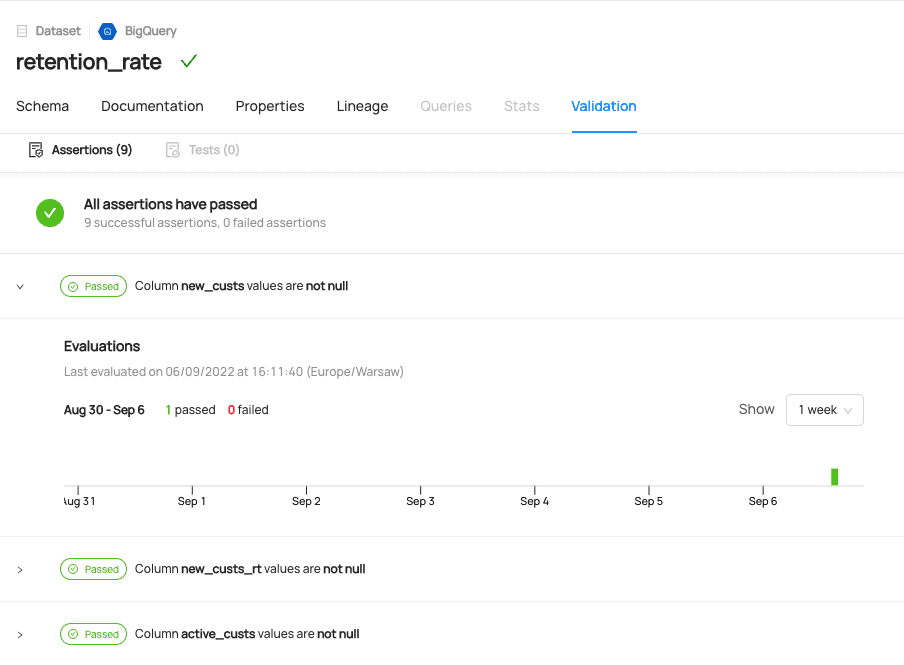

Data quality tests are defined and validated at development time. If any discrepancies occur, an alert is sent to a dedicated Slack channel. Moreover, the history of data quality checks is available via a data catalog, whose lineage functionality you can also leverage to troubleshoot issues in your data pipelines.
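As a rough illustration of the check-then-alert flow, the sketch below runs two checks modeled on dbt's built-in `not_null` and `unique` generic tests against in-memory rows and, if anything fails, builds a message in the JSON shape accepted by Slack incoming webhooks (`{"text": ...}`). The sample rows, column names and webhook URL are hypothetical; in the platform the checks are dbt tests and alerting is handled by the framework, not by this code.

```python
import json
import urllib.request
from collections import Counter
from typing import Optional


def not_null(rows: list[dict], column: str) -> list[dict]:
    """Return rows where the column is missing or None."""
    return [row for row in rows if row.get(column) is None]


def unique(rows: list[dict], column: str) -> list[object]:
    """Return non-null values that appear more than once (nulls are ignored)."""
    counts = Counter(row[column] for row in rows if row.get(column) is not None)
    return [value for value, n in counts.items() if n > 1]


def run_checks(rows: list[dict], column: str) -> dict[str, int]:
    """Run both checks and report the number of failures per check."""
    return {
        f"not_null_{column}": len(not_null(rows, column)),
        f"unique_{column}": len(unique(rows, column)),
    }


def build_alert(table: str, results: dict[str, int]) -> Optional[dict]:
    """Build a Slack webhook payload for failing checks, or None if all pass."""
    failed = {name: n for name, n in results.items() if n > 0}
    if not failed:
        return None
    lines = [f"- {name}: {n} failure(s)" for name, n in sorted(failed.items())]
    return {"text": f"Data quality checks failed for {table}:\n" + "\n".join(lines)}


def send_alert(webhook_url: str, payload: dict) -> None:
    """POST the payload to a Slack incoming webhook (the URL is a placeholder)."""
    request = urllib.request.Request(
        webhook_url,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
    )
    urllib.request.urlopen(request, timeout=10)
```

Keeping `build_alert` separate from `send_alert` lets the check logic be tested without network access.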

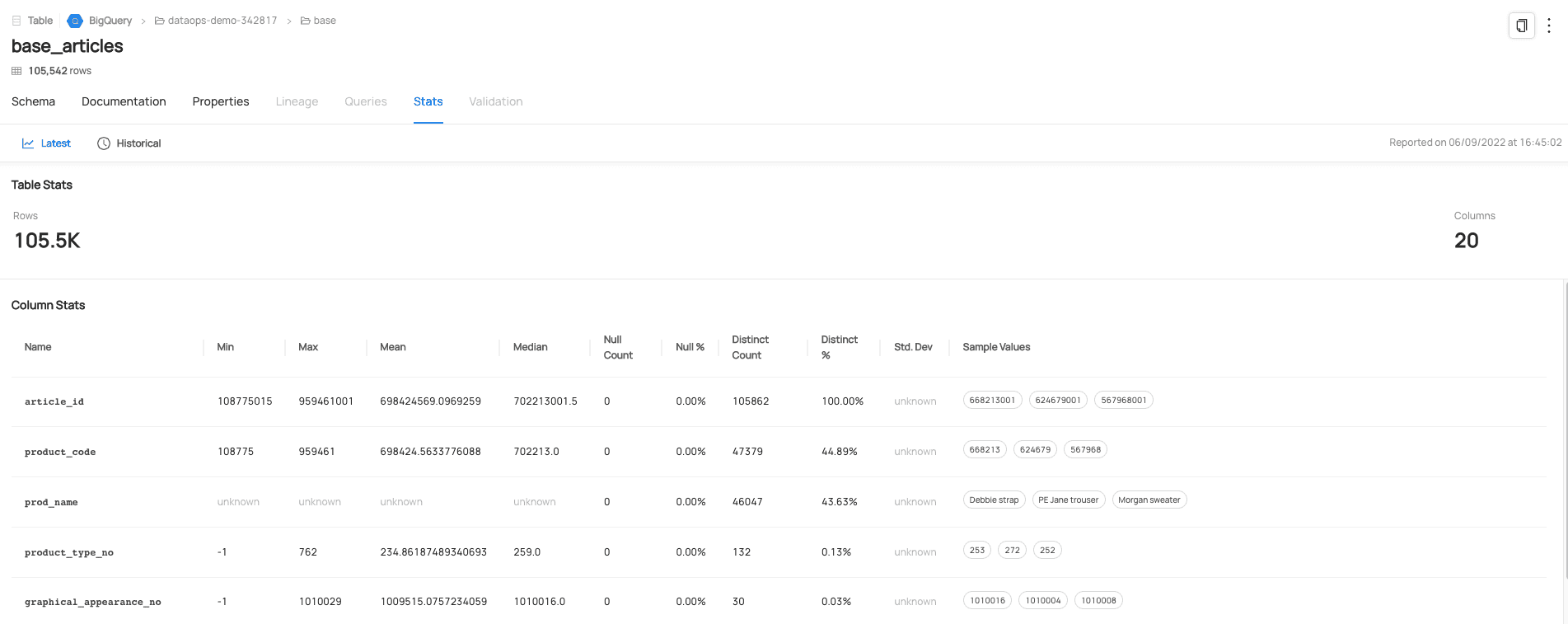

Thanks to integration with a data catalog (e.g. DataHub), data discovery is an integral part of the stack. This allows users to easily find the data and associated metadata they need for their job, including usage statistics, query history, data documentation and even data profiling.

Based on our experience, we know that managing a portfolio of data projects can be challenging in big companies. There is always some duplicated effort, and teams end up reinventing the wheel over and over again, which often leads to multiple standards and frameworks doing the same thing. We noticed that there is plenty of room for reusable, configurable components such as project structures, processes and user interfaces. As an organization or a team, you could build your own base of templates to standardize your projects and shorten your road to value. To learn more about how we do this, check out the data-pipelines-CLI package mentioned earlier.
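To make the template idea concrete, here is a minimal sketch that renders a new project skeleton from a shared template using Python's standard `string.Template`. The file layout and placeholder names are hypothetical; data-pipelines-CLI has its own template mechanism, which this code does not reproduce.

```python
from pathlib import Path
from string import Template

# Hypothetical organization-wide project template: relative path -> file body.
PROJECT_TEMPLATE = {
    "README.md": "# $project_name\n\nOwned by $team.\n",
    "dbt_project.yml": (
        "name: '$project_name'\nprofile: '$project_name'\nversion: '1.0.0'\n"
    ),
    "models/.gitkeep": "",
}


def render_project(values: dict[str, str]) -> dict[str, str]:
    """Fill every placeholder; substitute() raises KeyError if one is missing."""
    return {
        path: Template(body).substitute(values)
        for path, body in PROJECT_TEMPLATE.items()
    }


def write_project(root: Path, values: dict[str, str]) -> list[Path]:
    """Write the rendered files under root and return the created paths."""
    created = []
    for rel_path, content in render_project(values).items():
        target = root / rel_path
        target.parent.mkdir(parents=True, exist_ok=True)
        target.write_text(content)
        created.append(target)
    return created
```

Using `substitute()` rather than `safe_substitute()` makes a forgotten placeholder fail loudly instead of leaking `$project_name` into a generated project.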

For those who know our company well, it's no surprise to see open source solutions all over the place. We have always been enthusiastic about open source and… we still are. However, what matters most to us is seeing the world from the perspective of our clients' needs. Sometimes this shows that there are very competitive proprietary or managed solutions that are worth including in our modern data platform architecture.

That's why in our blueprints you'll often find a combination of open source, proprietary and managed solutions, driven by our experience.

Finally, let's check out the main user interfaces of our GID Modern Data Platform.

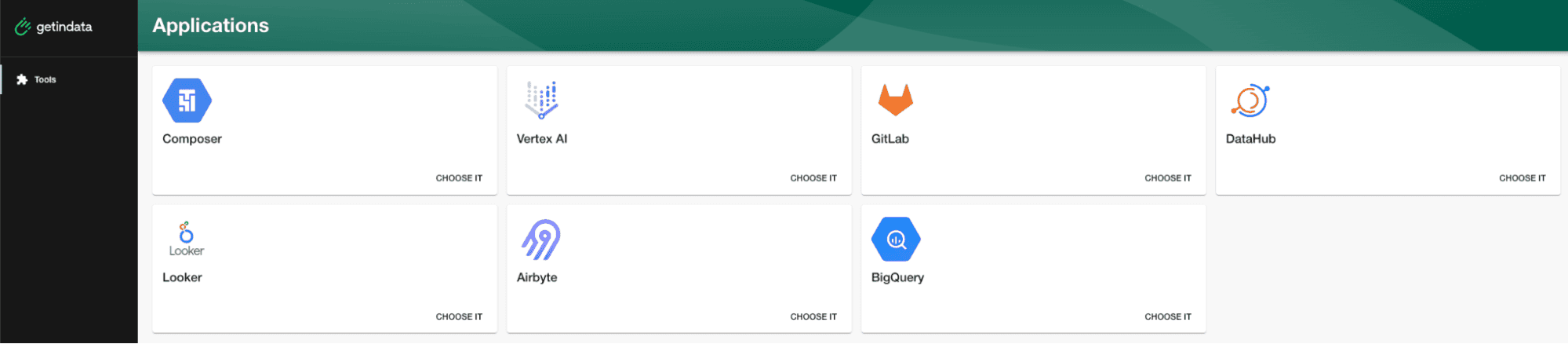

For the sake of user convenience and simplicity, we created a portal where, after a single sign-on, users can access all the tools in one place. This is what a simple version of the user's GID Portal could look like:

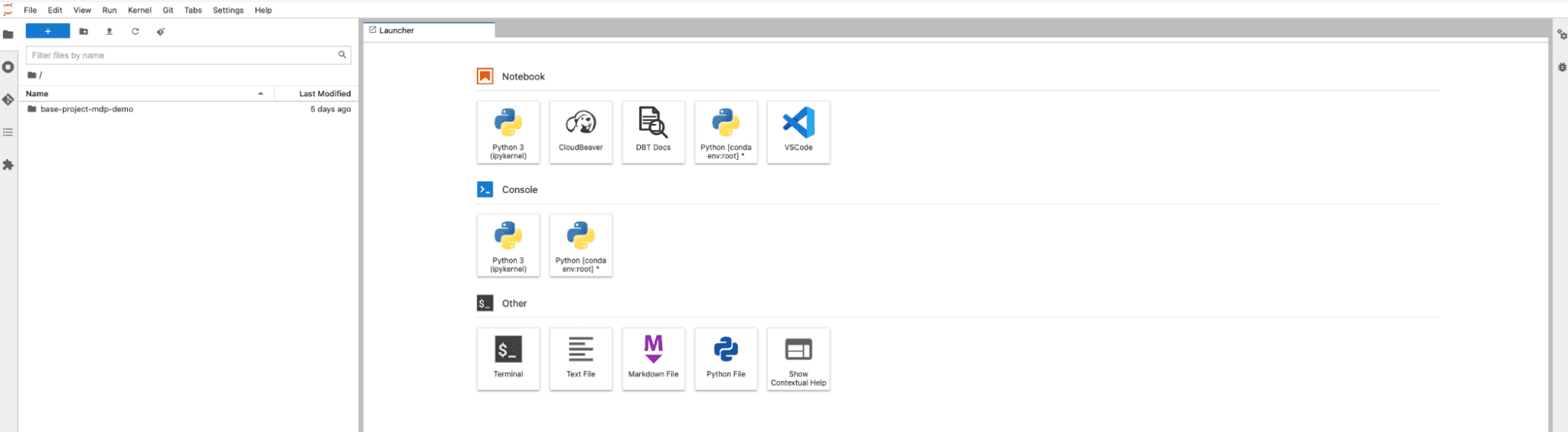

One of the icons in the GID Portal leads to the user's actual playground, with access to all the data wrangling tools: the GID MDP Workbench (here using Vertex AI on GCP). From here, the user can explore the data using CloudBeaver, access the VSCode IDE to define data transformations in dbt, or manage the whole pipeline via data-pipelines-CLI in the terminal.

We don't believe that there is such a thing as a generic, black-boxed modern data platform that fits every client. That's why, when embarking on a journey with a client, we make sure that the building blocks we put together don't collapse when market conditions or the client's needs change. With a solid foundation for our platform and framework in place, we work together with our clients to make sure that the setup and configuration reflect their key strategic goals. I encourage you to see our demo to get a better feel for the value it can bring to your organization. We also have some success stories to share: you will soon hear about one of our Modern Data Platform rollouts for a fintech client, so stay tuned!

As mentioned earlier, the foundations of our solution are open source, so why not grab your cloud credentials and give it a try? A tutorial with step-by-step onboarding to our platform is available here: https://github.com/getindata/first-steps-with-data-pipelines

We’d love to hear what you think about our solution - any feedback and comments are welcome.

If you would like to learn more about our stack, please watch a demo trailer below and sign up for a full live demo here.

Also, stay tuned for more content on our GID Modern Data Platform, including tutorials, use cases and future development plans. Please consider subscribing to our newsletter so you don't miss any valuable content.
