Money transfers from one account to another within one second, wherever you are? Volt.io is building the world’s first global real-time payment network, powered by open banking. To build a payment platform that is fast and reliable, they need to use huge amounts of data from different sources, such as translation status, transaction quality, availability of banks, quality of internal processes and more. To achieve this, Volt decided to create a platform that processes and combines data from all sources within a short time period, but they didn’t have advanced analytical or data engineering skills in-house. That's why they needed an experienced partner to kick-start their advanced analytics, reporting, data lake and machine learning initiatives. This was the moment GetInData started working with Volt to help them take the first step in their data analytics journey.

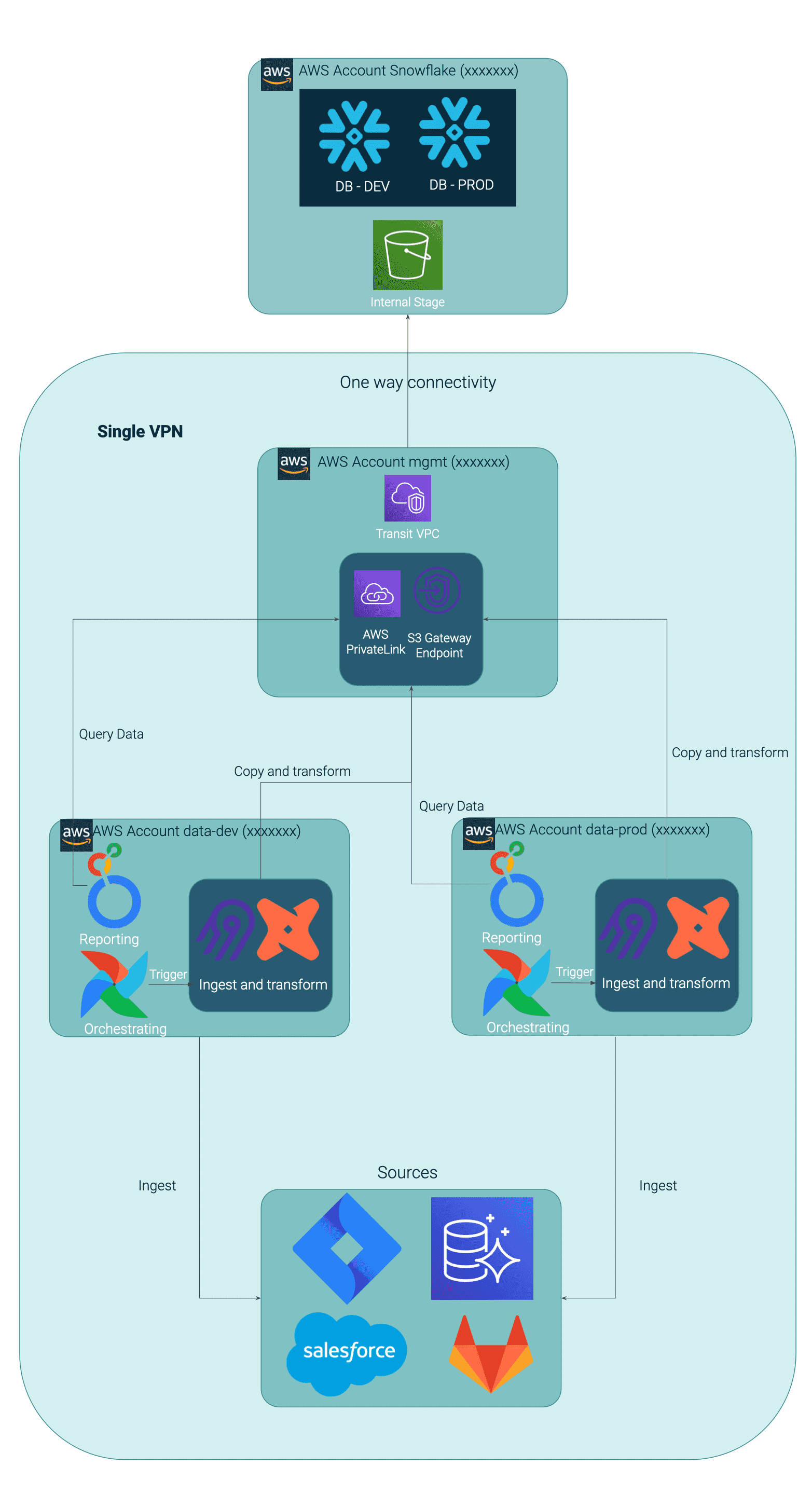

Within 4 months we designed and implemented a Modern Data Platform for them, based on cloud components for data ingestion (Airbyte), data transformation (dbt), orchestration (Amazon Managed Workflows for Apache Airflow) and data warehousing (Snowflake).

In this blog post, we describe the architecture in detail, to show you what data technology stack we used to build the MDP for Volt.io. But first, let’s take one step back.

The objective of the project was to establish the core of the data platform, giving Volt a base setup of key performance indicator dashboards, a self-service BI tool, ad-hoc analytics capabilities, a way to feed these insights back into their products, and room for future growth with a focus on ML. What's more, the overall architecture needed to comply with very strict security standards such as PSD2, PCI and GDPR.

We started the project (together with Data Edge) with an assessment of the problem and approach - after conducting a series of interviews with the stakeholders, we determined the current situation and identified the problems and needs of the client. This allowed us to define their technological requirements and business needs. The information we gathered confirmed that the implementation of the Modern Data Platform would not only cover the client's current requirements, but also open the door to the next steps.

When proposing the Modern Data Platform, we addressed a few crucial areas that bring business value to the customer. Firstly we focused on the need for self-service by the client. Thanks to the proposed architecture and by adopting lean and automated processes, the data platform could be scaled with added complexity without much maintenance work. By doing it this way we decreased the cost of maintenance and were able to use these savings to increase the platform functionality.

The second important area was the modularity of the platform. We built a platform that hosted modular services that could be distributed to cater for the growing number of data producers and consumers. Thanks to this approach, the business no longer had any limitations when it came to scaling the platform and was able to add more data sources without any platform interruptions. This increased the platform's stability, which in turn helped the Data Analytics team work smoothly.

After scalability, we addressed managed data ownership, to ensure compliance and to guard against unforeseen data quality issues. Security was an important part of the platform from the moment we started designing the architecture. We wanted the platform to be flexible but, at the same time, secure. Some of the data had to comply with very strict security standards, so we proposed components that fulfilled that requirement.

Lastly, we addressed the balance between upfront costs and the cost of maintenance, to arrive at the optimal total cost of ownership. With no long-term commitments and a pay-as-you-go platform in mind, we managed to keep payments to a minimum by leveraging cloud components that kept the cost of the platform transparent and controllable.

The platform was built around the modern data stack and deployed on the AWS cloud platform. We split the process of data management into data ingestion, data transformation, data presentation, data orchestration and data governance. The specific technologies are described below.

Every piece of this data technology stack was deployed and customized using Terraform and Ansible. Each tool runs in both a development and a production environment, to make sure that our processes are well tested before being deployed to production.

Let’s start the discussion of the data platform architecture with a key topic: security.

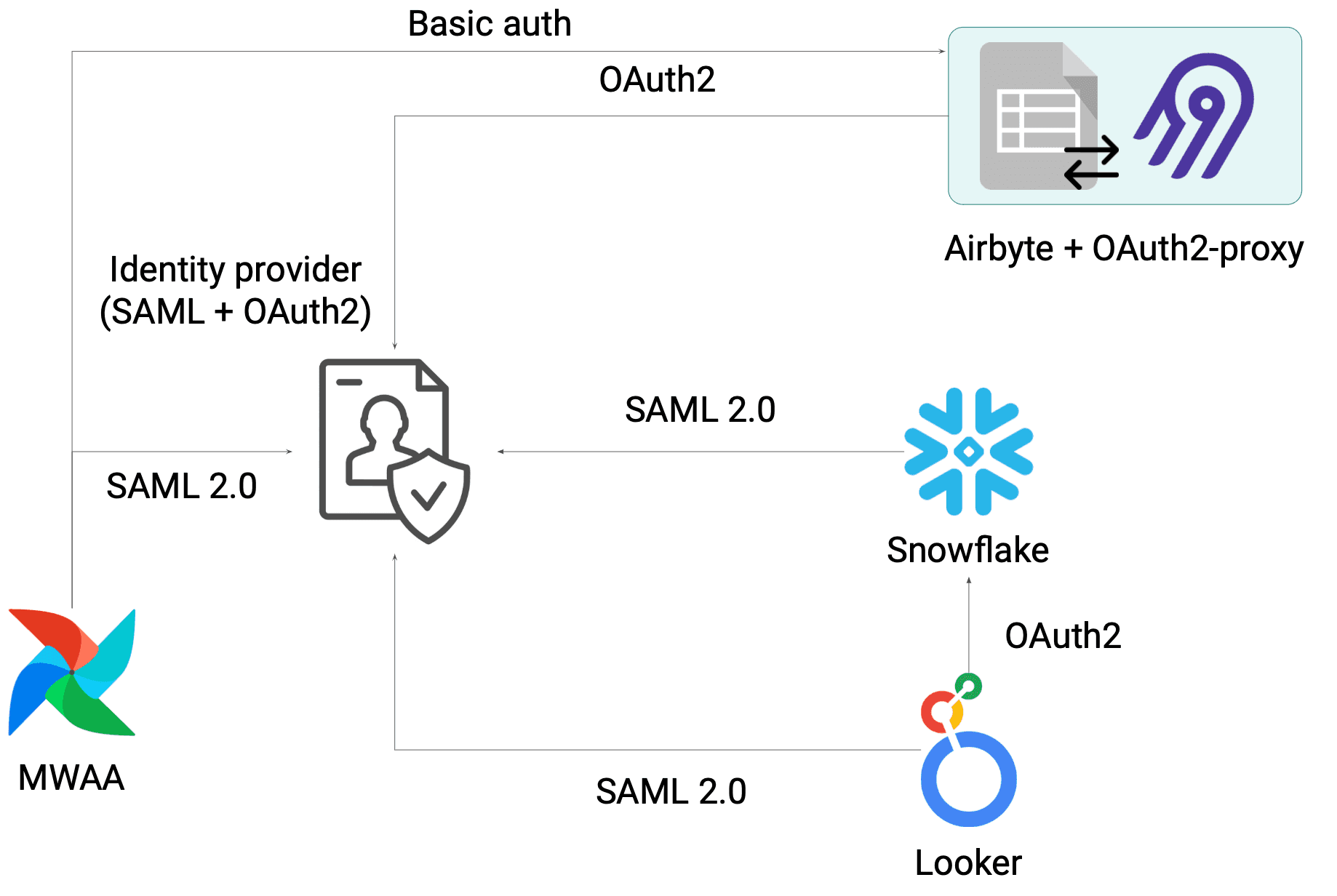

Data security is crucial for every IT project, and data platforms are no different. The modern data platform can also be tricky, as a lot of tools don't support authentication and authorization themselves, so their endpoints have to be secured by proxying. For example, Airbyte had no authentication of its own, so we had to build this ourselves.

The next thing was to join all of the pieces together. For user management and authorization, each system, such as Snowflake or Looker, could have had its own implementation. Instead, we decided to integrate Snowflake and Looker with SSO, which is currently provided by Google Workspace using the SAML 2.0 mechanism. This is a widely adopted way of providing unified security at an enterprise level. Thanks to this, it will be possible to change the identity provider (IdP) in the future (e.g. to ADFS or Okta).

We wanted to secure the data flow as much as possible. Every piece of the data platform lived in the same VPC, so traffic between components did not need to leave the local network. Even Snowflake used private links to communicate with the rest of the platform.

The data platform was one thing, but to keep the data safe we also needed secure CI processes and deployment. Even though the data never left the local VPC, we ran our GitLab CI/CD jobs on Fargate workers to make sure that the data was secured as much as possible there too.

AWS Secrets Manager is where all the secrets were stored, and from there we propagated everything to downstream processes. Apache Airflow pulled Secrets Manager values dynamically into its own variables; we only had to follow specific naming conventions. The dbt process scheduled on MWAA used environment variables, which were delivered using Airflow plugins. The CI/CD processes also used Secrets Manager values instead of reading them from GitLab variables.

One drawback of Airbyte used to be configuration management, until Octavia CLI came along. With YAML files separated into sources, destinations and connections, we were able to easily write scripts around the configuration, which allowed us to use Secrets Manager to fill environment variables during CI/CD and apply the configuration. Imagine the moment when a password needs to be rotated: we only need to re-run the job on GitLab to push the new configuration to the Airbyte instance in a secure way.
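The idea of filling configuration placeholders from a secret store can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the in-memory `FAKE_SECRET_STORE`, the secret path, the path-to-variable naming convention and the YAML content are all hypothetical, and a real job would read the values from AWS Secrets Manager rather than a dictionary.

```python
from string import Template

# Hypothetical stand-in for AWS Secrets Manager. In a real CI/CD job the
# values would be fetched from the secret store instead of hard-coded here.
FAKE_SECRET_STORE = {
    "airbyte/source-aurora/password": "example-password",
}


def secret_name_to_env_var(secret_name: str) -> str:
    """Turn a secret path into an env variable name (assumed convention)."""
    return secret_name.replace("/", "_").replace("-", "_").upper()


def build_env(secret_store: dict) -> dict:
    """Map every secret to the env variable the YAML templates refer to."""
    return {secret_name_to_env_var(k): v for k, v in secret_store.items()}


def render_config(yaml_text: str, env: dict) -> str:
    """Fill ${VAR} placeholders; a missing secret raises KeyError."""
    return Template(yaml_text).substitute(env)


# Hypothetical source definition with the password left as a placeholder.
SOURCE_YAML = """\
resource_name: aurora
configuration:
  username: airbyte
  password: ${AIRBYTE_SOURCE_AURORA_PASSWORD}
"""

rendered = render_config(SOURCE_YAML, build_env(FAKE_SECRET_STORE))
print(rendered)
```

Because `substitute` fails on a missing placeholder, a forgotten secret stops the job instead of pushing a half-filled configuration. Rotating a password then only means updating the stored value and re-running the job.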

We can now continue our journey around the data platform by moving on to the data warehouse at the heart of our solution: Snowflake.

Snowflake gave us the flexibility to scale compute power and turn it off whenever needed. Its powerful and flexible SQL makes it possible to deliver everything faster. Snowflake integrates smoothly with dbt, which runs the models on Snowflake's engine. With a set of defined roles, the data can be managed securely, hiding every piece that should not be visible to a certain group of people.

Snowflake provides a tremendous number of functions out of the box, and is easily extensible with user-defined functions (written in languages like Python, Java and more) and also by calling, for example, Lambda functions or external APIs. If you prefer more of a coding approach, you can use Snowpark.

To make sure that data does not leave the local VPC network, you can use the Business Critical edition with a private link in place.

Snowflake is the central point of the data platform, so let’s now introduce how the data moves between the integrated systems to Snowflake.

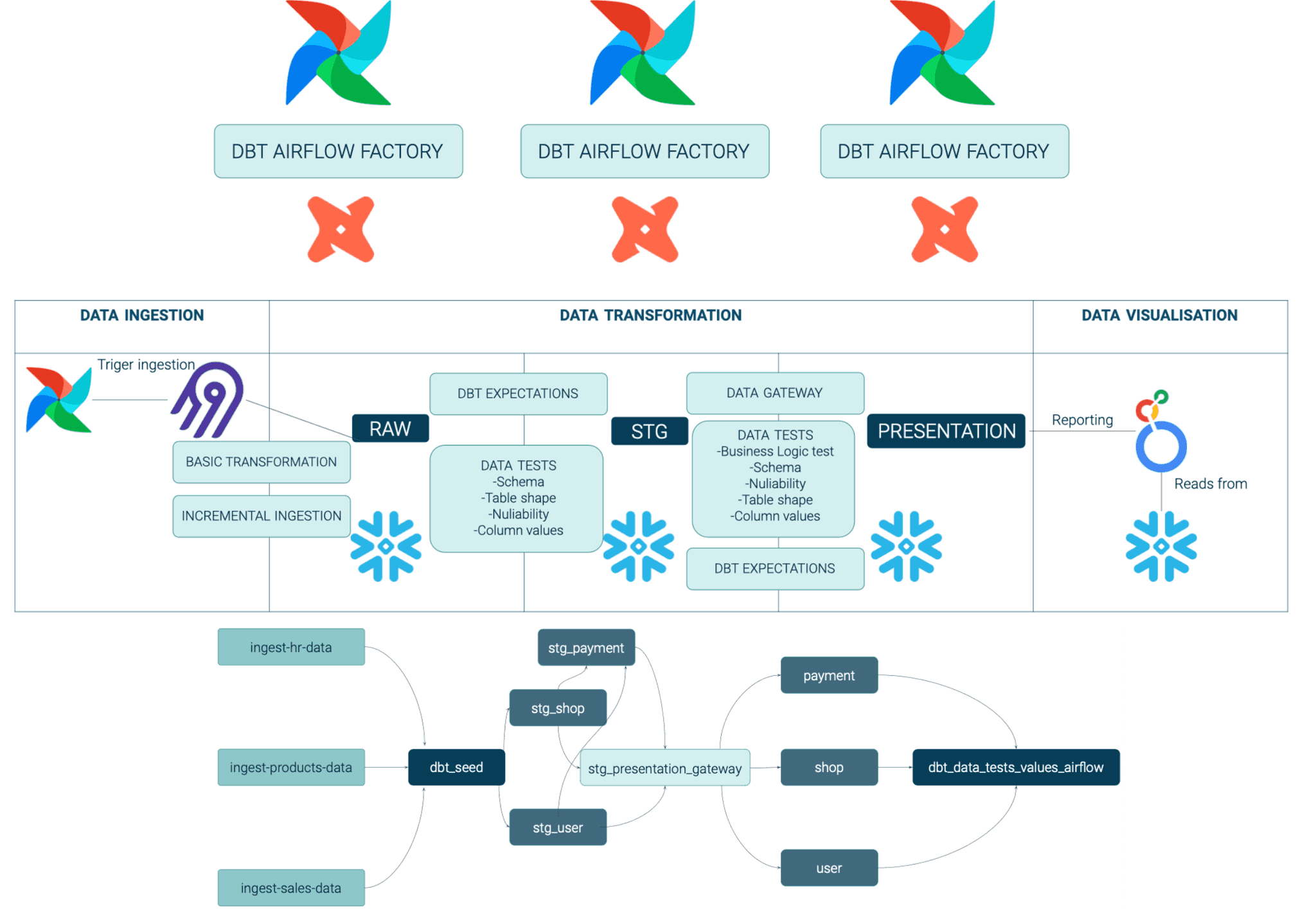

Data flow starts with data ingestion in Airbyte. The ingestion process was triggered by Apache Airflow: we decided to keep scheduling in one place, so we switched off Airbyte's built-in scheduler and triggered syncs from Airflow instead, using the Airbyte provider's AirbyteTriggerSyncOperator. Data is loaded incrementally based on a date cursor column, to avoid repeatedly saving the same data and, at the same time, to reduce costs. All source streams were ingested using Airbyte without difficulty. One drawback was that Airbyte's processing time could become quite high as the number of records grew.

Additionally, all of the tables from Aurora received a basic transformation. This data is saved in the RAW schema; later, dbt processes it and saves the result in the STG schema. Raw data is tested with simple tests covering schema (column names, types etc.), table shape (number of columns, number of records), nullability and column values, to make sure that our data is reliable and can be the source for later BI and reporting processes.
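Cursor-based incremental loading can be illustrated with a small, self-contained Python sketch. It does not reproduce Airbyte's implementation; the `updated_at` column, the state dictionary and the sample rows are assumptions made for the example.

```python
from datetime import date


def incremental_sync(source_rows, state, cursor_field="updated_at"):
    """Return rows newer than the saved cursor, plus the updated state."""
    last_cursor = state.get("cursor")
    new_rows = [
        row for row in source_rows
        if last_cursor is None or row[cursor_field] > last_cursor
    ]
    if new_rows:
        # Remember the highest cursor value seen so far for the next run.
        state = {"cursor": max(row[cursor_field] for row in new_rows)}
    return new_rows, state


# Hypothetical source table with a date cursor column.
source = [
    {"id": 1, "updated_at": date(2022, 5, 1)},
    {"id": 2, "updated_at": date(2022, 5, 2)},
]

first_batch, state = incremental_sync(source, {})      # full first load
second_batch, state = incremental_sync(source, state)  # nothing new
source.append({"id": 3, "updated_at": date(2022, 5, 3)})
third_batch, state = incremental_sync(source, state)   # only the new row
```

The strict comparison keeps re-reads to a minimum, but it would miss a row that arrives later with the same cursor value as the last sync. Using `>=` instead re-reads that boundary at the cost of duplicates that have to be removed downstream.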

Aggregates in the staging phase are also tested using unit-like tests, and this process additionally includes some business logic tests to make sure that what we calculate makes sense in business terms.
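As a rough illustration of these test categories (schema, table shape, nullability, and a business rule on an aggregate), here is a plain-Python sketch. On the platform itself such checks were expressed as dbt tests; the column names, the `SCHEMA` mapping and the success-rate rule below are hypothetical.

```python
def check_raw_table(rows, expected_columns, not_null=(), min_rows=1):
    """Return a list of human-readable failures (empty means all good)."""
    failures = []
    if len(rows) < min_rows:
        failures.append(f"expected at least {min_rows} rows, got {len(rows)}")
    for index, row in enumerate(rows):
        if set(row) != set(expected_columns):
            failures.append(f"row {index}: columns {sorted(row)} do not match schema")
            continue
        for column, expected_type in expected_columns.items():
            value = row[column]
            if value is None:
                if column in not_null:
                    failures.append(f"row {index}: {column} is null")
            elif not isinstance(value, expected_type):
                failures.append(f"row {index}: {column} has type {type(value).__name__}")
    return failures


def check_success_rate(aggregate):
    """Business rule (assumed): successes cannot exceed total payments."""
    return 0 <= aggregate["successful_payments"] <= aggregate["total_payments"]


# Hypothetical schema and sample rows.
SCHEMA = {"payment_id": str, "amount": float, "bank": str}

good_rows = [{"payment_id": "p1", "amount": 10.0, "bank": "bank-a"}]
bad_rows = [
    {"payment_id": None, "amount": 10.0, "bank": "bank-a"},
    {"payment_id": "p2", "amount": "10", "bank": None},
]

print(check_raw_table(good_rows, SCHEMA, not_null=("payment_id",)))
print(check_raw_table(bad_rows, SCHEMA, not_null=("payment_id",)))
```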

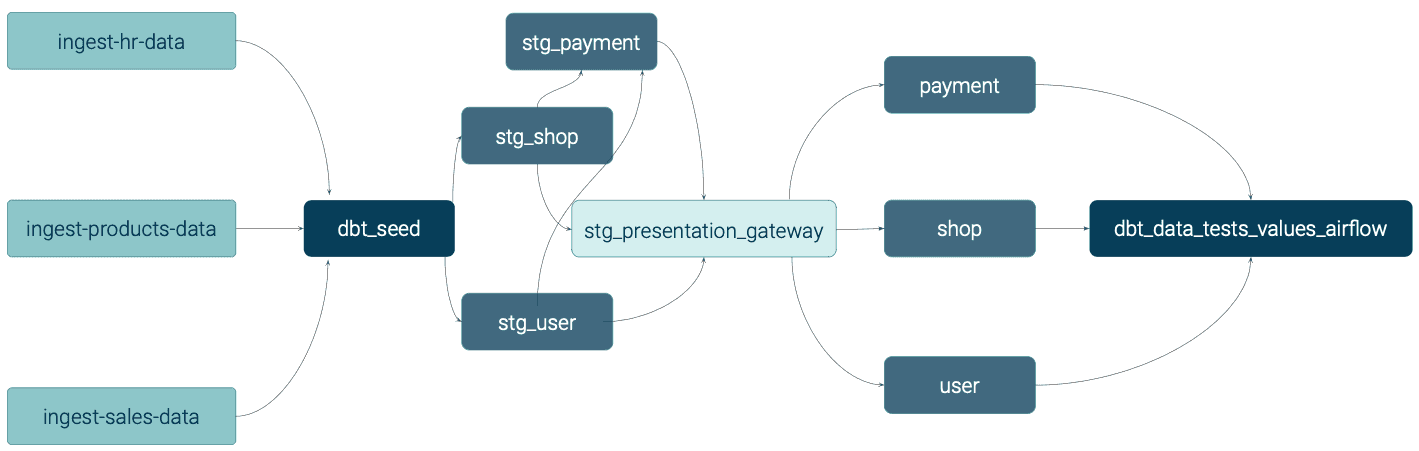

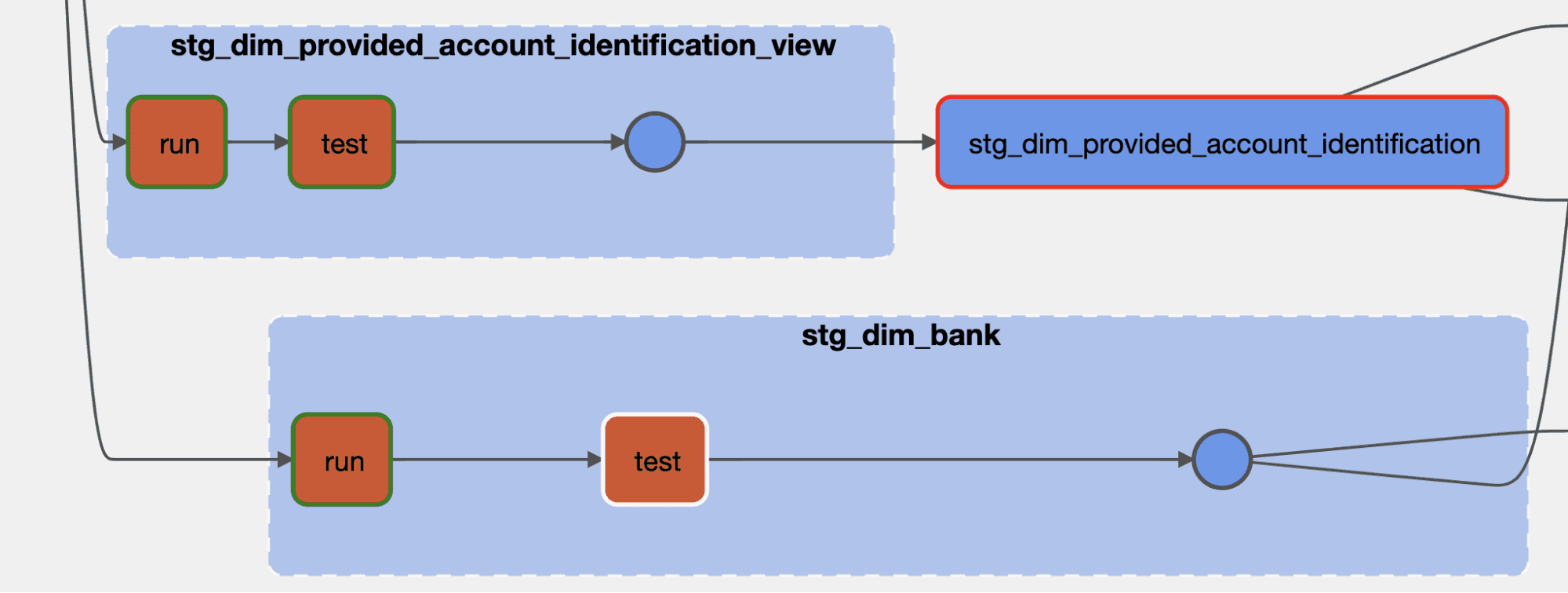

dbt-expectations is an awesome package that brings Great Expectations-style tests to your dbt project. Its wide list of predefined tests can speed up defining the tests for your application. We also included our own tailored set of tests written as dbt macros.

To make sure that our data was consistent between the staging and presentation layers and that our processing behaved like a reliable transaction (atomicity), we created a gateway between the staging layer and the presentation layer to serve the data to Looker in an atomic way. It's worth mentioning that we didn’t write any Airflow operators representing dbt models ourselves: we used dbt-airflow-factory, which automatically translates your dbt project into Airflow tasks and supports the gateway between the staging and presentation layers. It also runs the dbt seed before each pipeline run and ensures that this occurs after all model tests are performed. Furthermore, if any test fails, downstream dependencies will not be started. This is a valuable feature when it comes to data consistency and reliability. We will talk more about data quality in a later chapter.

The data from staging was saved to the presentation layer using 1:1 rewrites, which ensures data correctness.
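The all-or-nothing idea behind serving data to the presentation layer can be sketched as follows. This is a simplified model using in-memory dictionaries rather than Snowflake schemas; the function name `publish` and the table names are hypothetical.

```python
def publish(staging, presentation, required_tables):
    """Return a new presentation layer, or raise without changing anything."""
    missing = [name for name in required_tables if name not in staging]
    if missing:
        raise ValueError(f"staging is incomplete, missing: {missing}")
    # Copy tables 1:1 into a fresh mapping; the caller swaps it in as a whole.
    refreshed = {name: list(staging[name]) for name in required_tables}
    return {**presentation, **refreshed}


# Hypothetical staging output and current presentation layer.
staging = {"dim_bank": [{"id": 1}], "fct_payment": [{"id": 10}]}
presentation = {"dim_bank": []}

presentation_v2 = publish(staging, presentation, ["dim_bank", "fct_payment"])
```

Since the old mapping is never modified, consumers see either the previous version of every table or the new version of every table, never a mixture.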

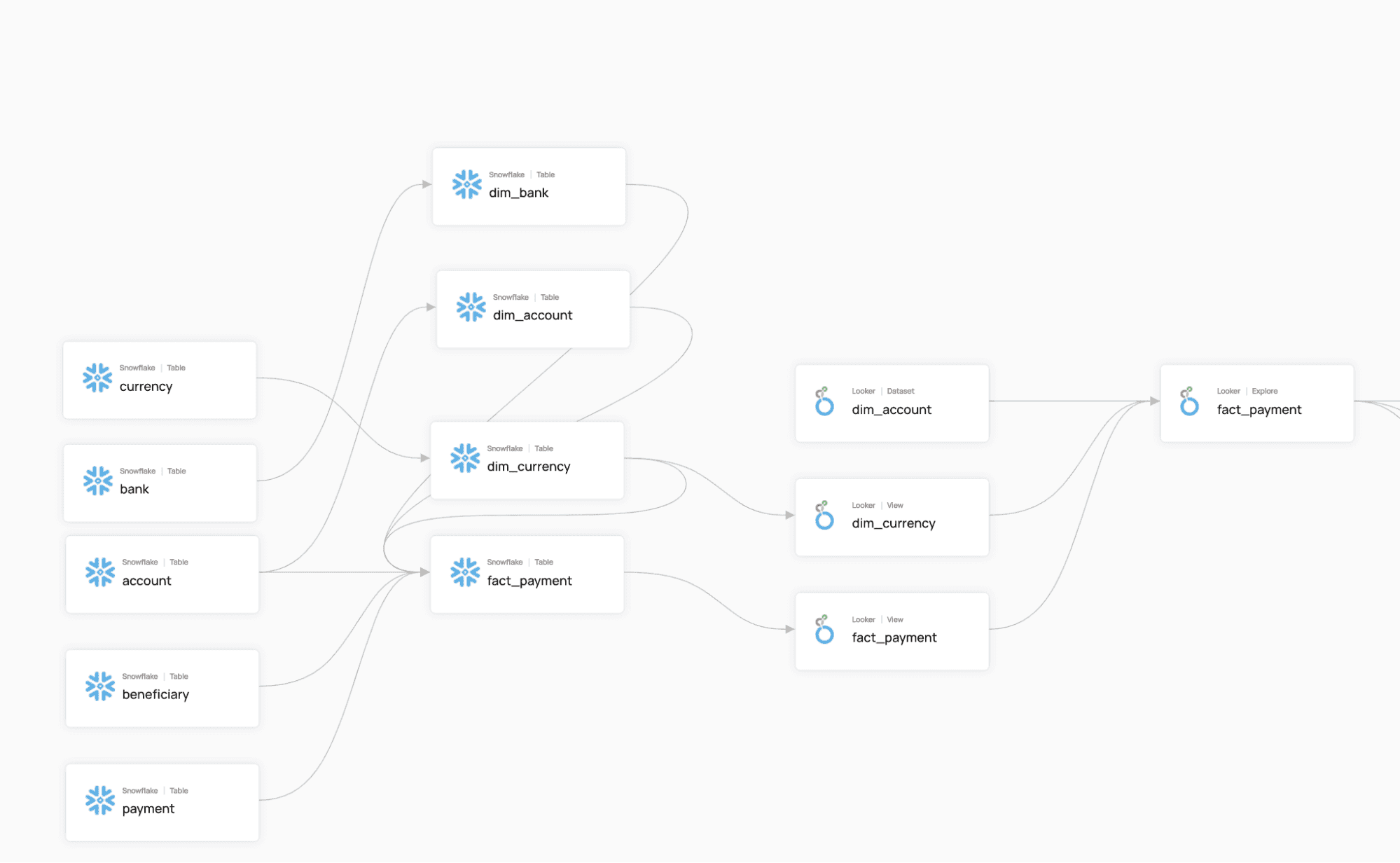

As visible in the images, we tried to follow the Kimball approach to data modeling, with some deviations. The initial plan was to use dbt snapshots to create SCD2-like models, but because the only way to represent ids there was the hardcoded hashing function, we decided to write our own implementation based on dbt macros and an incremental approach. We ended up with SCD2-like tables with numeric identifiers created from Snowflake sequences.

So how did we provision all of these tools to make them work together? Terraform, along with Ansible, helped us to automate the infrastructure provisioning and ensure that all the components could communicate with each other in a secure way, including user access.
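To make the SCD2-like modelling described above more concrete, here is a minimal Python sketch of the incremental logic: close the current version of a changed record and append a new one with a numeric surrogate identifier. It is not the dbt macro used in the project; `itertools.count` stands in for a Snowflake sequence, and the table and column names are hypothetical.

```python
from itertools import count


def apply_scd2(dimension, incoming, business_key, tracked, load_date, sequence):
    """Append new versions for changed records and close the old ones."""
    current = {
        row[business_key]: row for row in dimension if row["valid_to"] is None
    }
    for record in incoming:
        existing = current.get(record[business_key])
        if existing and all(existing[c] == record[c] for c in tracked):
            continue  # nothing changed, keep the current version
        if existing:
            existing["valid_to"] = load_date  # close the previous version
        new_row = {
            "surrogate_id": next(sequence),  # numeric id from the sequence
            business_key: record[business_key],
            **{c: record[c] for c in tracked},
            "valid_from": load_date,
            "valid_to": None,
        }
        dimension.append(new_row)
        current[record[business_key]] = new_row
    return dimension


sequence = count(1)  # stand-in for a Snowflake sequence
dim_merchant = []
key, cols = "merchant_id", ["country"]

apply_scd2(dim_merchant, [{"merchant_id": "m1", "country": "PL"}], key, cols, "2022-01-01", sequence)
apply_scd2(dim_merchant, [{"merchant_id": "m1", "country": "PL"}], key, cols, "2022-01-15", sequence)
apply_scd2(dim_merchant, [{"merchant_id": "m1", "country": "DE"}], key, cols, "2022-02-01", sequence)
```

After these three loads the dimension holds two versions of merchant `m1`: the unchanged second load adds nothing, and the third load closes the first version and opens a new one.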

The whole infrastructure is manageable at the repository level and can be deployed on any AWS account using Terraform and Ansible. The platform's services are fully managed by Terraform and Ansible, and all required networks and permissions were fully automated as well.

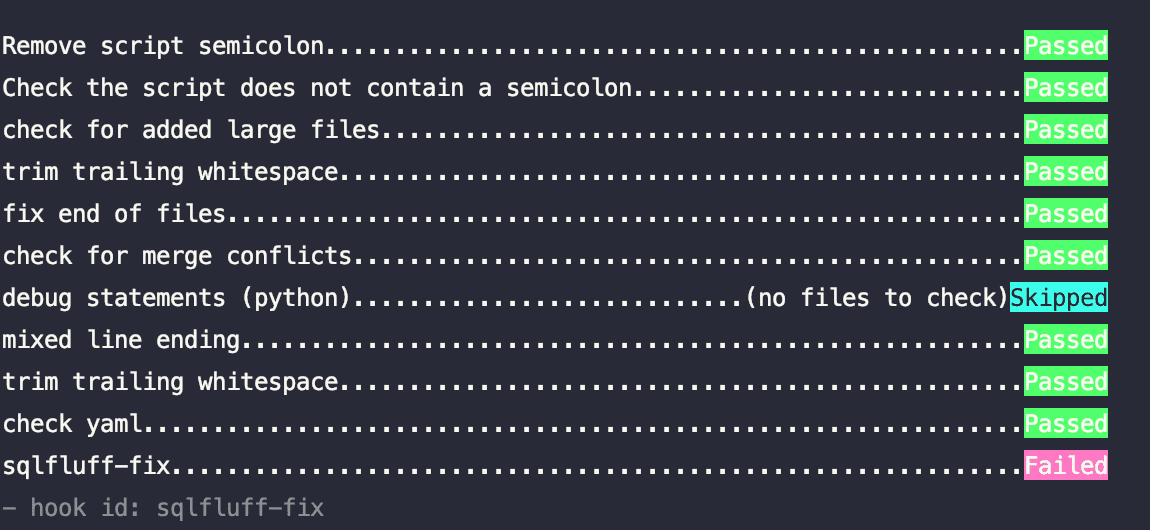

At the repository level, pre-commit hooks take care of routine tasks automatically during development on local machines.

Whenever a merge request is opened, the Terraform code is verified using the terraform validate and plan commands. Only once the MR has been accepted by at least two team members can it be deployed to the dev and prod environments.

Making data reliable has recently become a hot topic in the data space. To avoid the garbage in, garbage out effect, you need to make sure that your data is tested in many places. dbt has a lot of built-in tools that give you an easy way of testing your data.

Additionally, to avoid data inconsistency, each Airflow task representing a dbt model is followed by a testing phase, so whenever a model's tests fail, no downstream process will be continued. The good thing about dbt-airflow-factory is that you get this out of the box. Furthermore, to create a kind of circuit breaker, dbt-airflow-factory allows you to define a gateway between the data zones (bronze, silver and gold) to make sure that Airflow holds all tasks until the previous zone as a whole is completed.

This means that no presentation layer process will be started until all staging is finished.
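The two rules described above (skip everything downstream of a failed test, and hold the next zone until the previous one has fully passed) can be modelled in a few lines of Python. This is a conceptual sketch, not how dbt-airflow-factory or Airflow implement it; the function `run_zones`, the model names and the dependency map are hypothetical.

```python
def run_zones(zones, dependencies, build, test):
    """Build and test models zone by zone, returning a status per model."""
    status = {}
    zone_blocked = False
    for zone_name, models in zones:
        for model in models:  # assumed to be listed in dependency order
            upstream_ok = all(
                status.get(dep) == "passed" for dep in dependencies.get(model, ())
            )
            if zone_blocked or not upstream_ok:
                status[model] = "skipped"
                continue
            build(model)
            status[model] = "passed" if test(model) else "failed"
        # Gateway: later zones run only if every model in this zone passed.
        if any(status[model] != "passed" for model in models):
            zone_blocked = True
    return status


built = []
zones = [("staging", ["stg_a", "stg_b", "stg_c"]), ("presentation", ["dim_a"])]
dependencies = {"stg_c": ["stg_a"], "dim_a": ["stg_b"]}

# Simulate a failing test on stg_a.
status = run_zones(
    zones, dependencies, build=built.append, test=lambda model: model != "stg_a"
)
print(status)
```

Note that `dim_a` is skipped even though its only direct dependency, `stg_b`, passed: the gateway treats the staging zone as a whole.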

Testing data is valuable, but it means little if it only happens on your local machine: there is no chance of catching regressions after changes have been made. In an agile approach to software development changes are inevitable, so we need to make sure that the changes we make are properly tested. We divided our testing processes into a few stages.

Every developer has dbt installed on a local machine and makes sure that the data produced meets the required quality standards. Separate Snowflake schemas and dbt profiles keep the work of each developer independent from one another. Using test tags, data samples and a feedback loop can be really efficient and makes the development process faster. We need to make sure of this each time we run the lightweight tests.

Before the code is even pushed to the repository, it is tested, and its quality is verified and linted using sqlfluff.

CI/CD tests run on a larger data scope to make sure that we didn’t break any important part of our solution. On CI/CD we also only run lightweight tests and integration tests based on a data subset.

Testing in the data pipeline on Airflow: after each model task, tests are run against that data. If a test fails, no downstream process will start. Additionally, data ingestion is part of the data transformation pipeline, to confirm that each step uses the newest possible data and that there is no delay causing a 2-day gap.

After the staging phase we also included a gateway that acts as a circuit breaker, ensuring that we produce either all the tables or none of them.

Looker is a great tool that brings a software-like approach to creating dashboards: before a dashboard is visible to a broader audience, it is tested on local branches by developers. Looker integrates well with Snowflake, which helps with creating dashboards and data visualizations.

We used two Looker instances, dev and prod. The main purpose of the dev instance is quality assurance; it uses a separate GitLab branch, whereas prod uses the main branch.

The development approach is similar to the other solutions: GitLab CI pipelines must validate the LookML models before they can be merged into the main branch. To validate their correctness we used tools like:

Access to Looker was managed using Google SSO, as described in the security section.

Make sure that you understand the data and know where to find any downstream processes affected by it (the data as a product paradigm). It's good to have a visual representation that shows what is happening. When building a solution around dbt, the first thing that comes to mind is dbt docs, which can help you visualize the data pipelines built by your team (we published dbt docs on GitLab Pages and updated them after each merge to the main branch). However, what if we want to know which table is used by which dashboard, or which data is produced by which tool? To answer those questions we used DataHub, a tool for managing your data that shows exactly what is happening in your data pipelines. Its rich data catalog and support for multiple tools make DataHub easy to use and exceptionally useful when it comes to understanding your data pipeline processes.

If you want to, check our GitHub here.

The Modern Data Platform is just the beginning of the Volt data journey, but this allowed them to take their first step towards becoming a data-driven organization. Now Volt is able to use a Modern Data Platform that provides performance indicator dashboards, a self-service BI-tool and ad-hoc analytics capabilities. In the near future they will consider ML and MLOps extensions.

The Data Platform we built for Volt is strongly connected with the GetInData Modern Data Platform we developed at our GetInData DataOps Labs, a self-service solution for Analytics Engineers which you can read more about here. If you would like to see how it works live, please sign up for a full live demo here.
