The Kubeflow Pipelines (KFP) project has been growing in popularity in recent years, mainly thanks to its capabilities: you can orchestrate almost any machine learning workflow and run it on a Kubernetes cluster. Although KFP is powerful, its installation process can be painful, especially on cloud providers other than Google (the main contributor to the Kubeflow project). Its complexity and steep learning curve seem to discourage data scientists from even giving it a go. At GetInData, we have developed a platform-agnostic Helm Chart for Kubeflow Pipelines that lets you get started within minutes, whether you're using GCP or AWS, or want to run KFP locally.

How to run Kubeflow Pipelines on a local machine?

Before you start, make sure you have the following software installed:

- Docker with about 10 GB of RAM reserved and at least 20 GB of free disk space,

- Helm v3.6.3 or newer,

- kind.
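If you want to check these prerequisites from a script, the helper functions below are one possible sketch; they are not part of GetInData's chart. They assume a bash shell and a sort implementation that supports version sorting (sort -V). They also check for kubectl, because the later steps of this guide call it and kind does not install it for you. The Helm version is read from helm version --short, which prints a string such as v3.12.0+gc9f554d.

```shell
# require_cmd NAME (hypothetical helper): fail if NAME is not on the PATH.
require_cmd() {
  if ! command -v "$1" >/dev/null 2>&1; then
    echo "missing required command: $1" >&2
    return 1
  fi
}

# normalize_version STRING: turn e.g. "v3.12.0+gc9f554d" into "3.12.0".
normalize_version() {
  local v="$1"
  v="${v#v}"     # drop a leading "v"
  v="${v%%+*}"   # drop build metadata after "+"
  printf '%s\n' "$v"
}

# version_ge A B: succeed if version A >= version B.
# Assumes "sort -V" (version sort) is available.
version_ge() {
  [ "$(printf '%s\n%s\n' "$1" "$2" | sort -V | head -n1)" = "$2" ]
}

# check_prerequisites: verify the tools used in this guide are installed
# and that Helm is v3.6.3 or newer.
check_prerequisites() {
  local cmd helm_version
  for cmd in docker kind helm kubectl; do
    require_cmd "$cmd" || return 1
  done
  helm_version="$(normalize_version "$(helm version --short)")"
  if ! version_ge "$helm_version" 3.6.3; then
    echo "Helm $helm_version found, but v3.6.3 or newer is required" >&2
    return 1
  fi
  echo "all prerequisites found (Helm $helm_version)"
}
```

Calling check_prerequisites stops at the first missing tool, so you can fix problems one at a time. It does not check Docker's memory or disk settings; those still need to be verified in Docker's own configuration.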

Once you have all of the required software, the installation is just a breeze!

Create a local kind cluster:

```shell
kind create cluster --name kfp --image kindest/node:v1.21.14
```

It usually takes 1-2 minutes to spin up a local cluster.
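If you script the setup, keep in mind that kind create cluster fails when a cluster with the same name already exists. The following is a minimal idempotent wrapper, written in bash. It assumes that kind get clusters lists one cluster name per line, and ensure_kind_cluster is only an illustrative name.

```shell
# ensure_kind_cluster NAME IMAGE (hypothetical helper): create the kind cluster
# only if "kind get clusters" does not already list a cluster called NAME.
ensure_kind_cluster() {
  local name="$1" image="$2"
  # -x: match the whole line, -F: treat the name as a fixed string, not a regex
  if kind get clusters 2>/dev/null | grep -qxF "$name"; then
    echo "kind cluster '$name' already exists, skipping creation"
  else
    kind create cluster --name "$name" --image "$image"
  fi
}

# Usage, with the values from this guide:
# ensure_kind_cluster kfp kindest/node:v1.21.14
```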

Install Kubeflow Pipelines from GetInData's Helm Chart:

```shell
helm repo add getindata https://getindata.github.io/helm-charts/
helm install my-kubeflow-pipelines getindata/kubeflow-pipelines --version 1.6.2 \
  --set platform.managedStorage.enabled=false \
  --set platform.cloud=gcp \
  --set platform.gcp.proxyEnabled=false
```
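The same settings can also be kept in a values file, which is easier to put under version control than a chain of --set flags. Helm turns each dotted --set key into nested YAML maps, so the bash sketch below writes an equivalent file. The function name write_kfp_values and the file name kfp-values.yaml are only illustrative.

```shell
# write_kfp_values FILE (hypothetical helper): write Helm values equivalent to
#   --set platform.managedStorage.enabled=false
#   --set platform.cloud=gcp
#   --set platform.gcp.proxyEnabled=false
write_kfp_values() {
  cat > "$1" <<'EOF'
platform:
  cloud: gcp
  managedStorage:
    enabled: false
  gcp:
    proxyEnabled: false
EOF
}

# Usage:
# write_kfp_values kfp-values.yaml
# helm install my-kubeflow-pipelines getindata/kubeflow-pipelines --version 1.6.2 \
#   --values kfp-values.yaml
```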

Now wait a few minutes (usually up to 5, depending on your machine) for the local kind cluster to start all of the components. Don't worry if the ml-pipeline or metadata-grpc-deployment pods are in the CrashLoopBackOff state for a while. They will become ready once the services they depend on have started.

The KFP instance is ready once every pod reports the Running status with all of its containers ready (1/1). You can check this with:

```shell
kubectl get pods
```

| NAME | READY | STATUS | RESTARTS | AGE |
|---|---|---|---|---|
| cache-deployer-deployment-db7bbcff5-pzvwx | 1/1 | Running | 0 | 7m42s |
| cache-server-748468bbc9-9nqqv | 1/1 | Running | 0 | 7m41s |
| metadata-envoy-7cd8b6db48-ksbkt | 1/1 | Running | 0 | 7m42s |
| metadata-grpc-deployment-7c9f96c75-zqt2q | 1/1 | Running | 2 | 7m41s |
| metadata-writer-78f67c4cf9-rkfkk | 1/1 | Running | 0 | 7m42s |
| minio-6d84d56659-gcrx9 | 1/1 | Running | 0 | 7m41s |
| ml-pipeline-8588cf6787-sp68f | 1/1 | Running | 1 | 7m42s |
| ml-pipeline-persistenceagent-b6f5ff9f5-qzmsl | 1/1 | Running | 0 | 7m42s |
| ml-pipeline-scheduledworkflow-6854cdbb8d-ml5mf | 1/1 | Running | 0 | 7m42s |
| ml-pipeline-ui-cd89c5577-qhgbc | 1/1 | Running | 0 | 7m42s |
| ml-pipeline-viewer-crd-6577dcfc8-k24pc | 1/1 | Running | 0 | 7m42s |
| ml-pipeline-visualizationserver-f9895dfcd-vv4k8 | 1/1 | Running | 0 | 7m42s |
| mysql-6989b8c6f6-g6mb4 | 1/1 | Running | 0 | 7m42s |
| workflow-controller-6d457d9fcf-gnbrh | 1/1 | Running | 0 | 7m42s |
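Instead of re-running kubectl get pods by hand, you can wait from a script. kubectl has a built-in option for this (kubectl wait --for=condition=Ready pod --all --timeout=600s), although it typically errors out immediately if no pods exist yet. The bash sketch below takes a different approach. It polls the same kubectl get pods output shown above and succeeds once every pod is Running with all containers ready. The function names and the 10-second polling interval are illustrative assumptions. Pods in any other state, for example Completed, are treated as not ready.

```shell
# pods_ready (hypothetical helper): read "kubectl get pods --no-headers" output
# on stdin and succeed only if there is at least one pod and every pod has
# STATUS "Running" with all of its containers ready (READY such as 1/1).
pods_ready() {
  awk '
    {
      n++
      split($2, ready, "/")
      if ($3 != "Running" || ready[1] != ready[2]) bad++
    }
    END { exit (n == 0 || bad > 0) }
  '
}

# wait_for_pods [TIMEOUT_SECONDS]: poll every 10 seconds until pods_ready
# succeeds, or give up after TIMEOUT_SECONDS (default: 600).
wait_for_pods() {
  local timeout="${1:-600}" waited=0
  until kubectl get pods --no-headers 2>/dev/null | pods_ready; do
    if [ "$waited" -ge "$timeout" ]; then
      echo "pods not ready after ${timeout}s" >&2
      return 1
    fi
    sleep 10
    waited=$((waited + 10))
  done
  echo "all pods are Running and ready"
}
```

Because pods such as ml-pipeline may restart a few times while their dependencies come up, a generous timeout (the default of 600 seconds here) is a safer choice than failing on the first CrashLoopBackOff.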

Access the local Kubeflow Pipelines instance

To connect to the KFP UI, forward a local port to the ml-pipeline-ui service:

```shell
kubectl port-forward svc/ml-pipeline-ui 9000:80
```

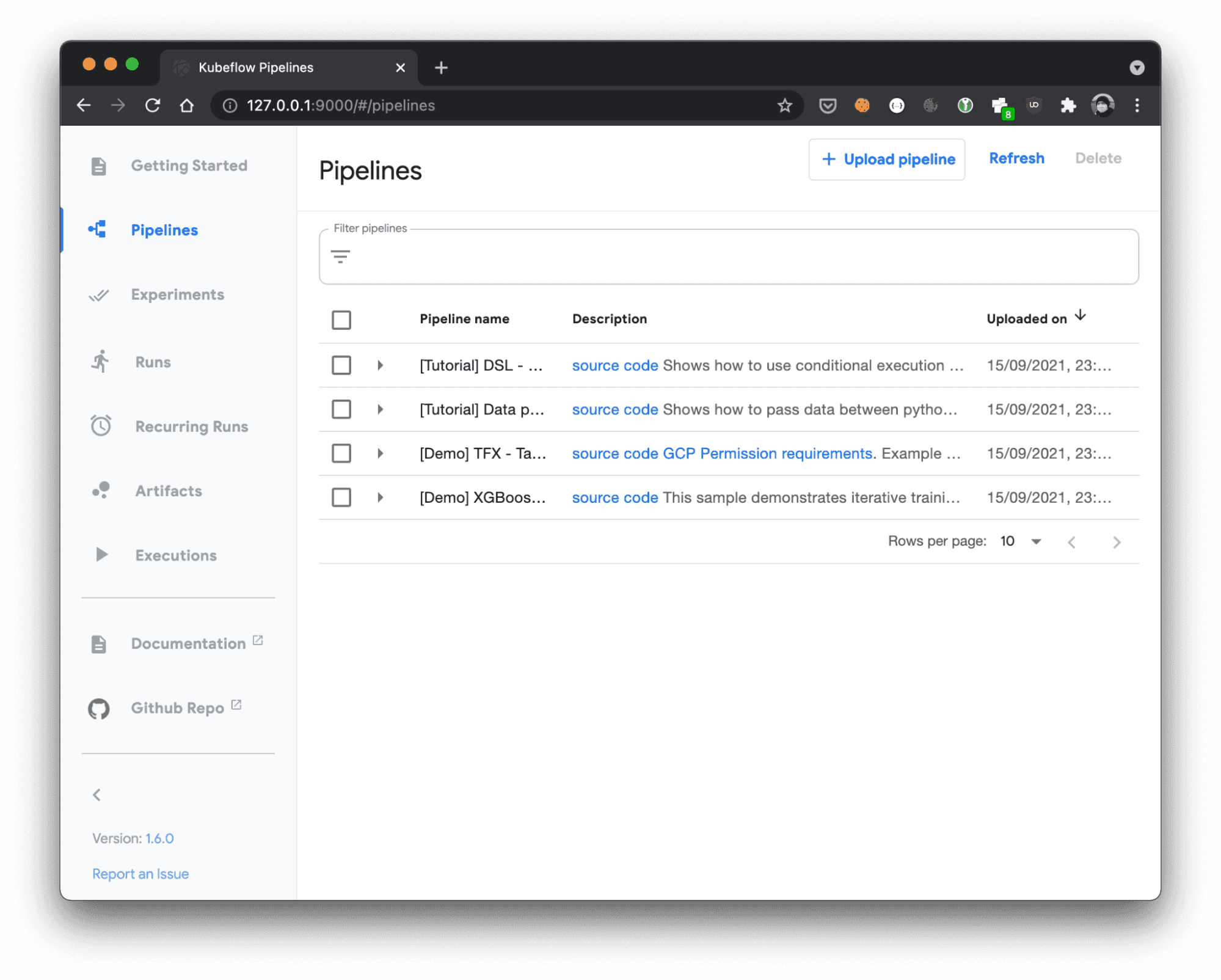

Then open http://localhost:9000/#/pipelines in your browser.
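If you would rather not keep a terminal open for the port-forward, you can run it in the background and wait until the UI responds. The helper below is a hypothetical bash sketch (open_kfp_ui and KFP_PF_PID are illustrative names). It assumes curl is installed and treats any non-error HTTP response from http://localhost:PORT/ as a sign that the UI is up.

```shell
# open_kfp_ui [LOCAL_PORT] [ATTEMPTS] (hypothetical helper): start
# "kubectl port-forward" to the ml-pipeline-ui service in the background and
# poll the forwarded port with curl, once per second, until it answers.
open_kfp_ui() {
  local port="${1:-9000}" attempts="${2:-30}" i
  kubectl port-forward svc/ml-pipeline-ui "${port}:80" >/dev/null 2>&1 &
  KFP_PF_PID=$!   # keep the PID so the port-forward can be stopped later
  for ((i = 1; i <= attempts; i++)); do
    # -s: silent, -f: fail on HTTP errors (status >= 400)
    if curl -sf -o /dev/null "http://localhost:${port}/"; then
      echo "KFP UI: http://localhost:${port}/#/pipelines"
      return 0
    fi
    sleep 1
  done
  # The UI never answered: stop the background port-forward again.
  kill "$KFP_PF_PID" 2>/dev/null
  return 1
}

# Usage:
# open_kfp_ui 9000
# ...and later, to stop the port-forward:
# kill "$KFP_PF_PID"
```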

Implementation details

Our platform-agnostic KFP Helm Chart is based on the original chart maintained by the GCP team. At the time of the fork, the GCP chart deployed KFP version 1.0.4. We upgraded all of the components so that the chart runs KFP 1.6.0, the latest version at the time of writing. GCP-specific components, such as CloudSQLProxy and ProxyAgent, were refactored to be deployed conditionally, based on the values provided to the chart.

We also introduced a setting to enable or disable managed storage. When it is enabled, the chart can use:

- CloudSQL and Google Cloud Storage when running on Google Cloud Platform,

- Amazon RDS and S3 when running on AWS.

If managed storage is disabled (platform.managedStorage.enabled=false, as in this post), a local MySQL database and MinIO storage buckets are created instead. Azure support is still pending, so feel free to create a pull request to our repository!

Next steps with Kubeflow Pipelines

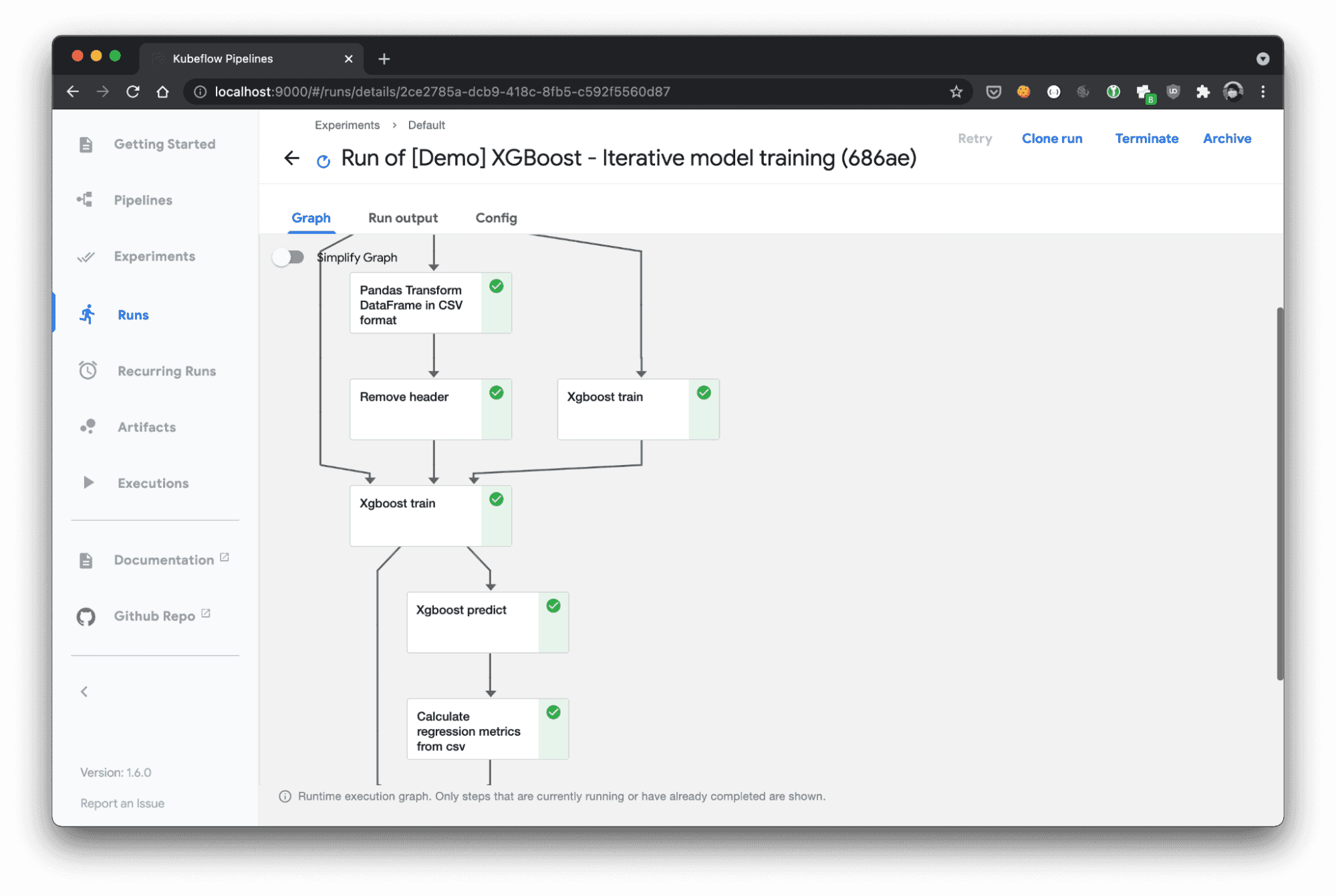

Now that you have a fully working local Kubeflow Pipelines instance, you can learn the KFP DSL and start building your own machine learning workflows without having to provision a full Kubernetes cluster.

I also encourage you to explore GetInData's Kedro Kubeflow plug-in, which enables you to run Kedro pipelines on Kubeflow Pipelines. It translates Kedro pipelines into KFP pipelines (using the Pipelines SDK) and deploys them to a running Kubeflow cluster with convenient commands. Once you have created your Kedro pipeline, point the plug-in at your local KFP instance by setting the host parameter in conf/base/kubeflow.yaml:

```yaml
host: http://localhost:9000
# (...) rest of the kubeflow.yaml config
```
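To switch an existing project between a remote cluster and the local instance without editing the file by hand, a small sed-based helper is enough. This bash sketch rests on two assumptions. First, the host entry is a single top-level line in conf/base/kubeflow.yaml, as in the snippet above. Second, the URL contains no | or & characters, since both are special in the sed expression used here. set_kfp_host is an illustrative name, not a command provided by the plug-in.

```shell
# set_kfp_host FILE URL (hypothetical helper): point the kedro-kubeflow config
# at a KFP instance by rewriting its top-level "host:" line.
# Assumes URL contains no "|" or "&" (special characters in the sed expression).
set_kfp_host() {
  local file="$1" url="$2"
  if ! grep -q '^host:' "$file"; then
    echo "no top-level host: entry found in $file" >&2
    return 1
  fi
  # -i.bak works with both GNU and BSD sed; the backup is removed afterwards.
  sed -i.bak "s|^host:.*|host: ${url}|" "$file" && rm -f "${file}.bak"
}

# Usage:
# set_kfp_host conf/base/kubeflow.yaml http://localhost:9000
```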

To stay up to date with the KFP Helm Chart, follow its Artifact Hub page! If you would like to know more about the Kedro Kubeflow plug-in, check its documentation.

Interested in ML and MLOps solutions? How to improve ML processes and scale project deliverability? Watch our MLOps demo and sign up for a free consultation.