
The year 2023 has definitely been dominated by LLMs (Large Language Models) and generative models. Whether you are a researcher, data scientist, or manager, many new concepts, terms, or names must have reached your ears: to name a few, RAG (Retrieval Augmented Generation), Reinforcement Learning from Human Feedback (RLHF), or hallucination. So much has been written about the significance of these developments that virtually every subsequent summary of the year seems somewhat derivative and unnecessary.

On the other hand, somewhat in the shadows, the more classical stream of data science, based sometimes on statistical methods and sometimes on neural networks, is still developing. Because of my personal interests, I have decided to focus on this area. In particular, I have looked at innovations in the field of gradient boosting and time series models. There is also a lot of interesting material in the field of synthetic data generation, where I have focused on tabular data. Another important trend is the emergence and development of libraries to monitor and test predictive models, as well as the quality of the input data.

When selecting the papers, I have considered only those that were published in 2023. My selection is largely subjective, and I have tried to select topics that seem promising but have not gained sufficient publicity. As far as frameworks and libraries are concerned, I have focused on those that premiered last year, have significantly gained in popularity, or that I simply came across for the first time last year. I hope you will also find something on this list for yourself: a new framework that you decide to use in your work or a paper that inspires you to explore an interesting topic.

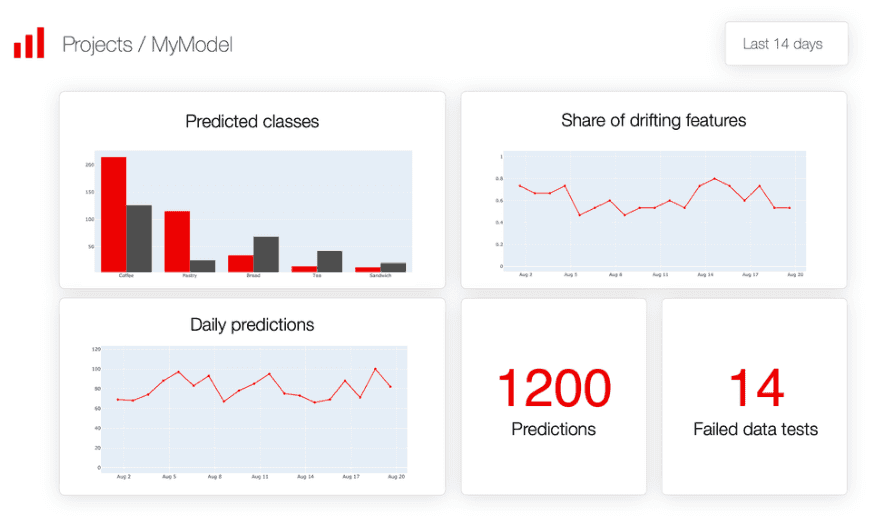

Evidently AI is a tool that facilitates the monitoring of machine learning pipelines. It can be used for monitoring models based on tabular data, NLP models and LLMs. Evidently AI combines multiple functions in one place, allowing you to track the quality of your models graphically. It currently provides several types of reports.

Here is the article on Medium showing how to use Evidently AI.

Just a few years ago, the arrival of XGBoost 2.0 would probably have caused quite a stir. This time, it was easy to overlook the event completely under the onslaught of news about new LLMs. So, what does the new version bring? XGBoost can now build a single model for multi-target cases such as multi-target regression, multi-label classification, and multi-class classification. The multi-target feature is still a work in progress. Another change is that hist is now the default tree method. There are also a few changes related to GPU training that make the procedure more efficient. Quantile regression is also available in the new version.

You can find the full list of improvements here.

Giskard is a platform for testing ML models. In recent years, especially after the emergence of machine learning models, trust in artificial intelligence models has become crucial. Giskard automatically detects vulnerabilities in different types of models, from tabular models to LLMs, including performance bugs, data leaks, spurious correlations, hallucinations, toxicity, security issues, unreliability, overconfidence, underconfidence, and ethical issues.

You can check it on GitHub too.

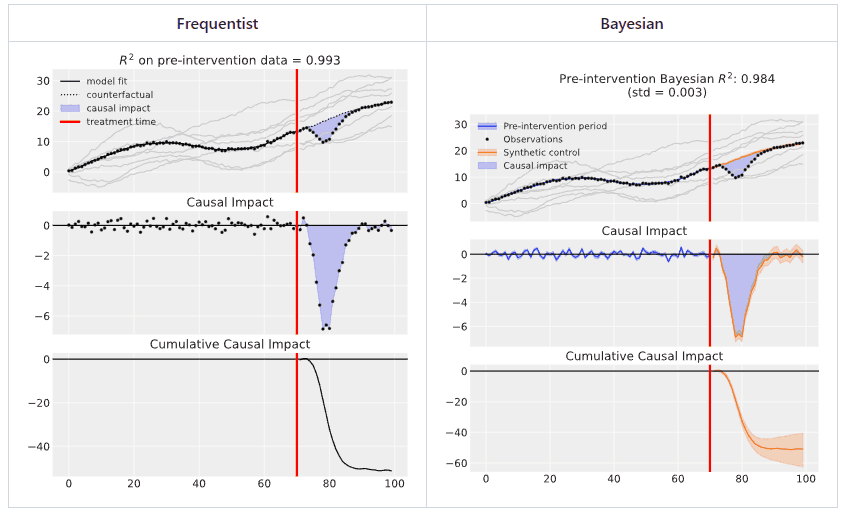

CausalPy is a Python library focusing on causal inference in quasi-experimental settings. The package allows the use of advanced Bayesian model fitting methods in addition to traditional OLS.

You can check it on GitHub too.

Pandera is a Python library that provides flexible and expressive data validation for pandas data structures. It is designed to increase the rigor and reliability of the data processing steps, ensuring that data conforms to specific formats, types and other constraints before analysis or modeling. It can validate dataframes from several libraries, such as pandas, dask, modin, and pyspark.pandas. Its scope does not end with data type checking: Pandera also supports statistical validation to ensure data quality. Pandera distinguishes itself by offering schema enforcement, adaptable validation and integration with pandas.

You can check it on GitHub too.

PyGlove is a Python library for symbolic object-oriented programming. While its description may initially seem confusing, the basic concept is simple. It is a great tool for sharing complex machine learning concepts in a scalable and efficient manner. PyGlove represents ideas as symbolic rule-based patches. Thanks to that, researchers can articulate rules for models they "haven't seen before". For example, a researcher can specify rules such as "add skip-connections." This permits a network effect among teams, allowing any team to immediately issue patches to all others.

Here you can find a notebook showing how to use PyGlove.

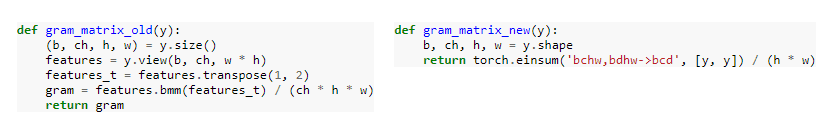

einops is a powerful library for tensor operations in deep learning. It supports NumPy, PyTorch, TensorFlow, JAX, and others. The intriguing name, einops, derives from "Einstein-Inspired Notation for operations". This naming reflects the library's goal of making tensor operations feel clear and intuitive. A distinctive feature of einops is that it simplifies complex PyTorch transformations, making them more readable and easier to analyze.

You can check it on GitHub too.

aeon is a toolkit for various time series tasks such as forecasting, classification and clustering. It is compatible with scikit-learn and provides access to the latest time series machine learning algorithms, in addition to a range of classical techniques. Thanks to numba, its implementations of time series algorithms are very efficient. It also works with the interfaces of other time series packages, providing a uniform structure for comparing algorithms. aeon can be an interesting alternative to Nixtla and other time series frameworks.

You can check it on GitHub too.

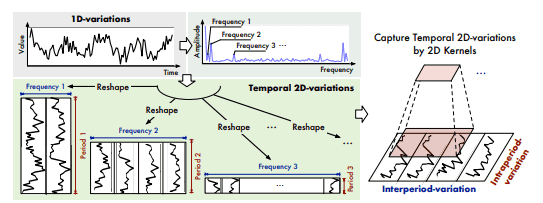

TimesNet is a new time series model. Unlike previous models such as N-BEATS, N-HiTS and PatchTST, it uses a CNN-based architecture to achieve state-of-the-art performance on a variety of tasks. TimesNet transforms 1D time series data into 2D tensors, capturing complex temporal changes for tasks such as forecasting and anomaly detection. In published benchmarks, it achieves very strong results. TimesNet is available in Nixtla's neuralforecast and in the TSlib library, so you can test the model's performance yourself.

Link to the paper is here.
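The 1D-to-2D transformation can be pictured with a small NumPy sketch. This is a simplified illustration, not the TimesNet implementation: it assumes a single dominant period found via the FFT and truncates the series to a whole number of cycles. The function name `fold_by_dominant_period` and the synthetic series are made up for the example.

```python
import numpy as np

def fold_by_dominant_period(series):
    """Reshape a 1D series into a 2D array with one row per cycle.

    Simplified sketch: picks only the single strongest frequency.
    """
    n = len(series)
    amplitudes = np.abs(np.fft.rfft(series))
    # Skip index 0 (the constant component) when looking for the peak.
    freq = int(np.argmax(amplitudes[1:])) + 1  # cycles over the whole series
    period = n // freq
    n_cycles = n // period
    folded = series[: n_cycles * period].reshape(n_cycles, period)
    return folded, period

# Synthetic series: ten cycles of length 24 plus a little noise.
rng = np.random.default_rng(0)
t = np.arange(240)
series = np.sin(2 * np.pi * t / 24) + 0.1 * rng.normal(size=240)

folded, period = fold_by_dominant_period(series)
print(period, folded.shape)
```

In the folded array, moving along a row follows the variation within one cycle, and moving down a column follows the same phase across cycles. This 2D layout is the kind of input on which convolutional layers can operate.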

Despite the popularity of deep learning for text and images, machine learning with diverse tabular data still relies heavily on tree-based ensembles. Recognising the need for gradient-based methods adapted to tabular data, the researchers behind GRANDE (GRAdieNt-Based Decision Tree Ensembles) present a novel approach.

Their method trains tree-based ensembles with end-to-end gradient descent. It combines the inductive bias of hard, axis-aligned partitioning with the flexibility of gradient descent optimisation. In addition, it uses advanced instance weighting, which helps a single model learn representations of both simple and complex relationships.

Link to the paper is here.

Although random forests are considered to be effective in capturing interactions, there are examples that contradict this view. This is the case for some pure interactions, which the CART criterion does not detect during tree construction. In his article, the author investigates whether alternative partitioning schemes can improve the identification of these interactions. Additionally, the author extends the recent theory of random forests based on the notion of impurity decrease by considering probabilistic impurity decrease conditions. Under these assumptions, a new algorithm called 'Random Split Random Forest' is proposed, adapted to function classes involving pure interactions. A simulation study confirms that the considered modifications increase the fitting capacity of the model in scenarios where pure interactions play a key role.

Link to the paper is here.
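To see why a pure interaction is hard for a CART-style criterion, consider the following NumPy sketch. It is an illustration under simple assumptions, not code from the paper: the target depends only on the product of the signs of two features, and the helper `best_single_split_gain` (a hypothetical name) computes the largest variance reduction that a single threshold split on one feature can achieve.

```python
import numpy as np

def best_single_split_gain(x, y):
    """Largest variance reduction from one threshold split on feature x."""
    order = np.argsort(x)
    y_sorted = y[order]
    n = len(y)
    # Sums and counts on each side for every possible split position.
    left_sum = np.cumsum(y_sorted)[:-1]
    left_n = np.arange(1, n)
    right_sum = y_sorted.sum() - left_sum
    right_n = n - left_n
    # Variance reduction = between-group sum of squares divided by n.
    gain = (left_sum**2 / left_n + right_sum**2 / right_n) / n - y_sorted.mean() ** 2
    return gain.max()

rng = np.random.default_rng(0)
X = rng.uniform(-1, 1, size=(2000, 2))

# Pure interaction: neither feature is informative on its own.
y_pure = np.sign(X[:, 0] * X[:, 1])
# Additive signal for comparison: the first feature alone explains the target.
y_additive = np.sign(X[:, 0])

gain_pure = best_single_split_gain(X[:, 0], y_pure)
gain_additive = best_single_split_gain(X[:, 0], y_additive)
print(f"pure interaction: {gain_pure:.4f}, additive: {gain_additive:.4f}")
```

For the pure interaction, the best first split barely reduces the variance, so a greedy criterion has little reason to split on either feature, even though the two features together determine the target exactly.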

This paper presents a new approach to generating and imputing mixed-type (continuous and categorical) tabular data using score-based diffusion and conditional flow matching. In contrast to previous studies that used neural networks to learn a score function or vector field, the authors chose XGBoost. Empirical results, based on 27 different datasets, show that the proposed approach produces highly realistic synthetic data whether the training dataset is clean or contains missing data, and that it produces diverse and reliable data imputations. Furthermore, the method outperforms deep learning generation techniques in data generation and is competitive in data imputation. Importantly, the method can be trained in parallel on CPUs without the need for GPUs. R and Python libraries implementing this method are also available.

Link to the paper here or on GitHub.

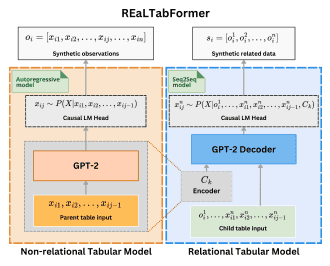

The main problem when modeling relational data is the need to have both a parent table and relationships between the various tables. REaLTabFormer (Realistic Relational and Tabular Transformer), a tabular and relational model for generating synthetic data, first creates the parent table using the GPT-2 model. In a second step, it generates a relational dataset conditioned on the parent table using a sequence-to-sequence (Seq2Seq) model. REaLTabFormer also achieves great results in prediction tasks 'out-of-the-box' for large non-relational datasets, without the need for tuning. It would be interesting to see how using newer versions of GPT would affect the performance of the model.

Link to the paper here or on GitHub.

This article deals with a problem that seems to be well researched already, one for which appropriate methods have been developed and where nothing can surprise us anymore. However, it turns out that there is one issue that is often overlooked. The author demonstrates that unsupervised preprocessing done prior to cross-validation can introduce significant bias into cross-validation estimates. This bias can be positive or negative, and its magnitude depends on the specific data distribution, the modeling procedure and the cross-validation parameters. Consequently, it can lead to overly optimistic or pessimistic estimates of model performance, resulting in suboptimal model selection.

Link to the paper.
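The difference between the two setups can be sketched with scikit-learn. The example below is an illustration under assumed settings (synthetic data, standard scaling as the unsupervised step, a ridge model), not a reproduction of the paper's experiments, and it makes no claim about which score comes out higher.

```python
import numpy as np
from sklearn.linear_model import Ridge
from sklearn.model_selection import KFold, cross_val_score
from sklearn.pipeline import make_pipeline
from sklearn.preprocessing import StandardScaler

# Small synthetic regression problem (an assumption for illustration).
rng = np.random.default_rng(0)
X = rng.normal(size=(60, 20))
y = X[:, 0] + rng.normal(size=60)

cv = KFold(n_splits=5, shuffle=True, random_state=0)

# Setup 1: preprocessing before cross-validation.
# The scaler is fitted on all rows, including those later used for validation.
X_scaled = StandardScaler().fit_transform(X)
scores_outside = cross_val_score(Ridge(alpha=1.0), X_scaled, y, cv=cv)

# Setup 2: preprocessing inside cross-validation.
# The pipeline refits the scaler on the training part of every fold.
pipeline = make_pipeline(StandardScaler(), Ridge(alpha=1.0))
scores_inside = cross_val_score(pipeline, X, y, cv=cv)

print("outside CV:", scores_outside.mean())
print("inside CV: ", scores_inside.mean())
```

Wrapping the preprocessing step in a pipeline keeps every fold's validation rows unseen during fitting, which is the setup that avoids the bias described above.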

As you can see, there is still a dynamic universe developing outside the mainstream of generative AI. However, it is certainly more difficult to access this type of content, as I have experienced in creating this summary. I'm very curious to see how the situation will look next year, whether all eyes will still be on LLMs or whether the hype for them will subside somewhat. Or will we be surprised by something completely new and groundbreaking, even more so than what we witnessed in the past year?

If you would like to stay up to date with interesting articles, blogs, technologies, and papers, I encourage you to subscribe to the Data Pill Newsletter we have co-created.
