
As the effort to productionize ML workflows is growing, feature stores are also growing in importance. Their job is to provide standardized and up-to-date features ready to use in production models, making it possible to reuse the existing features for different models, as well as to serve as a data discovery platform - a database of feature metadata. On top of that, feature stores may provide limited feature engineering capabilities.

Currently, judging by the number of GitHub stars, the most popular open source feature store implementation is Feast. The combined data discovery capabilities of Feast and Amundsen have already been presented in Mariusz's genius blog post Machine Learning Features discovery with Feast and Amundsen. In this blog post, we will cover how to use point-in-time joins on data flowing from different sources, namely a relational database and a real-time data stream, to serve production-ready features.
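For readers new to the term: a point-in-time join attaches to each entity row the most recent feature value observed at or before that row's timestamp, so that no information from the future leaks into training data. The snippet below is a minimal, pure-Python sketch of that idea and not Feast's implementation. The function name, the tuple layout and the sample values are our own assumptions for illustration, and the TTL handling that Feast applies is deliberately left out.

```python
from bisect import bisect_right
from datetime import datetime


def point_in_time_join(entity_rows, feature_rows):
    """Attach to each (user_id, event_ts) row the latest feature value
    whose timestamp is at or before event_ts (None if there is none)."""
    # Group feature rows by user and sort each history by timestamp.
    by_user = {}
    for user_id, ts, value in feature_rows:
        by_user.setdefault(user_id, []).append((ts, value))
    for history in by_user.values():
        history.sort(key=lambda item: item[0])

    joined = []
    for user_id, event_ts in entity_rows:
        history = by_user.get(user_id, [])
        timestamps = [ts for ts, _ in history]
        # Index of the first feature timestamp strictly after event_ts.
        idx = bisect_right(timestamps, event_ts)
        value = history[idx - 1][1] if idx > 0 else None
        joined.append((user_id, event_ts, value))
    return joined


# Hypothetical sample data: (user_id, timestamp, listing_visits).
feature_rows = [
    (1, datetime(2022, 7, 1, 10, 0), 3),
    (1, datetime(2022, 7, 1, 12, 0), 7),
    (2, datetime(2022, 7, 1, 11, 0), 1),
]
# Hypothetical entity rows: (user_id, timestamp of the order).
entity_rows = [
    (1, datetime(2022, 7, 1, 11, 0)),
    (1, datetime(2022, 7, 1, 12, 0)),
    (2, datetime(2022, 7, 1, 10, 0)),
]

joined = point_in_time_join(entity_rows, feature_rows)
print([value for _, _, value in joined])  # [3, 7, None]
```

The third row gets no value because user 2 had no feature recorded before the requested timestamp.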

In order to showcase Feast's capabilities, we created a demo containing a sample business case with two data sources: a Postgres database and a Kafka topic. Its source code is available right here on GitHub.

Let's assume the following business case - an e-commerce company offers products on its website. The company has access to data about users and their orders (stored in Postgres) and a data stream regarding website traffic, ingested via Kafka topics.

The company wants to create features with the help of Feast and use them to understand their consumers' behavior better - on top of the data that defines the user (coming from the Postgres table) there is the need to engineer features based on web traffic data, such as the number of landings on the listing, product and photo pages.

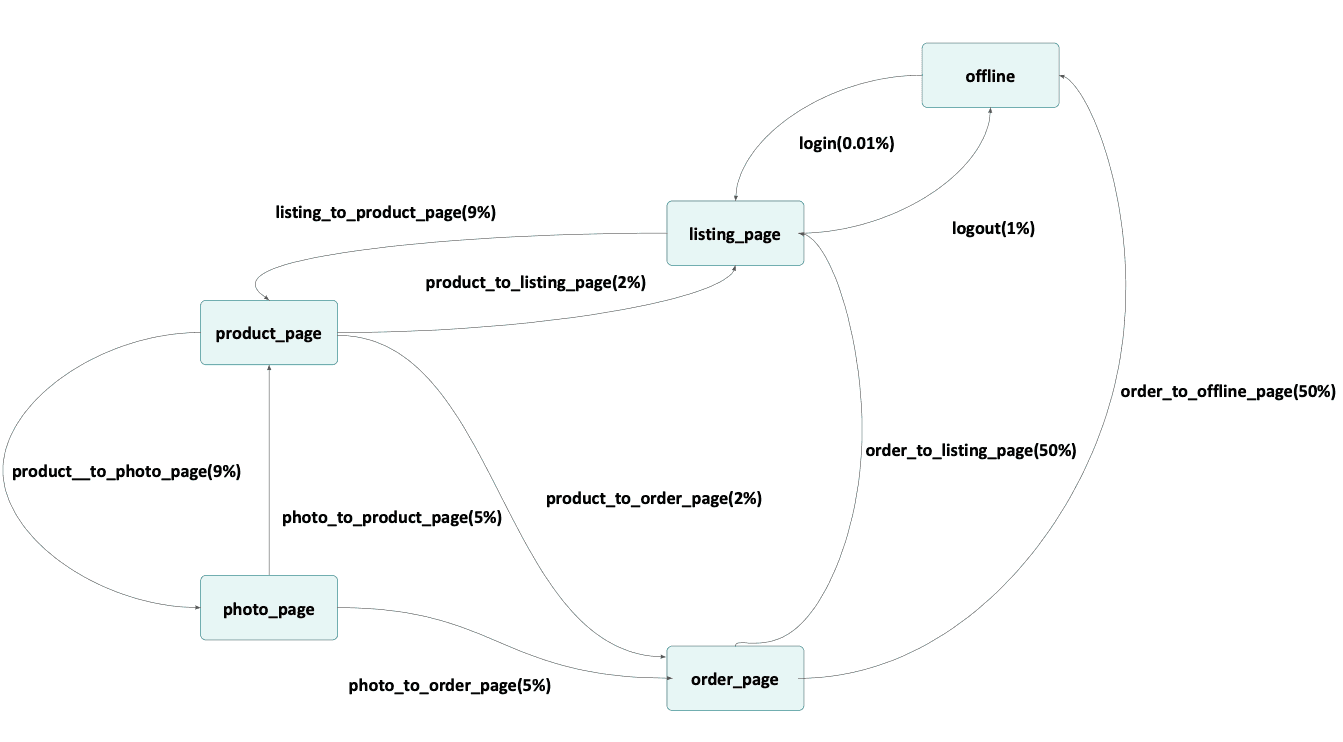

Sample user behavior was modeled and corresponding data was generated using the invaluable doge_datagen project. This is the user behavior model's diagram:

Feast requires a feature_store.yaml configuration file and one or more Python files with Feast object definitions. The configuration we tested included using Postgres as an offline store and Redis as an online store.
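As a rough illustration of such a setup, the sketch below writes a feature_store.yaml with a Postgres offline store and a Redis online store. It is not the demo's actual configuration: the project name, hosts, credentials and the helper function are placeholders, and the key names follow our reading of the Feast docs for the contrib Postgres offline store, so they should be checked against the Feast version in use.

```python
import tempfile
from pathlib import Path

# Assumption: every value below is a placeholder, not taken from the demo.
FEATURE_STORE_YAML = """\
project: feast_demo
registry: data/registry.db
provider: local
offline_store:
  type: postgres
  host: localhost
  port: 5432
  database: feast
  db_schema: public
  user: feast_user
  password: change_me
online_store:
  type: redis
  connection_string: "localhost:6379"
"""


def write_feature_store_config(repo_dir):
    """Write the sample configuration into repo_dir and return its path."""
    path = Path(repo_dir) / "feature_store.yaml"
    path.parent.mkdir(parents=True, exist_ok=True)
    path.write_text(FEATURE_STORE_YAML)
    return path


# Write into a temporary directory so the example leaves nothing behind.
with tempfile.TemporaryDirectory() as repo_dir:
    config_path = write_feature_store_config(repo_dir)
    print(config_path.read_text())
```

The Python files with Feast object definitions would then live next to this file in the same repository directory.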

Support for Postgres and Kafka sources is new in Feast, appearing in versions v0.21 and v0.22 respectively. As of version 0.23, Kafka stream support is in the experimental stage and we believe there is still room for improvement with regard to its interface. As stated in the Feast docs, Kafka sources must have a batch source specified, which can be used for retrieving historical features. Therefore, even if the Kafka stream is only used as an online store source, a mock batch source must be created. So in the demo we ended up with three defined data sources.

Those sources identify their records using a subset of three defined entities: user, order and web traffic id. Finally, we defined two feature views, one for order details and the other for user traffic, which we used to create a simple visualization. Below is an animation of an example demo run:

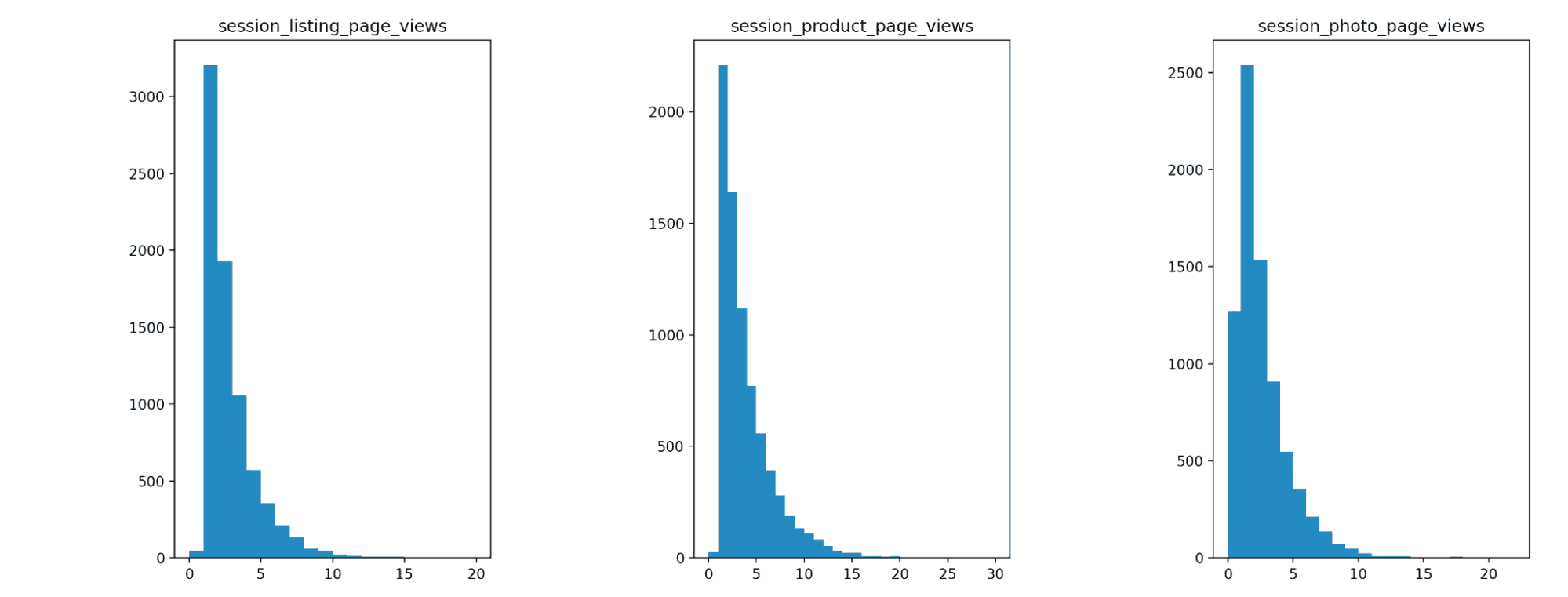

To showcase one step in visualizing the data, we created histograms showing the number of visits to the listing, product and photo pages per successful transaction. As you can see, all those histograms show similar distributions (which is expected, as this can be deduced from the datagen model), and while most of the customers decide to buy the product after just a few clicks, some seem to ponder for a long time before finally clicking the shopping cart icon!
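The computation behind such histograms can be sketched in a few lines of plain Python. This is only an illustration under our own assumptions: the event names ("listing", "product", "photo", "purchase"), the tuple layout and the sample events are hypothetical and do not come from the demo's code.

```python
from collections import Counter

PAGES = ("listing", "product", "photo")


def visits_per_transaction(events):
    """events: iterable of (user_id, event_type) in time order.
    Returns one dict of page visit counts per purchase, counting the
    visits made by that user since their previous purchase."""
    running = {}
    completed = []
    for user_id, event_type in events:
        counts = running.setdefault(user_id, Counter())
        if event_type in PAGES:
            counts[event_type] += 1
        elif event_type == "purchase":
            completed.append({page: counts[page] for page in PAGES})
            running[user_id] = Counter()  # start counting the next transaction
    return completed


def histogram(values):
    """Map each distinct value to the number of times it occurs."""
    return dict(sorted(Counter(values).items()))


# Hypothetical event stream.
events = [
    (1, "listing"), (1, "product"), (2, "listing"), (1, "photo"),
    (1, "purchase"), (2, "listing"), (2, "product"), (2, "purchase"),
    (1, "listing"), (1, "product"), (1, "purchase"),
]

transactions = visits_per_transaction(events)
for page in PAGES:
    print(page, histogram(t[page] for t in transactions))
# listing {1: 2, 2: 1}
# product {1: 3}
# photo {0: 2, 1: 1}
```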

While working on the demo, we encountered some issues which you may want to be aware of, especially if you are thinking about using Feast to implement a feature store in your own project. Most of those issues can be linked to the early stage of the project, so be sure to check out new Feast releases regularly.

Feature extraction is still in the alpha stage and its capabilities are limited. For example, entity values are not provided in the input dataframe of "on demand" feature views, so the dataframe is not suitable for extracting aggregates. Due to this limitation, we ended up transforming the Kafka source data before passing it on to Feast. Moreover, we did not find a way to take a random sample from the offline/online store. The documentation says it is possible to provide either a pd.DataFrame or an SQL query. The SQL solution seems more elegant, but we did not manage to use this option successfully.
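To give an idea of what transforming the stream before it reaches Feast can look like, here is a minimal sketch that keeps running per-user page visit counts and emits one flat feature row per incoming event. It is not the demo's code: the class, the field names (user_id, page, event_timestamp) and the feature names are assumptions made for illustration, and reading from and writing to Kafka is left out.

```python
from collections import defaultdict

# Assumption: hypothetical mapping from page type to feature name.
PAGE_FEATURES = {
    "listing": "listing_visits",
    "product": "product_visits",
    "photo": "photo_visits",
}


class TrafficAggregator:
    """Keeps running visit counts per user, keyed by user_id."""

    def __init__(self):
        self._counts = defaultdict(
            lambda: dict.fromkeys(PAGE_FEATURES.values(), 0)
        )

    def update(self, event):
        """Consume one traffic event and return the user's current feature row."""
        counts = self._counts[event["user_id"]]
        feature = PAGE_FEATURES.get(event["page"])
        if feature is not None:
            counts[feature] += 1
        # Copy the counts so that rows emitted earlier are not mutated later.
        return {
            "user_id": event["user_id"],
            "event_timestamp": event["event_timestamp"],
            **counts,
        }


# Hypothetical raw events, as they might arrive from the traffic topic.
raw_events = [
    {"user_id": 1, "page": "listing", "event_timestamp": "2022-07-01T10:00:00"},
    {"user_id": 1, "page": "product", "event_timestamp": "2022-07-01T10:01:00"},
    {"user_id": 2, "page": "listing", "event_timestamp": "2022-07-01T10:02:00"},
    {"user_id": 1, "page": "listing", "event_timestamp": "2022-07-01T10:03:00"},
]

aggregator = TrafficAggregator()
rows = [aggregator.update(event) for event in raw_events]
print(rows[-1])
```

Each emitted row carries the entity key together with the aggregates, so the feature store only needs to ingest it without computing anything itself.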

What's more, as it stands, debugging is a pain, as during development the internal libraries raise exceptions galore. When a feast apply command results in a Pandas or psycopg2 exception, deducing what the problem is demands imagination and time. It also seems that Feast makes some assumptions about schemas that are not documented. For example, a source's event timestamp field and created timestamp field must not be the same field, as a source defined this way will cause an internal SQL generation exception. During our testing, the Kafka online source also silently failed to ingest records, without any exception, because of a schema mismatch.
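One way to work around both pitfalls is to run a few cheap checks of your own before handing anything to Feast. The helpers below are a hypothetical sketch and not part of Feast's API: the function names, the schema format (field name mapped to a Python type) and the sample record are all our assumptions.

```python
def check_timestamp_fields(source_name, event_timestamp_field,
                           created_timestamp_field=None):
    """Fail early, with a readable message, if both timestamp roles
    point at the same column."""
    if (created_timestamp_field is not None
            and created_timestamp_field == event_timestamp_field):
        raise ValueError(
            f"source '{source_name}': event and created timestamp fields "
            f"must differ, both are '{event_timestamp_field}'"
        )


def schema_mismatches(record, expected_schema):
    """Return a list of readable problems; an empty list means the record
    matches the expected schema."""
    problems = []
    for field, expected_type in expected_schema.items():
        if field not in record:
            problems.append(f"missing field '{field}'")
        elif not isinstance(record[field], expected_type):
            problems.append(
                f"field '{field}' is {type(record[field]).__name__}, "
                f"expected {expected_type.__name__}"
            )
    for field in record:
        if field not in expected_schema:
            problems.append(f"unexpected field '{field}'")
    return problems


# Hypothetical schema and record for the traffic stream.
expected_schema = {"user_id": int, "listing_visits": int, "event_timestamp": str}
record = {"user_id": "1", "listing_visits": 2}

for problem in schema_mismatches(record, expected_schema):
    print(problem)
# field 'user_id' is str, expected int
# missing field 'event_timestamp'
```

Running such a check on a sample of records before producing them to Kafka turns a silent ingestion failure into an explicit error message.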

However, it seems that the Feast team is aware of the aforementioned debugging issue and some fixes may be applied in subsequent releases.

Feature stores play an important role in operationalizing machine learning models and Feast is certainly one of the most popular open source projects in this area. The Feast framework still has some teething issues, which we summed up in the previous section. However, the rate of improvement is fast and we hope that, as time goes by, the rough edges will be eliminated and the solution's productivity multiplier will be even greater. If you would like to know more about our other Feature Store implementations, check out our blog post Feature Store comparison: 4 Feature Stores - explained and compared.

Interested in ML and MLOps solutions? How to improve ML processes and scale project deliverability? Watch our MLOps demo and sign up for a free consultation.
