GetInData in 2022 - achievements and challenges in Big Data world

Time flies extremely fast and we are ready to summarize our achievements in 2022. Last year we continued our previous knowledge-sharing actions and…

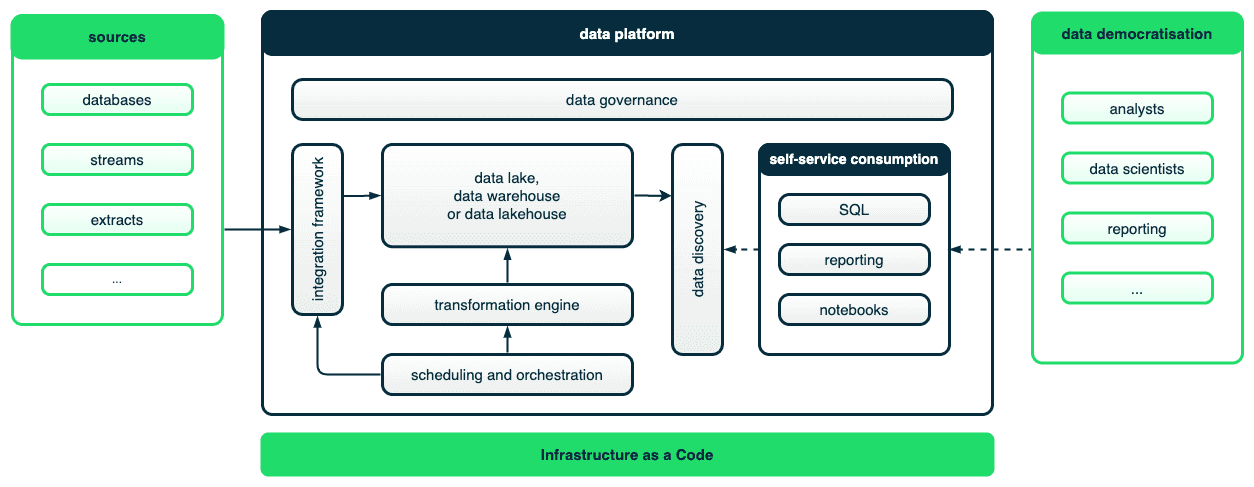

Nowadays, data is seen as a crucial resource used to make business more efficient and competitive. It is impossible to imagine a modern company without taking care of its data and using it to its full potential. Capturing, storing and processing the datasets in an efficient and reliable way has always been a challenge, especially when there is the need to handle large data volumes, provide a quicker response time and accommodate various use cases.

Over time, the industry response to these challenges has evolved and in this blog post we will try to identify some of the changes that can be observed in data-related projects. We won’t dive deep into the technical details of any of the tools or frameworks but rather focus on the overall trends and identify some key characteristics of a modern data platform.

There are some visible changes in the way data platforms, meaning the sets of tools used to process data assets, are developed. Fast-changing requirements and working in an agile environment require a well-defined development process. Modern tools for developing data processing pipelines borrow a lot from software engineering practices: they promote testing, automatic deployment and support for multiple environments. A central code repository and a code review process for all layers of the final solution, from infrastructure (IaC) to pipeline orchestration and ETL/ELT job definitions, make the whole process much more reliable.

Cloud has revolutionized many companies. Nowadays, data platforms are taking advantage of this too. Ease of deployment, a serverless model or pay-as-you-go approach have definitely become important aspects of a new data platform in many projects.

But a modern data platform is also defined by the way it is used. Complex and independent data sources require easily maintainable and collaborative cataloging services to enable data discovery. This is crucial, because these days data access is democratized within companies. According to common expectations, users should be able to independently use the platform and explore data. Therefore, self-service in reporting, analysis and even developing workflows is gaining attention in many organizations.

One of the trends that can be observed is the adoption of best practices from the software development domain. Historically, it was quite common to use proprietary enterprise tools to draw and implement ETL jobs. In other cases, raw SQL queries were used and executed from stored procedures right in the databases. The limitation of such an approach was that it was difficult to apply the standard ways of working known to software engineers, such as testing or maintaining separate environments with synchronized code deployments.

In a modern data platform, ETL/ELT jobs are code, and all the practices used to build reliable software are applied to maintain high data quality. Tests should be used to check the developed transformations, builds should be triggered automatically and code should be deployed using CI/CD pipelines. It’s good to have Infrastructure as Code, to make sure the code repository contains the full definition of the environment, which can then be easily deployed at different stages (sandbox, development or production).

Fortunately, there are frameworks which promote this way of working. **dbt** (data build tool) is a good example of such an approach. The data pipeline is defined as a series of transformations, each of them expressed in SQL. Since the whole project consists of code and YAML config files, we can benefit from code review, deployment to multiple environments and CI/CD pipelines. A wide variety of tests is also supported, starting from simple checks on column values (unique, not null) to more complex cases where we run our transformation on test data.
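To make the idea of testing a SQL transformation more concrete, here is a minimal sketch in Python. It does not use dbt itself: it only imitates the concept with the standard-library `sqlite3` module standing in for the warehouse. The table names (`raw_customers`, `customers`) and the helper functions are hypothetical and chosen purely for illustration.

```python
import sqlite3


def build_test_db():
    """Create an in-memory database with a small, deliberately messy test dataset."""
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE raw_customers (id INTEGER, email TEXT)")
    conn.executemany(
        "INSERT INTO raw_customers VALUES (?, ?)",
        [
            (1, " Ann@Example.com "),    # needs trimming and lower-casing
            (1, "ann@example.com"),      # duplicate of the row above once cleaned
            (2, "bob@example.com"),
            (None, "ghost@example.com"),  # missing key, should be filtered out
        ],
    )
    return conn


def run_transformation(conn):
    """The transformation under test, expressed in plain SQL."""
    conn.execute(
        """
        CREATE TABLE customers AS
        SELECT DISTINCT id, LOWER(TRIM(email)) AS email
        FROM raw_customers
        WHERE id IS NOT NULL
        """
    )


def count_nulls(conn, table, column):
    """A 'not null' style check: number of rows where the column is NULL."""
    query = f"SELECT COUNT(*) FROM {table} WHERE {column} IS NULL"
    return conn.execute(query).fetchone()[0]


def count_duplicates(conn, table, column):
    """A 'unique' style check: number of values that occur more than once."""
    query = (
        f"SELECT COUNT(*) FROM ("
        f"SELECT {column} FROM {table} GROUP BY {column} HAVING COUNT(*) > 1)"
    )
    return conn.execute(query).fetchone()[0]


if __name__ == "__main__":
    conn = build_test_db()
    run_transformation(conn)
    assert count_nulls(conn, "customers", "id") == 0
    assert count_duplicates(conn, "customers", "id") == 0
    print("all checks passed")
```

In a real dbt project such checks would typically be declared in the project's configuration rather than hand-written, but the principle is the same: the transformation and its tests live in the repository and can run in a CI/CD pipeline.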

dbt is a core part of the modern data stack that we deploy for our clients, and we have open sourced several extensions which, for example, make scheduling with Apache Airflow much easier or provide a CLI to manage data projects. Details on our solutions can be found in the Airflow integration and Data Pipeline CLI projects. If you'd like to learn more about our modern data platform, please check the talk "Let your analytics build data transformations easily with modern data platform" given by Mariusz Zaręba during the Big Data Technology Warsaw Summit 2022.

Another characteristic of the data stacks that are called modern is a clear shift from the ETL (Extract-Transform-Load) to the ELT (Extract-Load-Transform) paradigm when it comes to integrating data for analytics. ETL pipelines that integrate data in specialized tools before loading it into the DWH proved to be problematic at scale. The growing number of use cases led to a bottleneck of engineering resources, a disconnect between engineers and business data consumers, and mistrust of data due to inconsistent results. The rise of cloud data warehouses with great processing capabilities has encouraged the shift towards ELT pipelines, which transform data inside the DWH. They leverage relatively cheap scaling without any storage limitations, together with tools (like the already mentioned dbt) that allow data transformation directly in the warehouse. This naturally does not mean that we can dump all the data into the DWH and magic will happen. Without proper data governance, modeling and quality control embedded in the pipelines, at some point we might face similar issues and bottlenecks as with ETL jobs. The topic of data governance in the modern data stack will be covered in more detail in one of our upcoming blog posts.
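The ELT ordering can be sketched in a few lines of Python. This is only an illustration under simplifying assumptions: `sqlite3` plays the role of the warehouse, and the `raw_orders` and `daily_revenue` tables, as well as the sample rows, are made up for the example. The point is that raw data is loaded untouched first, and cleaning and aggregation happen afterwards in SQL inside the database.

```python
import sqlite3

# Raw records as they might arrive from a source system: everything is text,
# and country codes are inconsistently cased. (Hypothetical sample data.)
RAW_ORDERS = [
    ("o-1", "2022-01-03", "19.99", "pl"),
    ("o-2", "2022-01-03", "5.00", "PL"),
    ("o-3", "2022-01-04", "12.50", "de"),
]


def extract_and_load(conn, rows):
    """E + L: store the raw data as-is, without cleaning it first."""
    conn.execute(
        "CREATE TABLE raw_orders "
        "(order_id TEXT, order_date TEXT, amount TEXT, country TEXT)"
    )
    conn.executemany("INSERT INTO raw_orders VALUES (?, ?, ?, ?)", rows)


def transform(conn):
    """T: clean and aggregate inside the database, using SQL only."""
    conn.execute(
        """
        CREATE TABLE daily_revenue AS
        SELECT order_date,
               UPPER(country) AS country,
               ROUND(SUM(CAST(amount AS REAL)), 2) AS revenue
        FROM raw_orders
        GROUP BY order_date, UPPER(country)
        """
    )


def run_elt():
    conn = sqlite3.connect(":memory:")
    extract_and_load(conn, RAW_ORDERS)
    transform(conn)
    return conn.execute(
        "SELECT order_date, country, revenue FROM daily_revenue "
        "ORDER BY order_date, country"
    ).fetchall()


if __name__ == "__main__":
    for row in run_elt():
        print(row)
```

Because the raw table is kept, a new use case can add another transformation on top of it without touching the loading step.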

Large data volumes, typical for data platforms, usually require large computing resources and complex processing. In many cases it is non-trivial to plan the capacity in advance.

It’s even more difficult for startups or companies that are scaling up, where expected workloads are simply unknown. Therefore, more and more systems provide serverless or close to serverless ways of using the data platform. There are many products on the market that work in this way.

Google’s BigQuery is a good example. We are billed by the volume of data read from the storage while executing our queries. AWS Athena or AWS Redshift Serverless (currently in preview) also work in a similar fashion. Snowflake has an idea of computing clusters (called virtual warehouses) that are decoupled from storage and can be started up within seconds when a query arrives and shut down automatically when not used.
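The arithmetic behind billing by volume of data read can be sketched as follows. The price used in the example is a placeholder, not an actual vendor price, and the function names are hypothetical. Real pricing has additional rules, so treat this only as a back-of-the-envelope model.

```python
TIB = 2 ** 40  # bytes in one tebibyte


def estimate_scan_cost(bytes_scanned, price_per_tib):
    """Cost of a single query when billing is proportional to data read."""
    return bytes_scanned / TIB * price_per_tib


def estimate_monthly_cost(bytes_per_query, queries_per_month, price_per_tib):
    """Rough monthly cost for a workload of identical queries."""
    return queries_per_month * estimate_scan_cost(bytes_per_query, price_per_tib)


if __name__ == "__main__":
    ASSUMED_PRICE = 5.0  # hypothetical price per TiB scanned, not a real quote
    # A query that reads half a tebibyte, run 100 times a month.
    print(estimate_monthly_cost(TIB // 2, 100, ASSUMED_PRICE))
```

A model like this makes it visible that cost follows actual usage: a team that runs no queries pays nothing for compute, and reducing the data each query reads reduces the bill directly.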

The great benefit of such a model is that it lets companies (or data teams) start small and deploy fast without committing to estimated capacity. This business model speeds up the decision-making process and encourages companies to use the full potential of their data without worrying about high initial costs.

This trend doesn’t relate only to the data warehouse execution engine. Many integration services also run in a serverless way. Fivetran is an example of such an approach, where a Software as a Service tool is used to integrate different data sources into a common platform. The benefit is similar to that described above - a pay-as-you-go pricing model and reduced set-up cost.

But the serverless execution model is not the only change related to cloud computing. Cloud has been a revolution for the whole industry and it is one of the trends that we can observe in data platforms as well.

Pre-cloud data platforms were usually built around a well-defined technological stack. No matter if this was an enterprise proprietary data warehouse solution or an open source Hadoop distribution, it was often challenging to accommodate non-standard use cases. In some cases it would require extending the stack and the team, in others building a separate system alongside existing solutions.

First of all, having a data platform built in the cloud gives us much easier access to other services from the cloud provider portfolio. This makes integration with other systems much easier.

I have seen this especially in situations where products built on a data platform (let’s say recommendations or customer segmentation) need to be shared with other teams and used by backend services. In on-premises deployments, this usually required much more effort, in many cases finding new tools to share or host the results and extending the team. In the cloud we are free to use services that are already in place, and engineers familiar with the cloud usually know how to use them. Collaboration between data and backend teams is much easier in such setups. From a business perspective, delivery time of new features can be much quicker.

Cloud also gives us other unique opportunities. Cost management is much easier when compared to on-premises solutions. A tagging mechanism can be used to mark the resources used in a given project and later, with just a few clicks, we can report all costs allocated to it. This would be very difficult to achieve on-premises, where a lot of resources (data warehouse, Hadoop cluster) were shared by all the projects. Cost allocation matters, because it allows priorities to be set accordingly. Stakeholders can make better decisions about whether a project is worth continuing when they know the computing costs it has already incurred. Data governance, access control and auditing - all of these critical features were available on-premises, but in the cloud they are much easier to use and to configure properly, without hidden back-door exceptions.
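The tag-based cost allocation described above boils down to grouping billing records by a tag value. The sketch below assumes a simplified, hypothetical record format (a dictionary with `cost` and `tags` keys); real cloud billing exports have their own schemas, so this only illustrates the idea.

```python
from collections import defaultdict


def costs_by_tag(billing_records, tag_key, untagged="untagged"):
    """Sum costs per value of the given tag; records without it are grouped together."""
    totals = defaultdict(float)
    for record in billing_records:
        tag_value = record.get("tags", {}).get(tag_key, untagged)
        totals[tag_value] += record["cost"]
    return dict(totals)


if __name__ == "__main__":
    # Hypothetical billing records for illustration only.
    records = [
        {"resource": "warehouse", "cost": 10.0, "tags": {"project": "recommendations"}},
        {"resource": "storage", "cost": 2.5, "tags": {"project": "recommendations"}},
        {"resource": "warehouse", "cost": 4.0, "tags": {"project": "segmentation"}},
        {"resource": "vm", "cost": 1.0, "tags": {}},
    ]
    print(costs_by_tag(records, "project"))
```

Keeping an explicit bucket for untagged resources is a deliberate choice here: it shows how much spend cannot yet be attributed to any project.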

In the fast-changing world of computer science, it is quite funny that a language that was developed in the mid-1970s is still regarded as a universal data access and data transformation language. Moreover, many of the new frameworks that are introduced sooner or later end up with SQL support, as it is difficult to promote new products with a custom language. SQL is still taught at universities, it appears as a top requirement in job advertisements in the data domain, and it may be the only common language used by engineers, data scientists, analysts and business in your organization.

Using a well-known language has a huge impact on data democratization. Low entry barriers mean more people can test their hypotheses, analyze results and promote data culture within the company. This is extremely important now that the data mesh concept is gaining popularity. Decentralized data ownership definitely works better if we can provide a solution with easy access and low entry barriers. You can find out more about data mesh in our blog post “Data Mesh as a proper way to organise data world”.

Support for SQL also makes it easier to involve more people in active ETL/ELT development, with only a little support from Data Engineers. Both traditional and modern data platforms support SQL, and this doesn’t seem to be changing. Long Live SQL!

Not so long ago, data warehouses used to run in a daily batch mode. This is still sufficient in many cases, but requirements are changing and a modern data platform must be prepared to accommodate stream processing if required. Streaming use cases are not rare. In many businesses, stream processing is used to react immediately, for instance to analyze clickstream traffic, in fraud prevention or in recommendation systems.

It seems there is not one approach that fits all cases, but it is highly desirable to have a data platform that is able to handle different workloads. Cloud environments often make this easy, as we already have a rich portfolio of services to use. In the case of AWS, for example, we can use managed Kafka clusters to handle streaming data and process it in an AWS EMR cluster running a Spark Streaming or Flink application. If we prefer to have a service that handles streaming and processing at once, we can use AWS Kinesis to receive the traffic and process it on the fly using SQL-like expressions. All of this can happen in parallel with existing batch processing that operates on S3 and EMR.
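As a rough illustration of what processing a clickstream "on the fly" means, the sketch below counts page views per fixed (tumbling) time window, one event at a time. It is plain Python and not the API of Kafka, Spark Streaming, Flink or Kinesis. The event format (a timestamp in seconds plus a page path) is an assumption made for the example, and real engines additionally handle late events, state management and fault tolerance.

```python
from collections import Counter


def tumbling_window_counts(events, window_seconds):
    """Count events per (window start, page), processing one event at a time."""
    counts = Counter()
    for timestamp, page in events:
        # Align the timestamp to the start of its fixed-size window.
        window_start = timestamp - (timestamp % window_seconds)
        counts[(window_start, page)] += 1
    return dict(counts)


if __name__ == "__main__":
    # Hypothetical clickstream: (seconds since start, page visited).
    clicks = [(0, "/home"), (15, "/home"), (59, "/cart"), (60, "/home"), (125, "/cart")]
    for key, value in sorted(tumbling_window_counts(clicks, 60).items()):
        print(key, value)
```

The equivalent in a SQL-like streaming expression would be a grouped count over a time window, which is why SQL remains a natural fit even for streaming workloads.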

There are some trends which affect the whole IT industry. Cloud computing, the serverless approach and Infrastructure as Code are just a few of them, and they have also influenced the shape of modern data platforms. The most important thing is to make sure we use techniques that have proved successful in other areas: testing, CI/CD, code review. Technological advances and cloud computing allow data platform initiatives to start easily without over-provisioning in advance. Cloud-native approaches give us the opportunity to improve compliance and cost optimization.

With the ever-popular agile approach to software development, modern data platforms must also be flexible enough to handle different workloads, even those unforeseen at the beginning, and allow easy collaboration between data engineers, business analysts and data scientists.

Are there any ideas we can learn from the traditional, old data platforms that were built years or decades ago? I think so. When chasing the best framework, the newest library or the most robust tool, it is easy to forget what really matters. In the end, we are not delivering software or platforms but the data itself, and we are only as good as the data we deliver. This hasn't changed and it is still worth remembering.

We build Modern Data Platforms for our clients. If you would like to discuss this, we encourage you to sign up for a live demo here.
