Apache NiFi, a big data processing engine with a graphical web UI, was created to give non-programmers the ability to create data pipelines swiftly and codelessly, and to free them from those dirty, text-based methods of implementation. Unfortunately, we live in a world of trade-offs, and those features come with a price. The purpose of our blog series is to present our experience and lessons learned when working with production NiFi pipelines. This will be organised into the following articles:

We need to keep it simple

The use case for the project was at first presented in a very simple way. We had to replace the existing solution with a pipeline based on Apache NiFi, and the requirement was “to take files from one place, check if data inside fulfills the requirements and put them on HDFS”. The main focus, as stated by one of the engineers working with us at the time, was to “keep it simple”, and since the task did not seem too complicated in itself, we went with it.

We started with a proof of concept (PoC) to verify whether NiFi was the right tool for the job. We decided to go for a generic solution: one NiFi flow that could handle hundreds of different files with several processing rules applied. After a couple of weeks we had most of the things required at that time up and running. There was a single requirement, “validate content of the ingested files through custom rules’ set”, that we didn't find suitable for NiFi. To solve this, we wrote an Apache Spark job and used the SparkLauncher library to run it from within the ExecuteGroovyScript processor. While NiFi is good for generic data pipeline problems, Apache Spark is a better fit for custom logic, which can also be covered with unit tests.

Our flow ingested data into HDFS and Hive, and another requirement was to never process two datasets affecting a single Hive partition at the same time. This required an external locking mechanism, which was the other thing that we decided to implement outside NiFi.
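The locking rule can be illustrated with a small sketch. This is not the mechanism we ran in production: it is an in-memory stand-in for an external lock service, and the class name, method names and partition naming are assumptions made for the example.

```python
import threading


class PartitionLocks:
    """In-memory stand-in for an external lock service keyed by Hive partition."""

    def __init__(self):
        self._guard = threading.Lock()
        self._owners = {}  # partition -> id of the dataset holding the lock

    def try_acquire(self, partition, dataset_id):
        """Return True if dataset_id holds the lock for the partition after the call."""
        with self._guard:
            # setdefault stores dataset_id only when the partition is free.
            owner = self._owners.setdefault(partition, dataset_id)
            return owner == dataset_id

    def release(self, partition, dataset_id):
        """Release the lock only if dataset_id is the current holder."""
        with self._guard:
            if self._owners.get(partition) == dataset_id:
                del self._owners[partition]
                return True
            return False


locks = PartitionLocks()
partition = "sales/dt=2020-01-01"  # hypothetical partition name
first = locks.try_acquire(partition, "dataset-a")   # lock is free
second = locks.try_acquire(partition, "dataset-b")  # refused, partition is busy
locks.release(partition, "dataset-a")
third = locks.try_acquire(partition, "dataset-b")   # succeeds after the release
```

A dataset that is refused the lock would be re-queued and tried again later, so two datasets never write to the same partition concurrently.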

After a couple of weeks, we were able to see our flow running on mock datasets. The PoC was ready and its performance was several times better than that of the legacy system. We knew that we were missing some important features and started implementing them in the following sprints. Although we tried to identify the necessary features in advance, many missing ones were only discovered when comparing the output of our system with that of the legacy one. These became new requirements that had to be implemented.

Simple features make the system complex

Multiple simple features put into a single system make it complex. The beauty of going the agile way is that you always focus on the most important things and don't work based on wrong assumptions made several months prior. However, keep in mind that the agile way not only affects the business features but also the architecture and the codebase.

At the beginning, we used just NiFi processors, but at some point they were not enough to handle all the corner cases and we started writing Groovy scripts. A couple of lines of Groovy code got the job done, but the scripts became messy and hard to manage. Good examples of where Groovy scripts help are generating complex Hive queries based on a flowfile’s attributes, or listing a directory to find the oldest file matching a specified regex pattern. Although this split the codebase, it did not reduce the complexity, as NiFi and the Groovy scripts were tightly coupled. Things were working, but there was still a feeling that the flow was getting bigger and bigger and that we needed to stop the growth.
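To give a feel for the size of such helpers, here is a minimal sketch of the "oldest file matching a regex" case. Our scripts were written in Groovy; this Python version, including the function name and the demo file names, is only an illustration and assumes that "oldest" means the earliest modification time.

```python
import os
import re
import tempfile


def oldest_matching_file(directory, pattern):
    """Return the path of the least recently modified file whose name
    fully matches pattern, or None when nothing matches."""
    regex = re.compile(pattern)
    oldest_path, oldest_mtime = None, None
    with os.scandir(directory) as entries:
        for entry in entries:
            if not entry.is_file() or not regex.fullmatch(entry.name):
                continue
            mtime = entry.stat().st_mtime
            if oldest_mtime is None or mtime < oldest_mtime:
                oldest_path, oldest_mtime = entry.path, mtime
    return oldest_path


# Usage with throwaway files; names and timestamps are made up for the demo.
with tempfile.TemporaryDirectory() as tmp:
    for name, mtime in [("orders_2.csv", 2000), ("orders_1.csv", 1000), ("notes.txt", 500)]:
        path = os.path.join(tmp, name)
        open(path, "w").close()
        os.utime(path, (mtime, mtime))  # force a known modification time
    found = os.path.basename(oldest_matching_file(tmp, r"orders_\d+\.csv"))
    missing = oldest_matching_file(tmp, r"invoices_\d+\.csv")
```

Small as it is, a script like this still reads and writes flowfile attributes when embedded in a flow, which is where the tight coupling comes from.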

At some point we managed to achieve that by extracting logic from NiFi into separate systems exposed as REST microservices. REST endpoints decoupled the systems, as the communication is done through clearly defined requests and responses. This increased the size of the codebase but split the logic into smaller chunks that are easier to handle and understand.

Divide and conquer

This worked for some time, but a single NiFi flow still did everything, and it kept growing, although not as fast as before. Either you manage the complexity, or the complexity manages your architecture. At some point we realized that the flow was getting too big again and decided to split it.

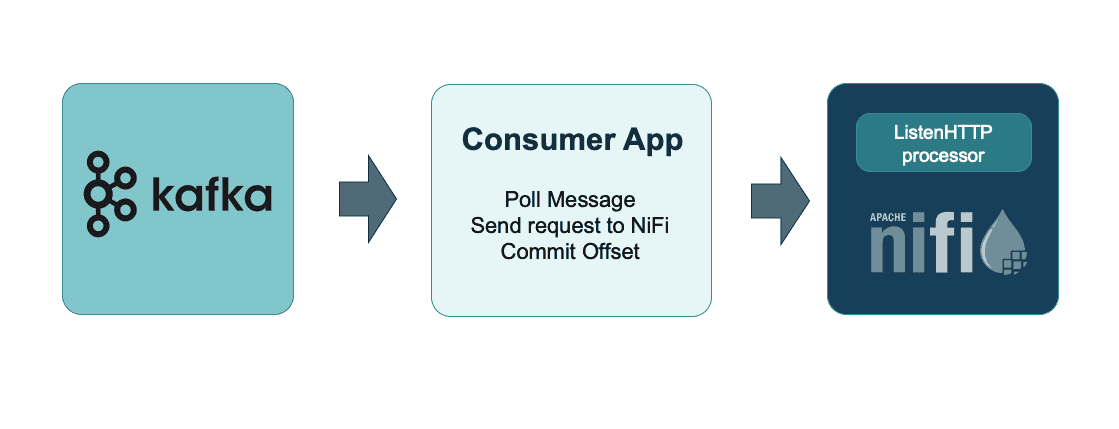

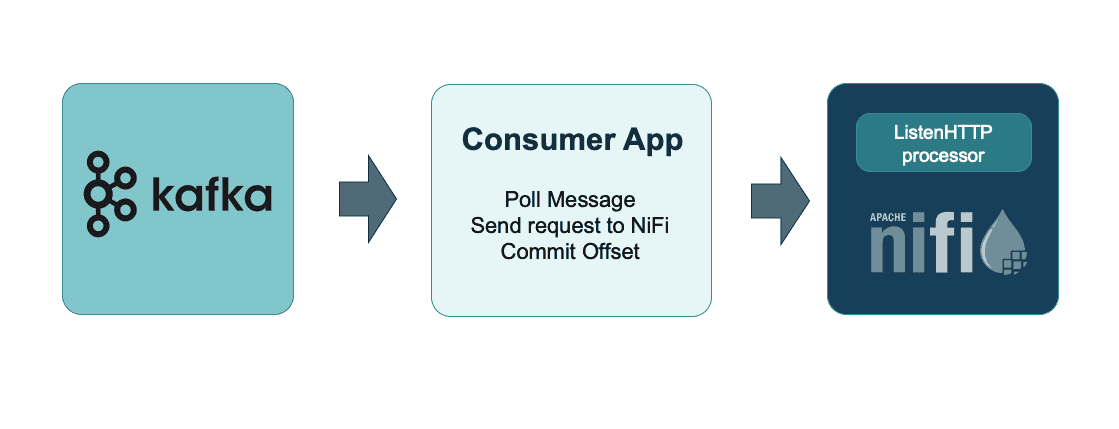

We achieved that by using Apache Kafka. The flow that ends its processing publishes an event, which gets consumed and triggers the next flow. Although NiFi provides processors to access Kafka, we decided to put microservices in between. In the project, it is not our team who manages and upgrades Kafka, and we did not want to run into version compatibility issues later on. We created a REST endpoint to publish messages to Kafka and an event consumer that polls Kafka events and notifies NiFi by calling an endpoint exposed by a ListenHTTP processor. That form of communication is really important, because it allows our consumer to commit the Kafka offset only after the event has been successfully transmitted to NiFi. We commit after receiving a 200 response code from NiFi, which confirms that a flowfile has been created and the data will not get lost.
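The commit-after-200 rule is easiest to see in code. The sketch below is not our consumer: Kafka and NiFi are replaced with plain callables so the logic can run on its own, and the names `forward_events`, `post_to_nifi` and `commit_offset` are assumptions made for the example.

```python
def forward_events(events, post_to_nifi, commit_offset):
    """Forward events in order and commit each offset only after NiFi answers 200.

    events        -- iterable of (offset, payload) pairs, as polled from Kafka
    post_to_nifi  -- callable(payload) returning the HTTP status from ListenHTTP
    commit_offset -- callable(offset) that commits the offset to Kafka
    Returns the last committed offset, or None if nothing was committed.
    """
    last_committed = None
    for offset, payload in events:
        status = post_to_nifi(payload)
        if status != 200:
            # Stop here: the uncommitted event is delivered again on a later poll.
            break
        commit_offset(offset)
        last_committed = offset
    return last_committed


# Demo with fakes: NiFi accepts the first two events and rejects the third.
responses = iter([200, 200, 503])
committed = []
last = forward_events(
    [(0, "a"), (1, "b"), (2, "c")],
    post_to_nifi=lambda payload: next(responses),
    commit_offset=committed.append,
)
```

Committing after the acknowledgement gives at-least-once delivery: an event may reach NiFi twice if the commit itself fails, but it is never dropped.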

Splitting the flows turned out to be really beneficial from the development perspective. Each flow is half the size of the initial one and is easier for new team members to understand. In former posts we have mentioned some NiFi limitations, like the lack of branches when working on development environments. Splitting the flow mitigated that issue.

From Proof-of-Concept to production

Retry whatever can fail

During the proof of concept phase we did not care much about corner cases. For example, when running a Hive query, we did not bother with a retry policy for when an error occurs. This came later on, and it was then necessary to add retries to every processor that connects to third-party systems such as HDFS, Hive, Kafka or REST services. To solve it in a generic way, we created a retry process group that consisted of over 10 processors. It did exactly what we needed and we put it in all the places where it was necessary. The downside was that our flow grew by over 200 processors due to the retry mechanism. With the upgrade to NiFi 1.10, a RetryFlowFile processor was introduced, which does almost everything we needed (except that the yield time cannot change across subsequent retries).
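The behaviour we wanted, a bounded number of retries with a wait that grows between attempts, can be sketched in a few lines. This is a plain Python illustration of the policy, not the NiFi process group itself; the function name, the parameters and their defaults are assumptions.

```python
import time


def retry(operation, max_attempts=3, base_delay=1.0, factor=2.0, sleep=time.sleep):
    """Call operation until it succeeds or max_attempts is reached.

    The wait is multiplied by factor after every failure.
    The error from the final attempt is re-raised to the caller.
    """
    delay = base_delay
    for attempt in range(1, max_attempts + 1):
        try:
            return operation()
        except Exception:
            if attempt == max_attempts:
                raise
            sleep(delay)
            delay *= factor


# Demo: a flaky call that fails twice; waits are recorded instead of slept.
calls = {"count": 0}
waits = []


def flaky_query():
    calls["count"] += 1
    if calls["count"] < 3:
        raise ConnectionError("Hive not reachable")
    return "ok"


result = retry(flaky_query, max_attempts=5, base_delay=1.0, sleep=waits.append)
```

In the demo the recorded waits are 1.0 and 2.0 seconds, which is the growing yield time that a fixed penalty cannot express.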

Generate data for development environment

We spent a lot of time preparing data for lower environments and this paid off. In many cases, it is the only way to properly test the pipeline before going live in production. In NiFi we built separate flows that prepare mock datasets to process, and flows that clear the results of the processing so that you never run out of disk space.

Log as much as possible outside the happy path

Logs were our best friend whenever we debugged the behaviour of the application. NiFi runs on multiple machines, so we decided to ship all the logs to Elasticsearch and expose them in Kibana. NiFi is a Java application, and we were able to modify its Logback configuration so that it sent the logs where needed. This allowed us to gather logs from Groovy scripts, and we also used it to handle metrics associated with logs through MDC (Mapped Diagnostic Context). Additionally, NiFi provides a LogMessage processor, which we used to create a log entry whenever such information was valuable. Whenever flowfile processing terminates with a failure, we create a descriptive log entry.
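For readers who have not used MDC, the idea is that contextual fields are set once and then attached to every log line written in that context. The sketch below is a Python analogue built on the standard `logging` and `contextvars` modules, not Logback configuration; the field names `flow` and `source_file` and the logger name are assumptions made for the example.

```python
import contextvars
import io
import logging

# Context carried alongside every log record, similar in spirit to MDC.
_context = contextvars.ContextVar("log_context", default={})


class ContextFilter(logging.Filter):
    """Copy the current context onto each record so the formatter can print it."""

    def filter(self, record):
        ctx = _context.get()
        record.flow = ctx.get("flow", "-")
        record.source_file = ctx.get("source_file", "-")
        return True


stream = io.StringIO()  # stands in for the appender that ships logs elsewhere
handler = logging.StreamHandler(stream)
handler.setFormatter(
    logging.Formatter("%(levelname)s flow=%(flow)s file=%(source_file)s %(message)s")
)
handler.addFilter(ContextFilter())

logger = logging.getLogger("ingest-demo")
logger.setLevel(logging.INFO)
logger.propagate = False
logger.addHandler(handler)

# Set the context once; every log call inside the block carries it.
token = _context.set({"flow": "validation", "source_file": "orders_1.csv"})
try:
    logger.error("schema check failed")
finally:
    _context.reset(token)

output = stream.getvalue()
```

Because the fields arrive as structured values rather than free text, they can be indexed and aggregated on the Kibana side.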

Conclusion

What did we love? We were able to experiment with new features really fast. The time to see a feature in action is really short, which allows stakeholders to test functionality at early stages. If you’re going to fail with a feature, you will fail quickly, which is really good.

What did we hate? The time to see a feature in action is NOT the time-to-market. Making a working NiFi flow production-ready usually takes longer than with other data processing technologies. It is good to make that clear in advance.