Time is often a critical factor that can make or break the success of any endeavor. In the context of machine learning, building a new model from scratch can be a challenging task, especially for companies with limited analytical resources. This process can stretch over several months, slowing down progress and impeding innovation.

However, by leveraging the power of low-code platforms, organizations can significantly accelerate the machine learning journey. These platforms provide efficient tools and streamlined workflows that enable faster development, testing and deployment of models. This not only saves valuable time but also empowers companies to stay ahead of the competition. By swiftly implementing ML models, organizations can seize opportunities, make data-driven decisions and ultimately gain a competitive advantage in the market. Such a proactive approach fosters innovation, propels growth and positions businesses as leaders in their respective industries.

Alongside AutoML solutions such as cloud-based platforms (Google Cloud AutoML, AzureML AutoML, Amazon SageMaker Autopilot), open-source platforms (Auto-Sklearn, H2O.ai, AutoGluon, etc.) and enterprise platforms (DataRobot, H2O Driverless AI, IBM Watson AutoAI), frameworks have emerged that greatly simplify the entire model-building process, from initial exploratory data analysis and preprocessing to model comparison, hyperparameter tuning and deployment. One such framework is PyCaret.

However, finalizing the model and deploying it in a production environment can often present challenges. This is precisely where BigQueryML and Inference Engine come to the rescue, streamlining the process of using the model in production. With both our data and the model residing in one place, namely BigQuery, scheduling and deploying the model becomes much simpler compared to alternative approaches.

In this blog post we aim to combine these two solutions, leveraging the power of both PyCaret and BigQueryML Inference Engine. Additionally, we will explore the use of ONNX and address the question of whether this integrated approach offers the easiest and most efficient way to train and implement a machine learning model.

PyCaret is one of the most popular low-code machine learning libraries in Python. Its primary objective is to simplify and automate the stages of the machine learning pipeline, such as data preprocessing, feature engineering, model selection, hyperparameter tuning, model evaluation and deployment. Thanks to its high-level interface, PyCaret lets users experiment with and apply sophisticated machine learning techniques in just a few lines of code. The library supports classification, regression, clustering, anomaly detection and time series analysis tasks. In our experience, PyCaret is remarkably efficient at automating initial, time-consuming tasks like exploratory data analysis (EDA) and feature engineering. It also offers appealing visualizations of model performance. Notably, PyCaret relies heavily on scikit-learn, and the final pipeline it creates is a scikit-learn pipeline. This was one of the key factors in selecting PyCaret for this tutorial, as it allows us to use ONNX; more details on this can be found below. Beyond scikit-learn, PyCaret also supports other libraries, such as XGBoost, LightGBM, CatBoost and imbalanced-learn, broadening the range of tools at the user's disposal.

ONNX (Open Neural Network Exchange) is an open format for exporting and sharing trained models between different machine learning frameworks and environments. It provides a standardized way to describe a trained model across platforms and programming languages, without extensive model conversion or code rewriting. For a few months now, it has also been possible to import such a model into BigQuery and run it with BigQueryML.

BigQueryML is a machine learning functionality integrated into BigQuery. It enables the training and deployment of machine learning models directly within BigQuery, eliminating the need to move data to external ML frameworks. With BigQueryML, users can leverage an SQL-like syntax to perform tasks such as data preprocessing, feature engineering, model training and evaluation. BigQueryML supports a variety of ML algorithms and techniques, including linear regression, logistic regression, k-means clustering, time series forecasting and many more.

The BigQueryML inference engine enables the execution of models trained in different environments. It supports the utilization of imported models in various formats such as ONNX, XGBoost and TensorFlow. Additionally, it allows the use of remotely hosted models on Vertex AI and pretrained state-of-the-art models. Importing models in an ONNX format seems to be especially valuable as it allows the incorporation of models trained in popular frameworks such as scikit-learn and PyTorch.

That was the theory; now let's see how our stack performs in practice on a real-world scenario. For this purpose, we use the publicly available GA4 dataset, which any user can access through BigQuery. Here is a script preparing the data. From this dataset we have selected 9 categorical columns:

'c_is_mobile', 'c_operating_system', 'c_browser', 'c_country', 'c_city', 'c_traffic_source', 'c_traffic_medium', 'c_traffic_campaign', 'c_is_first_visit',

along with 6 numerical columns:

'n_product_pages_viewed', 'n_total_hits', 'n_total_pageviews', 'n_total_visits', 'n_total_time_on_site'.

Our objective is to build a model that can predict whether a user will add an item to their cart.

Training a model using PyCaret is a straightforward process. First, you need to set up an experiment with the setup function, which initializes the training environment and creates the transformation pipeline. It must be called before any other PyCaret function. It has two mandatory parameters: data, which is the dataset, and target, which specifies the target variable for prediction. All other parameters are optional and allow for customization and fine-tuning.

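    from pycaret.classification import setup, compare_models, get_config

    # data, y, num and cat are assumed to come from the data preparation script
    s = setup(data,
              target=y,
              session_id=123,            # random seed for reproducibility
              train_size=0.8,            # 80/20 train/holdout split
              numeric_features=num,
              categorical_features=cat,
              numeric_imputation='mean',
              transformation=True,       # apply a power transform to the features
              normalize=True)            # rescale the features

At this stage, we encounter our first challenge, which will become more apparent later on. When we select the preprocessing steps, we lack complete control over the underlying processes. Consequently, we may end up with a pipeline that cannot be converted to the ONNX format later.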

Once the experiment setup is complete, users have the flexibility to choose from a diverse range of algorithms for training their models. There is a wide selection of models available from scikit-learn, and essentially any model with an API consistent with scikit-learn should work seamlessly. Therefore, we can incorporate highly effective algorithms such as XGBoost, LightGBM and CatBoost.

    models_to_compare = ['dummy', 'lr', 'knn',
                         'nb', 'dt', 'rbfsvm',
                         'mlp', 'rf', 'ada',
                         'gbc', 'lda', 'et',
                         'xgboost', 'lightgbm', 'catboost']

Now we can start the training and wait for the results.

    best = compare_models(sort='AUC', include=models_to_compare)

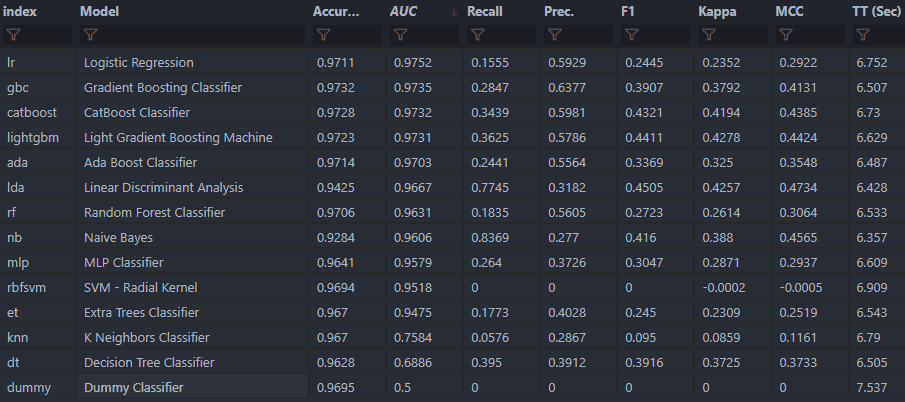

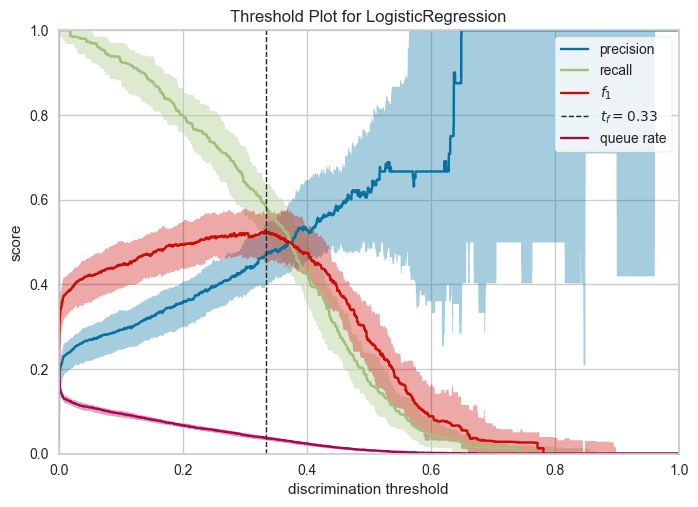

PyCaret automatically compares models using several metrics for classification, including Accuracy, AUC, Recall, Precision, F1, Kappa and MCC. In the previous step, 'AUC' was selected, so our "best" model is Logistic Regression.
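To make the ranking step more concrete, here is a minimal sketch of comparing a few candidates by cross-validated AUC using plain scikit-learn. It is not PyCaret's implementation: the synthetic dataset, the three candidate models and the 5-fold setting are all assumptions chosen for illustration.

```python
from sklearn.datasets import make_classification
from sklearn.dummy import DummyClassifier
from sklearn.linear_model import LogisticRegression
from sklearn.model_selection import cross_val_score
from sklearn.tree import DecisionTreeClassifier

# Synthetic stand-in for the GA4 features (assumption: not the real data).
X, y = make_classification(n_samples=500, n_features=10, random_state=123)

# A small, hypothetical subset of the candidates compared in the post.
candidates = {
    "dummy": DummyClassifier(strategy="prior"),
    "lr": LogisticRegression(max_iter=1000),
    "dt": DecisionTreeClassifier(random_state=123),
}

# Mean cross-validated AUC per candidate.
auc_by_model = {
    name: cross_val_score(model, X, y, cv=5, scoring="roc_auc").mean()
    for name, model in candidates.items()
}

# Rank from highest to lowest AUC, mirroring a leaderboard sorted by AUC.
ranking = sorted(auc_by_model, key=auc_by_model.get, reverse=True)
best_name = ranking[0]
print(ranking, round(auc_by_model[best_name], 4))
```

The dummy baseline scores an AUC of 0.5 because it assigns the same probability to every row, which is why it is a useful reference point in the leaderboard.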

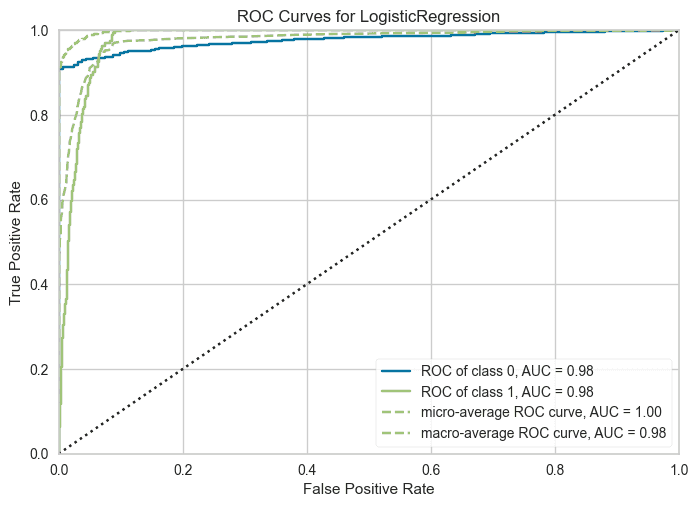

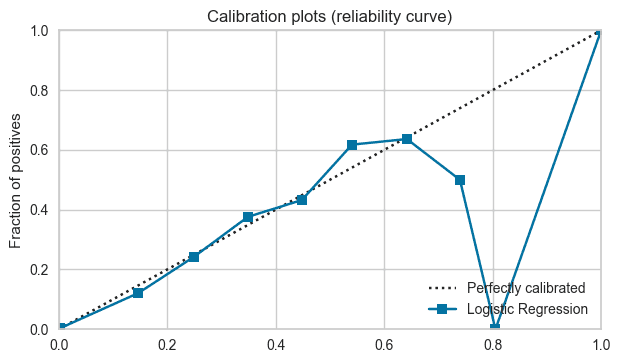

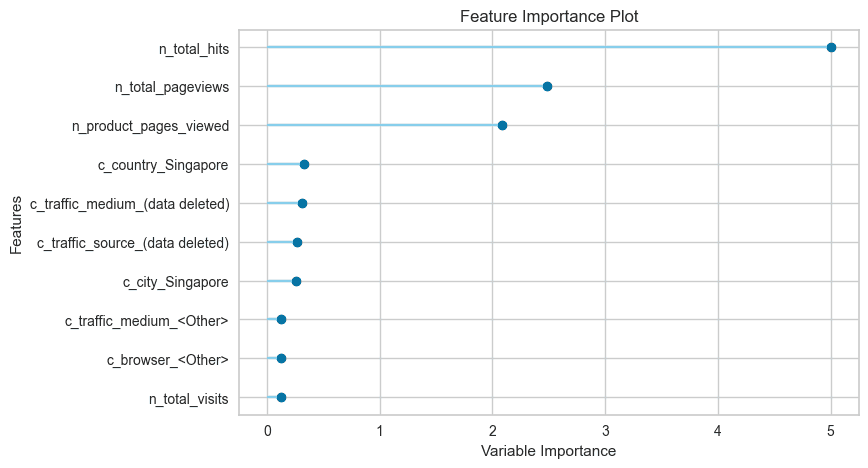

Additionally, PyCaret offers a variety of useful visualizations to aid in evaluating model performance. A full list of available visualizations can be found here, with a few examples below.

In the simplest scenario, we have a model ready. In the next step, we can convert it to ONNX format. To do this, we will use the skl2onnx library.

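    from skl2onnx import to_onnx

    # One training row is enough for skl2onnx to infer the input type and shape
    data_sample = get_config('X_train')[:1]
    # Disable ZipMap so probabilities come back as a plain array instead of a list of dicts
    options = {id(best): {'zipmap': False}}
    model_onnx = to_onnx(best, data_sample.to_numpy(), options=options)

After conversion we can check the predictions of the ONNX model and compare them with the predictions from PyCaret.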

    import pandas as pd
    from onnxruntime import InferenceSession

    sess = InferenceSession(model_onnx.SerializeToString())
    X_test = get_config('X_test_transformed').to_numpy()
    # Outputs are [labels, probabilities]; 'X' is the input name assigned by to_onnx
    predictions_onnx = sess.run(None, {'X': X_test})
    # Probability of the positive class for the first 10 rows
    prob_onnx = predictions_onnx[1][0:10][:, 1]

    pd.options.display.float_format = '{:.4f}'.format
    # pred_holdout is assumed to be the holdout output of predict_model(best, raw_score=True)
    compare_predictions = pd.DataFrame({'PyCaret': pred_holdout.prediction_score_1[0:10], 'ONNX': prob_onnx})

           PyCaret    ONNX
    18055   0.0000  0.0000
    8830    0.0000  0.0000
    19802   0.0000  0.0000
    6131    0.0000  0.0000
    3491    0.3542  0.3542
    15067   0.0000  0.0000
    198     0.0000  0.0000
    9960    0.0011  0.0011
    15776   0.0006  0.0006
    3950    0.0004  0.0004

Now our model is converted to ONNX format and can be saved and used on other platforms, for example on BigQueryML.
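Before saving, the agreement between the two sets of predictions can also be asserted programmatically instead of being read off the table. The sketch below uses the ten rounded values printed above; the tolerance of 1e-4 is an assumption matching the four displayed decimals, not a value from the original notebook.

```python
import numpy as np

# Positive-class probabilities for the ten holdout rows shown in the table above.
prob_pycaret = np.array([0.0000, 0.0000, 0.0000, 0.0000, 0.3542,
                         0.0000, 0.0000, 0.0011, 0.0006, 0.0004])
prob_onnx = np.array([0.0000, 0.0000, 0.0000, 0.0000, 0.3542,
                      0.0000, 0.0000, 0.0011, 0.0006, 0.0004])

# Largest absolute disagreement between the two runtimes.
max_abs_diff = np.max(np.abs(prob_pycaret - prob_onnx))

# atol (an assumed tolerance) absorbs small floating-point differences.
predictions_match = np.allclose(prob_pycaret, prob_onnx, atol=1e-4)
print(max_abs_diff, predictions_match)
```

In a real run, the two arrays would hold the full holdout probabilities from PyCaret and from the ONNX session rather than ten hard-coded values.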

    with open("ga4.onnx", "wb") as f:
        f.write(model_onnx.SerializeToString())

To load a model into BigQueryML, it must first be copied to Cloud Storage in Google Cloud Platform. Then use the CREATE MODEL statement, specifying the location of the model in the bucket and the target location in BigQuery.

    CREATE MODEL `project_id.mydataset.ga4_pycaret`
    OPTIONS(MODEL_TYPE='ONNX',
            MODEL_PATH="gs://…/ga4.onnx")

Unfortunately, the scenario shown above is a gross oversimplification of a real use case. Although PyCaret is largely based on scikit-learn, some of its preprocessing steps rely on other libraries. For example, in our use case it would be useful to balance the dataset, but PyCaret uses a different library (imbalanced-learn) for this purpose. As a result, when trying to convert to ONNX, we encounter an error telling us that the transformation is not supported or that the data type is wrong. The same is true for any data operation that changes the number of columns in our dataset, even if we use a scikit-learn function for it.

RuntimeError:

There is a way to solve this problem which involves implementing a custom converter, but in this case, the use of PyCaret is no longer justified. The main goal of using this framework was to simplify and speed up the development of the model as much as possible, rather than making it more complicated. The process of creating the model will become much longer, with no guarantee of final success.

When working with machine learning, it's common for the initial dataset to differ from the one used during model training due to imputation, encoding or other preprocessing steps. It's crucial to remember that all transformations applied during model building must be replicated in the prediction pipeline, ensuring the same data structure is maintained. Let's consider an example where a categorical variable introduces a new level after the model's production run. In this scenario, simply applying one-hot encoding to the new data is insufficient. It is necessary to employ the encoder that was used during training, which stores information about the expected columns to prevent any issues with model execution. By maintaining consistency in the preprocessing pipeline, we can ensure accurate predictions and avoid potential disruptions caused by changes in the dataset.
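The point about reusing the training-time encoder can be illustrated with a minimal scikit-learn sketch. The browser values are toy data, and `handle_unknown="ignore"` is one possible policy for unseen levels, chosen here as an assumption rather than taken from the original pipeline.

```python
import numpy as np
from sklearn.preprocessing import OneHotEncoder

# Training data: the browser levels known when the model was built (toy values).
train_browsers = np.array([["Chrome"], ["Safari"], ["Firefox"]])

# Fit once at training time; handle_unknown="ignore" encodes unseen levels as all zeros.
encoder = OneHotEncoder(handle_unknown="ignore")
encoder.fit(train_browsers)

# Production data contains "Edge", which did not exist during training.
new_browsers = np.array([["Safari"], ["Edge"]])
encoded = encoder.transform(new_browsers).toarray()

print(encoder.categories_[0])  # levels learned at training time
print(encoded)                 # one column per training level, new levels add none
```

Because the fitted encoder remembers the three training levels, the output keeps three columns and the model receives the structure it was trained on. Re-running one-hot encoding from scratch on the new data would instead produce a column for "Edge" and none for "Chrome" or "Firefox".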

Returning to our use case: so far we have skipped over the preprocessing of the categorical and date-type variables in the selected dataset. Since these operations change the shape of the dataframe, the preprocessing must be done at an earlier stage, outside the pipeline that is converted to ONNX. If we want to use our model in BigQueryML, we must replicate this preprocessing in BigQuery. While this is possible for one-hot encoding, it is not necessarily the case for more complex operations.
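As a rough illustration of replicating one-hot encoding in BigQuery, the sketch below generates the SQL expressions from the category levels seen during training. The helper `one_hot_sql`, the example levels and the table name `ga4_features` are hypothetical; only the `IF(condition, 1, 0)` pattern is standard BigQuery SQL.

```python
import re
from typing import List


def one_hot_sql(column: str, categories: List[str]) -> List[str]:
    """Build one BigQuery SELECT expression per training-time category."""
    expressions = []
    for category in categories:
        # Escape backslashes and single quotes for a BigQuery string literal.
        literal = category.replace("\\", "\\\\").replace("'", "\\'")
        # Derive a safe column alias from the category value.
        suffix = re.sub(r"[^0-9A-Za-z_]", "_", category)
        expressions.append(f"IF({column} = '{literal}', 1, 0) AS {column}_{suffix}")
    return expressions


# Hypothetical levels of c_traffic_medium seen during training.
training_levels = ["organic", "cpc", "(none)"]
select_list = ",\n  ".join(one_hot_sql("c_traffic_medium", training_levels))
# Hypothetical source table name.
query = f"SELECT\n  {select_list}\nFROM `project_id.mydataset.ga4_features`"
print(query)
```

Generating the expressions from the stored training levels keeps the column set and order fixed, so a level that appears later in production simply yields zeros in every generated column.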

It is unfortunate to note that while PyCaret has impressive capabilities, there is currently a limitation in its compatibility with ONNX. Enhancing the integration between these two technologies would significantly amplify PyCaret's commercial potential.

In conclusion, PyCaret offers numerous benefits for simplifying and streamlining the machine learning model creation process. It provides tools for preprocessing data, conducting feature engineering, feature selection and a performance comparison of various models. The library also offers appealing visualizations to analyze model performance comprehensively. PyCaret offers a wide range of algorithms, enabling easy model blending and stacking. Thanks to comprehensive documentation and simplified workflow, it can be a very good tool for beginners.

On the downside, PyCaret suffers from limitations such as using global scope for experiment setup, issues with reproducibility, limited transparency in tool functioning and reduced control over transformations. The lack of probabilities for some default algorithms makes techniques such as model stacking impossible to use. Furthermore, PyCaret's abstraction of the scikit-learn pipeline poses difficulties in accessing and modifying it, while converting the pipeline to ONNX format proves challenging in specific scenarios involving multiple variable types or exceeding default transformation steps. Despite these drawbacks, PyCaret remains a valuable resource for ML practitioners, offering a convenient framework for quick experimentation.

Regarding the Inference Engine in BigQueryML, the process of transferring a model is straightforward, and it supports a wide range of formats. Once a model is moved, it can be seamlessly utilized in a manner similar to the models trained within BigQueryML.
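For example, an imported model is queried with `ML.PREDICT`, the same function used for models trained in BigQueryML. The sketch below only builds the query string; the helper `build_predict_query` and the input table name are hypothetical, and the input table is assumed to expose columns that match the model's input names and types.

```python
def build_predict_query(model: str, input_table: str) -> str:
    """Return a BigQuery ML.PREDICT statement for an imported model."""
    return (
        "SELECT *\n"
        f"FROM ML.PREDICT(MODEL `{model}`,\n"
        f"                TABLE `{input_table}`)"
    )


query = build_predict_query(
    "project_id.mydataset.ga4_pycaret",      # model created earlier in this post
    "project_id.mydataset.ga4_model_input",  # hypothetical input table
)
print(query)
```

The resulting statement can be run in the BigQuery console or scheduled like any other query.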

However, it is important to be aware of certain limitations when working with the inference engine in BigQueryML. Most AutoML solutions do not generate scikit-learn pipelines, making them incompatible with ONNX. Additionally, while ONNX can convert a wide range of scikit-learn functions, some remain unsupported. The list of supported functions continues to expand, but for those that are still unsupported, custom converters need to be created. Consequently, transferring a model to the inference engine may require more effort than training it directly in BigQuery. It is crucial to consider these limitations and trade-offs when using the inference engine for model transfer and deployment in BigQueryML.

Here you can find a notebook with our case.

