It was epic: the 10th edition of the Big Data Tech Warsaw Summit, one of the most tech-oriented data conferences in the field. Attending Big Data Tech Warsaw over the last 10 years has made it possible to track data trends. What was the main focus of each edition, and which topics and concepts dominated the stages? The answers have always reflected global trends.

The data landscape has evolved over the last 9 years. Since the 1st edition of the conference, new trends have emerged, such as Cloud, DataOps, MLOps, Data Democratisation, Data Mesh, Data Lakehouse and, most recently, AI and GenAI. While several technologies remain very relevant (e.g. Kafka, Spark and Flink), many others are hardly used anymore (e.g. Pig, Crunch or Drill). Even more have successfully entered the market, e.g. Snowflake, Databricks, BigQuery, dbt and Iceberg. It’s difficult to predict what topics will be presented at the 20th edition of the Big Data Tech Warsaw Summit, but data & AI will definitely be used by more and more companies, in more and more use cases, not only by data-driven companies, but also by AI-native ones.

Coming back to the trend barometer mentioned above: in previous editions, companies boasted about cloud migrations and cloud-agnostic solutions. Later, data governance became a very hot topic, especially the Data Mesh architecture. Then came the polarizing topic of generative AI and discussions about the future of IT. At this year's edition, AI was still prominent, but a lot of space was also dedicated to data contracts and data quality. In this blog post we share the main takeaways from selected talks presented at Big Data Tech Warsaw this year.

Maciej Maciejko, Staff Data Engineer at GetInData

I enjoy the diversity at the Big Data Tech Warsaw conferences. Attendees have the option to choose between business presentations and more technical talks.

One notable representative of the latter category was Giuseppe Lorusso (Senior Data Engineer at Agile Lab), who presented a case study titled "Don't trust the tip of the iceberg", focusing on the migration from Lambda to Kappa Architecture.

As you might have guessed from the presentation’s title, the transition from Parquet to the Iceberg table format was just the initial step. Without a proper approach, Spark with Iceberg wouldn’t have been capable of continuously merging 100GB of change data capture events daily into a 65TB table.

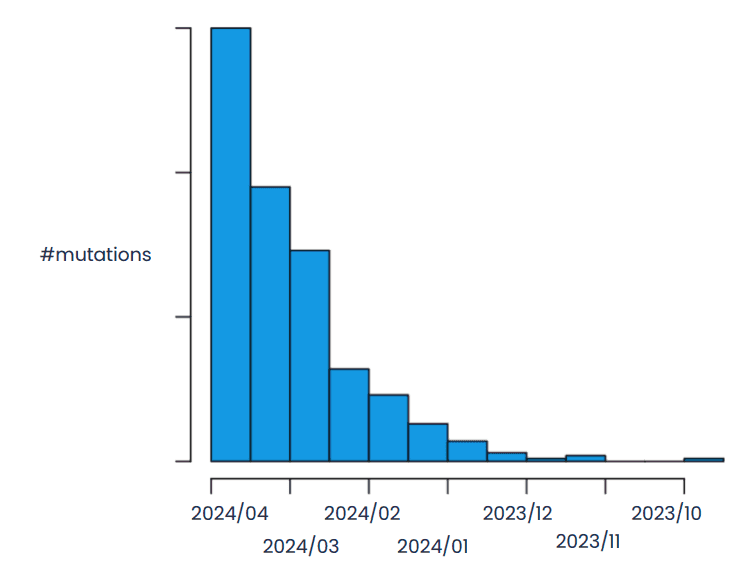

How does one manage such a massive table? Understanding your data and technological limitations is key. Giuseppe analyzed how mutations (updates) were distributed across the table in order to partition it into hot segments (affected by many updates) and cold segments.
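The hot/cold split can be illustrated with a minimal sketch. This is not the analysis from the talk: it assumes a hypothetical list of change events tagged with a partition value, and the function name `classify_partitions` and the `hot_threshold` parameter are made up for illustration.

```python
from collections import Counter


def classify_partitions(update_events, all_partitions, hot_threshold):
    """Split partitions into hot and cold sets by the number of updates seen."""
    updates_per_partition = Counter(event["partition"] for event in update_events)
    # A partition is "hot" when it received at least `hot_threshold` updates.
    hot = {p for p in all_partitions if updates_per_partition[p] >= hot_threshold}
    cold = set(all_partitions) - hot
    return hot, cold


# Hypothetical sample of change data capture events (illustrative only).
events = [
    {"partition": "2024-04", "key": 1},
    {"partition": "2024-04", "key": 2},
    {"partition": "2024-04", "key": 3},
    {"partition": "2023-11", "key": 4},
]
partitions = ["2023-10", "2023-11", "2024-04"]

hot, cold = classify_partitions(events, partitions, hot_threshold=2)
print("hot:", sorted(hot))
print("cold:", sorted(cold))
```

In practice the threshold would come from studying the real update distribution rather than from a fixed constant.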

This segmentation made it possible to apply the most suitable merge strategy to each segment, including copy-on-write and merge-on-read techniques. Subsequent optimization focused on file pruning: minimizing the amount of data touched by each operation by adjusting the table’s partitioning and bucketing, and forcing Spark to apply partition filters.

If data shuffling remains a problem, consider adopting the new join strategy: Storage Partitioned Join (introduced in Spark 3.3). Writing the incoming data to storage first, with the same layout as the target table, enables Spark to execute the join without shuffling data!
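The idea behind a shuffle-free join can be sketched in plain Python. This is a conceptual illustration only, not Spark or Iceberg code: `NUM_BUCKETS`, `bucket_of`, `write_bucketed` and `bucketwise_join` are hypothetical names, and the sketch assumes both tables are bucketed on the join key with the same function and that `id` is unique in the change set.

```python
from collections import defaultdict

NUM_BUCKETS = 4  # assumption: both tables use the same bucket count


def bucket_of(key):
    # Both tables must apply the same bucketing function to the join key.
    return key % NUM_BUCKETS


def write_bucketed(rows):
    """Simulate writing a table to storage, bucketed by the 'id' column."""
    buckets = defaultdict(list)
    for row in rows:
        buckets[bucket_of(row["id"])].append(row)
    return buckets


def bucketwise_join(left_buckets, right_buckets):
    """Join matching buckets pairwise; rows never move between buckets."""
    joined = []
    for b in range(NUM_BUCKETS):
        right_by_id = {r["id"]: r for r in right_buckets.get(b, [])}
        for left_row in left_buckets.get(b, []):
            match = right_by_id.get(left_row["id"])
            if match is not None:
                joined.append({**left_row, **match})
    return joined


# Hypothetical target table and change set.
target = write_bucketed([
    {"id": 1, "value": "a"},
    {"id": 2, "value": "b"},
    {"id": 5, "value": "c"},
])
changes = write_bucketed([
    {"id": 2, "new_value": "B"},
    {"id": 5, "new_value": "C"},
    {"id": 9, "new_value": "Z"},
])

result = sorted(bucketwise_join(target, changes), key=lambda r: r["id"])
print(result)
```

Because each bucket of one table only ever needs the same-numbered bucket of the other, no redistribution of rows is required before joining.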

The takeaway from this presentation is clear: while writing SQL queries may be straightforward, effectively managing databases requires careful consideration of various factors. Despite the challenges, Iceberg still rules!

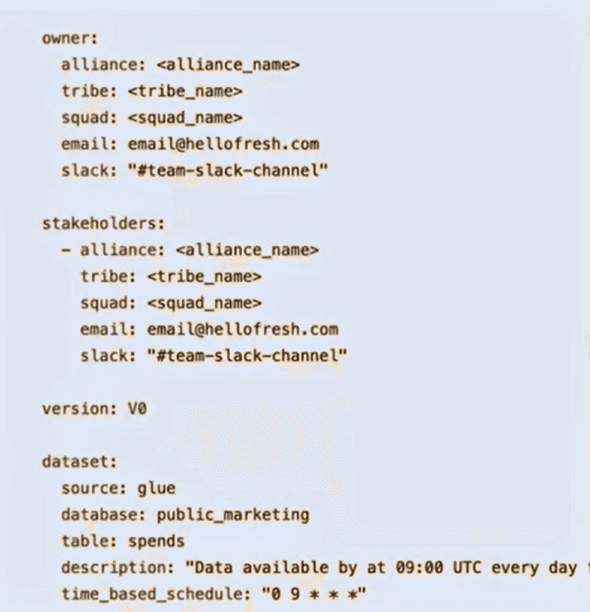

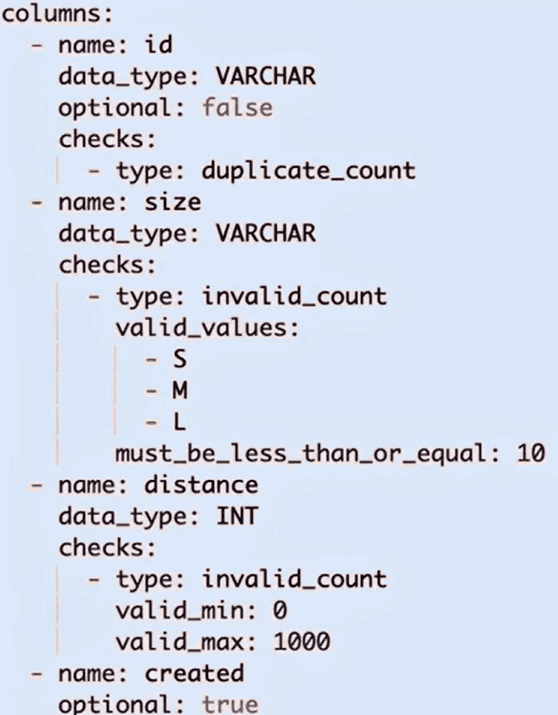

Are you strapped for time when it comes to grocery shopping? Struggling to come up with delicious and healthy meal ideas for you and your family every day? HelloFresh can help you! Meal-kit subscription services are gaining popularity worldwide. Let's delve into the numbers. In 2023 alone, over 1 billion meals were delivered, featuring more than 12,000 recipes, all made possible by a workforce of around 20,000 employees. Such a massive scale demands well-defined data contracts. Building trust in data through automatable data contracts was the focus of the presentation by Max Schultze (Associate Director of Data Engineering at HelloFresh) and Abhishek Khare (Staff Data Engineer).

It all began with soft contracts, typically in the form of signed-off paper documents, which were necessary for business purposes. However, expectations were often misaligned, enforcement was inconsistent and these contracts quickly became outdated. Some teams made efforts to create technical data quality checks to ensure that the data met the expectations, but this process required deep technical knowledge. Additionally, tasks such as scheduling, monitoring and alerting had to be implemented internally. That was the trigger for building automated data contracts: a platform for aligning expectations without requiring technical knowledge, using a unified, built-in methodology.

How does it work? Firstly, the data owner creates a contract on GitHub, which undergoes pre-validation. In the next step, the contract is published, generating Airflow DAGs and Prometheus alerts.

Checks against Service Level Objectives (SLOs) can be scheduled via cron or triggered by a signal. Each execution concludes by notifying customers in the event of success or triggering alerts if the data fails to meet requirements.
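To make the flow more concrete, here is a minimal sketch of a contract with pre-validation and a quality check. It is not the HelloFresh contract format, which the talk summary does not specify: the `DataContract` fields, the `pre_validate` and `check` functions and the sample dataset are all hypothetical.

```python
from dataclasses import dataclass, field


@dataclass
class DataContract:
    dataset: str
    owner: str
    schedule: str  # cron expression, e.g. "0 6 * * *"
    required_columns: list = field(default_factory=list)
    not_null: list = field(default_factory=list)
    min_row_count: int = 0


def pre_validate(contract):
    """Static checks on the contract itself, run before it is published."""
    errors = []
    if not contract.owner:
        errors.append("owner is required")
    if len(contract.schedule.split()) != 5:
        errors.append("schedule must be a 5-field cron expression")
    unknown = set(contract.not_null) - set(contract.required_columns)
    if unknown:
        errors.append(f"not_null refers to undeclared columns: {sorted(unknown)}")
    return errors


def check(contract, rows):
    """Run the contract's expectations against data; return violations."""
    violations = []
    if len(rows) < contract.min_row_count:
        violations.append(f"expected at least {contract.min_row_count} rows")
    for col in contract.required_columns:
        if any(col not in row for row in rows):
            violations.append(f"missing column: {col}")
    for col in contract.not_null:
        if any(row.get(col) is None for row in rows):
            violations.append(f"null values in column: {col}")
    return violations


# Hypothetical contract and data.
contract = DataContract(
    dataset="recipes",
    owner="menu-team",
    schedule="0 6 * * *",
    required_columns=["recipe_id", "name"],
    not_null=["recipe_id", "name"],
    min_row_count=1,
)
rows = [{"recipe_id": 1, "name": "Pasta"}, {"recipe_id": 2, "name": None}]

contract_errors = pre_validate(contract)
violations = check(contract, rows)
print("alert" if violations else "success", violations)
```

In a real platform the result of `check` would feed the notification and alerting steps instead of a `print` call.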

Data quality becomes simple! There is no need for technical knowledge, as monitoring and alerting are provided out of the box. A clear definition of expectations and guarantees helps when working with data at scale!

Big Data Tech Warsaw also offers practical guides, such as the talk by Abdul Rehman Zafar (Solution Architect at Ververica) titled "Real-time Clickstream Analytics on E-commerce Website Data using Ververica Cloud".

Web applications track users' activities, providing clickstream data. This data can be leveraged to increase business revenue through sales optimization or by enhancing the user experience. Clickstream data offers insights into user behavior, patterns and preferences. Real-time analysis of clickstream data is particularly valuable in e-commerce, aiding in stock supply management, fraud detection, personalized product recommendations and more.

When it comes to processing streaming data, Flink is the go-to solution. And to bring streaming capabilities to your business more easily, you can try Ververica Cloud!

Abdul demonstrated how easy it is to use Flink SQL features such as connectors, aggregations and sliding windows (the HOP function) in Ververica Cloud to analyze clickstream data and integrate it with other systems.
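The behavior of a sliding (hopping) window can be sketched in plain Python. This is a conceptual illustration, not Flink code and not the demo from the talk: the function names, the integer timestamps in seconds and the sample events are assumptions. Each event falls into every window of length `size` whose start is a multiple of `slide` and which covers the event's timestamp.

```python
from collections import Counter


def hop_windows(ts, slide, size):
    """Return start times of all windows [start, start + size) containing ts."""
    start = ts - ts % slide  # latest window start at or before ts
    starts = []
    while start > ts - size:
        starts.append(start)
        start -= slide
    return sorted(starts)


def count_clicks(events, slide, size):
    """Count clicks per (window start, page) over hopping windows."""
    counts = Counter()
    for event in events:
        for window_start in hop_windows(event["ts"], slide, size):
            counts[(window_start, event["page"])] += 1
    return counts


# Hypothetical clickstream events with timestamps in seconds.
events = [
    {"ts": 65, "page": "/cart"},
    {"ts": 70, "page": "/cart"},
    {"ts": 100, "page": "/home"},
]

# 60-second windows that advance every 30 seconds.
counts = count_clicks(events, slide=30, size=60)
for (window_start, page), n in sorted(counts.items()):
    print(window_start, page, n)
```

Because the slide is shorter than the window size, each event is counted in two overlapping windows here, which is what distinguishes a hopping window from a tumbling one.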

Ververica Cloud is a complete solution that provides lifecycle management, high availability, data lineage, enterprise security, autopilot (job auto-scaling), observability and more. It can significantly boost your e-commerce business!

Michał Kardach, Staff Data Engineer at GetInData

At the Big Data Tech Warsaw conference, Juliana Araujo, a Group Product Manager at Spotify with extensive experience in data product management, delivered an enlightening presentation on Spotify's strategies and policies aimed at reducing its greenhouse gas emissions. The focus was on practical measures and the company's commitment to sustainability within its operations.

Spotify has set ambitious environmental targets, centering on achieving net-zero greenhouse gas emissions and promoting greater ecological awareness and engagement among its stakeholders. Juliana detailed the company's incremental approach, emphasizing the importance of specific and measurable objectives. She revealed that the majority of Spotify's emissions stem from marketing, user end-use and cloud operations.

A significant part of the presentation was dedicated to Spotify’s operational strategies. The company has dedicated specific roles, such as program managers, and events, such as hack weeks, to drive its green initiatives. Tools like emission footprint dashboards and gamified elements that equate activities to an equivalent number of flights are used to make the data relatable and encourage proactive behavior among employees and users alike.

As a company primarily using Google Cloud Platform (GCP), Spotify has taken targeted actions to decrease its cloud-related emissions. Noteworthy strategies include migrating to greener GCP regions and adopting carbon-conscious policies at an organizational level, limiting operations to regions with lower carbon emissions. An impactful move was the activation of autoscaling in Bigtable, which significantly reduced emissions by optimizing resource use. Furthermore, implementing lifecycle rules in Google Cloud Storage has helped minimize data storage and retrieval footprints.
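As an illustration of what a storage lifecycle policy can look like, the sketch below builds a lifecycle configuration in the JSON shape used by Google Cloud Storage. The rules themselves are assumptions for the sake of example (the 90-day and 365-day ages and the `COLDLINE` class are arbitrary); they are not Spotify's actual settings.

```python
import json

# Hypothetical policy: move objects to colder storage after 90 days
# and delete them after a year. Ages are in days since object creation.
lifecycle_config = {
    "rule": [
        {
            "action": {"type": "SetStorageClass", "storageClass": "COLDLINE"},
            "condition": {"age": 90},
        },
        {
            "action": {"type": "Delete"},
            "condition": {"age": 365},
        },
    ]
}

lifecycle_json = json.dumps(lifecycle_config, indent=2)
print(lifecycle_json)
```

The printed JSON could be saved to a file and applied to a bucket with the Cloud Storage tooling; retention periods should of course follow the actual data retention and compliance requirements.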

Juliana candidly addressed the inherent challenges of balancing profitability and sustainability. She pointed out the positive correlation between eco-friendly practices in the GCP environment and cost reductions, which support long-term sustainable growth. Additionally, compliance with regulations like GDPR and the need to consider environmental impacts in data retention and backup strategies are crucial to achieving the net-zero goal.

The presentation concluded with a forward-thinking perspective on the challenges posed by the burgeoning field of AI, particularly generative AI. Juliana stressed the importance of approaching the environmental impacts of AI with caution and awareness, as this represents a new frontier in Spotify’s sustainability efforts.

Spotify's presentation at Big Data Tech Warsaw provided a comprehensive view of its committed approach to reducing its environmental footprint. The company's detailed strategies, from cloud computing optimizations to internal policy reforms, demonstrate a robust commitment to sustainability. These efforts not only aim to mitigate climate impact but also align closely with operational efficiencies and regulatory compliance, showcasing a model for integrating environmental responsibility into core business practices.

Sergey Enin, an Engineering Manager for the Data Platform at Dropbox, delivered an insightful presentation on how Dropbox is addressing big data challenges through innovative data architecture strategies. The talk focused on the integration of Databricks and Delta Lake to enable a Data Mesh architecture, providing a comprehensive solution for the escalating complexities in data management driven by AI advancements.

Sergey opened his presentation by tracing the evolution of big data, starting from the foundational principles of velocity, variety and volume. He illustrated how the expansion of these dimensions led to the adoption of the Hadoop ecosystem and the subsequent big data hype. Today, as AI introduces new data challenges, organizations like Dropbox are reassessing their infrastructure and methodologies.

A significant portion of the talk was dedicated to the challenges inherent in maintaining on-premise Hadoop clusters. Sergey highlighted issues such as high compute and storage costs, the creation of data silos, and the complexities of maintaining such ecosystems. He also touched on the pitfalls of centralized data governance, which can become a bottleneck.

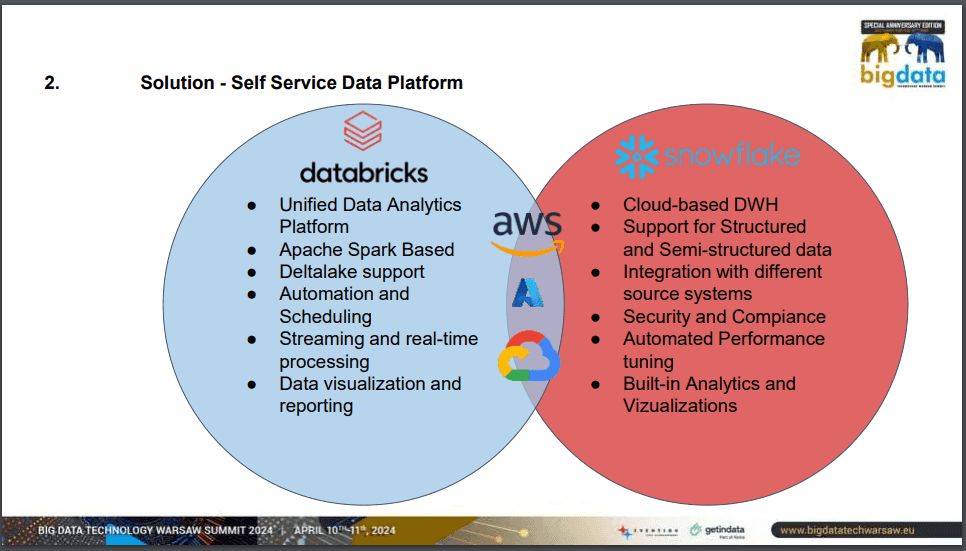

Sergey described the migration of data systems to the cloud as a fundamental step in addressing these challenges. Dropbox's approach involves leveraging tools like Databricks for analytics-driven firms and Snowflake for those focusing on data warehousing. This strategic utilization of cloud services is aimed at optimizing costs and efficiency.

The core of Sergey’s presentation was the concept of a Data Mesh, which he described as a transformative approach to overcoming issues of centralization and silos. By treating data as a product, promoting domain ownership and fostering federated governance, Dropbox aims to establish a self-service data platform. This approach underscores the transition from data management as a function to data as a strategic asset.

Sergey provided a detailed breakdown of the data governance framework, which includes data discovery, lineage, documentation, ownership and quality. He stressed the importance of each component in ensuring that data moves around the organization securely and efficiently. In terms of observability, he discussed the necessity of monitoring infrastructure, financial operations, data flow and usage, not only for surveillance but also for proactive issue resolution.

An interesting aspect of the presentation was the emphasis on Quality of Service (QoS) within the data ecosystem. Sergey advocated for practices such as documenting data tables, setting policies for unnecessary data and automating communications for data documentation, to maintain a clean and reliable data environment.

Moreover, he urged companies to nurture a culture that views data as a crucial business asset, build trust around data ownership and continuously engage with stakeholders to refine data strategies, supported by executive sponsorship.

Sergey’s presentation at the Big Data Tech Warsaw conference provided valuable insights into Dropbox’s strategic approach to big data challenges. By integrating advanced cloud solutions and promoting a data-centric culture, Dropbox is paving the way to more efficient and scalable data architectures. Attendees left with a clear understanding of how embracing a Data Mesh framework and robust data governance can profoundly impact an organization's ability to leverage big data in the age of AI.

If you missed the Big Data Tech Warsaw Summit, our reviewers have done their best to bring you the most valuable pieces of it. And this is only the first part of the review. Next week you will read about a personal LLM and RAG-backed Data Copilot and about high-quality data products at Allegro (sign up for our newsletter so you don't miss it).

But that's not the only thing that will help wipe away your tears, because another opportunity to attend top industry presentations live, along with networking meetings and discussions, is coming up. This year, DataMass will occupy one of the stages at the biggest tech conference in Central and Eastern Europe: InfoShare. It will take place on 22-23 May at the Polish seaside in Gdańsk! The DataMass Stage is for those of you who use the cloud in your daily work to solve Data, Data Science, Machine Learning and AI problems. Furthermore, InfoShare offers even more on the other 7 stages. Check out the details and register here. Hopefully, see you in May in one of the most beautiful cities in Poland!

And, as is tradition, we invite you to Warsaw next year. For more insights from the conference, follow the conference profile on LinkedIn.
