The 8th edition of the Big Data Tech Summit left us reflecting on the trends and changes in Big Data, which clearly resonated in many presentations. It prompted us to take stock, review and reflect on many issues in the projects we are running. Furthermore, it inspired us to consider which direction to look in the near future. You can find the first part of these reflections, along with a list of the new Big Data trends, here: A Review of the Presentations at the Big Data Technology Warsaw Summit 2022!

This blog post is the second part of the BDTW Summit review. We will share interesting analyses of two conference tracks: “Architecture Operations & Cloud” and “Artificial Intelligence and Data Science”. You can also check out the top three rated presentations of the entire conference.

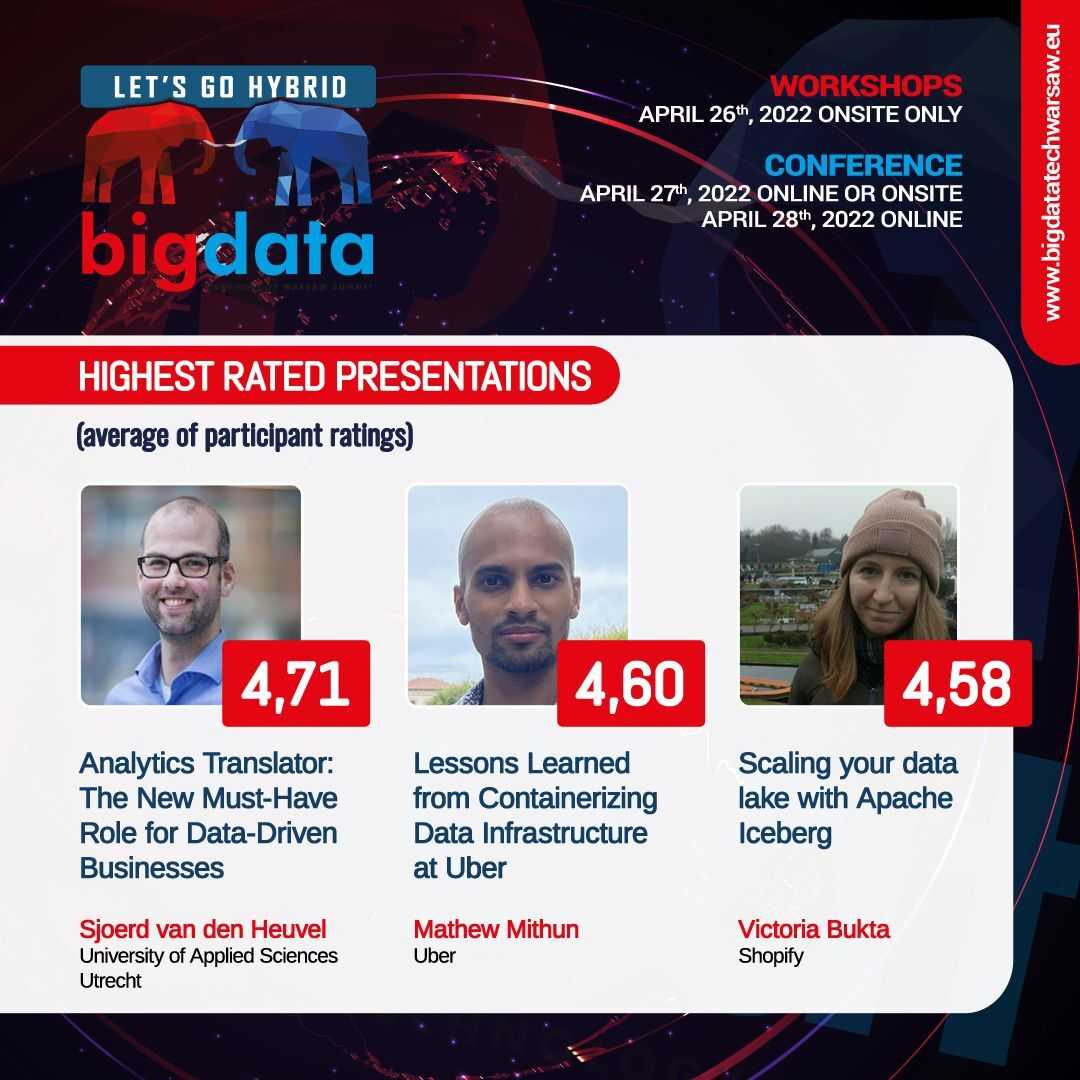

Without further ado, here are the top three listener-rated presentations along with short takeaways.

Takeaway:

“Analytics Translators bridge the gap between data expertise on the one hand and business expertise on the other. They help to transfer strategic business objectives to data professionals and ensure that data solutions facilitate evidence-based decision-making. In this way they contribute to the learning capacity of organizations and to the realization of sustainable strategic value.” (The University of Applied Sciences Utrecht, 2019)

Takeaway: Containerizing data infrastructure is a step toward fully automated, self-healing data infrastructure. Uber has launched technologies such as HDFS, YARN, Kafka, Presto, Pinot, Flink and many others on Kubernetes clusters.

More on this trend in Radosław's review below.

Takeaway: Shopify uses a mix of open-source and cloud-native technologies such as Apache Iceberg, Debezium, Kafka and GCP. Apache Iceberg provides the flexibility to change data under the hood without impacting teams. Shopify collects data from various sources, stores it in a central location and uses Apache Iceberg as the format for analytical tables. Iceberg makes it possible to rewrite data sets to avoid expensive merge-on-read operations.
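
To make the merge-on-read point concrete, here is a minimal, library-free Python sketch of the trade-off under a toy table model (an assumption, not how Iceberg stores files): row-level deletes are recorded in small delete files, so every scan has to merge them with the data files, while a rewrite applies the deletes once and leaves clean data files behind. The `Table` class and its methods are hypothetical and illustrative only; they are not Iceberg's API. In Iceberg itself, this kind of compaction is exposed through the `rewrite_data_files` maintenance procedure.

```python
from dataclasses import dataclass, field


@dataclass
class Table:
    """Toy table with row-level deletes; illustrative only, not Iceberg's API."""

    data_files: dict[str, list[dict]]  # data file name -> rows in that file
    # Each delete file is a set of (data file name, row position) pairs to drop.
    delete_files: list[set[tuple[str, int]]] = field(default_factory=list)

    def delete(self, file: str, positions: list[int]) -> None:
        # Merge-on-read write path: record deletes cheaply, leave data files untouched.
        self.delete_files.append({(file, p) for p in positions})

    def scan(self) -> list[dict]:
        # Every read pays the cost of merging all pending delete files.
        deleted = set().union(*self.delete_files)
        return [
            row
            for name, rows in self.data_files.items()
            for pos, row in enumerate(rows)
            if (name, pos) not in deleted
        ]

    def rewrite(self) -> None:
        # Rewrite/compaction: apply deletes once and write a single clean data file.
        self.data_files = {"compacted-0": self.scan()}
        self.delete_files = []


t = Table({"f1": [{"id": 1}, {"id": 2}], "f2": [{"id": 3}]})
t.delete("f1", [0])  # drop the row with id=1
t.delete("f2", [0])  # drop the row with id=3
before = t.scan()    # merges two delete files at read time
t.rewrite()
after = t.scan()     # no delete files left to merge
print(before, after, len(t.delete_files))
```

The rewrite costs one full pass over the affected data, but after it every subsequent read skips the merge step entirely, which is the reason to compact tables that receive many row-level changes.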

Radosław Szmit, Data Architect at GetInData

I had the pleasure of attending presentations from the “Architecture Operations & Cloud” track. The presented ideas and technologies can be divided into several categories.

The first hotly discussed topic was building Big Data platforms on Kubernetes clusters. This fairly new but rapidly growing trend involves running all the tools of the data analytics world as containerized applications. One of the world's pioneers is Uber, which has launched technologies such as HDFS, YARN, Kafka, Presto, Pinot, Flink and many others on Kubernetes, as described in the presentation “Lessons Learned from Containerizing Data Infrastructure at Uber” by Mathew Mithun (Sr Software Engineer at Uber).

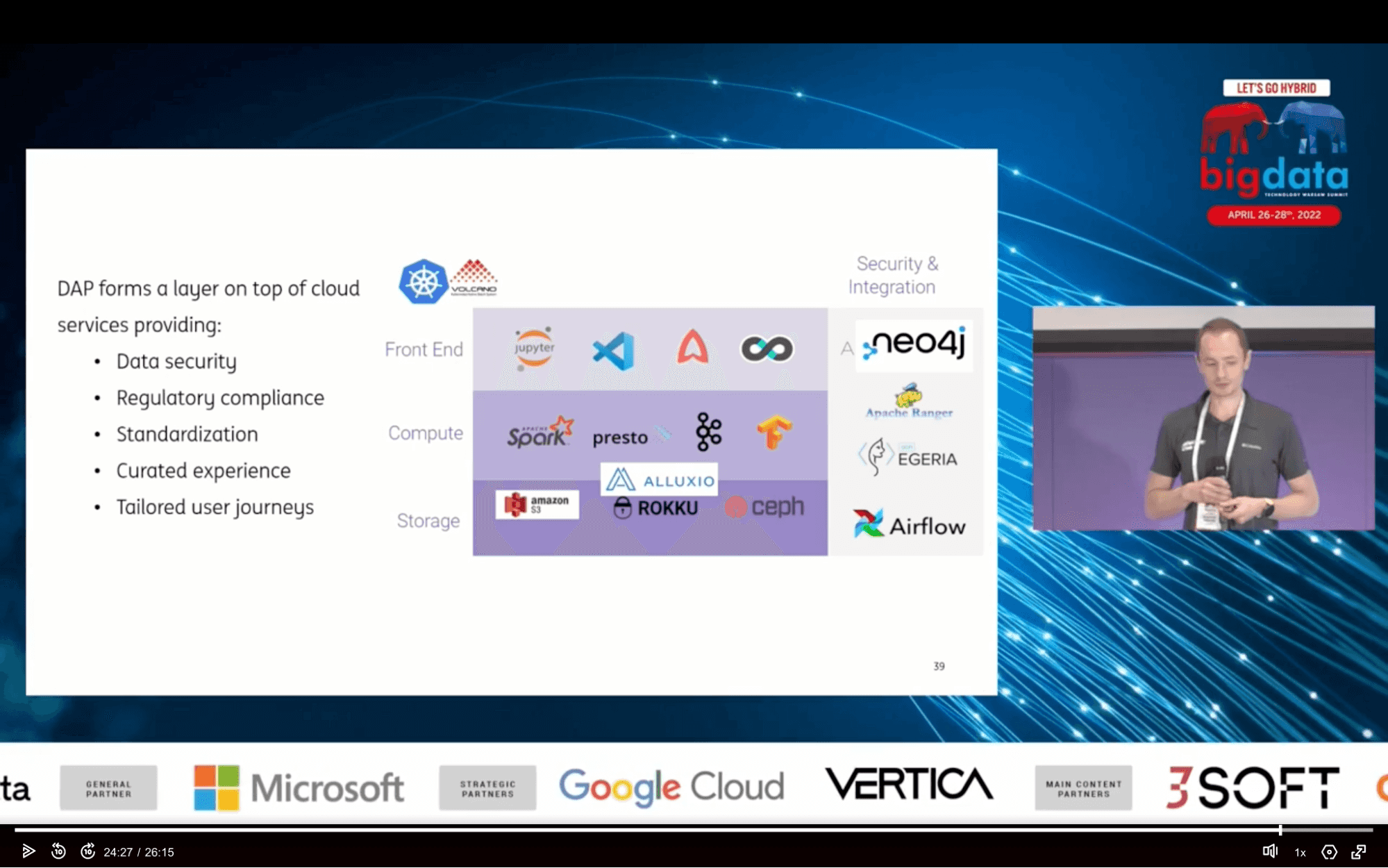

This trend is also visible in Poland, as Krzysztof Adamski (Technical Lead for the Data Analytics Platform at ING Hubs Poland) showed in his presentation “ING Data Analytics Platform 3 years later. Lessons learned”. Krzysiek presented the results of migrating ING bank's platform to a Kubernetes environment based solely on open-source technologies. Around half of the company's employees have access to the platform, which poses a real challenge to its creators. The platform provides many of the most popular technologies, such as Spark, Presto, Kafka, Airflow, Jupyter, Superset and Amundsen, and the data is stored in a data lake based on Ceph and Alluxio, accessed via the S3 protocol. In turn, Łukasz Sztachański (Cloud Lead at Mindbox), in his presentation “Fine-Tuning Kubernetes Clusters for Data Analytics”, showed how to optimize Kubernetes clusters for advanced data analytics applications.

The second trend presented in the “Architecture Operations & Cloud” track was building a modern data warehouse. In the presentation "Rise up and reach the cloud - evolution of a modern global healthcare data warehouse", Piotr Kaczor (Senior Technical Architect at IQVIA) presented two options for building a modern cloud data warehouse to replace the previous on-premise version based on the Oracle database and Cloudera Data Platform. The first proposal was to use Snowflake, a very popular solution that has redefined this market in many respects. Snowflake is a cloud-native data warehouse built on top of Amazon Web Services, Google Cloud Platform or Microsoft Azure infrastructure, and it allows storage and compute to scale independently. The second proposal was to build the data warehouse on the Databricks Unified Analytics Platform, based on the Delta Lake library and Apache Spark, with the vectorized query engine Photon and SQL Endpoints. Databricks describes its platform as a Lakehouse because it combines the advantages of a classic data warehouse and a cloud data lake. Both proposals offer many capabilities previously unavailable in on-premise environments, which is why more and more companies choose these and similar modern data warehouse platforms for their analytics.

Maryna Zenkova (Data Platform Associate at Point72), in her presentation "Data Quality on Data Lakehouse: Implementation at Point72", addressed one of the key issues in working with data in a data-driven organization: ensuring high data quality. She presented the implementation of a data quality framework on their cloud platform, focusing on the business needs of their financial organization.

The third popular trend in the track was building data analysis platforms on the public cloud. Jakob Gottlieb Svendsen (Chief Cloud Engineer at Saxo Bank) presented how his company, which operates in the highly regulated financial sector, uses the public cloud to build a secure yet fully automated and usable work environment. Pawel Chodarcewicz (Principal Software Engineering Manager at Microsoft), in his presentation "Developing and Operating a real-time data pipeline at Microsoft's scale - lessons from the last 7 years", showed what his team has learned over the last 7 years of building Microsoft's internal real-time telemetry system, which many of the company's services use every day. They designed, developed and operated (in a DevOps model) a big data pipeline gathering telemetry data at Microsoft scale: the pipeline has 100k+ Azure cores, 13 data centers and hundreds of PBs of data.

Last but not least, the presentation "COVID-19 is a cloud security catalyst" by Radu Vunvulea (Group Head of Cloud Delivery at Endava) focused on the shift in cloud usage patterns caused by the coronavirus pandemic and the related security challenges. Many companies significantly accelerated their migration to the cloud, while at the same time the number of cyber attacks in the EU increased by 250%, which also required a significant increase in spending on data security.

Michał Rudko, Data Analyst at GetInData

Over the past editions of the Big Data Tech Summit, the “Artificial Intelligence and Data Science” track has always offered a wealth of fresh and inspiring ideas on the most popular and widely discussed data topics. This year's edition was no different. It has become obvious to many companies how important their data is, and they are investing more and more in data solutions, including machine learning. However, the fascinating adventure of advanced analytics can become quite a challenge when the time comes to bring it to production. This is where the discipline of MLOps comes into play, and our speakers shared different approaches to it.

In the first presentation of the track, “Where data science meets software and ML engineering – a practical example”, Alicja Stąpór and Krzysztof Stec from Philip Morris International demonstrated how they structure their advanced analytics solutions. It was very inspiring to see how best practices from software engineering, such as an object-oriented approach, pipeline automation and code standardization, can be applied to machine learning. Alongside these similarities, it was also important to add elements specific to the ML process, such as model testing, validation of new data and monitoring of the model's predictive performance. What I found very impressive was the well-thought-out collection of reusable Python packages covering all the important stages of the ML model lifecycle (training, inference, etc.), which introduced a good level of standardization and reusability for the standard steps. Add Airflow as the pipeline orchestration tool, periodic model retraining linked to performance degradation, and end-to-end model monitoring, and you should not need more than this to keep everything related to data science under control!

In another speech of the track: “Feed your model with Feast Feature Store”, Kuba Sołtys from Bank Millennium demonstrated how simple yet powerful an MLOps process can be with an open source stack based on the Feast feature store. The main motivation behind using a feature store is the elimination of the well-known training-serving skew, by serving the same data to models during training and inference.

Among the available solutions, Feast was chosen above all for its large community and many integrations. Apart from Feast, the stack consisted of other popular open-source tools, including Kubeflow, Seldon, MLflow and Katib. The feature store is divided into an offline store and an online store.

The offline store is dedicated to model training (historical features, a small number of queries, a large set of entities, large data lookups, latency of minutes to hours), while the online store is used for predictions (the latest features, a high number of queries, a small set of entities, small data lookups, latency of milliseconds). The offline store's source can be one of the supported data warehouses, Trino, PostgreSQL or Parquet files, while the online store is a high-performance NoSQL database such as DynamoDB, Redis or Datastore. The workflow comes down to preparing historical data, training the model and materializing features to the online store (for example, a Redis cluster). On paper everything looked good; operationally, however, Feast turned out to have some shortcomings and performance issues, especially the inability to fetch and materialize data from multiple sources.

This is where Kuba's Feast extension, Yummy, came into play. Yummy centralizes the logic that fetches historical data from offline stores in the backend (instead of the query layer), which makes it possible to combine data from multiple data sources. Kuba demonstrated how features from Parquet, Delta Lake and CSV could be processed at the same time. What's more, the extension offers a quick, hassle-free switch to distributed engines like Apache Spark, Dask or Ray without any changes to the model definition: only a change to Feast's config file is needed. You can read more about Yummy on Kuba's blog, and you're also welcome to subscribe to Kuba's YouTube channel, which has lots of interesting content on similar topics.
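
The offline/online split can be illustrated with a minimal, library-free Python sketch. It assumes a hypothetical in-memory feature log with a single feature per entity; `point_in_time_features` and `materialize_latest` are illustrative names, not Feast's API. The training path performs a point-in-time lookup, so each training row only sees values known at its own timestamp, and materialization copies the latest values into a plain dict standing in for an online store such as Redis. Because both paths derive from the same feature log and the same lookup logic, training and serving see consistent values, which is the training-serving skew a feature store is meant to eliminate.

```python
from bisect import bisect_right
from datetime import datetime

# Hypothetical feature log: (entity_id, event_timestamp, feature_value).
FEATURE_LOG = [
    ("user_1", datetime(2022, 3, 1), 10.0),
    ("user_1", datetime(2022, 3, 5), 12.5),
    ("user_2", datetime(2022, 3, 2), 7.0),
]


def _history(entity_id):
    """Return (timestamps, values) for one entity, sorted by timestamp."""
    rows = sorted((ts, v) for e, ts, v in FEATURE_LOG if e == entity_id)
    return [ts for ts, _ in rows], [v for _, v in rows]


def point_in_time_features(entity_rows):
    """Offline path: for each (entity_id, as_of) row, return the latest value
    known at `as_of`, so training rows never see values from the future."""
    result = []
    for entity_id, as_of in entity_rows:
        timestamps, values = _history(entity_id)
        i = bisect_right(timestamps, as_of)  # number of events at or before as_of
        result.append(values[i - 1] if i else None)
    return result


def materialize_latest(as_of):
    """Copy the latest value per entity (up to `as_of`) into a key-value store."""
    entities = sorted({e for e, _, _ in FEATURE_LOG})
    return {e: point_in_time_features([(e, as_of)])[0] for e in entities}


# Training set: labels observed at different points in time.
training_features = point_in_time_features([
    ("user_1", datetime(2022, 3, 3)),  # before the 5 March update
    ("user_1", datetime(2022, 3, 6)),  # after it
    ("user_2", datetime(2022, 3, 1)),  # before any value exists
])

# Serving: a plain dict stands in for an online store such as Redis.
online_store = materialize_latest(datetime(2022, 3, 10))
print(training_features, online_store["user_1"])
```

In a real deployment the offline lookup would run as a batch join in the warehouse or query engine and the online store would be a database with millisecond reads; the sketch only shows why both should be derived from the same feature definitions.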

We hope this review has whetted your appetite for knowledge, because you won't have to wait long for the next conference. Together with DataMass and Evention, we are organizing the DataMass Summit, another great conference, this time on the Polish seaside in Gdańsk. As early as this September! The DataMass Summit is for those of you who use the cloud in your daily work to solve Big Data, Data Science, Machine Learning and AI problems. The main idea of the conference is to promote knowledge and experience in designing and implementing tools for solving difficult and interesting challenges. Check out the details here. Hopefully see you in September in one of the most beautiful cities in Poland!

This is not the only date worth booking in your calendar. We would like to invite you to next year's edition: Big Data Technology Warsaw Summit 2023. Follow the conference's profile on LinkedIn so you don't miss out on registration.
