It’s been exactly two months since the last edition of the Big Data Technology Warsaw Summit 2020, so we decided to share some great statistics with you and write a short review of some presentations.

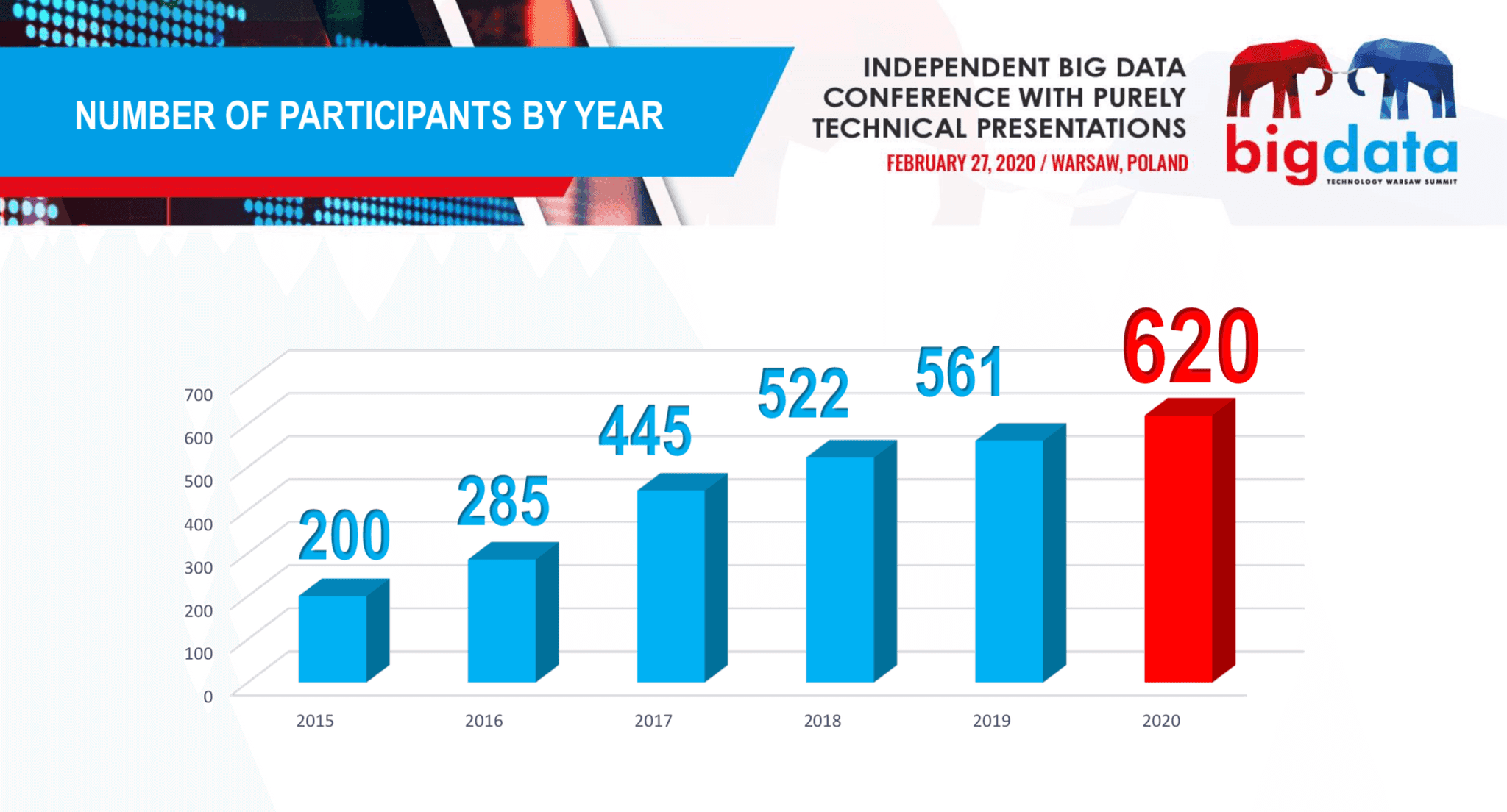

We started the Big Data Tech Warsaw Summit 6 years ago, and as you can see below, the number of participants is growing each year - from 200 attendees in 2015 to more than 600 in 2020! Our goal is to keep the conference purely technical, so the main group of participants are big data specialists. In the agenda you can find mostly technical presentations: real stories, practical case studies and lessons learned by true practitioners who work with data every day.

This year, we had the privilege and luck (considering how much has changed since COVID-19 spread around the world) to host 78 speakers and around 620 attendees at the 6th edition of our conference. The main presentations in the plenary session were led by guests from Snowflake, ING, Cloudera and 3Soft. Afterwards, participants had a chance to listen to 30 presentations divided into five parallel tracks: ‘Architecture, Operations and Cloud’, ‘Data Engineering’, ‘Streaming and Real-Time Analytics’, ‘Artificial Intelligence and Data Science’ and ‘Data Strategy and ROI’. The list of companies was impressive: Uber, Alibaba, Criteo, Spotify, Truecaller, Orange, Findwise, GetInData and more.

Below you can find a brief review of some of the presentations our team members attended.

The Architecture, Operations and Cloud track started with an excellent presentation by Philipp Krenn, Developer at Elastic. I was very excited to hear about efficient Docker deployments and enjoyed his talk, From Containers to Kubernetes Operators for a Datastore. Running Docker containers on a k8s cluster gives us great flexibility and automation capabilities for managing both resources and applications, but to make sure everything works as expected, good monitoring and verification of cluster and application state are necessary. It may be easy to start development, but moving to production needs more attention: passwords, secrets and certificates have their own lifecycle and are managed with Keystore, and immutable Docker images have to be fetched from a repository. There is a great collection of base images you can use and build your modifications on top of. Deployment updates are where operators shine, helping us automate routines and make sure that ongoing changes to our system are deployed and run as expected. Finally, people nowadays may see the lack of a Kubernetes strategy as a blocker, even though Kubernetes runs only a portion of workloads.

The second session in the track was given by Stuart Pook, Senior Site Reliability Engineer at Criteo. Replication Is Not Enough for 450 PB: Try an Extra DC and a Cold Store shared great experience and addressed challenges every data company deals with. We want to define our critical data resources, choose at least two reliable, independent backup options (usually including at least one cloud provider) and still be happy not to be called at night to execute our restore plan. Differential backups are a powerful tool, and both restore priorities and the time needed to restore data after a failure are must-haves in a company backup policy.

The next presentation was How to make your Data Scientists like you and save a few bucks while migrating to cloud - Truecaller case study by Fouad Alsayadi, Senior Data Engineer at Truecaller, Juliana Araujo, Data Product Manager at Truecaller, and Tomasz Żukowski, Data Analyst at GetInData. The session addressed both architecture and efficient cloud operations, topics that cannot be ignored when running a successful startup. Running a global call service on a multi-data-center platform makes for great experience to share, and the migration to the cloud demonstrated both the technology and the cost-effectiveness of data management. Tens of billions of events are processed daily and shared for further analysis by business and data science teams. Managing a petabyte-scale data warehouse with a variety of pipelines is challenging enough, but when it comes to choosing the right technology and hiring the right professionals, making the right decisions is critical for your data team to survive. Using Google Cloud Platform and its cutting-edge cloud and data warehousing solutions at scale gave outstanding results for Truecaller’s data engineering, and it marks the ramp-up of the startup’s cloud journey.

DevOps best practices in AWS cloud was the first presentation in the Architecture, Operations and Cloud track after lunch. It was led by Adam Kurowski and Kamil Szkoda from StepStone Services, who described their experience with AWS services and shared lessons learned. It was really interesting to see how they achieved smoothly working Infrastructure as Code in the cloud, and I would say the presentation is a valuable source of knowledge about new AWS services for managing data pipelines. The speakers also discussed DevOps principles in the public cloud, which in my opinion was the most interesting part of the talk.

The next presentation, The Big Data Bento: Diversified yet Unified, was led by Michael Shtelma, Solutions Architect at Databricks.

More and more companies are moving to the public cloud, and no one is surprised by it. Michael explained why this is happening and why solutions outside the core Hadoop components are becoming so popular. The part about using Databricks Data Lake was the best and, I think, the most valuable. There were some questions about it in the Q&A, so it was clearly what some participants had been waiting for. I liked it too because it explained how data is transformed step by step through Bronze, Silver and Gold tables.
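For readers who have not met the Bronze, Silver and Gold layering before, here is a minimal sketch of the idea. It uses plain Python lists instead of real tables, and the sample records and the helper names `to_silver` and `to_gold` are our own assumptions for illustration, not anything shown in the talk.

```python
from collections import defaultdict

# Bronze: raw events exactly as they arrived (hypothetical sample data)
bronze = [
    {"event_id": "1", "user": "anna", "amount": "10.5"},
    {"event_id": "1", "user": "anna", "amount": "10.5"},   # duplicate delivery
    {"event_id": "2", "user": "piotr", "amount": "n/a"},   # malformed amount
    {"event_id": "3", "user": "anna", "amount": "4.5"},
]

def to_silver(rows):
    """Silver: validated, typed and de-duplicated records."""
    seen, clean = set(), []
    for row in rows:
        try:
            amount = float(row["amount"])
        except ValueError:
            continue  # drop rows whose amount cannot be parsed
        if row["event_id"] in seen:
            continue  # drop repeated deliveries of the same event
        seen.add(row["event_id"])
        clean.append({"event_id": row["event_id"], "user": row["user"], "amount": amount})
    return clean

def to_gold(rows):
    """Gold: business-level aggregate, here total amount per user."""
    totals = defaultdict(float)
    for row in rows:
        totals[row["user"]] += row["amount"]
    return dict(totals)

silver = to_silver(bronze)
gold = to_gold(silver)
```

Each layer only reads from the one before it, so raw data stays available for reprocessing when cleaning rules change.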

How to send 16,000 servers to the cloud in 8 months? Huge migrations from on-premises to cloud infrastructure are always great stories, and this time was no different. OpenX moved its ad-exchange platforms to the Google Cloud Platform when they discovered that adding another DC would take too much time and would not provide any flexibility. Marcin Mierzejewski, Engineering Director at OpenX, and Radek Stankiewicz showed how to migrate everything to the cloud. I liked the part about how costs changed over the following months and the description of the steps taken in the migration process. Naturally, they mentioned some challenges, such as changing the approach to data processing in order to benefit from Google Cloud services.

I really enjoyed hosting the Artificial Intelligence and Data Science track. Even though this is not an AI conference, doing data science at scale is an interesting task that suits this conference well. The variety of topics and the number of presentations show that more and more companies are starting to incorporate AI into their data pipelines. All presentations were interesting not only for Data Science freaks - they included a lot of business justification for this kind of project.

It all started with Grzegorz Puchawski's presentation on building recommendation systems for ESPN+ and Disney+. This was a really eye-opening presentation, as the author pointed out a major problem with the evaluation of such systems - it is non-trivial to collect feedback from users. We, as users, sometimes prefer not to watch recommended items because we might have seen them on another platform or on TV. This doesn't mean we are not interested in this kind of content though. The author also devoted a large part of the presentation to methods that minimize regret - that is, the number of people who saw recommendations from models worse than the best one during A/B testing. Despite the challenges, this project is a great success and is iteratively getting better, with more than 60 production deployments during the first year.
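To make the notion of regret more tangible, below is a small simulation sketch. It is not the method from the talk: the click-through rates are invented, and epsilon-greedy is used only because it is the simplest traffic-allocation strategy to compare against a classic even split.

```python
import random

def simulate(policy, true_rates, rounds=10_000, seed=0):
    """Return cumulative regret: expected clicks lost versus always serving the best model."""
    rng = random.Random(seed)
    best = max(true_rates)
    shows = [0] * len(true_rates)
    clicks = [0] * len(true_rates)
    regret = 0.0
    for _ in range(rounds):
        arm = policy(rng, shows, clicks)
        shows[arm] += 1
        clicks[arm] += rng.random() < true_rates[arm]  # simulated user click
        regret += best - true_rates[arm]
    return regret

def uniform_split(rng, shows, clicks):
    # Classic A/B test: every model gets the same share of traffic
    return rng.randrange(len(shows))

def epsilon_greedy(rng, shows, clicks, epsilon=0.1):
    # Explore 10% of the time (and until every model was shown once),
    # otherwise serve the model with the best observed click rate
    if rng.random() < epsilon or 0 in shows:
        return rng.randrange(len(shows))
    rates = [c / s for c, s in zip(clicks, shows)]
    return rates.index(max(rates))

rates = [0.02, 0.20]  # hypothetical click-through rates of two models
regret_ab = simulate(uniform_split, rates)
regret_bandit = simulate(epsilon_greedy, rates)
```

With an even split, roughly half of the users keep seeing the weaker model for the whole experiment, while the adaptive policy shifts traffic to the better model as evidence accumulates.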

Next on our plate, we had a presentation by Josh Baer from Spotify on building a factory for Machine Learning. There is a beautiful analogy between the state of machine learning right now and the pre-industrial era. The cost of a single experiment is really high, and the most costly part is not developing the model but wiring all the pieces together. The model on its own is just a small part of the production system. There is a lot of communication involved between experts from various domains to set this complex machinery in motion. The current goal for Spotify is to become a factory for deploying ideas and iteratively improving them. They are just getting started with the system presented during this talk - with the old pipeline it took 10 weeks to get a model into production, and it currently takes 9 weeks. There is still a lot of work to do, but it seems that focusing on standardization, automation, centralization and economies of scale will eventually pay off.

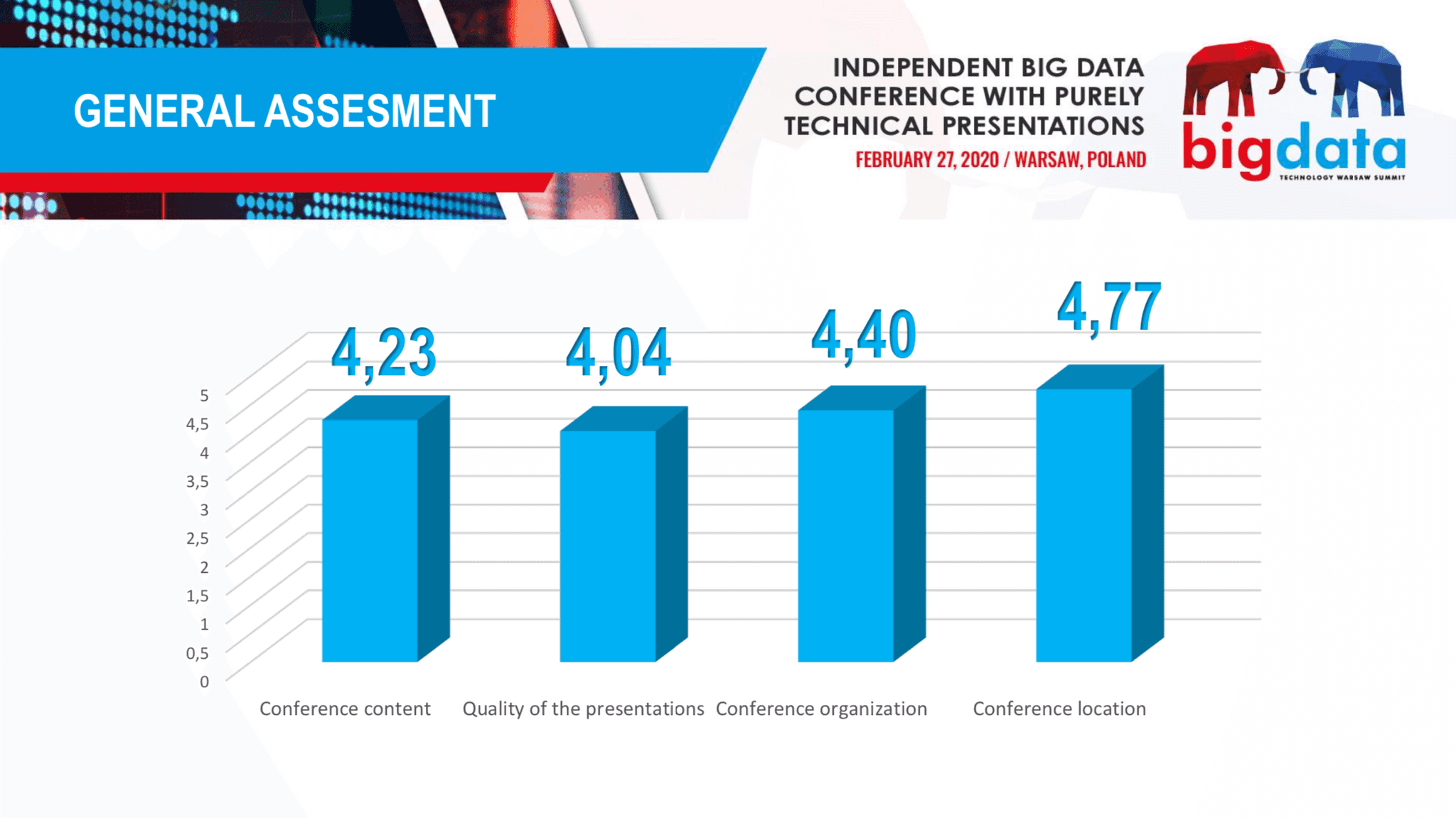

It’s worth mentioning that Josh’s talk was the highest-rated presentation of the Big Data Technology Warsaw Summit - 4.92/5, that’s a great result!

The final presentation of the first part of the track was by Katarzyna Pakulska and Barbara Rychalska on neural machine translation. They showed how companies can benefit from building their own translation systems - and how GDPR applies there as well. We learned about their custom tool for machine translation of SharePoint pages, with automatic language detection and an intuitive, checkbox-based user interface. The major advantage of this system is that it preserves the structure of source documents. They also pointed out the challenges in assessing translation quality and how to address adversarial examples. It was a great source of information about real-world NLP.

Right after the break, Andrzej Michałowski showed us how feature stores help solve anti-patterns in machine learning systems. An example of such an anti-pattern is the glue code responsible for integrating open source models into larger systems. Such code often has too many responsibilities, e.g. it transforms features into the format accepted by a model. As a result, models often fail later in production due to changes in source data, because someone forgot to update this glue code. Decoupling model development from feature engineering by introducing a feature store provides companies with a reliable source of features that are versioned, tested and documented. Having such control prevents companies from introducing incompatible changes in data that might cause their models to fail.
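The core idea of versioned features can be sketched in a few lines. This is a toy in-memory illustration under our own assumptions: the `FeatureDefinition` and `FeatureStore` classes and the `basket_value` feature are hypothetical and do not come from the talk or from any particular feature store product.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List, Tuple

@dataclass(frozen=True)
class FeatureDefinition:
    name: str
    version: int
    description: str                      # documentation lives next to the code
    compute: Callable[[dict], float]      # transformation from a raw record

class FeatureStore:
    def __init__(self) -> None:
        self._features: Dict[Tuple[str, int], FeatureDefinition] = {}

    def register(self, feature: FeatureDefinition) -> None:
        key = (feature.name, feature.version)
        if key in self._features:
            # Published versions are immutable; changes require a new version
            raise ValueError(f"{feature.name} v{feature.version} already registered")
        self._features[key] = feature

    def get_vector(self, raw: dict, requested: List[Tuple[str, int]]) -> List[float]:
        # A model pins exact feature versions, so upstream changes cannot surprise it
        return [self._features[key].compute(raw) for key in requested]

store = FeatureStore()
store.register(FeatureDefinition("basket_value", 1, "basket value in cents",
                                 lambda r: float(r["basket_cents"])))
store.register(FeatureDefinition("basket_value", 2, "basket value in currency units",
                                 lambda r: r["basket_cents"] / 100))

record = {"basket_cents": 1250}
old_model_input = store.get_vector(record, [("basket_value", 1)])
new_model_input = store.get_vector(record, [("basket_value", 2)])
```

A model trained on version 1 keeps receiving values in cents even after version 2 is published, which is exactly the kind of silent unit change that tends to break glue code.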

Later, Arunabh Singh told us a bit about optimizing marketing spend through attribution modelling. We saw how hard it is for a company to acquire new customers. Their internal system aggregates conversion data from Google, Facebook, Yandex, Bing and many other partners using BigQuery (there are over 1000 data sources, but the distribution is highly skewed - most data comes from the 4 companies mentioned). He reminded us of an important fact - when a lot of money is at stake, even a small but reliable improvement yields a high return for the company. Explainable models such as Logistic Regression, which usually yield worse accuracy, might thus be preferred, as there is a lower risk of unpredictable results in production and the company is making a stable profit anyway.

It was all wrapped up by Kornel Skałowski's presentation on reliability in Machine Learning. His presentation touched on many points that were previously mentioned by other speakers. The idea of versioning data, code and models seems like a solution to many problems companies face nowadays. Apart from A/B testing, which most practitioners are aware of, Kornel mentioned backward compatibility prediction testing: the model should not suddenly predict a high credit score for people who, according to domain knowledge, should have low scores. These kinds of tests might save a lot of headaches when explaining such predictions to a manager. There are many tools available that might help to build such a versioning system: Git LFS and Quilt for storage, and more comprehensive solutions such as DVC or MLflow.
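A backward compatibility prediction test can be as simple as a fixed set of cases with bounds that come from domain knowledge. The sketch below is our own illustration: the two scoring functions, the feature names and the thresholds are all hypothetical stand-ins for real model versions.

```python
# Golden cases: (features, maximum acceptable score according to domain experts)
GOLDEN_CASES = [
    ({"missed_payments": 6, "income": 1_000}, 0.3),
    ({"missed_payments": 4, "income": 2_500}, 0.4),
]

def find_regressions(predict, golden_cases):
    """Return the golden cases whose prediction breaks the domain-knowledge bound."""
    failures = []
    for features, max_score in golden_cases:
        score = predict(features)
        if score > max_score:
            failures.append((features, score, max_score))
    return failures

def old_model(features):
    # Stand-in for the model currently in production
    return max(0.0, 0.9 - 0.15 * features["missed_payments"])

def new_model(features):
    # Stand-in for a flawed candidate that ignores missed payments
    return min(1.0, features["income"] / 5_000 + 0.2)

old_failures = find_regressions(old_model, GOLDEN_CASES)
new_failures = find_regressions(new_model, GOLDEN_CASES)
```

Running such a check in CI before every deployment means a candidate like `new_model` is stopped before anyone has to explain its predictions.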

Overall, there were a lot of great speakers sharing their industry knowledge and experience with us. The presentations covered separate topics, which gave us a great overview of approaches used in various domains. Some lessons learnt repeated themselves: know your data before modelling, deploy the model end-to-end to production and then improve it iteratively, and A/B test new models while minimizing regret. We also saw that models are just a small part of the complex machine learning infrastructure within companies - building feature stores and standardizing across the company was a target for some of them, and this will surely be explored by many more in the upcoming months.

Creating an extensible Big Data Platform for advanced analytics - 100s of PetaBytes with Realtime access was the first presentation in the Streaming and Real-Time Analytics track. Reza Shiftehfar presented Uber's philosophy of a platform that embraces industry-standard systems and uses open source components internally, while also contributing back to them as the company encounters unique use cases due to its data loads. Uber tackled some problems that come with the traditional approach, e.g. the limited scalability of Hadoop, 24h latency of ingested data and ETL becoming the bottleneck. They developed their own system - Marmaray - a generic framework for data ingestion from any source and dispersal to any sink, built on Apache Spark.

Next, Yuan Jiang presented the primary goals met while designing a real-time data warehouse at Alibaba. The company managed to build the service from the ground up, unifying streaming and batch processing in one place and eliminating the need for a lambda architecture. Thanks to a highly optimized network, the platform separates computing and storage nodes, enabling them to be scaled independently. The service offers high performance and is used as a data warehouse at Alibaba, the largest e-commerce company in China. Every component is optimized; for instance, C++ is used instead of Java as the implementation language to ensure stable, low-latency operation.

Afterwards, a group of speakers from Orange: Jakub Błachucki, Maciej Czyżowicz and Paweł Pinkos showed us the evolution of an on-premises environment. They managed to build a complex, flexible and reusable event processing system in Apache Storm, with business rules that are easy to define even for users with low technical skills. The intricacies of working in a legacy environment with high security standards were demonstrated using the example of ingesting data from external sources.

Monitoring & Analysing Communication and Trade Events as Graphs - Christos Hadjinikolis had his own battle scars and stories to share in a presentation describing hands-on experience gathered from two use cases. The first was a graph analysis of email communication between business units. By denormalizing the emails and tracking communication between groups at different levels of abstraction, he was able to provide increased real-time awareness, challenge and improve the internal structure to boost the organisation’s effectiveness, and even detect irregular communication flows between groups, which helped identify, with high likelihood, hand-overs between departments. The second case involved stream processing for trade monitoring. To analyse trade requests, Christos had to perform online graph analytics. Using a finite state machine enabled him to distinguish what was normal from what was an anomaly. This solution delivered increased real-time responsiveness and low-latency alerting, as well as online analysis of irregular life cycles that helped reveal root causes.
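The finite state machine idea from the second use case can be illustrated with a short sketch. The state names and allowed transitions below are hypothetical, chosen by us for the example; the talk did not publish the actual trade life cycle.

```python
# Allowed life-cycle transitions (hypothetical states, for illustration only)
ALLOWED = {
    "NEW": {"ACKNOWLEDGED", "REJECTED"},
    "ACKNOWLEDGED": {"FILLED", "CANCELLED"},
    "FILLED": set(),
    "CANCELLED": set(),
    "REJECTED": set(),
}

def find_anomalies(events):
    """Scan (trade_id, state) events in arrival order and return illegal transitions.

    Each anomaly is reported as (trade_id, previous_state, new_state);
    previous_state is None when a trade first appears in a state other than NEW.
    """
    current = {}      # last known state per trade
    anomalies = []
    for trade_id, state in events:
        previous = current.get(trade_id)
        if previous is None:
            if state != "NEW":
                anomalies.append((trade_id, None, state))
        elif state not in ALLOWED.get(previous, set()):
            anomalies.append((trade_id, previous, state))
        current[trade_id] = state
    return anomalies

stream = [
    ("t1", "NEW"), ("t1", "ACKNOWLEDGED"),
    ("t2", "NEW"), ("t2", "FILLED"),        # skipped acknowledgement
    ("t1", "FILLED"),
    ("t3", "CANCELLED"),                    # never seen as NEW
]
anomalies = find_anomalies(stream)
```

Because only the last state per trade is kept, the check runs in constant time per event, which is what makes this kind of monitoring suitable for a stream processor.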

At the end of the track, thanks to Wojciech Indyk, the participants were able to take Flink on a trip. He presented the role of big data in the clash between a dynamic environment marked by breakthrough moments (the first automobile trip over 100 km long in 1888; 5G-enabled networks in cars, predicted by 2030) and an insurance environment where risk calculation has stagnated. Based on online processing of data originating from driving, traffic and city infrastructure, the real-time value of insurance can be calculated. Stream processing enables live, dynamic risk assessment and pricing.

The Big Data Technology Warsaw Summit was an exciting one-day conference with many interesting topics, presentations and experts in big data fields in one place. It's a really great event to share knowledge and experience with others.

To sum up, none of it would be possible without the help of Evention – our partner in organizing Big Data Technology Warsaw Summit, and you guys – the big data technology fans :)

Thank you and hopefully see you next year!
