The 8th edition of the Big Data Tech Summit is already over, and we would like to thank all of the attendees for joining us this year. It was a real pleasure to host Big Data practitioners and fans live again in Warsaw.

During this year's edition, we had over 40 presentations and 14 roundtable discussions, presented or moderated by over 70 speakers. After the Call for Presentations process, we analyzed the topics of the proposals and noticed the following big data trends:

As always, the reviewers had a tough decision to make. We received over 50 submissions and were very satisfied with the quality of the proposals. During the two-day event, we had 6 presentations given by personally invited speakers. They were experts whom we identified in the community, and we are very thankful to them for accepting our invitation to speak. We had 14 presentations given by conference partners, and almost half of the talks came from the call for presentations process. Our speakers and roundtable leaders represented a large number of data-driven companies from all over the world. It was a wonderful opportunity for attendees to exchange knowledge with so many experts gathered together in one place.

Would you like to know more about the talks at the Big Data Technology Warsaw Summit? Below you will find some of the reviews prepared by GetInData’s experts.

Adam Stelmaszczyk, Machine Learning Engineer at GetInData

I had the pleasure of attending 3 presentations from the “Artificial Intelligence and Data Science” track. The first one was “Eliminating Bias in the Deployment of AI and Machine Learning” by Stephen Brobst from Teradata Corporation. Stephen highlighted that it’s easy to obtain ML models that are discriminatory in unwanted ways. Teams are prone to all kinds of biases, e.g. selection or confirmation bias. Two production-level examples were given: Nikon’s blink detection model, which wasn’t trained enough on Asian eyes, and a Tesla car that crashed into a fire truck because such trucks had been removed from the training data as outliers. Stephen recommended following a standardized approach to development and deployment, having a separate challenger team contest the models and assumptions, investing in explainability, testing for unwanted biases and monitoring the performance of the models.

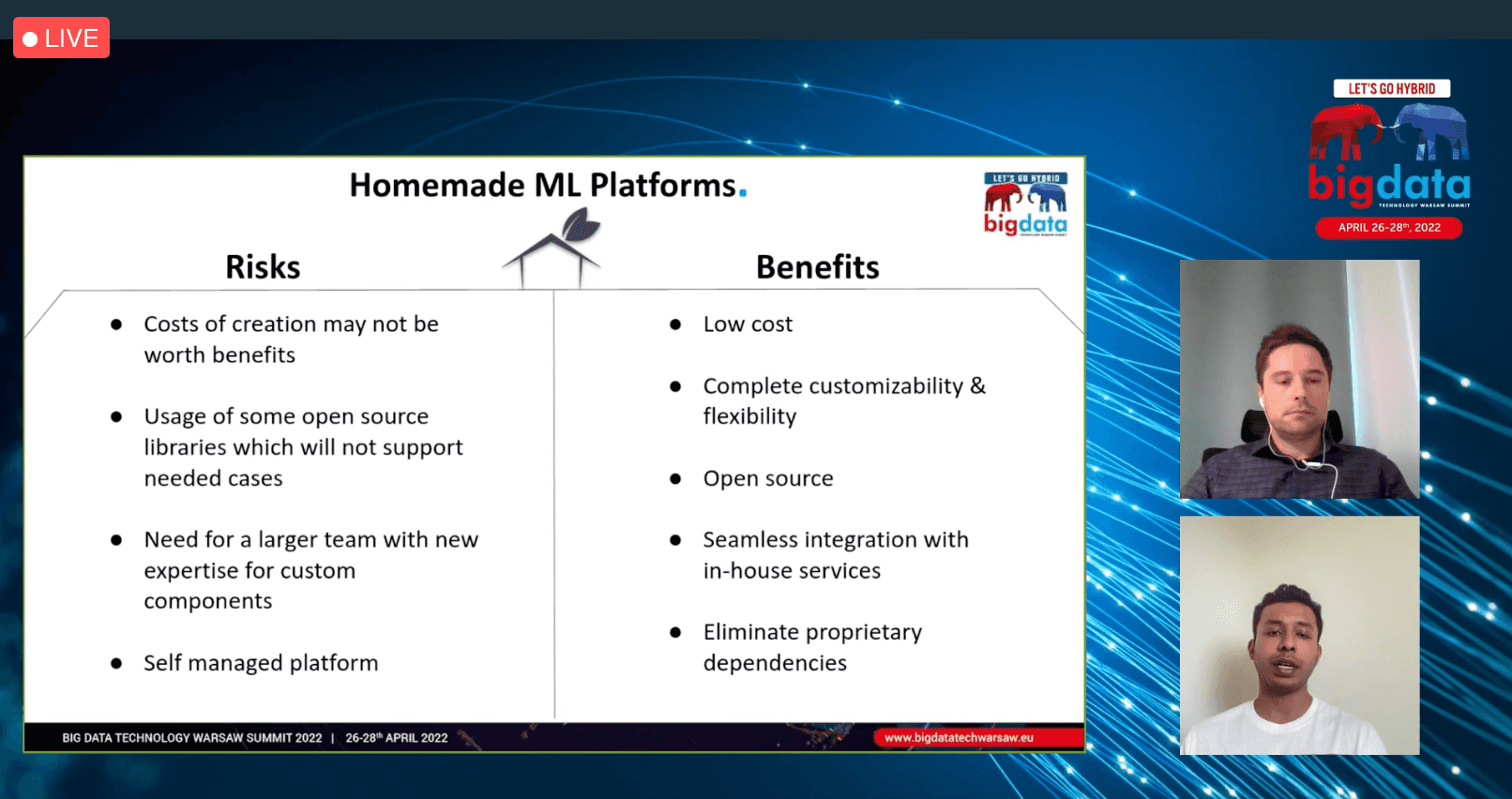

The second presentation was “Benefits of a homemade ML Platform” by Liniker Seixas from TrueCaller and Bartosz Chodnicki from our team. First, Liniker explained why MLOps is needed in the first place, then presented an overview of their in-house platform named Positron. Next, Bartosz talked about the goals and their approach to development, which leverages existing open-source tools as much as possible, e.g. Airflow, MLflow and MLeap, to name a few. MLeap was compared to Seldon in terms of performance and turned out to be much faster in their benchmark. The presentation ended with plans for the future, which included integration with Flink and evaluating serialization formats such as PMML and ONNX.

The third presentation was “Digging the online gold - interpretable ML models for online advertising optimization” by Joanna Misztal-Radecka from Ringier Axel Springer Polska. Joanna described their end-to-end approach to CTR (Click-Through Rate) optimization. Ads have around 30 thousand features, so automatic feature extraction was needed. Interpretable models such as logistic regression and decision trees were chosen for this task. To check whether an ad variant with a specific target filter (e.g. a middle-aged male) was better than the baseline, A/B testing was used. The whole optimization led to a satisfying 20-60% (depending on ad type) increase in CTR since the project started in August 2020. The technology behind this was AWS S3, EMR, Airflow, Spark, Scikit-learn and Grafana for monitoring. Currently, Joanna and her team are considering migrating ML jobs from Airflow to Kubeflow.
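To make the A/B testing step more concrete, here is a minimal sketch of one common way to compare the CTR of an ad variant against a baseline: a two-proportion z-test. The talk did not specify which statistical test the team used, so the test itself, the function name and the click/view counts below are all assumptions for illustration only.

```python
import math


def two_proportion_z_test(clicks_a, views_a, clicks_b, views_b):
    """Compare the CTR of variant B against baseline A.

    Returns (z, p_value), where p_value is two-sided.
    Hypothetical helper, not taken from the presentation.
    """
    ctr_a = clicks_a / views_a
    ctr_b = clicks_b / views_b
    # Pooled CTR under the null hypothesis that both variants perform the same.
    pooled = (clicks_a + clicks_b) / (views_a + views_b)
    std_err = math.sqrt(pooled * (1 - pooled) * (1 / views_a + 1 / views_b))
    z = (ctr_b - ctr_a) / std_err
    # Two-sided p-value from the standard normal distribution.
    p_value = math.erfc(abs(z) / math.sqrt(2))
    return z, p_value


# Made-up numbers: baseline with 200 clicks and variant with 260 clicks,
# each shown 10,000 times.
z, p_value = two_proportion_z_test(200, 10_000, 260, 10_000)
print(f"z = {z:.2f}, p = {p_value:.4f}")
```

A small p-value suggests that the difference in CTR is unlikely to be due to chance alone; in practice, the sample size and the stopping rule should be fixed before the experiment starts.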

Mariusz Strzelecki, Big Data Engineer, GetInData

I had the pleasure of attending presentations from the “Real-time streaming” track. The first one was the “NetWorkS! project – real-time analytics that control 50% of the mobile networks in Poland”. We are all living in the fascinating times of the 5G transformation. This new technology not only provides more services and better performance for mobile users, but also, under the hood, produces far more metrics and maintenance events than its predecessors. Cellular networking businesses are data-driven. Analyzing real-time events not only helps you understand network health and predict failures; discovering patterns in users’ behavior can also help to find better locations for 5G antennas.

Maciek Bryński (Big Data Architect from GetInData) and Michał Maździarz (IT Operations Manager from NetWorkS!) showed us NetStream – a scalable system that processes 2.2 billion messages per day coming from the network management devices of two main mobile operators in Poland and provides flexible analytical capabilities in real time. They not only presented the architecture behind the system, but also explained in great detail the reasoning that led to each decision.

NetStream’s heart is Apache Flink, orchestrated by the Ververica platform. The system processes various metrics coming from 750 data sources (a mix of different hardware vendors and technologies), flowing through Kafka. First, it deduplicates them using Flink’s RocksDB state backend. Interestingly, enabling bloom filters on RocksDB made deduplication more than 3 times faster than with the default settings.
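The intuition behind that speed-up can be illustrated with a toy example. A bloom filter answers "definitely not seen" or "possibly seen" using only a small bit array, so most lookups for new keys never have to touch the expensive store. The sketch below is a simplified, in-memory analogy and not how Flink or RocksDB implement it (RocksDB keeps bloom filters alongside its on-disk files); the class names and the `store_lookups` counter are hypothetical.

```python
import hashlib


class BloomFilter:
    """Tiny bloom filter: no false negatives, rare false positives."""

    def __init__(self, size_bits=1 << 16, num_hashes=4):
        self.size_bits = size_bits
        self.num_hashes = num_hashes
        self.bits = bytearray(size_bits // 8)

    def _positions(self, key):
        # Derive several bit positions by salting a single hash function.
        for i in range(self.num_hashes):
            digest = hashlib.sha256(f"{i}:{key}".encode()).digest()
            yield int.from_bytes(digest[:8], "big") % self.size_bits

    def add(self, key):
        for pos in self._positions(key):
            self.bits[pos // 8] |= 1 << (pos % 8)

    def might_contain(self, key):
        return all(
            self.bits[pos // 8] & (1 << (pos % 8)) for pos in self._positions(key)
        )


class Deduplicator:
    """Drops repeated message IDs, consulting the store only when needed."""

    def __init__(self):
        self.bloom = BloomFilter()
        self.seen = set()       # stand-in for the expensive keyed state
        self.store_lookups = 0  # how often the expensive store was consulted

    def is_duplicate(self, message_id):
        # A negative bloom answer is always correct, so the store is skipped.
        if self.bloom.might_contain(message_id):
            self.store_lookups += 1
            if message_id in self.seen:
                return True
        self.bloom.add(message_id)
        self.seen.add(message_id)
        return False


dedup = Deduplicator()
flags = [dedup.is_duplicate(m) for m in ["a", "b", "a", "c", "b"]]
print(flags, dedup.store_lookups)
```

In a stream where most messages are unique, the majority of checks end at the bloom filter, which is the effect the speakers described.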

Deduplicated data goes to a separate set of Kafka topics, and analytical processes run on these. There is a separate component, called Metadata DB, that stores the definitions of the aggregations and KPIs to calculate, in the form of SQL queries. Every query starts a separate Flink job, and the results are transferred into Oracle DB with exactly-once delivery guarantees. To minimize maintenance, the team developed its own Kubernetes operator that handles the reconciliation of the aggregation/KPI definitions based on Metadata DB entries. They also mentioned the use of Kafka Connect HDFS Sink as a solution for data reprocessing and ClickHouse as an overall system health monitoring solution.
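The reconciliation idea can be sketched in a few lines. An operator of this kind typically compares the desired state (here, the definitions stored in Metadata DB) with the actual state (the jobs currently running) and works out what to start, stop or restart. The function below is a hypothetical illustration of that comparison only; it is not the team's operator, and the job names and queries are made up.

```python
def reconcile(desired, running):
    """Compare desired job definitions with the jobs that are running.

    Both arguments map a job name to its SQL query.
    Returns (to_start, to_stop, to_restart) as sorted lists of job names.
    """
    to_start = sorted(name for name in desired if name not in running)
    to_stop = sorted(name for name in running if name not in desired)
    # A job whose query changed has to be redeployed with the new definition.
    to_restart = sorted(
        name
        for name in desired
        if name in running and desired[name] != running[name]
    )
    return to_start, to_stop, to_restart


# Made-up definitions, standing in for Metadata DB entries.
desired = {
    "dropped_calls_5m": "SELECT cell_id, COUNT(*) FROM events GROUP BY cell_id",
    "avg_latency_1h": "SELECT cell_id, AVG(latency_ms) FROM events GROUP BY cell_id",
}
# Made-up current state of the cluster.
running = {
    "dropped_calls_5m": "SELECT cell_id, COUNT(*) FROM events GROUP BY cell_id",
    "old_kpi": "SELECT 1",
}

print(reconcile(desired, running))
```

Running such a comparison in a loop is what lets new aggregations appear, and obsolete ones disappear, without anyone deploying jobs by hand.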

NetStream solved most of the issues that the project faced. It not only provided a scalable way to process huge data streams but, thanks to Metadata DB, it also opened the way to introducing new aggregations and KPIs on the fly. During development, the team came across some bugs in Flink’s behavior, which they reported to the maintainers. They also found smart ways to address challenges such as the impact of Flink major version upgrades on stateful queries and business requirements that called for long watermarks, all while keeping the system within Flink boundaries.

The next presentation I attended was “TerrariumDB as a streaming database for real-time analytics”. When you start a new project, one of the main decisions to make is which technologies will help bring it to life. If you need a certain type of database, you can choose from a variety of solutions supporting either OLTP (transactional) or OLAP (analytical) access patterns. But what if your project requires a DB that supports both of these? Miłosz Baluś, Co-founder and CTO of Synerise, faced this challenge while building a behavioral intelligence platform. The database he needed for the project had to not only support both OLTP and OLAP, but also provide scalability (with cloud-based deployments and data locality) and be able to respond to complex ad-hoc queries on schemaless data.

The first evaluation included a setup consisting of HBase, Hazelcast, and Lambdas, but it turned out to be too complex to maintain in the long term. He also experimented with the MemSQL database, which implements both row and column stores in one engine, but the licensing cost was too high to sustain in the long term. Tarantool, on the other hand, was even faster than the commercial MemSQL but didn’t meet all the requirements. As a result of these investigations, the team decided to develop their own database from scratch – TerrariumDB.

TerrariumDB started as an in-memory database, but it soon evolved into a disk-based distributed engine. Miłosz mentioned that while creating a fast database that stores all the data in RAM is pretty straightforward, it’s not really a cost-effective solution. TerrariumDB is a highly distributed platform. Every request goes through a gateway node that decides whether the operation is a “direct query” that targets the rowstore (OLTP storage) or an “analytical query” that should contact multiple worker nodes in the cluster in order to perform aggregation (the columnstore + OLAP case).

Regardless of the query type, the request finally reaches the worker nodes. They store data in a format implemented from scratch, with compression tricks like bit-packing and a smart directory structure that provides fast random-access reads. When it comes to data modification requests, the engine uses two-dimensional LSM trees: inserts go to the main tree, while updates and deletes go to a second one, which is eventually merged into the main one. This interesting “delta format” implementation makes updates and deletes as fast as inserts, with eventual consistency.
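The “delta format” idea can be sketched with two plain dictionaries standing in for the two trees. This is only a toy model built on assumptions: the real engine is not public, and the class name, the sequence numbers and the choice to let reads consult the delta before the merge are all hypothetical.

```python
import itertools

_TOMBSTONE = object()  # marker for a pending delete


class DeltaStore:
    """Toy two-tree store: inserts go to `main`, updates/deletes to `delta`."""

    def __init__(self):
        self._seq = itertools.count()  # orders writes across both trees
        self.main = {}   # key -> (seq, value)
        self.delta = {}  # key -> (seq, value or _TOMBSTONE)

    def insert(self, key, value):
        self.main[key] = (next(self._seq), value)

    def update(self, key, value):
        # Append-only write to the delta; the main tree is not touched.
        self.delta[key] = (next(self._seq), value)

    def delete(self, key):
        self.delta[key] = (next(self._seq), _TOMBSTONE)

    def _newest(self, key):
        entries = [e for e in (self.main.get(key), self.delta.get(key)) if e]
        return max(entries, key=lambda e: e[0]) if entries else None

    def get(self, key):
        entry = self._newest(key)
        if entry is None or entry[1] is _TOMBSTONE:
            return None
        return entry[1]

    def merge(self):
        """Fold pending updates and deletes into the main tree."""
        for key in list(self.delta):
            entry = self._newest(key)
            if entry[1] is _TOMBSTONE:
                self.main.pop(key, None)
            else:
                self.main[key] = entry
        self.delta.clear()


store = DeltaStore()
store.insert("user-1", {"clicks": 1})
store.update("user-1", {"clicks": 2})  # lands in the delta tree
store.merge()                          # delta is folded into main
print(store.get("user-1"))
```

Because an update or delete is just another append to the second structure, it costs about the same as an insert; the price is the background merge and the window during which the main tree is out of date.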

Miłosz shared metrics from the production cluster of TerrariumDB, which consists of 1440 cores and almost 6TB of RAM. This cluster responds to 2 billion queries per day with amazing performance, delivering OLAP results in less than 1 second for 99% of queries and OLTP responses in less than 50ms at the 99th percentile.

On the last slides, Miłosz shared a few lessons learned with us from the process of designing and implementing TerrariumDB. He claimed it was a great experience, full of R&D efforts, like studying existing OLAP and OLTP databases at the source code level. Having a real business case helped them a lot to provide flexible and efficient technology. Also, implementing storage and the query engine from scratch allowed them to reduce running costs to a minimum and optimize the technology without tradeoffs that would come with an alternative – integrating existing technologies in the project. I just wish TerrariumDB was open-source, so I could test it myself! ;-)

Instead of writing “see you next year at the 9th edition of Big Data Tech Summit”, we have a surprise for you! We are happy to announce that, together with DataMass and Evention, we are organizing the DataMass Summit, another great conference, this time at the Polish seaside in Gdańsk. DataMass Summit is aimed at people who use the cloud in their daily work to solve Big Data, Data Science, Machine Learning and AI problems. The main idea of the conference is to promote knowledge and experience in designing and implementing tools for solving difficult and interesting challenges. Don’t miss the chance to join us in September 2022! Check out the details here.
