
You want to build a private LLM-based assistant to generate a financial report summary. Although Large Language Models (LLMs) such as GPT are now commonly used, setting one up so that your organization's information stays private is not simple. In this blog post, I will explain what challenges you need to overcome, how you can build a private LLM-based assistant and, most importantly, how to do it on Google Cloud Platform.

Large Language Models have been gaining lots of attention over the last several months. On the one hand, it's a groundbreaking technology that lowers the barrier to using machine learning models for every user, even non-technical ones. Many Large Language Models, such as GPT or Llama, are available for free, and no special knowledge is required to start. You open a web application and interact with the trained LLM in human language to generate text content. For more details, see our article: Finding your way through the Large Language Models Hype.

On the other hand, a few considerations arise when you want to use this technology for more than experimentation. Is it safe to input sensitive data as a prompt? Can I use it without breaking my company's guidelines for external tools? Will I keep control of the data I share? Let's look at this aspect of using LLMs and demonstrate a private way of using this technology.

There are readily available web applications for interacting with LLMs for free. But if you want to use one of them, you should always verify the service's terms and conditions. You should not put sensitive data into the chat, since the service may use it to train future model versions, potentially making it available to other model users. For example, the most popular of these tools, ChatGPT, states that it may use your conversations to improve its models.

Data submitted through the OpenAI API is not used to train OpenAI models or improve OpenAI’s service offering. Data submitted through non-API consumer services ChatGPT or DALL·E may be used to improve our models. (source)

To avoid sharing sensitive data, you should use the API to communicate with the service. However, the API is less convenient for beginners: it requires some technical knowledge instead of offering a simple, friendly user interface.
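For reference, here is a minimal sketch of what "using the API" means in practice: the same question is sent as an HTTP request to OpenAI's Chat Completions endpoint instead of being typed into the ChatGPT web UI. It only builds the request with Python's standard library. The model name `gpt-3.5-turbo` and the `OPENAI_API_KEY` environment variable are assumptions, and what makes this route different is the data-usage policy quoted above, not anything in the code itself.

```python
import json
import os
import urllib.request

# Chat Completions endpoint of the OpenAI API.
API_URL = "https://api.openai.com/v1/chat/completions"


def build_chat_request(prompt: str, api_key: str, model: str = "gpt-3.5-turbo") -> urllib.request.Request:
    """Build (but do not send) a Chat Completions request.

    The default model name is an assumption; use any model your account can access.
    """
    body = {
        "model": model,
        "messages": [{"role": "user", "content": prompt}],
    }
    return urllib.request.Request(
        API_URL,
        data=json.dumps(body).encode("utf-8"),
        headers={
            "Authorization": f"Bearer {api_key}",
            "Content-Type": "application/json",
        },
        method="POST",
    )


if __name__ == "__main__":
    request = build_chat_request("Summarize our revenue table.", os.environ.get("OPENAI_API_KEY", ""))
    # Actually sending it would be: urllib.request.urlopen(request)
    print(request.get_method(), request.full_url)
```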

You could train an LLM from scratch on your own infrastructure. Unfortunately, it's not as simple as it appears. Preparing your own LLM requires massive amounts of data, robust computing infrastructure and specialized knowledge. For example, one part of the dataset used to train the GPT-3 model, the Common Crawl dataset, was 45TB in size, and training on a large cluster took days (source). Small organizations may lack the budget and skill set to do this from scratch. Especially if you only want to evaluate whether LLMs can help with your business cases, starting with open-source models may be the best approach.

Our clients often ask us for examples of commercial LLM use cases in their organizations. Below you can find a short list of such applications. However, as LLM technology is still at an early, research-driven stage, you should review the results before using them.

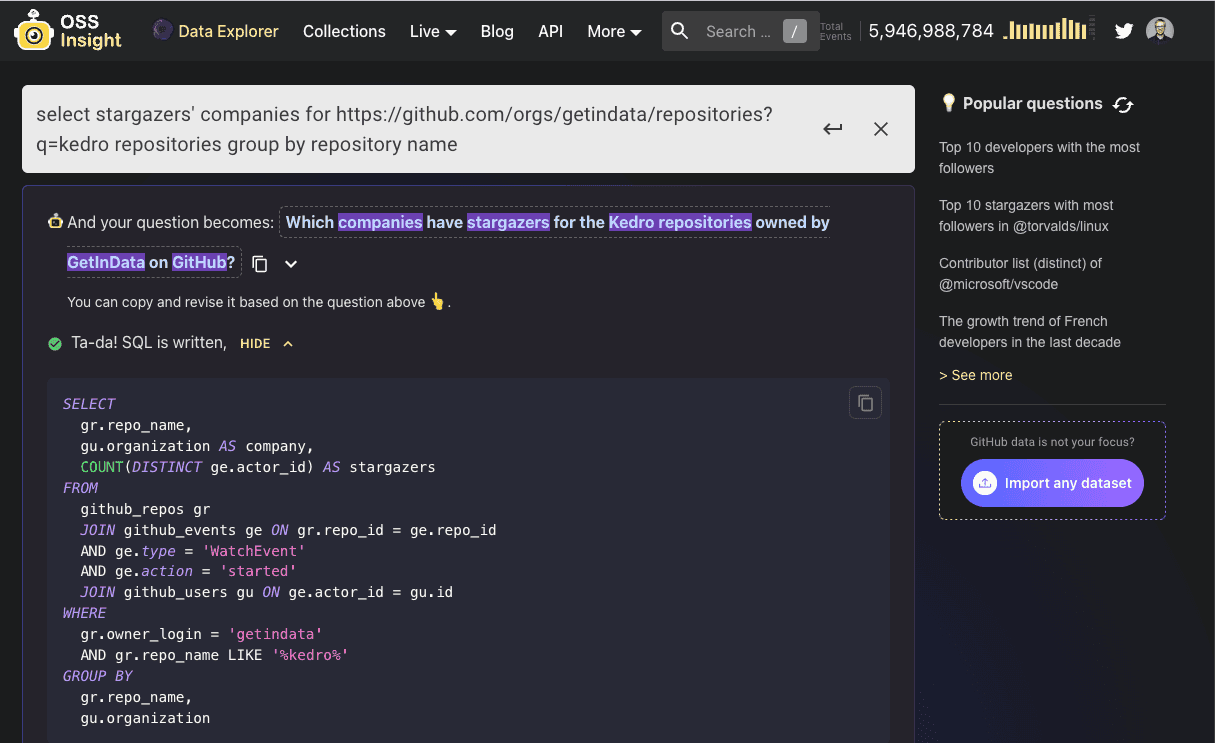

You can ask a question in plain language, and the LLM can build the SQL query against the database and visualize the results for you. This is a good way to democratize data access across the organization, especially for less technical users.
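The text-to-SQL flow can be sketched as: give the model the table schema and the question, take the SQL it returns, and run it. In this minimal sketch the LLM call is replaced by a hypothetical `llm_to_sql` stub that returns a fixed query, the `revenue` table is an assumed schema holding a few rows of the synthetic data used later in this post, and the read-only check is one simple safeguard, not a complete one.

```python
import sqlite3

# Assumed schema for illustration.
SCHEMA = "CREATE TABLE revenue (year INTEGER PRIMARY KEY, revenue_usd REAL)"


def build_sql_prompt(schema: str, question: str) -> str:
    # Give the model the table definition so it can write valid SQL for it.
    return (
        "You translate questions into SQLite queries.\n"
        f"Schema:\n{schema}\n"
        f"Question: {question}\n"
        "Answer with a single SQL query and nothing else."
    )


def llm_to_sql(prompt: str) -> str:
    # Hypothetical stand-in for the LLM call; a real assistant would send
    # `prompt` to the model and return the SQL it generates.
    return "SELECT year, revenue_usd FROM revenue ORDER BY revenue_usd DESC LIMIT 1"


def answer(conn: sqlite3.Connection, question: str) -> list:
    sql = llm_to_sql(build_sql_prompt(SCHEMA, question))
    # Generated SQL should be reviewed; at minimum, refuse anything but SELECT.
    if not sql.lstrip().upper().startswith("SELECT"):
        raise ValueError(f"Refusing to run non-SELECT query: {sql}")
    return conn.execute(sql).fetchall()


conn = sqlite3.connect(":memory:")
conn.execute(SCHEMA)
conn.executemany(
    "INSERT INTO revenue VALUES (?, ?)",
    [(2008, 437635.0), (2009, 777742.0), (2010, 1240346.0)],
)
print(answer(conn, "Which year had the highest revenue?"))
```

The visualization step is left out; the rows returned by `answer` would feed whatever charting tool you already use.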

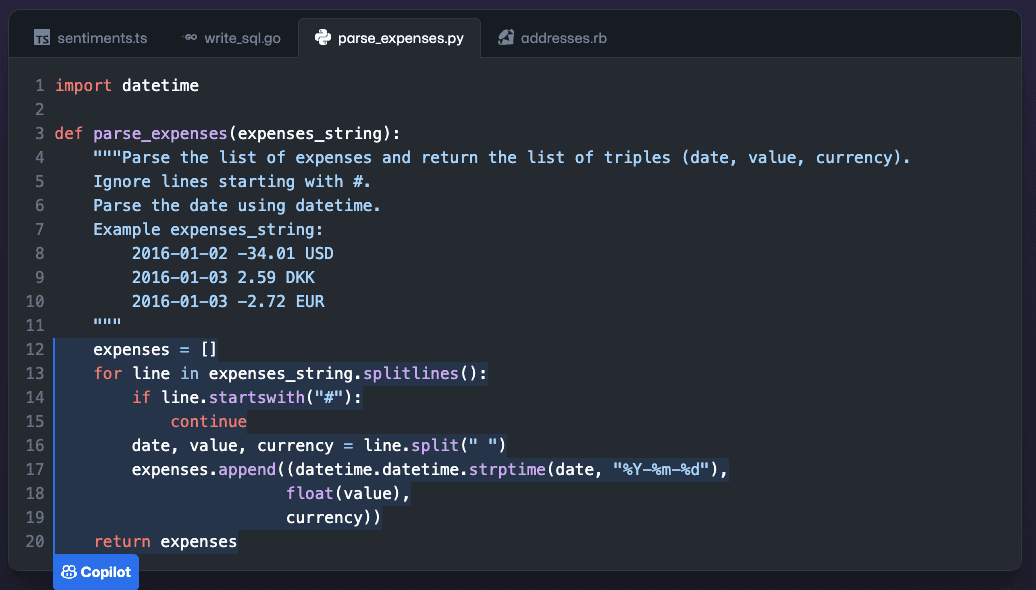

Another LLM application is code-generation assistance. You can integrate a Large Language Model into your IDE and ask for help writing code by describing what the code should do and its expected output. The model then generates the code in the programming language you ask for.

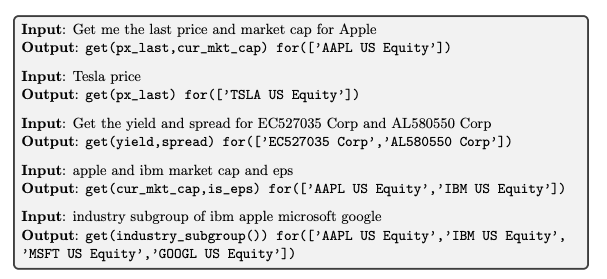

Beyond these generic use cases, there are proprietary models dedicated to specific tasks that include the related domain knowledge. Recently, dedicated models have appeared for financial analysis, healthcare and information security.

To address these concerns, we need alternatives to the web applications available on the market. Fortunately, there are open-source GPT-like LLMs available. Using them, we can evaluate whether they fit our organization's use case securely and privately, without sharing proprietary information with external vendors, and avoid the extensive costs of building an LLM from scratch.

Let's build a private assistant, with an LLM under the hood, that generates a text summary of data tables containing company revenue information (sensitive data).

We aim to create an assistant that generates a text executive summary from a data table containing the company's revenue by year. This assistant could support data analysts in creating insights for annual reports, which is usually manual, time-consuming work. With the LLM, the data analyst gets a draft of the insights, then polishes it into the final version. Eventually, once LLM technology matures, we can imagine fully automated summary generation.

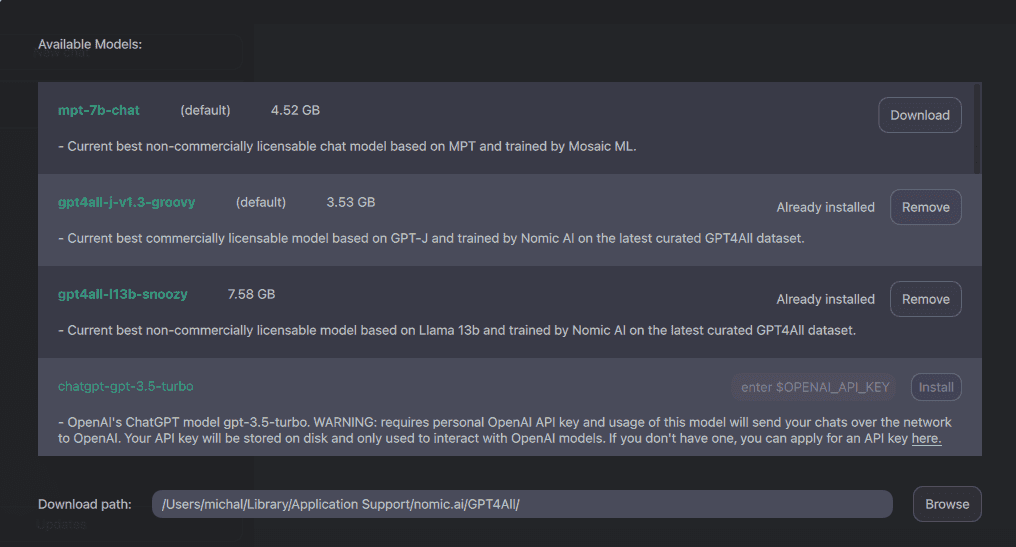

First, we will build our private assistant locally. This is possible because we use gpt4all: an ecosystem of open-source chatbots and open-source LLMs (see the Model Explorer section: GPT-J, Llama) contributed to the community by research groups and companies.

We can start by installing the GUI on our local machine for a quick evaluation, following this section: https://github.com/nomic-ai/gpt4all#chat-client. There you can find the application for your operating system. After installation, you need to download the models you want to use. Select a model that fits your requirements and your application, including its license. Note: trained models are large files (3.5GB up to 7.5GB), so use a fast and stable internet connection.
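Because the model files are several gigabytes, an interrupted download is a common source of confusing load errors. Below is a minimal sketch for checking a downloaded file against an expected MD5 checksum. It assumes you have that checksum for your model (gpt4all has published MD5 sums alongside its model list, but where to find the value for your model is an assumption to verify). `md5_of_file` and `verify_download` are hypothetical helper names.

```python
import hashlib
from pathlib import Path


def md5_of_file(path, chunk_size: int = 1 << 20) -> str:
    """Compute the MD5 of a (multi-GB) file in 1 MiB chunks, without loading it into memory."""
    digest = hashlib.md5()
    with open(path, "rb") as handle:
        for chunk in iter(lambda: handle.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()


def verify_download(path, expected_md5: str) -> bool:
    """True when the file's checksum matches the published one (case-insensitive)."""
    return md5_of_file(path) == expected_md5.lower()


if __name__ == "__main__":
    model_path = Path("ggml-gpt4all-j-v1.3-groovy.bin")  # hypothetical local file name
    if model_path.exists():
        print(md5_of_file(model_path))
```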

Once you have the GUI and the model, you’re ready to start generating your data summaries.

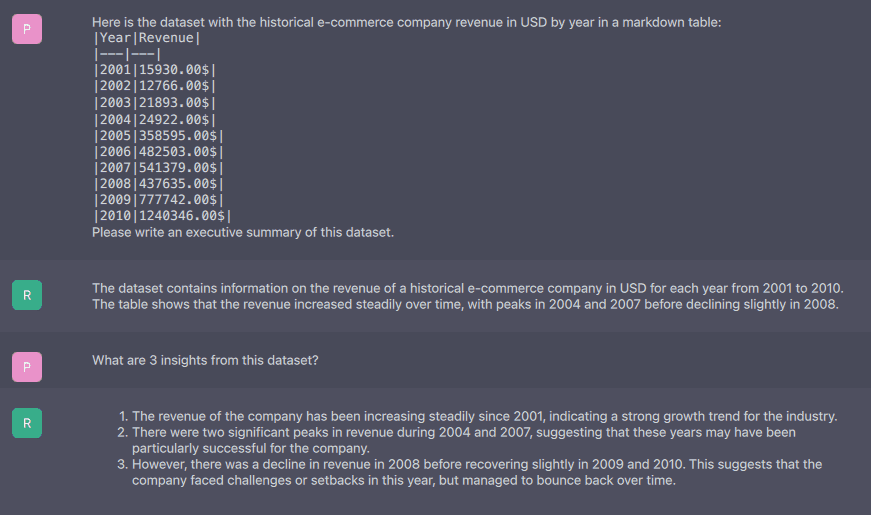

Since you interact with LLMs in human language, the better the prompt you prepare, the better the results you get. To help the model parse our input data, we prepared the following prompt with (synthetic) data about an e-commerce company's revenue:

Here is the dataset with the historical e-commerce company revenue in USD by year in a markdown table:

```
|Year|Revenue|
|---|---|
|2001|15930.00$|
|2002|12766.00$|
|2003|21893.00$|
|2004|24922.00$|
|2005|358595.00$|
|2006|482503.00$|
|2007|541379.00$|
|2008|437635.00$|
|2009|777742.00$|
|2010|1240346.00$|
```

Please write an executive summary of this dataset.

Additionally, you may add some follow-up questions like:

What are 3 insights from this dataset?

When was the highest revenue increase? How high was it?

Can you summarize this dataset?

What trends do you see in this data?

Now, enter the prompt into the chat interface and wait for the results. For the demonstration, we used `GPT4All-J v1.3-groovy`, described as "Current best commercially licensable model based on GPT-J and trained by Nomic AI on the latest curated GPT4All dataset". As the model runs offline on your machine without sending data to an external endpoint, it may take some time to receive the summary. Note that the quality of the results varies depending on the model you select. Here, we were limited to models licensed for commercial use.
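If you prefer to keep the data in code, you can generate the prompt above from the raw numbers. The same numbers also give you reference facts for checking the model's answers below. This is a minimal sketch: `build_prompt` and `year_over_year` are hypothetical helpers, and "largest increase" is computed both in absolute and in relative terms because the follow-up question does not say which it means.

```python
# Synthetic revenue data from the article's prompt (USD by year).
REVENUE = {
    2001: 15930.00, 2002: 12766.00, 2003: 21893.00, 2004: 24922.00,
    2005: 358595.00, 2006: 482503.00, 2007: 541379.00, 2008: 437635.00,
    2009: 777742.00, 2010: 1240346.00,
}

FENCE = "`" * 3  # markdown code fence, built here to avoid a literal one in the source


def build_prompt(revenue: dict) -> str:
    """Render the data as the markdown-table prompt used in the chat client."""
    rows = [f"|{year}|{value:.2f}$|" for year, value in sorted(revenue.items())]
    table = "\n".join(["|Year|Revenue|", "|---|---|", *rows])
    return (
        "Here is the dataset with the historical e-commerce company revenue "
        "in USD by year in a markdown table:\n\n"
        f"{FENCE}\n{table}\n{FENCE}\n\n"
        "Please write an executive summary of this dataset."
    )


def year_over_year(revenue: dict) -> dict:
    """Map each year to (absolute change, relative change) versus the previous year."""
    years = sorted(revenue)
    return {
        curr: (revenue[curr] - revenue[prev], (revenue[curr] - revenue[prev]) / revenue[prev])
        for prev, curr in zip(years, years[1:])
    }


changes = year_over_year(REVENUE)
largest_abs = max(changes, key=lambda y: changes[y][0])
largest_rel = max(changes, key=lambda y: changes[y][1])
declines = [y for y, (delta, _) in changes.items() if delta < 0]

print(build_prompt(REVENUE))
print("Largest absolute increase:", largest_abs, changes[largest_abs][0])
print("Largest relative increase:", largest_rel, f"{changes[largest_rel][1]:.0%}")
print("Years with a decline:", declines)
```

On this data, it reports 2010 as the largest absolute increase (462,604 USD), 2005 as the largest relative one (about 1339%), and declines in 2002 and 2008. Those are the facts to hold the model's summary against.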

#RESPONSE:

The dataset contains information on the revenue of a historical e-commerce company in USD for each year from 2001 to 2010. The table shows that the revenue increased steadily over time, with peaks in 2004 and 2007 before declining slightly in 2008.

The result is not bad, and most of the facts are correct. There is one clear mistake: the big jump in revenue happened in 2005, not in 2004. The wording is also generous: revenue dipped in 2002, and the roughly 19% drop in 2008 is hardly slight. It's a good example of why you always need to review the results provided by the model. Next, we added a follow-up question about the insights:

#QUESTION: What are 3 insights from this dataset?

#RESPONSE:

1. The revenue of the company has been increasing steadily since 2001, indicating a strong growth trend for the industry.

2. There were two significant peaks in revenue during 2004 and 2007, suggesting that these years may have been particularly successful for the company.

3. However, there was a decline in revenue in 2008 before recovering slightly in 2009 and 2010. This suggests that the company faced challenges or setbacks in this year, but managed to bounce back over time.

As working with LLMs requires extensive computation, your local machine may not be powerful enough for fast interaction with GPT models. Also, to let your whole organization work efficiently with GPT, you can create a single, shared instance of the assistant. A private cloud is the go-to solution for this scenario.

Note that dedicated tools for Large Language Models are coming soon to Google Cloud Platform (such as Generative AI Studio and Model Garden, announced at Google I/O 2023 as a Public Preview). You can also still use an external API (e.g. OpenAI). But to stick to our use case of a private GPT assistant, we'll run an instance in the private cloud.

Now, let's demonstrate one possible way of using private GPT models in a cloud environment. In our basic scenario, we'll use cloud computing to provide the infrastructure (a more powerful computing environment than a local machine) and allow multiple users across the organization to use the private GPT-based assistant.

Moreover, for better control and configuration options, we’ll use the Python client for the GPT4All ecosystem.

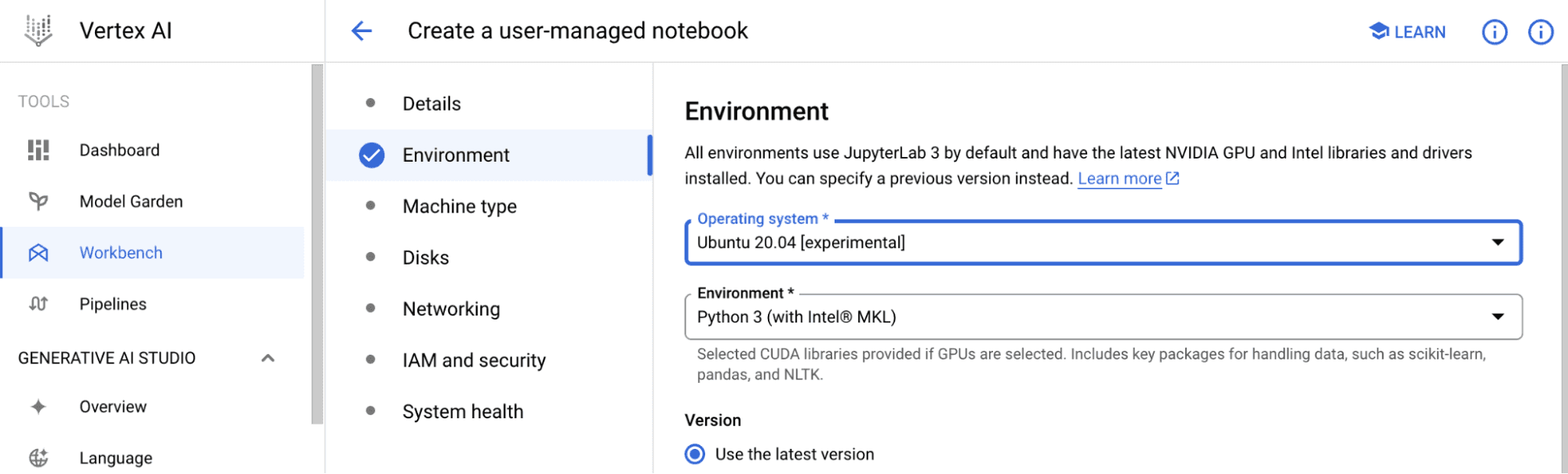

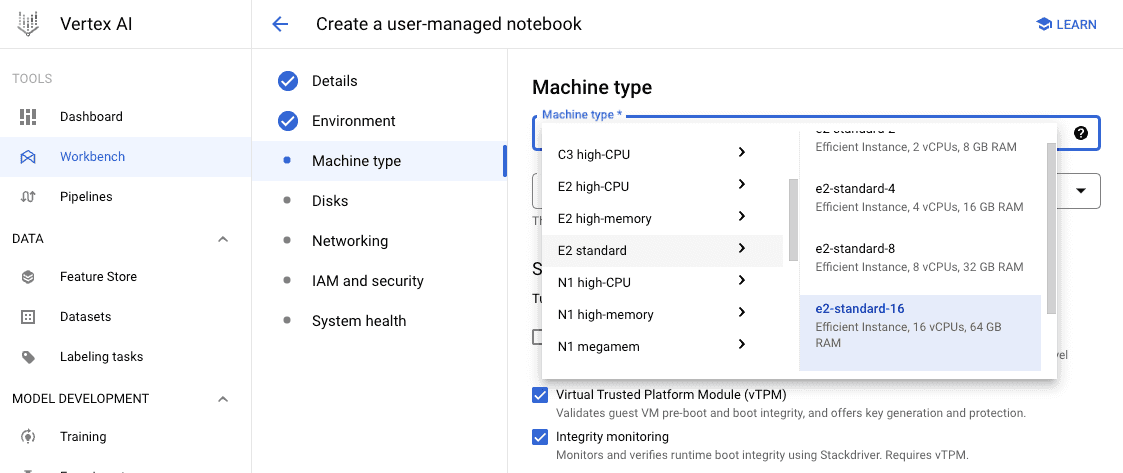

First, we need to provide infrastructure. We'll start with a Vertex AI Workbench (user-managed) instance based on the Ubuntu operating system. The main benefit is that we can spin up a compute instance with more CPUs and RAM than we have on a local machine, or select hardware accelerators (e.g. a GPU, or a CPU platform with specific instruction set extensions). This directly affects how fast results are generated.
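To see which instruction set extensions the chosen CPU platform actually exposes, you can read `/proc/cpuinfo` on the Ubuntu instance. CPU-only LLM runtimes such as the one behind gpt4all typically rely on SIMD extensions like AVX/AVX2. The exact flags a given gpt4all build needs are an assumption to confirm in its documentation, and `cpu_flags`/`report` are hypothetical helper names.

```python
from pathlib import Path

# Extensions that CPU-based LLM runtimes commonly take advantage of (assumed list).
INTERESTING_FLAGS = ("avx", "avx2", "avx512f", "fma", "f16c")


def cpu_flags(cpuinfo_text: str) -> set:
    """Collect the feature flags listed in /proc/cpuinfo-formatted text."""
    flags = set()
    for line in cpuinfo_text.splitlines():
        key, sep, value = line.partition(":")
        if sep and key.strip() == "flags":
            flags.update(value.split())
    return flags


def report(cpuinfo_path: str = "/proc/cpuinfo") -> dict:
    """Map each interesting flag to whether this (Linux) machine reports it."""
    path = Path(cpuinfo_path)
    text = path.read_text() if path.exists() else ""
    available = cpu_flags(text)
    return {flag: flag in available for flag in INTERESTING_FLAGS}


if __name__ == "__main__":
    print(report())
```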

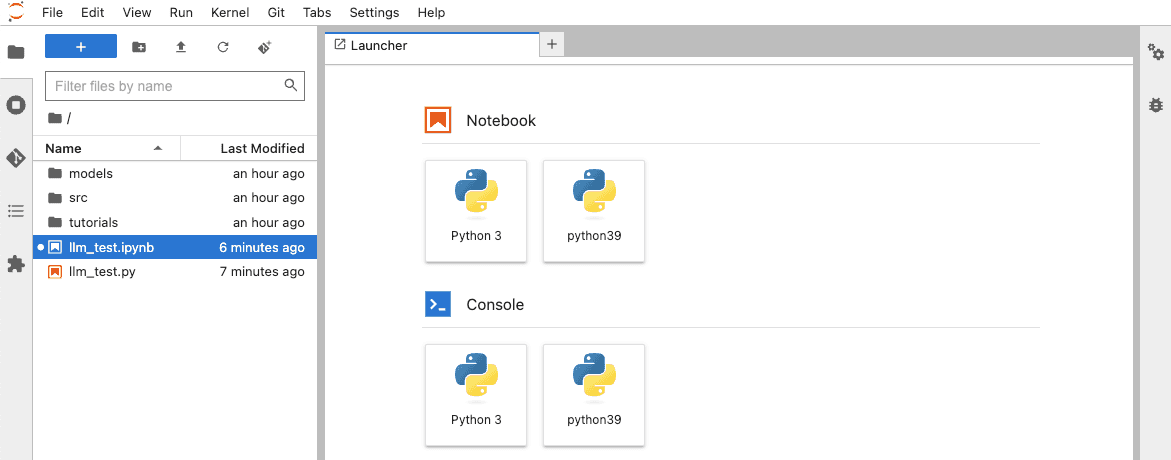

Vertex AI Workbench notebooks run Python 3.7 by default, but gpt4all requires Python 3.8+. Fortunately, we can add kernels to the workbench with Conda. To do this, run the following commands in the JupyterLab terminal:

```shell
conda create -n python39 python=3.9
conda activate python39
conda install ipykernel
ipython kernel install --name "python39" --user
```

Next, we need to install the Python client for gpt4all.

```shell
pip install pygpt4all
```

Now we have everything in place to start interacting with a private LLM on a private cloud. Just create a new notebook with the Python 3.9 kernel:

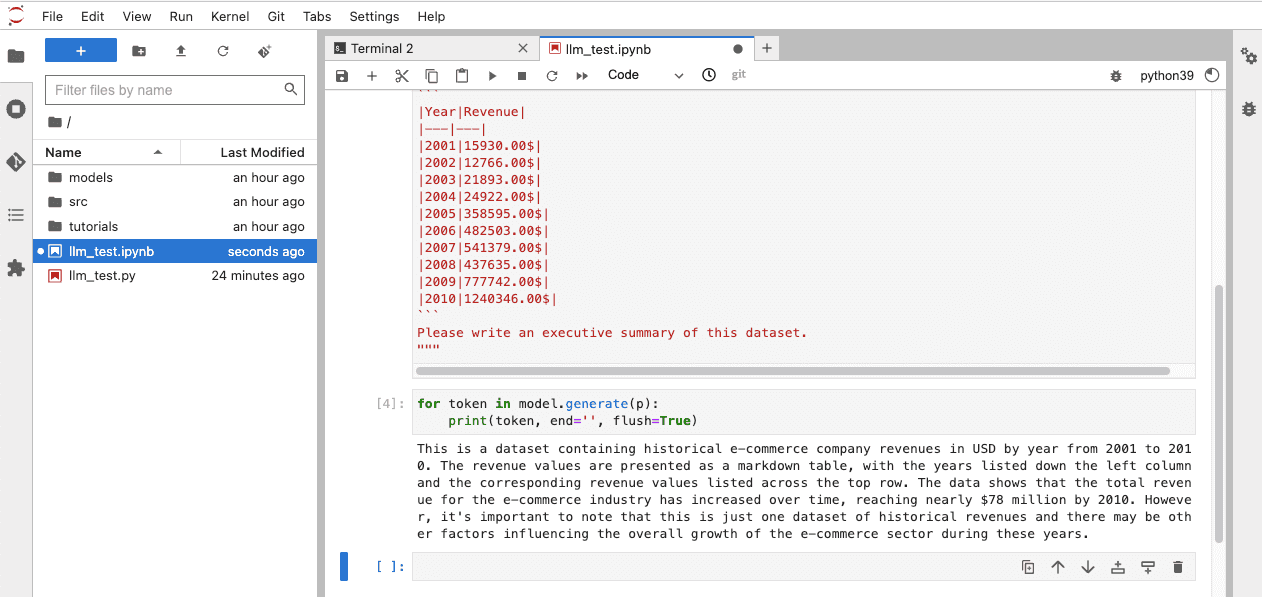
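Once the notebook is open, a quick check confirms that it really runs on the new kernel rather than the default Python 3.7 one. This is a minimal sketch: `kernel_is_supported` is a hypothetical helper, and the 3.8 threshold comes from the requirement stated above.

```python
import sys

# gpt4all's Python client needs Python 3.8 or newer.
MIN_VERSION = (3, 8)


def kernel_is_supported(version_info=sys.version_info) -> bool:
    """True when the interpreter version is new enough for the gpt4all client."""
    return tuple(version_info[:2]) >= MIN_VERSION


print(sys.executable)  # should point inside the python39 conda environment
print("Supported:", kernel_is_supported())
```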

Then ask your assistant to generate a summary of your data (GitHub gist: https://gist.github.com/michalbrys/6ba99e91772504156df029cd40242437):

````python
from pygpt4all.models.gpt4all import GPT4All

# Load the downloaded model weights from a local file; inference runs on this instance.
model = GPT4All('./models/ggml-gpt4all-l13b-snoozy.bin')

# The same prompt as in the GUI example: a markdown table plus an instruction.
p = """
Here is the dataset with the historical e-commerce company revenue in USD by year in a markdown table:

```
|Year|Revenue|
|---|---|
|2001|15930.00$|
|2002|12766.00$|
|2003|21893.00$|
|2004|24922.00$|
|2005|358595.00$|
|2006|482503.00$|
|2007|541379.00$|
|2008|437635.00$|
|2009|777742.00$|
|2010|1240346.00$|
```

Please write an executive summary of this dataset.
"""

# generate() yields tokens as they are produced; print them as a stream.
for token in model.generate(p):
    print(token, end='', flush=True)
````

Feel free to experiment with the configuration parameters available in gpt4all. You can increase the number of threads used by the model, keep the model in memory, or set a random seed for reproducibility. You may notice further speed improvements by running the code above as a Python script from the JupyterLab terminal instead of in a notebook. See the package documentation: https://docs.gpt4all.io/gpt4all_python.html
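Parameter names can differ between releases of the gpt4all Python bindings (for example, pygpt4all versus the newer gpt4all package). Rather than guessing, you can ask the installed version which options it accepts. Below is a minimal sketch using the standard `inspect` module. `fake_generate` is a hypothetical stand-in whose parameter names are illustrative only. In the notebook, you would pass `model.generate` instead, assuming that method is a regular Python function that `inspect.signature` can read.

```python
import inspect

REQUIRED = "<required>"


def list_options(func) -> dict:
    """Return the named parameters a callable accepts, with their default values."""
    options = {}
    for name, param in inspect.signature(func).parameters.items():
        if param.kind in (param.VAR_POSITIONAL, param.VAR_KEYWORD):
            continue  # *args / **kwargs have no fixed name or default to report
        options[name] = REQUIRED if param.default is param.empty else param.default
    return options


def fake_generate(prompt, n_predict=128, n_threads=4, seed=-1, **kwargs):
    """Hypothetical stand-in for a model's generate(); names are illustrative only."""
    raise NotImplementedError


print(list_options(fake_generate))
```

In the notebook, `print(list_options(model.generate))` would list the real options of your installed version, which you can then cross-check against the documentation linked above.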

Congrats! You just launched a private GPT-backed assistant. But it’s only the beginning of the journey. As the next step, you may consider adding your private documents to the model context for better results, or integrating it with the other internal systems.

If you want to discuss any potential LLM applications in your business or any other data-related topic that GetInData can help you with (including Advanced Analytics, Data-driven Organization Strategy, DataOps, MLOps and more), please sign up for a free consultation.
