Enabling Hive on Spark on CDH 5.14 — a few problems (and solutions)

Recently I’ve had an opportunity to configure CDH 5.14 Hadoop cluster of one of GetInData’s customers to make it possible to use Hive on Spark…

Read moreWhat is BigQuery ML? BQML empowers data analysts to create and execute ML models through existing SQL tools & skills. Thanks to that, data analysts can build machine learning models in BigQuery. Below we explain how you can start using BigQuery ML to create and evaluate ML models.

In this example, we’ll build a machine learning model to predict the probability of adding a product to a shopping cart by the e-commerce website user.

The problem to put it simply, is a binary classification (product added to cart / product not added to cart) problem. In BigQuery ML (BQML), this classification can be delivered by multiple algorithms:

To minimize the complexity of the example, we decided on a commonly known logistic regression model. This machine learning model produces the classification along with the attribution to class probability.

For the training data, we’ll use the publicly available BigQuery sample dataset for Google Analytics 4 ecommerce web implementation. We’re focusing on the latest Google Analytics 4 version due to the Universal Analytics sunset announced for July 1, 2023:

On July 1, 2023, standard Universal Analytics properties will no longer process data. You'll be able to see your Universal Analytics reports for a period of time after July 1, 2023. However, new data will only flow into Google Analytics 4 properties. Source.

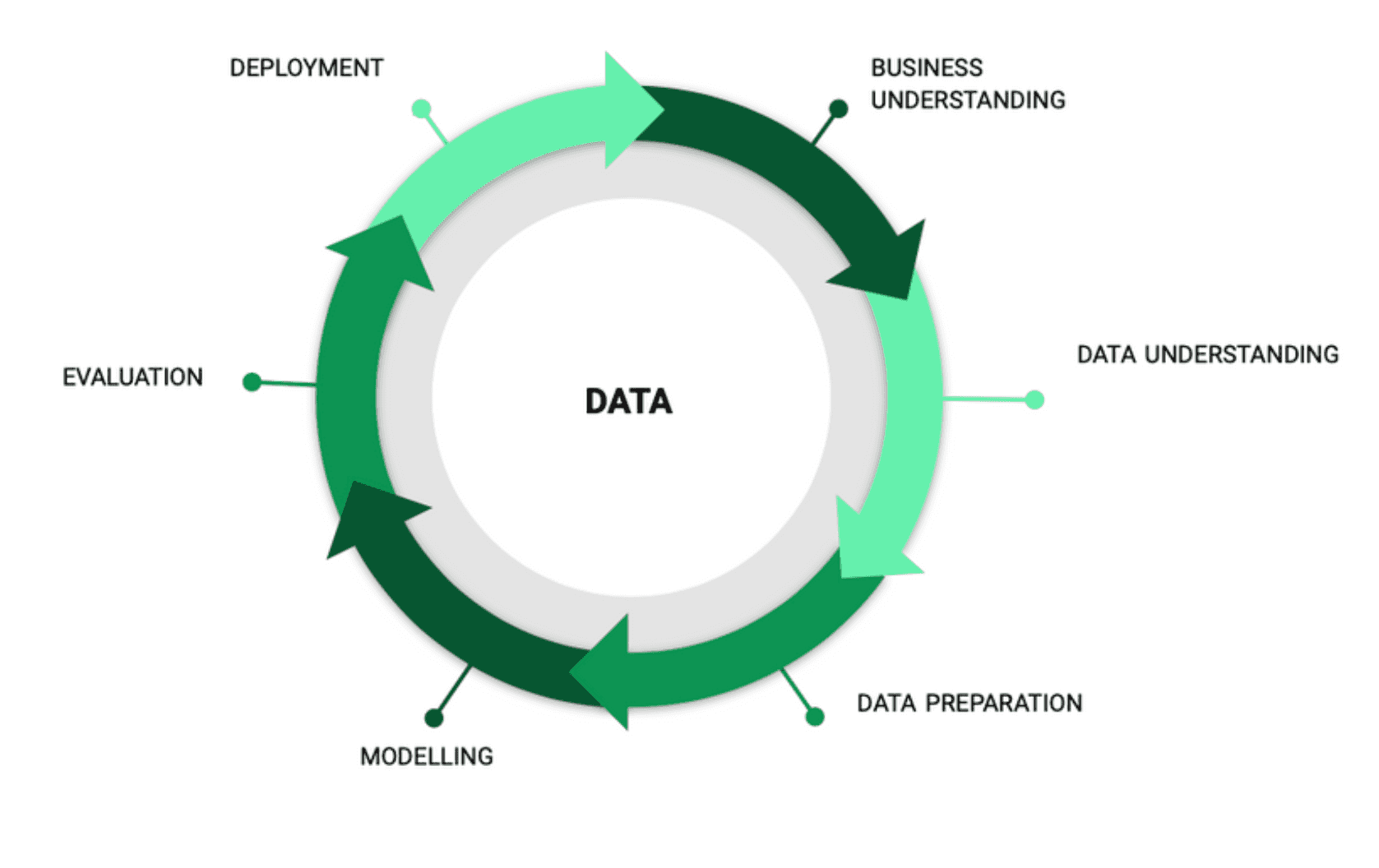

To structure the description of the application of BQML, we’ll use the Cross-industry standard process for Data Mining (CRISP-DM) framework, which will let us split the complex scenario of mode building into the well-defined steps of:

This approach will be the first, simplified iteration of the whole process. We’ll build more advanced scenarios in the next phases (See: Next steps).

Taking into account the raw data location (BigQuery) and the available budget and complexity constraints, we decided to use the BigQuery Machine Learning (BQML) features to build our prototype model.

Using BQML, by its design, provides several very practical features:

To start working with BigQuery ML, you only need the web browser - no IDE or other tools are required. What’s worth mentioning, is that data processing and model training runs entirely on the Google Cloud Platform, so you don’t need a powerful local machine to work, even with large datasets.

Next, just create a project on Google Cloud Platform, and optionally apply for the free trial credits. Under the project, you’ll create BigQuery datasets, and train your ML models.

Important: We called our project `bqmlhackathon`. Feel free to select your own project ID, unique across the whole Google Cloud Platform.

First, we need to get the data we’ll be using to train the model.

Note: In this example, we’ll use the sample, publicly available Google Merchandise Store data export from Google Analytics 4 to BigQuery. If you’d like to use your own data, please configure the export from your website following these instructions. You can learn more about the dataset here: Google Analytics documentation.

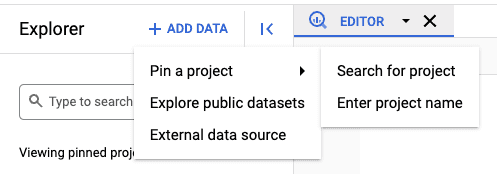

To see the Google Analytics data in BigQuery UI, go to [+Add data] -> Pin a project -> Enter project name: bigquery-public-data and see the ga4_obfuscated_sample_ecommerce.

By completing this step, you’ll see the Google Analytics 4 data in BigQuery. Let’s get familiar with the dataset content.

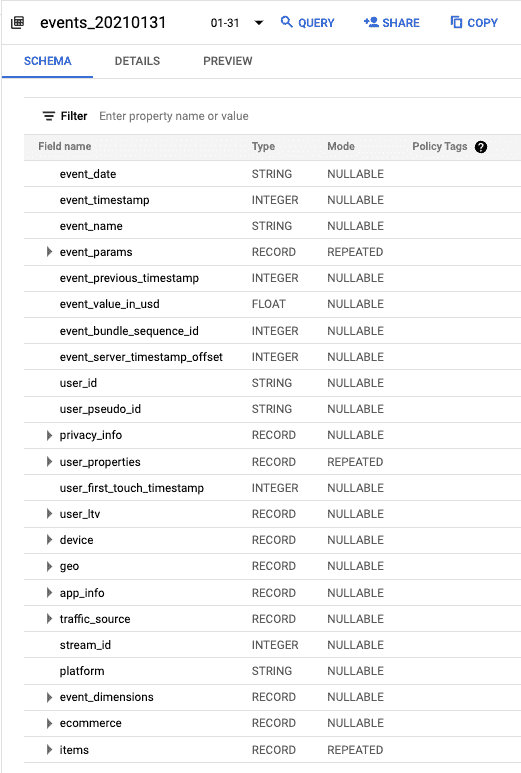

At this step, we’ll do a walk-through of the available data. You can see the schema in BigQuery UI, by clicking on the ga4_obfuscated_sample_ecommerce dataset and events_ table.

The meaning of each column is well described in the Google Analytics documentation.

Note, that the table is partitioned by date. You can explore the available partitions using the drop-down menu in BigQuery UI. Additionally, in your queries you may want to specify the desired rate range by using wildcard tables, i.e. to query data between '20210101' and '20210131'.

--count unique users number in JanuarySELECTCOUNT(DISTINCT user_pseudo_id) AS usersFROM`bigquery-public-data.ga4_obfuscated_sample_ecommerce.events_*`WHERE_TABLE_SUFFIX BETWEEN '20210101' AND '20210131'

Link to the query: bqml_wildcard_tables.sql

We encourage you to run some basic queries and see how much more you can get by querying the dataset instead of only using Google Analytics UI. Take advantage of BigQuery aggregate functions like AVG(), COUNT(), COUNTIF(), MAX(), MIN(), SUM().

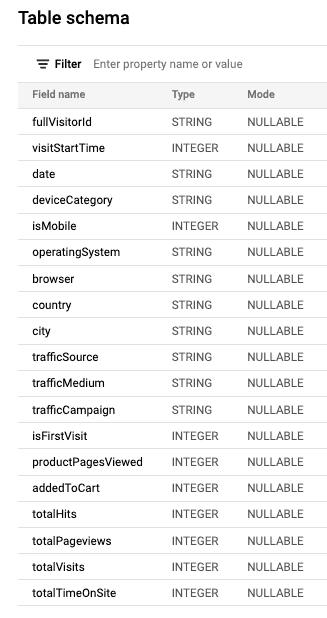

To train a machine learning model, you’ll need to create features, which could be both raw and transformed data. Based on our business experience, we propose the following set of features. We’ll create this dataset from the raw Google Analytics 4 data in the next steps.

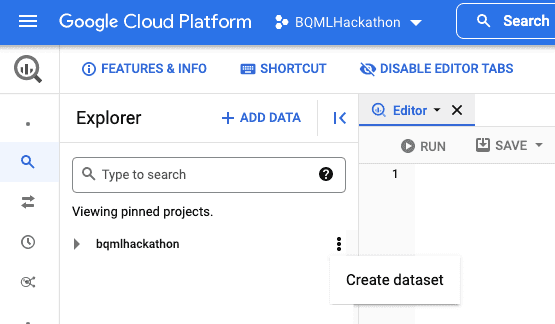

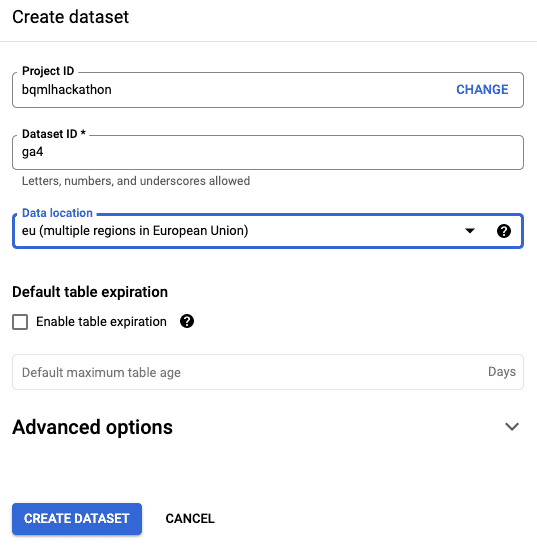

To create it under your project in BigQuery, first you’ll need to create a place for the data and your ML models. In BigQuery, it’s called a dataset.

In the next step, remember to set the proper data location (US/EU) for compliance reasons.

We’ll use eu (multiple regions in the European Union).

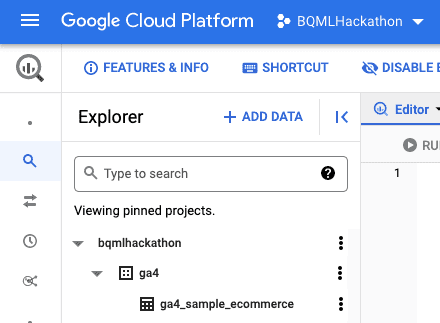

Now, you can create your dataset containing Google Analytics 4 data by running the following query: bqml_ga4_dataset.sql

If you changed the project/dataset names to different values than proposed above, update the lines with:

bqmlhackathon.ga4.ga4_sample_ecommerce

to

<project_id>.ga4.ga4_sample_ecommerce

and then also use the updated values in the next steps.

After completing this step, you’ll see your dataset on the left sidebar.

To better understand the prepared dataset, you may want to do some exploratory data analysis using aggregation functions in BigQuery, described in the previous step.

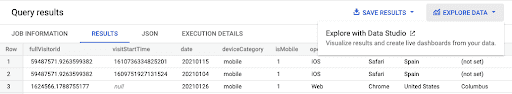

Alternatively, If you prefer a more interactive environment for data exploration, you may visualize and drill-down your query results in Google Data Studio. To do this, just run the query on the dataset you want to visualize, and select [Explore data] -> [Explore with Data Studio]

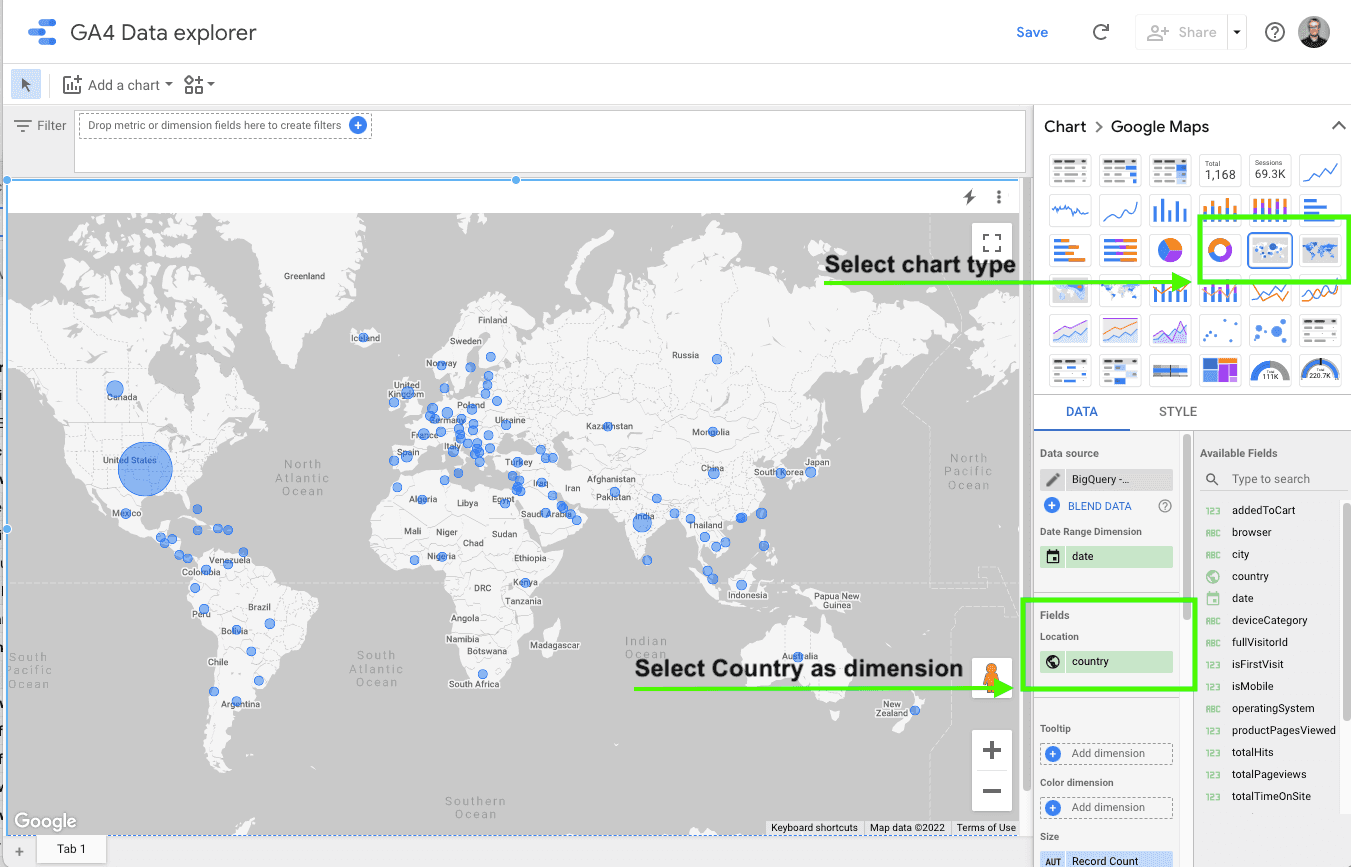

The data explorer will open in a new window, where you can use many types of data visualization to inspect and discover the dataset used for model training.

In this example, since the dataset is small (<20MB) and limited to a certain time period, we just run the query: SELECT * FROM `bqmlhackathon.ga4.ga4_sample_ecommerce`, then [Explore data] -> [Explore with Data Studio]. Then, we change the chart type to map, and select Country as a dimension to visualize.

You may extend the dataset by performing some feature engineering, like data transformations, feature creation, and feature selection.

BigQuery performs some automatic data transformations, to specifically match the input data format to the model input requirements:

For more advanced scenarios, you may want to perform more advanced manual data preprocessing, using ML functions such as:

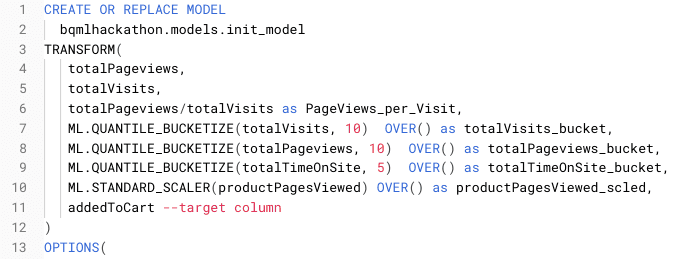

With BigQuery ML (BQML), you may train a model using raw data, or optionally add data preprocessing / feature engineering before the model training step using the TRANSFORM clause.

(See: Using the BigQuery ML TRANSFORM clause for feature engineering)

We define data transformations along with the model definition using the TRANSFORM statement in the following way:

If you decide to add the TRANSFORM clause on top of SELECT, the model will only use the features selected in this part, so you can use both feature selection and feature engineering there.

You can use the data transformations to produce features carrying more relevant information for the model. You’ll see the TRANSFORM clause in practice used for the model input data transformations in the next section.

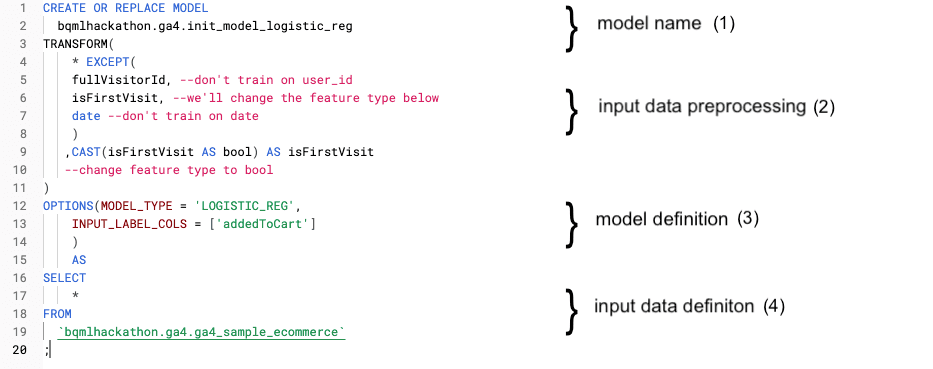

Once we’ve got a prepared dataset in place, we can train our first machine learning model, which will be the logistic regression classifier.

In your future projects, you may need to use a different model type to address your problem. This model selection guide may be useful.

In this simple scenario, you don’t need to create a separate train and test dataset. BigQuery ML will split your input data automatically to the train and test dataset.

To train the model in BigQuery, you define it in the SQL-like query.

TRANSFORM).

The link to query: bqml_create_model.sql

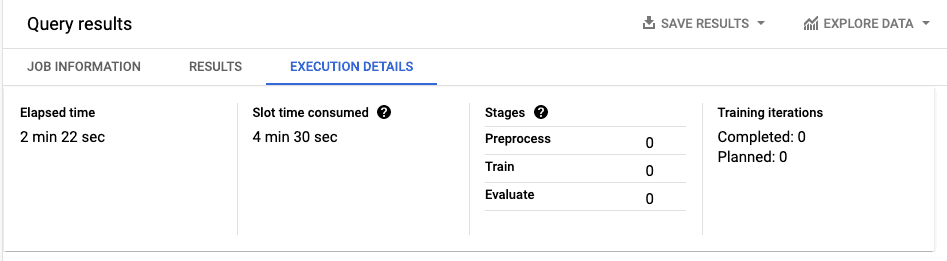

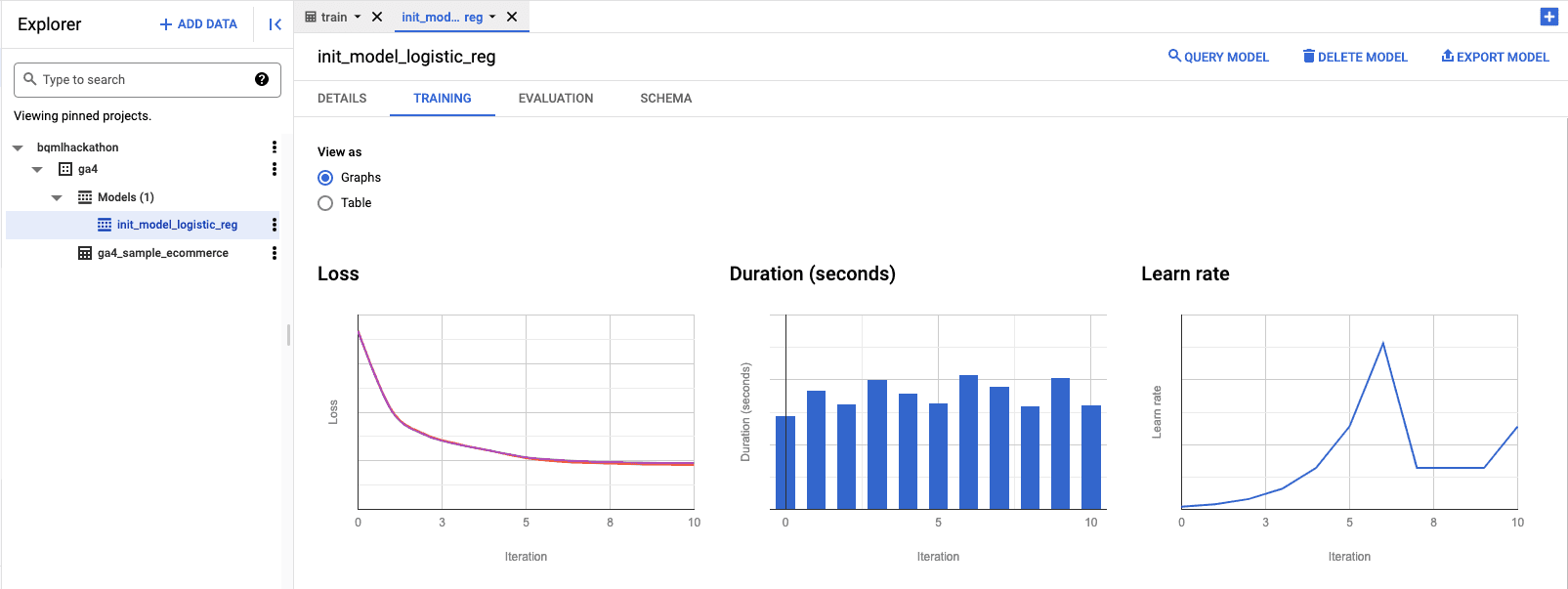

When you run the query, the model training will start. Model training time depends on the model type. It may take from a few minutes for simple models (like our case) to several hours for more complex models (like neural networks). You may follow the model training progress in the BigQuery UI:

You can also go back to the training process data in the TRAINING tab in your trained model details:

Once the model is trained, you can evaluate the model performance in a few ways.

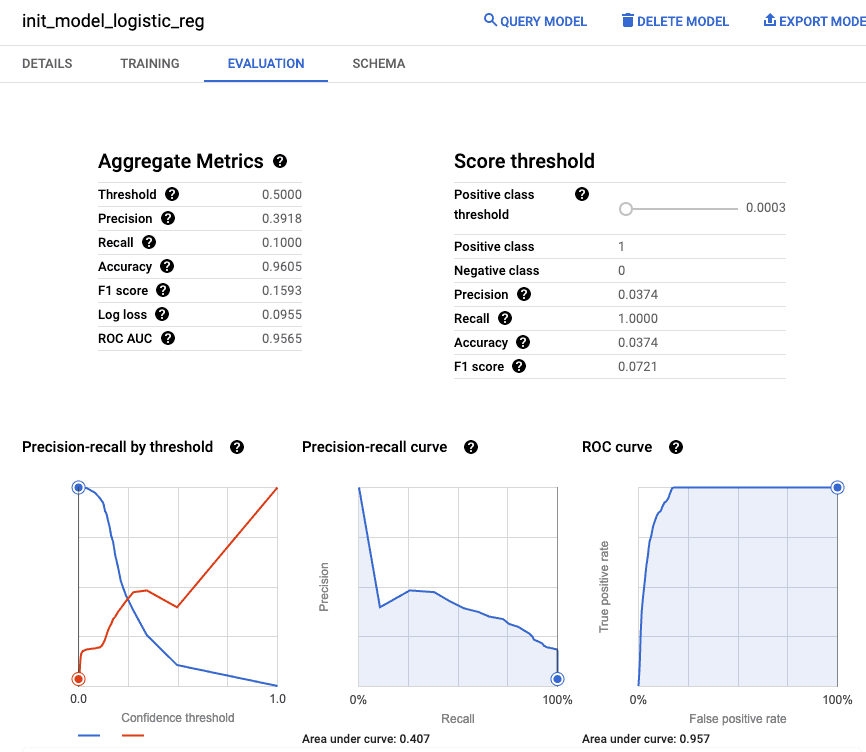

BigQuery also calculates the model performance metrics (Depending on the model type, i.e. precision, recall, accuracy, F1 score, log loss, or ROC AUC). You can preview it on the EVALUATION tab in the BigQuery console:

There are also SQL queries (i.e. ML.EVALUATE, ML.ADVANCED_WEIGHT) that allow access to this data in tabular form. If you want to learn more about the evaluation metrics interpretation, check the ML Crash Course sections about accuracy, precision and recall, and ROC AUC.

At this point you can decide if you want to proceed with the trained model, or whether you want to go back to the model training step and improve the model performance by defining a more complex model.

When you’re satisfied with the model performance, it’s time to use the model on the previously unseen (by the model) data. Alternatively, you can export the model and use it outside of the BigQuery in your production systems, i.e. to deploy it for online predictions. We’ll cover this topic in the next blog post.

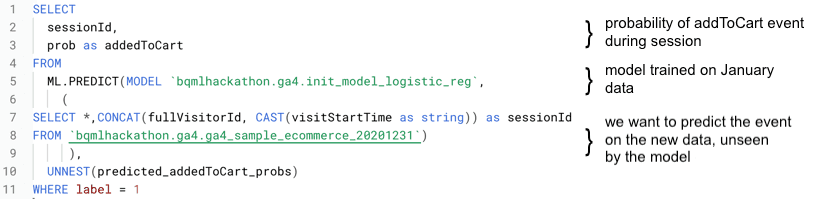

In our example, we’ll predict the probability for the addedToCart event for the new sessions on our e-commerce website.

First, prepare the dataset with the new data, which was unseen by the model (see: 1_bqml_ga4_dataset.sql and use different dates than were used for model training).

For example, set the date for 20201231, and name the table: bqmlhackathon.ga4.ga4_sample_ecommerce_20201231

Note, that currently 20210131 is the last available date in the public BigQuery GA4 dataset. So, to get predictions on the data unseen by the model during training, we’ll use 20201231. Usually, you’ll use the data after the training period.

You can get the predictions using the following query:

Link to the query: bqml_model_deployment.sql

Congrats! You just used your model to predict the probability of addedToCart event on the new data!

In this post, we explained the process of training, validating and deploying a machine learning model using BigQuery ML (BQML). Our example model predicts the probability of adding an item to the shopping cart on ane-commerce website. We used the Google Analytics 4 export to BigQuery to train a logistic regression classifier and then we predicted the probability of a sample event (addedToCart) on the new sessions.

Soon, we will publish a blog post about advanced model training in BigQuery ML (BQML), so if you don’t want to miss the publication, subscribe to our newsletter. Last but not least, we are happy to discuss Machine Learning models in your business, do not hesitate to contact us.

Interested in ML and MLOps solutions? How to improve ML processes and scale project deliverability? Watch our MLOps demo and sign up for a free consultation.

Recently I’ve had an opportunity to configure CDH 5.14 Hadoop cluster of one of GetInData’s customers to make it possible to use Hive on Spark…

Read moreIn the dynamic world of e-commerce, providing exceptional customer service is no longer an option – it's a necessity. The rise of online shopping has…

Read moreThe Data Mass Gdańsk Summit is behind us. So, the time has come to review and summarize the 2023 edition. In this blog post, we will give you a review…

Read moreToday's fast-paced business environment requires companies to migrate their data infrastructure from on-premises centers to the cloud for better…

Read moreOne of the core features of an MLOps platform is the capability of tracking and recording experiments, which can then be shared and compared. It also…

Read moreIn today's data-driven world, maintaining the quality and integrity of your data is paramount. Ensuring that organizations' datasets are accurate…

Read moreTogether, we will select the best Big Data solutions for your organization and build a project that will have a real impact on your organization.

What did you find most impressive about GetInData?