So, you have an existing infrastructure in the cloud and want to wrap it up as code in a new, shiny IaC style? Splendid! Oh… it spans two different cloud providers, has several hundred resources and services, in several different regions? Quite a pickle indeed! But don’t worry mate, I’ve just been through a similar situation and lived to tell the tale.

Infrastructure as Code definitely makes sense as one of the basic DevOps principles. Managing cloud resources manually tends to end in ad-hoc solutions (“because he said he needed this one EC2 instance for a moment”) and a strange spaghetti of services (“no one knows if someone uses it or if it can be deleted”); IaC forces some rules and order instead. Keeping infrastructure as code makes it easier to manage, review and revert changes if needed. Also, the whole infrastructure and its parts can be easily recreated in a reliable way. Terraform is also nice enough to show how the changes in code would affect the infrastructure, should the code be executed, which is an additional safety measure. Ideally of course, we should be implementing IaC from the very beginning.

Terraform was created as a provisioning tool with cloud services in mind, and shines brightest when orchestrating cloud services. It supports most of the popular providers like AWS, GCP and Azure, has great documentation, and is open-source and user-friendly. Puppet, Ansible, Chef and Salt are better at configuration management, and CloudFormation is AWS-only.

I assume the reader has some experience with Terraform, so I won’t explain what a state or a resource is. I will focus on imports, using AWS in the examples.

To kick things off, let’s import a single IAM user. I made a simple Terraform configuration in the file main.tf:

    terraform {
      required_providers {
        aws = {
          source  = "hashicorp/aws"
          version = "~> 3.27"
        }
      }

      required_version = ">= 0.14.9"
    }

    provider "aws" {
      profile = "default"
      region  = "eu-west-1"
    }

Next, I made a single user named “terraform_user” manually from the AWS Web Console.

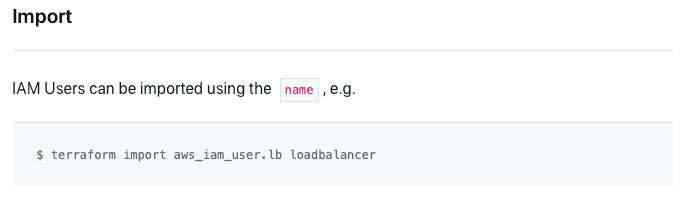

From there, let's see how to import an IAM user. The majority of resources supported by Terraform have an “Import” section on their documentation page.

So, in this example, import takes these arguments:

    type_of_resource.resource_name existing_user_name

Quick note:

Do not be misled: not every resource import is that straightforward. Some, for example aws_iam_role_policy_attachment, are more complicated:

    $ terraform import aws_iam_role_policy_attachment.test-attach test-role/arn:aws:iam::xxxxxxxxxxxx:policy/test-policy

Here the role name and the policy ARN are separated by /. Always check the “Import” section.
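Composite import IDs like this one are easy to mistype, so it can help to assemble the command with a small helper. The following is only a sketch: the function name role_policy_attachment_import is hypothetical (it is not part of Terraform), and it merely prints the command instead of running it.

```shell
#!/bin/sh
# Hypothetical helper (not part of Terraform): prints the import command for
# an aws_iam_role_policy_attachment, whose import ID has the form
# "role-name/policy-arn". Nothing is executed against AWS or Terraform.
role_policy_attachment_import() {
  resource_name="$1"   # resource name used in the Terraform configuration
  role_name="$2"       # name of the existing IAM role
  policy_arn="$3"      # ARN of the policy attached to that role
  printf 'terraform import aws_iam_role_policy_attachment.%s %s/%s\n' \
    "$resource_name" "$role_name" "$policy_arn"
}

# Example call with the placeholder values from the note above.
role_policy_attachment_import test-attach test-role \
  "arn:aws:iam::xxxxxxxxxxxx:policy/test-policy"
```

Printing the command first makes it possible to review it before running anything against the state.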

With that knowledge, I added a simple mock-up of my user resource to my Terraform configuration:

    resource "aws_iam_user" "my_user" {
      name = "terraform_user"
    }

Executing terraform plan shows:

      # aws_iam_user.my_user will be created
      + resource "aws_iam_user" "my_user" {
          + arn           = (known after apply)
          + force_destroy = false
          + id            = (known after apply)
          + name          = "terraform_user"
          + path          = "/"
          + tags_all      = (known after apply)
          + unique_id     = (known after apply)
        }

    Plan: 1 to add, 0 to change, 0 to destroy.

Looks OK, so let's import the user by executing the command:

    $ terraform import aws_iam_user.my_user terraform_user
    aws_iam_user.my_user: Importing from ID "terraform_user"...
    aws_iam_user.my_user: Import prepared!
      Prepared aws_iam_user for import
    aws_iam_user.my_user: Refreshing state... [id=terraform_user]

    Import successful!

    The resources that were imported are shown above. These resources are now in
    your Terraform state and will henceforth be managed by Terraform.

So the user is listed in state:

    $ terraform state list
    aws_iam_user.my_user

Let’s check the plan again:

    $ terraform plan

    Terraform will perform the following actions:

      # aws_iam_user.my_user will be updated in-place
      ~ resource "aws_iam_user" "my_user" {
          + force_destroy = false
            id            = "terraform_user"
            name          = "terraform_user"
          ~ tags          = {
              - "color" = "red" -> null
            }
          ~ tags_all      = {
              - "color" = "red"
            } -> (known after apply)
            # (3 unchanged attributes hidden)
        }

    Plan: 0 to add, 1 to change, 0 to destroy.

The plan command found a small difference between my code and the existing user. force_destroy = false is the default value, so it can be ignored in this case. I will add the missing tag to my Terraform config:

    resource "aws_iam_user" "my_user" {
      name = "terraform_user"

      tags = {
        "color" = "red"
      }
    }

Now the plan shows:

    No changes. Your infrastructure matches the configuration.

The job is done, the user is added to the state and managed in Terraform. Applying the plan is also safe and would not cause any changes.
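The “no changes” check can also be scripted, which is handy when it has to be repeated after every import. Below is a minimal sketch, assuming the terraform binary is on PATH and the working directory is already initialised. The function name check_no_drift is hypothetical; it relies on the -detailed-exitcode flag of terraform plan, which returns 0 for an empty diff, 1 for an error and 2 for a non-empty diff.

```shell
#!/bin/sh
# Hypothetical helper: reports whether the configuration matches the real
# infrastructure, based on the exit code of `terraform plan -detailed-exitcode`
# (0 = no changes, 1 = error, 2 = changes present).
check_no_drift() {
  terraform plan -detailed-exitcode -input=false >/dev/null 2>&1 && rc=0 || rc=$?
  case "$rc" in
    0) echo "No changes: configuration matches the infrastructure." ;;
    2) echo "Plan contains changes: review the diff before applying."
       return 2 ;;
    *) echo "terraform plan failed (exit code $rc)."
       return 1 ;;
  esac
}

# Usage, from the directory containing main.tf:
#   check_no_drift && echo "import verified"
```

The plan output is discarded here on purpose; when the helper reports changes, run terraform plan again by hand to read the diff.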

In the case of mistakes or major edits, resources can be removed from state using the command:

    $ terraform state rm resource_type.resource_name

So in our case it will be:

    $ terraform state rm aws_iam_user.my_user

Okay, now do it manually several hundred times for different resources. See ya!

...just kidding. Adding it this way may be fine for a single resource, as we needed to look up the user name in the AWS Console to import it. To efficiently import big, existing infrastructure, we need some kind of mapping tool for all kinds of resources. As usual, the open source community did not disappoint.

https://github.com/GoogleCloudPlatform/terraformer

Terraformer is an open source CLI tool for generating Terraform code based on existing infrastructure. It’s very good for mapping cloud resources, but won’t do the whole job for you. The generated code often contains default values, which decrease readability, and the resource names are not that user-friendly. Imports still need to be done manually with the terraform import command, too. Our example user has some resources attached to it, so let’s see what we get from our IAM example (a region is required in the terraformer command, but it doesn’t matter for the IAM service, which is global):

    $ terraformer import aws --resources="iam" --regions="eu-west-1" --profile="default"
    2021/10/11 05:00:53 aws importing default region
    2021/10/11 05:00:55 aws importing... iam
    2021/10/11 05:00:59 aws done importing iam
    2021/10/11 05:00:59 Number of resources for service iam: 9
    2021/10/11 05:00:59 Refreshing state... aws_iam_role.tfer--AWSServiceRoleForSupport
    2021/10/11 05:00:59 Refreshing state... aws_iam_role_policy_attachment.tfer--AWSServiceRoleForSupport_AWSSupportServiceRolePolicy
    2021/10/11 05:00:59 Refreshing state... aws_iam_role_policy_attachment.tfer--AWSServiceRoleForTrustedAdvisor_AWSTrustedAdvisorServiceRolePolicy
    2021/10/11 05:00:59 Refreshing state... aws_iam_user_group_membership.tfer--terraform_user-002F-Admin
    2021/10/11 05:00:59 Refreshing state... aws_iam_group.tfer--Admin
    2021/10/11 05:00:59 Refreshing state... aws_iam_group_policy_attachment.tfer--Admin_AmazonEC2FullAccess
    2021/10/11 05:00:59 Refreshing state... aws_iam_user_policy_attachment.tfer--terraform_user_AmazonS3ReadOnlyAccess
    2021/10/11 05:00:59 Refreshing state... aws_iam_user.tfer--AIDAR33EZOPUUP2XE5OJ4
    2021/10/11 05:00:59 Refreshing state... aws_iam_role.tfer--AWSServiceRoleForTrustedAdvisor
    2021/10/11 05:01:02 Filtered number of resources for service iam: 9
    2021/10/11 05:01:02 aws Connecting....
    2021/10/11 05:01:02 aws save iam
    2021/10/11 05:01:02 aws save tfstate for iam

Terraformer found 9 different resources. Some of them, like roles and policies, are AWS managed, so we don’t have to import them. The user, group, group membership and policy attachments, on the other hand, are what interest us. These files are created by terraformer:

    .
    └── generated
        └── aws
            └── iam
                ├── iam_group_policy_attachment.tf
                ├── iam_group.tf
                ├── iam_role_policy_attachment.tf
                ├── iam_role.tf
                ├── iam_user_group_membership.tf
                ├── iam_user_policy_attachment.tf
                ├── iam_user.tf
                ├── outputs.tf
                ├── provider.tf
                └── terraform.tfstate

    3 directories, 10 files

That’s a lot for one IAM user. Let’s analyze the files. Going from the bottom:

    output "aws_iam_user_tfer--AIDAR33EZOPUUP2XE5OJ4_id" {
      value = "${aws_iam_user.tfer--AIDAR33EZOPUUP2XE5OJ4.id}"
    }

In its current form it’s very hard to read and use, so I would recommend writing your own outputs with some planning behind them.

In our scenario, those three files (terraform.tfstate, provider.tf and outputs.tf) were not very interesting; they can often be safely ignored and deleted. The rest are the actual mapped resources, and I will omit some of them, as they contain AWS managed roles and policies we don’t need to import.

iam_user.tf

    resource "aws_iam_user" "tfer--AIDAR33EZOPUUP2XE5OJ4" {
      force_destroy = "false"
      name          = "terraform_user"
      path          = "/"

      tags = {
        color = "red"
      }

      tags_all = {
        color = "red"
      }
    }

This looks almost exactly like the resource we added manually. Executing this code would of course be valid and have the exact same effect as the one we wrote, but there are still some things to consider. The attributes force_destroy, path and tags_all can be safely deleted from the config: the first two are set to their default values, and the last is a read-only attribute saved only in state, containing all tags of the resource for reference purposes. The resource name is not very informative, so it’s advisable to change it too.
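With hundreds of generated files, this clean-up is tedious to do by hand. Here is a minimal sketch of how it could be automated with awk. The function name strip_defaults is hypothetical, and the filter only knows the three attributes discussed above (force_destroy set to false, path set to "/", and the tags_all block), so the result should still be reviewed with terraform plan.

```shell
#!/bin/sh
# Hypothetical helper: reads Terraformer-generated HCL on stdin and drops
# force_destroy = false, path = "/" and the read-only tags_all block.
strip_defaults() {
  awk '
    # Start of a tags_all block: skip it, and keep skipping until its
    # closing brace unless the block is closed on the same line.
    /^[ \t]*tags_all[ \t]*=[ \t]*[{]/ { if ($0 !~ /[}]/) skipping = 1; next }
    skipping { if ($0 ~ /^[ \t]*[}]/) skipping = 0; next }
    # Attributes that only restate the defaults.
    /^[ \t]*force_destroy[ \t]*=[ \t]*"?false"?[ \t]*$/ { next }
    /^[ \t]*path[ \t]*=[ \t]*"\/"[ \t]*$/ { next }
    { print }
  '
}

# Example: clean the generated user resource shown above.
strip_defaults <<'EOF'
resource "aws_iam_user" "tfer--AIDAR33EZOPUUP2XE5OJ4" {
  force_destroy = "false"
  name          = "terraform_user"
  path          = "/"
  tags = {
    color = "red"
  }
  tags_all = {
    color = "red"
  }
}
EOF
```

The filter is deliberately line-based rather than a real HCL parser, so it assumes the one-attribute-per-line layout that Terraformer generates.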

iam_user_policy_attachment.tf

    resource "aws_iam_user_policy_attachment" "tfer--terraform_user_AmazonS3ReadOnlyAccess" {
      policy_arn = "arn:aws:iam::aws:policy/AmazonS3ReadOnlyAccess"
      user       = "terraform_user"
    }

iam_user_group_membership.tf

    resource "aws_iam_user_group_membership" "tfer--terraform_user-002F-Admin" {
      groups = ["Admin"]
      user   = "terraform_user"
    }

iam_group.tf

    resource "aws_iam_group" "tfer--Admin" {
      name = "Admin"
      path = "/"
    }

iam_group_policy_attachment.tf

    resource "aws_iam_group_policy_attachment" "tfer--Admin_AmazonEC2FullAccess" {
      group      = "Admin"
      policy_arn = "arn:aws:iam::aws:policy/AmazonEC2FullAccess"
    }

Now those files had the resources we needed. It seems our terraform_user is in the Admin group, which has AmazonEC2FullAccess permissions, and has the AmazonS3ReadOnlyAccess policy attached directly to it. I'll add those lines to my code:

    resource "aws_iam_user_policy_attachment" "terraform_user_AmazonS3ReadOnlyAccess" {
      policy_arn = "arn:aws:iam::aws:policy/AmazonS3ReadOnlyAccess"
      user       = "terraform_user"
    }

    resource "aws_iam_user_group_membership" "terraform_user-Admin" {
      groups = ["Admin"]
      user   = "terraform_user"
    }

    resource "aws_iam_group" "Admin" {
      name = "Admin"
    }

    resource "aws_iam_group_policy_attachment" "Admin_AmazonEC2FullAccess" {
      group      = "Admin"
      policy_arn = "arn:aws:iam::aws:policy/AmazonEC2FullAccess"
    }

I changed the resource names to be more readable and deleted the path attribute from aws_iam_group, as it was the default and not needed.

    $ terraform plan

    Terraform will perform the following actions:

      # aws_iam_group.Admin will be created
      + resource "aws_iam_group" "Admin" {
          + arn       = (known after apply)
          + id        = (known after apply)
          + name      = "Admin"
          + path      = "/"
          + unique_id = (known after apply)
        }

      # aws_iam_group_policy_attachment.Admin_AmazonEC2FullAccess will be created
      + resource "aws_iam_group_policy_attachment" "Admin_AmazonEC2FullAccess" {
          + group      = "Admin"
          + id         = (known after apply)
          + policy_arn = "arn:aws:iam::aws:policy/AmazonEC2FullAccess"
        }

      # aws_iam_user_group_membership.terraform_user-Admin will be created
      + resource "aws_iam_user_group_membership" "terraform_user-Admin" {
          + groups = [
              + "Admin",
            ]
          + id     = (known after apply)
          + user   = "terraform_user"
        }

      # aws_iam_user_policy_attachment.terraform_user_AmazonS3ReadOnlyAccess will be created
      + resource "aws_iam_user_policy_attachment" "terraform_user_AmazonS3ReadOnlyAccess" {
          + id         = (known after apply)
          + policy_arn = "arn:aws:iam::aws:policy/AmazonS3ReadOnlyAccess"
          + user       = "terraform_user"
        }

    Plan: 4 to add, 0 to change, 0 to destroy.

Looks OK, let’s import those four resources. Writing those commands manually may be boring and error-prone, so I advise copying strings from the plan and terraformer outputs, as they often contain the required ARN, or creating whole scripts via some sed/grep/vim magic. For example, the plan line “# aws_iam_group.Admin will be created” contains the first half of our import command: grep all the “will be created” lines, replace # with “terraform import”, strip the trailing “will be created”, and then add the last part from the existing resource according to the documentation.
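The grep/sed idea described above can be sketched roughly as follows. The function name plan_to_import_stubs and the EXISTING_ID placeholder are hypothetical; the script only produces command stubs, and the real import ID for each resource type still has to be filled in from its “Import” documentation section. It assumes the plan text contains no colour codes (for example, when produced with terraform plan -no-color).

```shell
#!/bin/sh
# Hypothetical helper: reads `terraform plan` output on stdin and turns every
# "# <address> will be created" line into an import command stub.
# EXISTING_ID is a placeholder to be replaced by hand for each resource.
plan_to_import_stubs() {
  grep 'will be created' |
    sed 's/^[[:space:]]*# \(.*\) will be created$/terraform import \1 EXISTING_ID/'
}

# Example with a fragment of the plan output shown above.
plan_to_import_stubs <<'EOF'
  # aws_iam_group.Admin will be created
  + resource "aws_iam_group" "Admin" {
      + name = "Admin"
    }
  # aws_iam_user_group_membership.terraform_user-Admin will be created
EOF
```

Redirecting the result to a file gives a script skeleton that can be completed and reviewed before any import is run.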

    $ terraform import aws_iam_group.Admin Admin
    $ terraform import aws_iam_group_policy_attachment.Admin_AmazonEC2FullAccess Admin/arn:aws:iam::aws:policy/AmazonEC2FullAccess
    $ terraform import aws_iam_user_group_membership.terraform_user-Admin terraform_user/Admin
    $ terraform import aws_iam_user_policy_attachment.terraform_user_AmazonS3ReadOnlyAccess terraform_user/arn:aws:iam::aws:policy/AmazonS3ReadOnlyAccess

Everything has been added correctly, and after executing the plan we get:

    No changes. Your infrastructure matches the configuration.

Plus, the state list shows the managed resources as expected:

    aws_iam_group.Admin
    aws_iam_group_policy_attachment.Admin_AmazonEC2FullAccess
    aws_iam_user.my_user
    aws_iam_user_group_membership.terraform_user-Admin
    aws_iam_user_policy_attachment.terraform_user_AmazonS3ReadOnlyAccess

Tip: use the --compact parameter to group resource files within a single service into one resources.tf file.

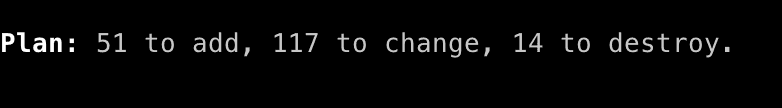

Plan your imports, do things slowly and methodically so you don't see monsters like this:
