LLM-enhanced information retrieval

Over the last few months, Large Language Models (LLMs) have gained a lot of traction. Companies and developers have been trying to outdo each other in inventing new use cases for LLMs and presenting impressive demos in which language models are backed by an additional stack of tools. As with all new things that emerge this dynamically, it has been really hard to bet on which of these applications will bring the most business value. Now, as the LLM hype starts to take a more tangible shape, we can observe that some of the most interesting use cases are being built around information retrieval in the broad sense.

Even after we narrow our area of interest down to information retrieval, there are still many aspects to consider. To name a few, we face the usual decisions about whether to use self-deployed open source models or commercial APIs, how to handle data privacy, how to cut deployment costs, and many more. But from a purely functional perspective, the most important question follows from the problem statement itself: we want to retrieve information, so how are we going to access data that is not directly encoded in the LLM’s parameters? Again, there are multiple answers, so let’s consider some examples.

Fine-tuning foundation models

LLMs are trained on vast amounts of diverse data. During training, they encode the information they are presented with in their parameters and can later use it to perform various tasks. These are the so-called “foundation models”. However, some practical use cases require incorporating domain-specific datasets or a company’s proprietary, internal knowledge, which a foundation model cannot possibly have. If the amount of this knowledge is small enough, we can supply it through prompt engineering, simply by adding the relevant data and context to the model prompt. When we have a lot of auxiliary data that we want to make usable for the model, on the other hand, we can consider fine-tuning the model’s parameters. This, however, can be a tricky and difficult engineering task. Adjusting all of the model’s parameters is very demanding in terms of resources (and, therefore, costs). There is also a family of techniques for parameter-efficient fine-tuning (PEFT), thanks to which we don’t need to tune all the parameters of the LLM. Instead, we can train only a small number of parameters by adding extra trainable layers (adapters), learning low-rank updates to the internal weight matrices (e.g. LoRA), or applying other tricks. But even then, fine-tuning the model is not simple, and the results (and costs) are hard to predict. Luckily, this is not the only solution.
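As a rough illustration of why PEFT methods train so few parameters, the NumPy sketch below implements a LoRA-style linear layer: the pretrained weight `W` stays frozen and only the low-rank factors `A` and `B` would be trained. The layer size, rank `r` and scaling factor `alpha` are arbitrary assumptions, and in practice one would use an existing library (for example Hugging Face PEFT) rather than hand-written matrices.

```python
import numpy as np

rng = np.random.default_rng(0)

d, k, r = 1024, 1024, 8  # output dim, input dim, LoRA rank (illustrative values)
alpha = 16               # LoRA scaling hyperparameter (illustrative value)

# Frozen pretrained weight: never updated during fine-tuning.
W = rng.standard_normal((d, k))

# Trainable low-rank factors. B starts at zero, so the adapted layer
# initially behaves exactly like the original one.
A = rng.standard_normal((r, k)) * 0.01
B = np.zeros((d, r))

def lora_forward(x: np.ndarray) -> np.ndarray:
    """Frozen projection plus the scaled low-rank update (B @ A) applied to x."""
    return x @ W.T + (alpha / r) * (x @ A.T @ B.T)

full_params = W.size           # parameters changed by full fine-tuning
lora_params = A.size + B.size  # parameters trained with LoRA
print(f"full: {full_params:,}  LoRA: {lora_params:,}  "
      f"ratio: {lora_params / full_params:.2%}")
```

With these example sizes, the trainable factors amount to about 1.6% of the full matrix; the relative saving grows as the layer gets wider compared with the rank.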

Retrieval Augmented Generation (RAG)

To combine the generative capabilities of Large Language Models with the ability to extract information from additional data sources that were not included in the training dataset, a technique called Retrieval Augmented Generation (RAG) was proposed. Lately, it has become arguably the hottest topic in practical LLM applications, with a lot of development effort coming both from open source projects (LlamaIndex, LangChain) and from leading AI companies (Microsoft, Nvidia and others). In a basic setup, RAG works as follows. We take a set of documents containing information unknown to the model (e.g. a company’s internal financial documentation) and split them into chunks of text. These chunks are fed to an embedding model, which produces numerical representations of its text input. These numerical representations, called embeddings, are then stored in a vector database. We apply the same embedding model to the input that the user provides to the LLM, for example a question. Thanks to the properties of embedding vectors and the search mechanisms implemented by vector databases, we can then find the encoded document chunks that are most similar to the encoded question and include the most similar fragments in the prompt to give the LLM additional context. Since the document chunks are indexed and we can also store metadata about the original documents, the LLM can not only answer the user’s questions based on internal knowledge from the documents, but also point precisely to the sources on which its answers are based.
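The basic RAG flow can be sketched in plain Python. This is a minimal sketch under strong simplifying assumptions: `embed` is a toy bag-of-words counter standing in for a real embedding model, `InMemoryVectorStore` is a hypothetical stand-in for a vector database, and the two sample documents are made up. The call that sends the final prompt to the LLM is omitted.

```python
import math
import re
from collections import Counter

def chunk_text(text: str, max_words: int = 40) -> list:
    """Split a document into consecutive chunks of at most `max_words` words."""
    words = text.split()
    return [" ".join(words[i:i + max_words]) for i in range(0, len(words), max_words)]

def embed(text: str) -> Counter:
    """Toy stand-in for an embedding model: a sparse bag-of-words count vector."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))

def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse vectors."""
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0

class InMemoryVectorStore:
    """Hypothetical stand-in for a vector database: (embedding, chunk, metadata)."""

    def __init__(self) -> None:
        self.entries = []

    def add(self, chunk: str, metadata: dict) -> None:
        self.entries.append((embed(chunk), chunk, metadata))

    def search(self, query: str, top_k: int = 2) -> list:
        """Return the top_k (chunk, metadata) pairs most similar to the query."""
        q = embed(query)
        ranked = sorted(self.entries, key=lambda e: cosine(q, e[0]), reverse=True)
        return [(chunk, meta) for _, chunk, meta in ranked[:top_k]]

def build_prompt(question: str, hits: list) -> str:
    """Add retrieved chunks, tagged with their source document, to the prompt."""
    context = "\n".join(f"[{meta['source']}] {chunk}" for chunk, meta in hits)
    return ("Answer using only the context below and cite the sources in brackets.\n"
            f"Context:\n{context}\n\nQuestion: {question}")

# Usage with two made-up documents.
store = InMemoryVectorStore()
documents = {
    "finance_q3.txt": "Q3 revenue grew by 12 percent compared to Q2.",
    "hr_policy.txt": "Employees are entitled to 25 days of paid vacation.",
}
for source, text in documents.items():
    for i, chunk in enumerate(chunk_text(text)):
        store.add(chunk, {"source": source, "chunk_id": i})

question = "How much did revenue grow in Q3?"
print(build_prompt(question, store.search(question, top_k=1)))
```

Keeping the source name in each chunk’s metadata is what allows the answer to point back to the documents it was based on.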

Retrieving information from structured sources

Although we have not said so explicitly, so far we have been talking about retrieving information from unstructured data sources. What if the additional information we want to provide to the LLM is already stored in a structured form, e.g. in a relational database? Do we have to dive into complex fine-tuning or build a vector store and RAG pipelines? It turns out that in many cases we don’t. Instead, we can take advantage of another capability of some Large Language Models: code generation. LLMs that were trained not only on natural language text but also on codebases written in various programming languages can be pretty good at writing working code, for example SQL queries. We exploited this in a proof of concept that we developed for the Swedish fintech company Juni.

Juni use case

Juni is a financial platform for businesses in digital commerce, offering the financial tools and intelligence to manage and optimize cash flow, with features such as multi-currency business accounts, interest-free capital for media buying or supplier payments, powerful integrations with banks and accounting tools, and automated invoicing. The platform aims to streamline payments, enhance financial visibility, and provide flexible financing options to help businesses make informed decisions and boost their bottom line.

Among other requests related to its data catalog development initiative, Juni asked GetInData about the possibility of implementing a chatbot that would provide a natural language interface to its internal data warehouse. In other words, the task was to create a proof of concept for a question answering tool that is integrated with the company’s communication platform (Slack) and that, given a plain-language user question, can access information stored in the data warehouse (BigQuery) and answer the question using this internal knowledge. This would give a convenient way of extracting business knowledge, even to people who are not fluent in SQL.

Assumptions and technologies

For the sake of the PoC, questions could be asked in three areas: definitions of specific business terms used at Juni, the structure and metadata of the entire data warehouse, and finally analytical and operational data. All the data was stored in several BigQuery tables within a separate dataset. Since data privacy was crucial in this case, one important assumption was that no data could ever leave the Google Cloud Platform (GCP) perimeter, except for user questions sent from Slack to GCP and answers sent back the other way. This meant that we were limited to GCP services and open source tools running within the GCP infrastructure.

Taking these constraints into account, we selected Google’s PaLM 2 as the Large Language Model, used as a service on the Vertex AI platform. We briefly compared it with the open source Llama 2, but after weighing multiple factors such as inference time, result quality and deployment costs, we decided to stick with the commercial option in this case. We also used the Slack Bolt SDK to enable communication via Slack. This was the customer’s suggestion; however, the solution can easily be modified to stay completely within GCP, for example by using streamlit-chat or another open source chat interface deployed on a cloud virtual machine.

From a high-level functional perspective, we assumed that the LLM would interpret the user’s question and, based on it and the provided database and table structure, generate a valid SQL query. The query would then be executed, and the retrieved table would be interpreted by the LLM again to produce a natural language response. To focus on the main functionality, information retrieval, we did not implement a conversation memory component in the PoC prototype, leaving it as a one-shot question-and-answer tool. However, this component could be added in the future to enable a more fluent, chatbot-like conversation experience.
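The two-step flow (question to SQL, then result table to answer) can be sketched as follows. This is a minimal illustration, not the PoC code: SQLite stands in for BigQuery so the example runs locally, `fake_llm` is a hypothetical stub returning canned text instead of calling PaLM 2, and the prompt templates and the `customers` table are made up.

```python
import sqlite3
from typing import Callable

# Hypothetical type for "call the LLM": takes a prompt, returns the completion text.
LLM = Callable[[str], str]

SQL_PROMPT = (
    "Translate the question into a single SQLite SELECT query.\n"
    "Schema:\n{schema}\n\nQuestion: {question}\nSQL:"
)
ANSWER_PROMPT = (
    "Question: {question}\n"
    "Query result (columns: {columns}):\n{rows}\n"
    "Answer the question in one sentence, based only on this result."
)

def answer_question(question: str, schema: str, llm: LLM, conn: sqlite3.Connection) -> str:
    # LLM call 1: natural language question + table structure -> SQL query.
    sql = llm(SQL_PROMPT.format(schema=schema, question=question)).strip()
    # The only database access: execute the generated query.
    cursor = conn.execute(sql)
    columns = [col[0] for col in cursor.description]
    rows = cursor.fetchall()
    # LLM call 2: retrieved table -> natural language answer.
    return llm(ANSWER_PROMPT.format(question=question, columns=", ".join(columns), rows=rows))

def fake_llm(prompt: str) -> str:
    """Hypothetical stub in place of a real model call; returns canned outputs."""
    if prompt.endswith("SQL:"):
        return "SELECT COUNT(*) AS n_customers FROM customers WHERE country_code = 'GB'"
    return "Stub answer. The model would summarize:\n" + prompt

# Usage with a tiny made-up table.
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE customers (customer_id INTEGER, country_code TEXT)")
conn.executemany("INSERT INTO customers VALUES (?, ?)", [(1, "GB"), (2, "SE"), (3, "GB")])
schema = "customers(customer_id INTEGER, country_code TEXT)"
print(answer_question("How many customers are from the United Kingdom?", schema, fake_llm, conn))
```

Passing the LLM in as a plain callable keeps the workflow independent of any particular model client, which also makes it easy to test with a stub like the one above.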

Implementation and results

To get some initial results as quickly as possible, in the first iteration we relied on pre-implemented components. Over the last year, the LangChain framework has gained a lot of popularity. The main reason is probably that it tries to unify and automate many standard workflows involving LLMs, and extracting information from SQL databases is no exception. LangChain provides specialized agents and toolkits that can be used out of the box with many different databases. They can generate and execute valid SQL queries and also automatically infer the database structure. This approach worked pretty well (though not perfectly) in our case, but as with all generic solutions, it comes at a price. Apart from being difficult to customize, the out-of-the-box workflow took far too long to execute because of unnecessary steps it took to gather enough information about the underlying BigQuery tables before an actual query could be formulated. The total response time from the user’s perspective hovered around 30 seconds, which was not acceptable.

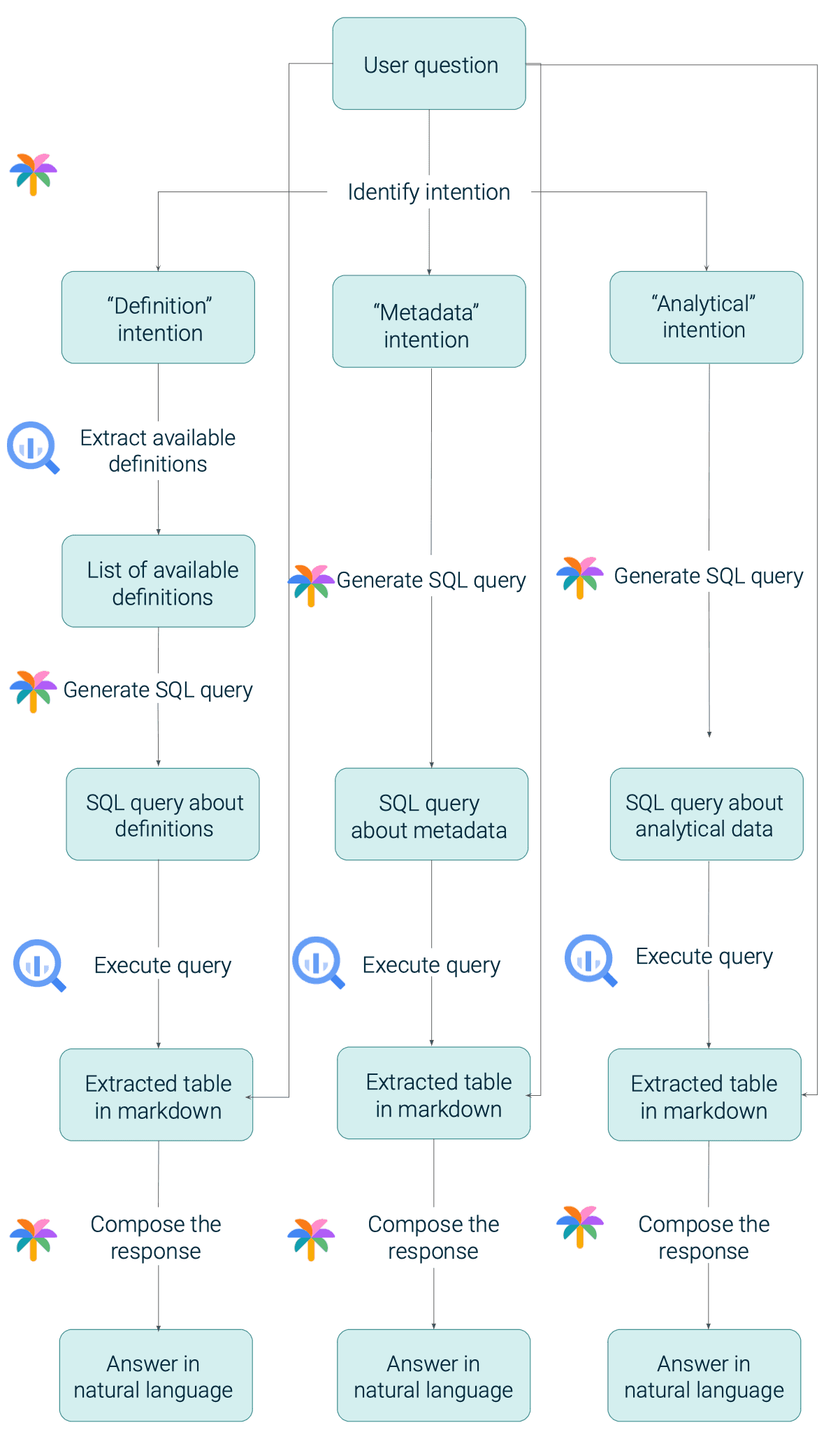

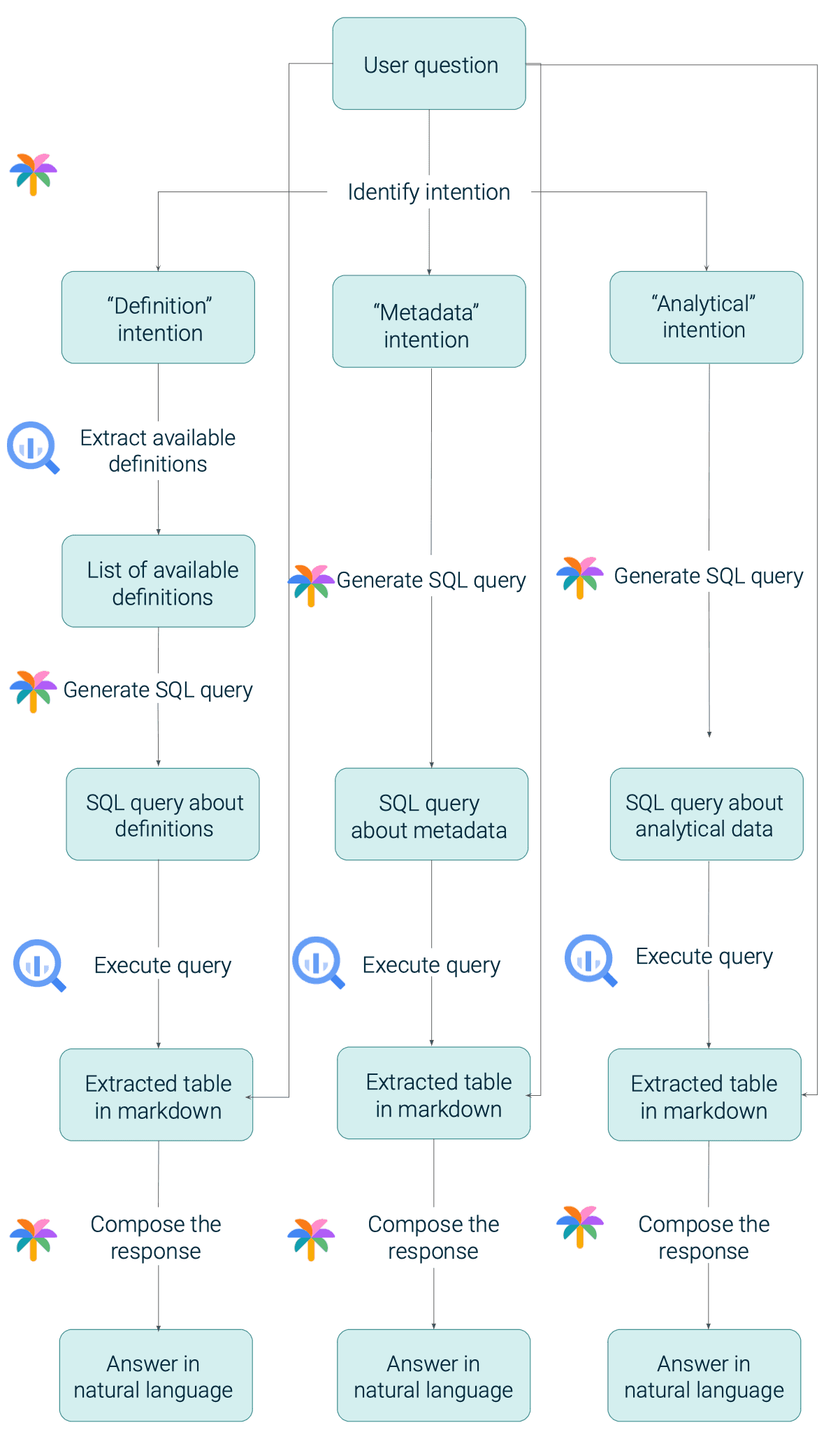

To speed up the process, we decided to drop LangChain and strip the workflow down to the bare minimum. Since we already knew what information we wanted to extract (definitions of business terms, warehouse metadata or analytical data) as well as the names of the tables and columns (along with example rows), we could provide this information directly in a system prompt instead of extracting it each time. This way, the LLM, which had all the necessary information upfront, needed to be called only twice: first to generate an SQL query and then to interpret the results. We also needed to access the database only once, to execute the generated query.

However, hardcoding the structure of the entire dataset would result in a huge, unmaintainable system prompt that would also quickly exceed the PaLM 2 context window. Therefore, knowing that a question could concern definitions, metadata or analytical data, we divided the information extraction workflow into three sub-workflows, one for each “user intention”. We had three separate system prompts (a definition prompt, a metadata prompt and an analytical prompt), each containing the structure and a sample of records from the related tables, along with intention-specific instructions and few-shot examples for the LLM. To route each question to the right sub-workflow, we added a step in which the LLM identifies the user’s intention. The definition sub-workflow also got one extra database query: each time, we needed to fetch the current list of available business terms to allow inexact matching of the user’s input against the contents of the definitions table. This customization reduced the total response time from half a minute to a few seconds.
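The intention routing and the inexact term matching can be sketched as below. This is a minimal sketch, not the PoC implementation: the `SYSTEM_PROMPTS` contents, the `INTENT_PROMPT` wording, the fallback to the analytical sub-workflow and the use of Python’s `difflib` for fuzzy matching are all illustrative assumptions, since the matching method used in the project is not specified.

```python
import difflib
from typing import Callable, Optional, Tuple

# Hypothetical type for "call the LLM": takes a prompt, returns the completion text.
LLM = Callable[[str], str]

# Hypothetical system prompts, one per user intention. In practice each would hold
# the structure and sample rows of the related tables, instructions and few-shot examples.
SYSTEM_PROMPTS = {
    "definition": "You answer questions about business term definitions. <tables, samples, examples>",
    "metadata": "You answer questions about data warehouse metadata. <tables, samples, examples>",
    "analytical": "You write BigQuery SQL over analytical tables. <tables, samples, examples>",
}

INTENT_PROMPT = (
    "Classify the question as exactly one of: definition, metadata, analytical.\n"
    "Question: {question}\nIntention:"
)

def classify_intention(question: str, llm: LLM) -> str:
    """Extra LLM call that decides which sub-workflow handles the question."""
    label = llm(INTENT_PROMPT.format(question=question)).strip().lower()
    # Assumption: fall back to the analytical sub-workflow on an unexpected label.
    return label if label in SYSTEM_PROMPTS else "analytical"

def build_sql_prompt(question: str, llm: LLM) -> Tuple[str, str]:
    """Route the question and prepend the system prompt of the chosen sub-workflow."""
    intention = classify_intention(question, llm)
    return intention, f"{SYSTEM_PROMPTS[intention]}\n\nQuestion: {question}"

def match_business_term(user_term: str, known_terms: list) -> Optional[str]:
    """Inexact match of the user's wording against the freshly fetched term list."""
    by_lower = {term.lower(): term for term in known_terms}
    matches = difflib.get_close_matches(user_term.lower(), list(by_lower), n=1, cutoff=0.6)
    return by_lower[matches[0]] if matches else None

# Usage with a stubbed classifier and a made-up term list.
intention, prompt = build_sql_prompt("define churn", lambda p: "definition")
print(intention, match_business_term("chrun", ["Churn", "Cashback", "Card spend"]))
```

Only the prompt of the selected sub-workflow is sent to the model, which keeps each request well below the context window limit.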

When tested on a list of typical user questions, our implementation could not only extract very basic information (“define churn” or “give me the list of all data owners”), but also generate and execute queries requiring table joins or subqueries, non-obvious conditional statements and aggregations. For example, the question “What is the average daily total card spend in the last quarter for customers from the United Kingdom?” resulted in the following query, which was valid and returned the correct answer:

```sql
SELECT AVG(total_card_spend_amount_day) AS avg_daily_total_card_spend
FROM juni-data-chatbot.chatbot_metadata.cashback_ledger_metrics
WHERE calendar_date >= DATE_SUB(CURRENT_DATE(), INTERVAL 3 MONTH)
  AND calendar_date < CURRENT_DATE()
  AND customer_id IN (
    SELECT customer_id
    FROM juni-data-chatbot.chatbot_metadata.customer_metrics
    WHERE country_code = 'GB'
  )
```

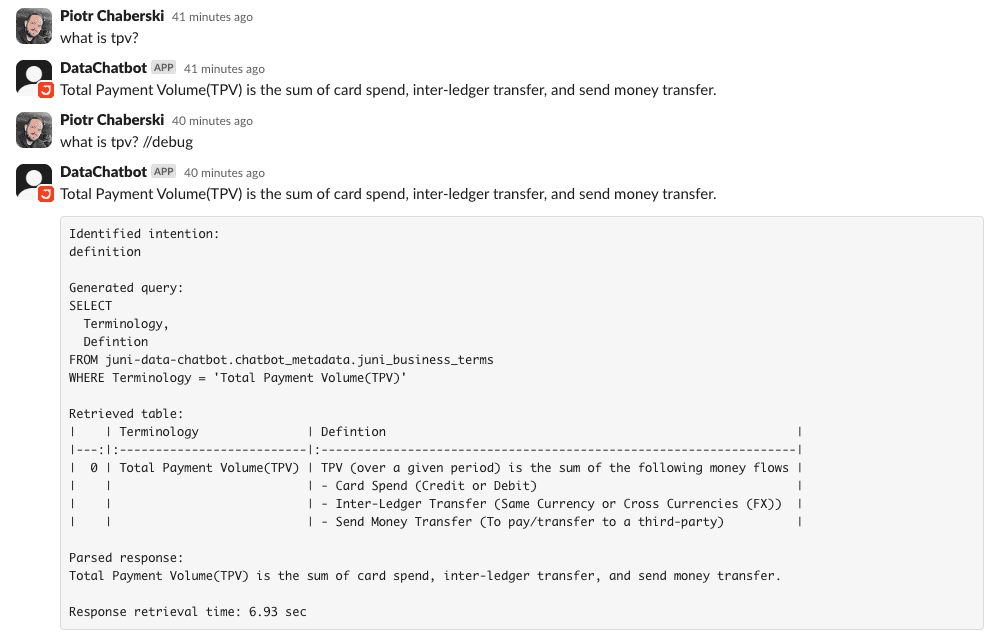

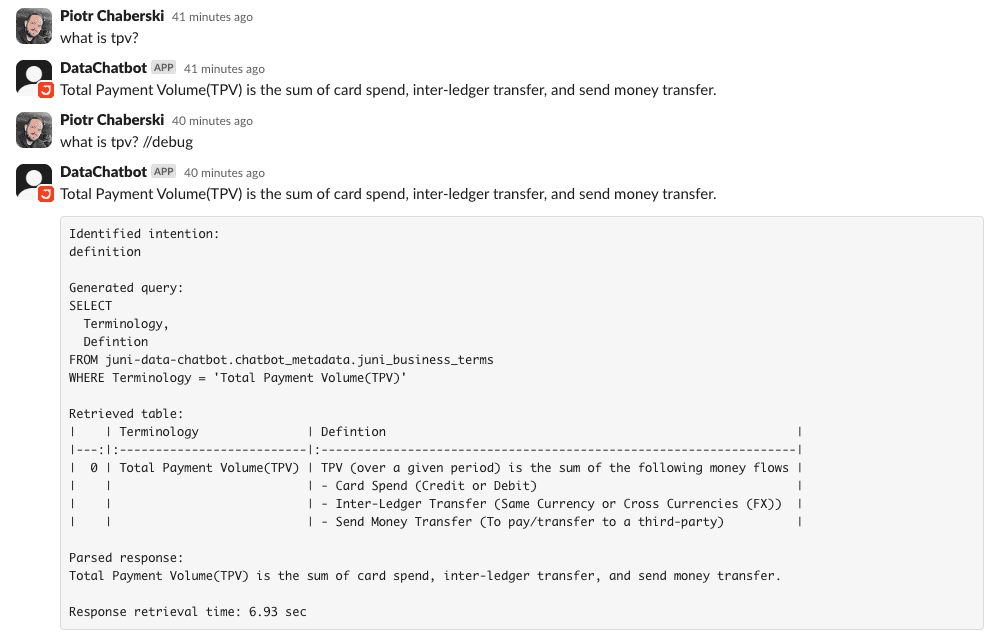

Of course, due to the probabilistic nature of LLMs, there is always a chance that something goes wrong in the generation process, even with careful prompt engineering and output validation. To let users investigate such situations easily without accessing the logs, we added a “debug mode”. If the question was followed by the “//debug” tag, all intermediate steps were printed in addition to the final answer, including the identified intention, the generated query and the returned response table.
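A minimal sketch of such a debug mode, assuming the tag is appended at the end of the message as described; `PipelineTrace`, `split_debug_tag` and `format_reply` are hypothetical names, the sample trace is made up, and in the real bot the reply would be posted back to Slack.

```python
from dataclasses import dataclass, field

DEBUG_TAG = "//debug"

@dataclass
class PipelineTrace:
    """Hypothetical container for the intermediate steps of one question."""
    intention: str = ""
    sql: str = ""
    result_table: list = field(default_factory=list)
    answer: str = ""

def split_debug_tag(message: str) -> tuple:
    """Strip a trailing debug tag and report whether debug mode was requested."""
    text = message.rstrip()
    if text.endswith(DEBUG_TAG):
        return text[: -len(DEBUG_TAG)].rstrip(), True
    return text, False

def format_reply(trace: PipelineTrace, debug: bool) -> str:
    """Return only the final answer, or the answer followed by all intermediate steps."""
    if not debug:
        return trace.answer
    rows = "\n".join(str(row) for row in trace.result_table)
    return (f"{trace.answer}\n\n"
            f"[debug] intention: {trace.intention}\n"
            f"[debug] query:\n{trace.sql}\n"
            f"[debug] result table:\n{rows}")

# Usage with made-up pipeline output.
question, debug = split_debug_tag("define churn //debug")
trace = PipelineTrace(intention="definition",
                      sql="SELECT term, definition FROM business_terms WHERE term = 'churn'",
                      result_table=[("churn", "<definition text>")],
                      answer="<answer generated by the LLM>")
print(format_reply(trace, debug))
```

Because the tag is stripped before the question reaches the pipeline, the LLM sees the same input with or without debug mode.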

Lessons learned

Our proof of concept showed that the generative capabilities of LLMs can be used effectively to build a simple database retrieval system with a natural language interface that hides the complexity of SQL queries under the hood. One thing to remember is that there is a tradeoff between genericity and efficiency. Generic agents, such as those implemented in LangChain, work well, but each time they have to investigate the database structure and perform many self-validation steps before they come up with a valid query. This takes time and hurts usability and the user experience. When we are dealing with a specific use case in which the database structure is known and users’ questions are predictable, it may be better to customize the workflow and give all the known information to the LLM upfront. The resulting solution is tailored to that one use case, but it greatly reduces code complexity, which makes maintenance easier, and it improves both effectiveness and the user experience. Another interesting conclusion, drawn from the feedback of the data platform team, is that it is probably more effective to focus on a platform than on a single LLM: models come and go while the platform stays, so in the long term a compatible ecosystem matters more than a killer model.