
It has been almost a month since the 9th edition of the Big Data Technology Warsaw Summit. We were thrilled to once again have the opportunity to organize the event on a larger scale! Over 500 participants from various companies joined us in Warsaw this year. The agenda included a plenary session, two panel discussions and four parallel sessions covering topics such as data engineering, MLOps, architecture, operations and cloud, real-time streaming, AI, ML and Data Science, as well as data strategy and ROI. Attendees could also join one of the 10 roundtable discussions, and everything was streamed live! On the second day, several hundred people tuned in online to watch the great presentations given by speakers from well-known companies.

We had three amazing days of substantive knowledge sharing at the Big Data Technology Warsaw Summit. Participants could choose between technical workshops, 'old school' onsite participation, or watching everything from the comfort of their own homes or offices. They had the opportunity to exchange experiences with 90 speakers from well-known brands such as Airbnb, Netflix, FreeNow, Xebia, King, Allegro, Microsoft, Google, Cloudera and more. It's worth mentioning that over 60% of the presentations came from the Call for Papers (CFP): we intentionally wanted the conference to be open to the whole community, so that anyone with a good story to share could present it to our audience. Additionally, seven presentations were given by personally invited experts from the big data, machine learning, artificial intelligence and data science world. We are grateful that, among the many invitations they receive, they chose to be part of the Big Data Technology Warsaw Summit.

Would you like to find out more about the speakers and presentations? As every year, below we present reviews of selected talks, prepared by our experts.

On the first day of the conference, I had the pleasure of attending 3 presentations from the “Data Engineering” track.

During the first one, “Data Engineering - the most eclectic part of Machine Learning process”, Maciej Durzewski from Ab Initio took us on the journey of data, from dataset acquisition all the way to using the data in Machine Learning modeling. The typical route, implemented by most projects, starts by applying low-level transformations to the raw data, followed by enrichment up to a canonical shape (called a 'feature store' in ML language). The features are then used to run ML algorithms and create models. Flows for inference (running the predictions) look similar, and the pipelines often reuse the same transformation flow.
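
To make the idea of a shared flow concrete, here is a minimal Python sketch, assuming a tiny tabular use case: the record fields, the `COUNTRY_RISK` lookup table and the threshold "model" are all hypothetical. The point is only that training and inference call the very same `feature_pipeline`, so features cannot drift apart between the two flows.

```python
# Hypothetical lookup table used for enrichment.
COUNTRY_RISK = {"PL": 0.1, "XX": 0.9}


def clean(record):
    """Low-level transformations on a raw record: trim strings, parse numbers."""
    return {
        "customer_id": record["customer_id"].strip(),
        "amount": float(record["amount"]),
        "country": record["country"].strip().upper(),
    }


def enrich(record, country_risk):
    """Enrichment up to the canonical shape: join with a lookup table."""
    return {**record, "country_risk": country_risk.get(record["country"], 0.5)}


def to_features(record):
    """Canonical feature vector, i.e. what a feature store row would hold."""
    return [record["amount"], record["country_risk"]]


def feature_pipeline(raw_records, country_risk):
    """One transformation flow, shared by training and inference."""
    return [to_features(enrich(clean(r), country_risk)) for r in raw_records]


def train(raw_records, labels, country_risk):
    features = feature_pipeline(raw_records, country_risk)
    # Placeholder "model": mean amount of the positive examples, used as a threshold.
    positives = [x[0] for x, y in zip(features, labels) if y == 1]
    return {"threshold": sum(positives) / len(positives)}


def predict(model, raw_records, country_risk):
    features = feature_pipeline(raw_records, country_risk)  # same flow as in train()
    return [int(x[0] >= model["threshold"]) for x in features]


train_raw = [
    {"customer_id": " a ", "amount": "10", "country": "pl"},
    {"customer_id": "b", "amount": "100", "country": "xx "},
]
model = train(train_raw, [0, 1], COUNTRY_RISK)
print(predict(model, [{"customer_id": "c", "amount": "150", "country": "PL"}], COUNTRY_RISK))
```
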

While this sounds simple, Maciej described some cases that need the touch of a magic wand before the data can be fed to ML. While 95% of the data he worked on is easily vectorizable (like relational databases, CSV files or even images), the remaining cases require special code to interpret the data: random image sets, non-homogeneous databases, blobs or texts. He also described the most complicated datasets he faced, which combine different types of data, like image + text + annotations + external feeds.

Two projects were described. The first focused on building a Large Language Model for the Polish language, with steps including source selection and extraction and splitting the data into lexemes and words, to finally build the model. The challenge Maciej faced there was unifying the flow and testing its parts separately to enable easy change management. Properly prepared Makefiles seemed to be enough to simplify this. The second example was a model built on medical data to classify patients. This wasn’t trivial even at the data acquisition stage, because multiple files needed to be merged and the resulting data was very sparse. This created a need for special libraries like libsvm, which were problematic to deploy to production.
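
Very sparse, merged data is one reason the libsvm text format comes up: each row stores only its non-zero entries as `<label> <index>:<value>` pairs with ascending, one-based indices. The sketch below, with hypothetical patient files and column positions, merges two sources and serialises the result; it illustrates the format, not the pipeline used in the talk.

```python
def merge_sources(*sources):
    """Merge per-patient sparse features coming from several files.

    Each source maps patient_id -> {column_index: value}; later sources
    overwrite earlier ones if they share a column.
    """
    merged = {}
    for source in sources:
        for patient_id, features in source.items():
            merged.setdefault(patient_id, {}).update(features)
    return merged


def to_libsvm_line(label, features):
    """Serialise one sparse row as '<label> <index>:<value> ...'.

    Column indices are zero-based here and shifted to the one-based,
    ascending indices libsvm expects; zero values are omitted.
    """
    pairs = [f"{i + 1}:{v:g}" for i, v in sorted(features.items()) if v != 0]
    return " ".join([f"{label:g}"] + pairs)


# Hypothetical inputs: two files with different, mostly empty columns.
lab_results = {"p1": {0: 5.2}, "p2": {3: 1.0}}
diagnoses = {"p1": {7: 1}, "p3": {2: 1}}
labels = {"p1": 1, "p2": 0, "p3": 0}

rows = merge_sources(lab_results, diagnoses)
for patient_id in sorted(rows):
    print(to_libsvm_line(labels[patient_id], rows[patient_id]))
# 1 1:5.2 8:1
# 0 4:1
# 0 3:1
```
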

Both examples taught important lessons: to make the promotion of a proof of concept to production as effortless as possible, one needs to take into account the readability of the transformation code, encapsulate the different steps and focus on the repeatability of execution. In the end, Maciej revealed the two main tricks he uses in ML projects: keeping column-level lineage information for every feature in the feature store, and keeping transformations as simple and readable as possible.
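
Column-level lineage can start very small. A minimal sketch, assuming an in-memory registry (`LineageRegistry` and all feature and column names are hypothetical, not part of any lineage tool): each feature records the source columns it is derived from, which answers both "where does this feature come from?" and "which features break if this column changes?".

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class FeatureLineage:
    feature: str
    source_columns: tuple  # fully qualified "table.column" names
    transformation: str    # human-readable description of the logic


class LineageRegistry:
    """Hypothetical in-memory registry of column-level lineage per feature."""

    def __init__(self):
        self._features = {}

    def register(self, feature, source_columns, transformation):
        self._features[feature] = FeatureLineage(feature, tuple(source_columns), transformation)

    def upstream(self, feature):
        """Source columns a feature is derived from."""
        return list(self._features[feature].source_columns)

    def impacted_by(self, column):
        """Features to re-check when a source column changes or breaks."""
        return sorted(
            lineage.feature
            for lineage in self._features.values()
            if column in lineage.source_columns
        )


registry = LineageRegistry()
registry.register("avg_basket_value", ["orders.amount", "orders.customer_id"], "mean(amount) per customer")
registry.register("orders_per_month", ["orders.customer_id", "orders.created_at"], "count per customer and month")
registry.register("days_since_signup", ["customers.signup_date"], "today - signup_date")

print(registry.impacted_by("orders.customer_id"))  # ['avg_basket_value', 'orders_per_month']
```
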

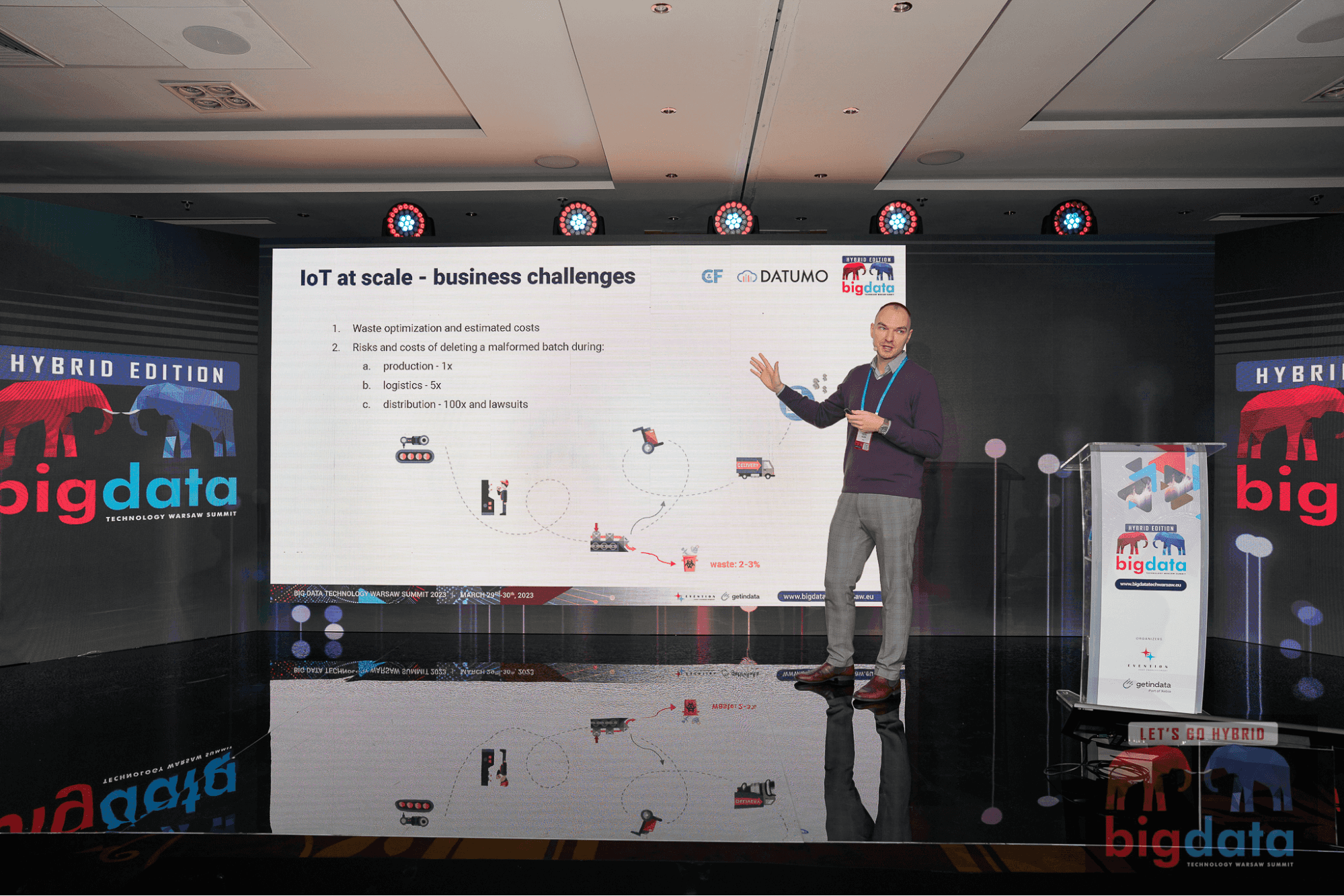

The second presentation I had the pleasure of attending was “Smart manufacturing in life science. Real-life use case of cloud-based project in IoT”, given by Piotr Guzik (CEO of Datumo). Piotr revealed the details of an advanced IoT implementation in the cloud, supported by Azure IoT Hub. The project goal was to collect data from 30 factories, each handling 15,000 devices, and finally build an ML model that recommends the parameters to be set on PLC drivers. Scale wasn’t the only challenge: the project runs in a highly regulated environment with two separate network layers at each site, an internal one outside the team's control, and an external one where they could install things, but with quite limited resources. Moreover, the project's success criteria included TCO reduction (with a focus on using the cloud), high performance and scalability, reliability of the data (sensors produce each reading only once, so none of it may be lost) and operational excellence through continuous monitoring.

Piotr shared all the implementation details with us. The team decided to use Azure IoT Edge to collect the data and even to run some simple local transformations (before the data is sent to the cloud) on limited computational resources. This solution uses tiny Docker containers, so it’s easy to maintain and replicate. This layer handles the different protocols that devices implement (gRPC, MQTT or even SFTP) and buffers data in case of internet connectivity issues. In the cloud, Azure IoT Hub provides central, scalable management of the devices. However, the team decided not to publish events directly to IoT Hub; instead, a Kafka cluster in the cloud takes care of collecting data from multiple sites. Once data reaches the cloud, it may be routed to three sinks.
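
The buffering behaviour mentioned above is essentially a store-and-forward queue. The following is a simplified, in-memory illustration under stated assumptions: `send` is a hypothetical callable that raises `ConnectionError` when the uplink is down, and nothing here reflects the actual Azure IoT Edge API (a real edge module would also persist the buffer to disk). A reading leaves the queue only after it has been sent successfully, which matches the requirement that data must never be lost.

```python
from collections import deque


class StoreAndForwardBuffer:
    """In-memory store-and-forward queue for readings produced at the edge."""

    def __init__(self, send, max_size=10_000):
        self._send = send          # hypothetical uplink callable; raises ConnectionError when offline
        self._queue = deque()
        self._max_size = max_size

    def publish(self, reading):
        if len(self._queue) >= self._max_size:
            # Fail loudly instead of silently dropping data.
            raise OverflowError("local buffer is full")
        self._queue.append(reading)
        return self.flush()

    def flush(self):
        """Send queued readings in order; stop at the first connectivity error."""
        while self._queue:
            try:
                self._send(self._queue[0])
            except ConnectionError:
                return False        # keep the reading, retry on the next flush
            self._queue.popleft()   # remove only after a successful send
        return True

    def __len__(self):
        return len(self._queue)


class FlakyLink:
    """Test double for an uplink that can go offline."""

    def __init__(self):
        self.online = False
        self.delivered = []

    def send(self, reading):
        if not self.online:
            raise ConnectionError("uplink down")
        self.delivered.append(reading)


link = FlakyLink()
buffer = StoreAndForwardBuffer(link.send)
buffer.publish({"device": "plc-1", "temp": 71.2})   # offline: kept locally
buffer.publish({"device": "plc-1", "temp": 71.9})   # offline: kept locally
link.online = True
buffer.flush()                                     # connectivity restored
print(len(buffer), len(link.delivered))            # 0 2
```
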

“Hot data” is handled in a streaming fashion: it is expected to have sub-minute latency and goes directly to Kafka with no batching. Events then land in Azure Data Explorer, which is used as a time series database powering Power BI dashboards. This flow is mostly used for monitoring scenarios where an immediate response is key. “Warm data” uses micro-batch processing with under-hour latency; this applies to most solutions where joins with metadata or historical data are necessary, and it is implemented using Databricks services. “Cold data” uses pure batch processing, with data stored in long-term data stores, and powers all analytical use cases. It also covers ML model training, before the model is published to the factory.
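
The three paths differ mainly in the latency a consumer can tolerate. As a rough sketch, assuming each use case declares a required latency (the use-case names are hypothetical; the thresholds follow the under-minute and under-hour figures above), the routing decision could look like this:

```python
from datetime import timedelta

HOT_MAX = timedelta(minutes=1)   # streaming: Kafka -> Azure Data Explorer
WARM_MAX = timedelta(hours=1)    # micro-batch: joins with metadata/history


def choose_tier(required_latency):
    """Map a use case's tolerated latency to the hot, warm or cold path."""
    if required_latency < HOT_MAX:
        return "hot"
    if required_latency < WARM_MAX:
        return "warm"
    return "cold"                # batch: analytics and model training


def route(use_cases):
    sinks = {"hot": [], "warm": [], "cold": []}
    for use_case in use_cases:
        sinks[choose_tier(use_case["required_latency"])].append(use_case["name"])
    return sinks


use_cases = [
    {"name": "vibration-alert", "required_latency": timedelta(seconds=5)},
    {"name": "batch-quality-report", "required_latency": timedelta(minutes=30)},
    {"name": "model-training-set", "required_latency": timedelta(days=1)},
]
print(route(use_cases))
```

In practice the same data can feed several paths at once; the sketch only shows how latency requirements map to tiers.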

Piotr also shared the lessons learned during the project implementation. IoT use cases are challenging and often require a different selection of technologies for every layer, which increases the complexity of the setup. Resilience is key, as the data can never be lost. Costs are crucial too: it’s hard to convince clients to plug into the IoT world when entry costs are high, so a pay-as-you-go model is the most client-friendly option. These lessons can play an important role in any Internet-of-Things business.

The next one was “What data engineering approach and culture fits your organisation better?”, given by Pierre de Leusse, Engineering Director at PepsiCo. Pierre shared his perspective on the different ways a data processing ecosystem can be implemented in different organizations, based on the correlation of two factors: technical culture (the presence or lack of skilled engineers) and the complexity of data pipelines.

The first example Pierre shared describes an organization with very basic data engineering needs. In this type of company, the sales team usually maintains all the data and is generally fine with keeping it in Excel. For more advanced pipelines, Airbyte or Fivetran can be used (or even Microsoft Power Apps), as these do not require much technical expertise. This setup works well for anything simple, but special requests can make it a bit messy because of the tools’ limitations. Additionally, it can be quite slow on bigger datasets.

Another approach relies on an all-in-one specialist tool like Dataiku or Alteryx. These can power all data engineering processes: acquisition, transformations, quality validation and even visualizations. Importantly, they support CI/CD for the pipelines and do not require software engineers to maintain them. However, this setup may be expensive, and it is not optimized for every type of process.

The third example covers traditional ETL tools like Control-M or Oracle. These tech stacks are proven to handle large amounts of data well and are extremely reliable and mature. Unfortunately, they usually lack good CI/CD and unit-testing capabilities. Also, experts in these stacks (Oracle specialists are a common example), thanks to their deep understanding of the technology, tend to promote one ecosystem as the remedy for every problem. Pierre noted that this behavior is a clear example of the famous 'when you have a hammer, everything looks like a nail'.

A more advanced approach is to build a full-blown application based on widely used tools like Airflow, dbt, Python and Snowflake. It requires strong engineering teams, and often software developers to customize these tools, but it helps to avoid vendor lock-in.

Finally, the fifth type of organization may want to use cloud services. These usually come with a pay-as-you-go model and can handle virtually any scale. However, they are not easy to customize for special scenarios, and costs can grow quickly unless good practices are followed.

All five approaches presented by Pierre match different types of use cases well, and they clearly show that there is no golden rule for implementing data engineering. The choice of ecosystem should always be driven by the technological maturity of the organization and the complexity of its pipelines.

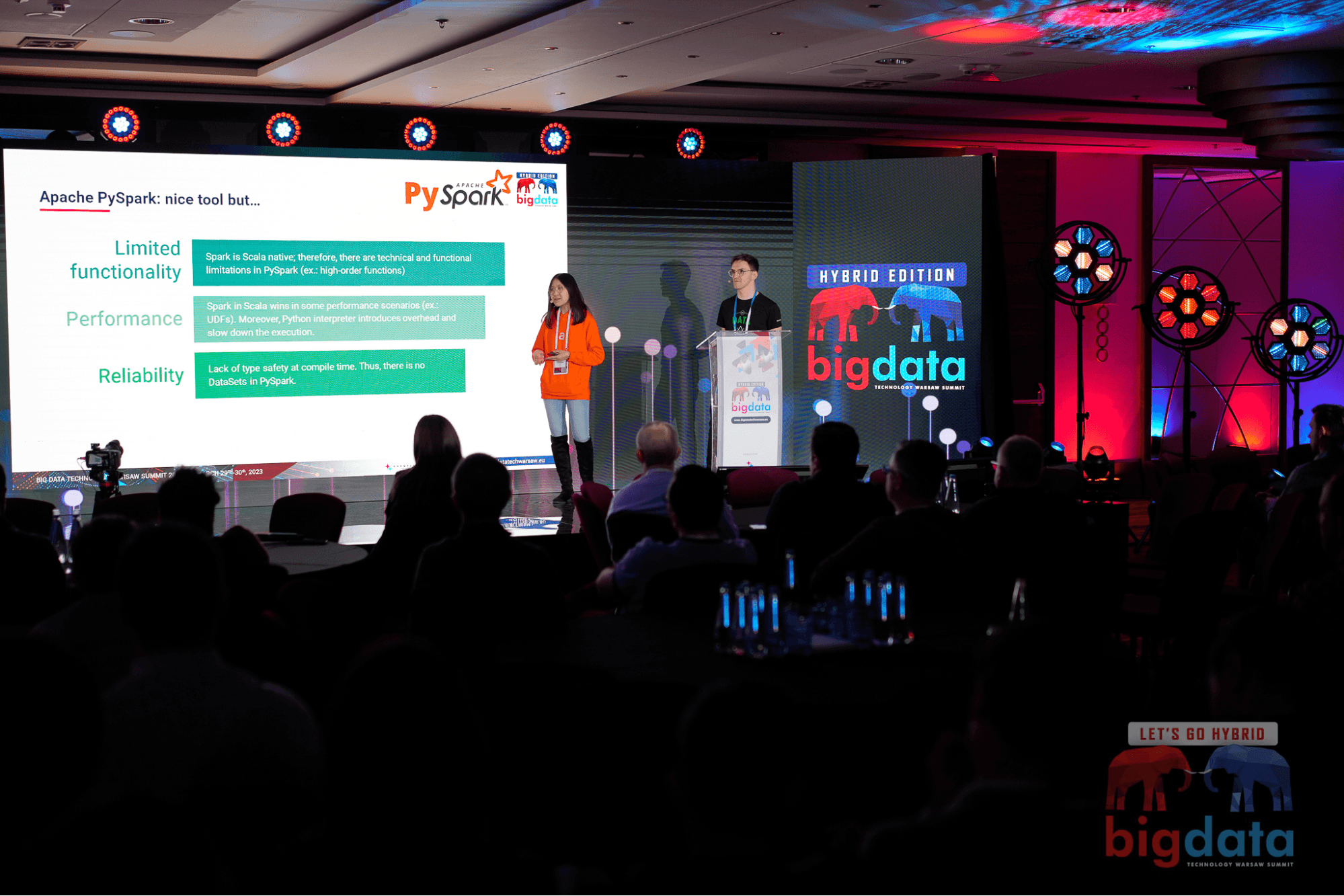

Another presentation from the Data Engineering track was “Modern Big Data world challenge: how to not get lost among multiple data processing technologies?”. Jakub Demianowski and Yevgeniya Li (both working in data engineering at Allegro) shared the methods they use to select a framework for a data project. Their criteria include the skills and expertise of the team that is going to do the implementation, but also the expected delivery time, complexity and technical limitations.

One of the most common solutions they use is an application written in Scala, powered by the Apache Spark framework. This type of application gives them full control over types with the Dataset API, so they see errors at compile time rather than at runtime. Scala makes it easy to separate concepts and keep code modular and reusable (using generics). Applications written this way are easy to cover with unit tests, some of which can run without even starting a Spark context. This approach is a good choice for JVM developers, but it also requires the courage to tune Spark and troubleshoot issues.
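
The testing benefit does not depend on Spark itself: if the business logic lives in pure functions over typed records, it can be unit-tested without any cluster. The talk concerned Scala, where such a function would typically be mapped over a typed `Dataset`; the sketch below uses Python dataclasses only to keep all examples here in one language, so it shows the testing pattern but not Scala's compile-time guarantees. `Order`, `BilledOrder` and `apply_coupon` are hypothetical.

```python
from dataclasses import dataclass
from typing import Dict, Optional


@dataclass(frozen=True)
class Order:
    order_id: str
    amount_cents: int
    coupon: Optional[str]


@dataclass(frozen=True)
class BilledOrder:
    order_id: str
    amount_cents: int


def apply_coupon(order: Order, discounts: Dict[str, float]) -> BilledOrder:
    """Pure business logic: no Spark session needed to call or test it."""
    rate = discounts.get(order.coupon, 0.0) if order.coupon else 0.0
    return BilledOrder(order.order_id, round(order.amount_cents * (1 - rate)))


# A plain unit test, runnable without starting any cluster or Spark context.
def test_apply_coupon():
    discounts = {"SPRING10": 0.1}
    assert apply_coupon(Order("o1", 10_000, "SPRING10"), discounts) == BilledOrder("o1", 9_000)
    assert apply_coupon(Order("o2", 500, None), discounts) == BilledOrder("o2", 500)


test_apply_coupon()
```
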

A somewhat simpler solution is to write the logic in PySpark. It still uses Spark under the hood, but it’s much easier to run experiments (for example in Jupyter notebooks) and visualize data. PySpark offers testing capabilities similar to Scala Spark applications, and it seems to integrate much better with ML/AI frameworks and Pythonic preprocessing libraries. Unfortunately, it’s not as rosy as it seems: code written this way lacks type safety and in some cases can be less performant. Also, not all functionalities have been ported to PySpark yet (higher-order functions, for example).

Another framework they tend to use is Apache Beam. It’s not a processing engine, but a unified model for running applications on different engines like Apache Flink, Apache Spark or Google Cloud Dataflow. Beam provides APIs in multiple languages and brings the concept of a PCollection, which can also hold Python objects. It’s quite easy to test a Beam application with the Direct Runner, and there is usually no need to tune the applications: they just work. Jakub appreciates the autoscaling capabilities, the descriptive user interface and the ability to write streaming apps easily.

However, not all of the frameworks they use are based on pure application programming. For simple cases they tend to use BigQuery: it’s easy to implement a pipeline step in SQL, and it runs pretty fast with no maintenance, as the service is fully serverless. Apart from the usual data processing capabilities like aggregations, joins and window functions, it also offers some ML features for quick modeling. Unfortunately, the more complicated the SQL query, the harder it is to write and understand. Combined with the inability to write unit tests, this makes BigQuery suitable mainly for basic transformations.

The last approach, described by Yevgeniya, is based on microservices, usually used to process streams of data from Kafka with the standard Consumer API or the Kafka Streams framework. This solution scales horizontally very well in containers managed by Kubernetes, and custom code makes it quite easy to keep up with growing business requirements. Dedicated applications can be written in many languages, which gives the team that writes and maintains them freedom over implementation details. There are some drawbacks, though: orchestrating multiple streaming apps is challenging, and classic processing functionalities like windowing are sometimes missing.
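
Windowing is a good example of what a plain Consumer API microservice has to implement by hand. A minimal sketch of a tumbling (fixed, non-overlapping) window count, assuming events have already been decoded into `(timestamp_seconds, key)` pairs; the function name and input shape are hypothetical:

```python
from collections import defaultdict


def tumbling_window_counts(events, window_seconds):
    """Count events per key in fixed, non-overlapping time windows.

    `events` is an iterable of (timestamp_seconds, key) pairs, e.g. decoded
    Kafka records. Each event belongs to the window starting at its timestamp
    rounded down to a multiple of `window_seconds`.
    """
    counts = defaultdict(int)
    for ts, key in events:
        window_start = ts - (ts % window_seconds)
        counts[(window_start, key)] += 1
    return dict(counts)


events = [(0, "a"), (59, "a"), (60, "a"), (61, "b")]
print(tumbling_window_counts(events, window_seconds=60))
# {(0, 'a'): 2, (60, 'a'): 1, (60, 'b'): 1}
```

What the sketch leaves out (late events, deciding when a window is closed, persisting state across restarts) is exactly what frameworks such as Kafka Streams or Flink handle for you.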

All the pros and cons of the frameworks presented by Jakub and Yevgeniya give a nice perspective on the diversity of use cases they work with at Allegro. Having so many options available, supported by the underlying infrastructure, is a great enabler for anybody interested in implementing data applications.

I had the pleasure of attending presentations from the “Artificial Intelligence, Machine Learning, and Data Science” track. The first one was “How to start with Azure OpenAI?” by Pamela Krzypkowska, AI Cloud Solution Architect at Microsoft. Pamela talked about one of the most interesting services available in Microsoft's cloud: Azure OpenAI. This service should be of particular interest to anyone considering using Large Language Models (LLMs) in their applications. Azure OpenAI provides REST API access to OpenAI’s newest and most powerful language models, such as GPT-3, the Codex and Davinci series, and even the newest GPT-4. This was made possible by Microsoft's recent, huge investment in OpenAI, which allowed the two companies to work more closely together. This is good news for Azure users, who can try out the latest developments in language models while benefiting from built-in responsible AI features and enterprise-grade Azure security. In addition to using ready-made models trained by OpenAI on massive data, you can also customize them with labeled data for your specific scenario. What is more, you can fine-tune your model's hyperparameters to increase the accuracy of its outputs, or use the few-shot learning capability, providing the API with examples to achieve more relevant results.
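
Few-shot learning here simply means placing a handful of worked examples in the request before the real question. A minimal sketch, assuming the chat-style `messages` format (system, user and assistant roles) used by OpenAI chat models; the ticket-classification task and texts are hypothetical, and the HTTP call to an Azure OpenAI deployment (endpoint, deployment name, API version, key) is deliberately left out:

```python
import json


def build_few_shot_messages(instruction, examples, query):
    """Build a chat-style message list with worked examples before the real query."""
    messages = [{"role": "system", "content": instruction}]
    for user_text, assistant_text in examples:
        messages.append({"role": "user", "content": user_text})
        messages.append({"role": "assistant", "content": assistant_text})
    messages.append({"role": "user", "content": query})
    return messages


examples = [
    ("The invoice was generated twice.", "billing"),
    ("I cannot log in after the update.", "access"),
]
messages = build_few_shot_messages(
    "Classify each support ticket as 'billing', 'access' or 'other'. Answer with one word.",
    examples,
    "My card was charged but the order is missing.",
)
# This list would go into the JSON body of a chat completions request.
print(json.dumps({"messages": messages}, indent=2))
```
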

The second presentation was “Quality Over Quantity - Active Learning Behind the Scenes” by Sivan Biham, Machine Learning Lead at Healthy.io. Data-Centric AI (DCAI) is one of the most popular recent trends in Machine Learning. Experts involved in this initiative argue that the best way to improve performance in practical ML applications is to improve the data we use, rather than focusing only on the models themselves. This presentation dealt with a similar theme: Sivan outlined how the proper use of Active Learning can benefit the quality of training data. Active Learning is a way of collecting labeled data wisely, achieving equivalent or better performance with fewer data samples and thus saving time and money. It is a special case of machine learning in which an algorithm can interactively query a user (usually a subject-matter expert) to label new data points, which usually helps the model learn more efficiently. During the presentation, Sivan outlined several ways to select data samples for labeling so that the process is as beneficial as possible for training. One idea was to select the examples that posed the greatest problem for the model being trained. This methodology turned out to be better than random sampling, and by carefully validating the results it was possible to see how much was gained by incorporating these procedures into the model training pipeline.
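
Selecting the samples the model struggles with most is commonly implemented as uncertainty sampling. The sketch below shows one generic variant, least-confidence sampling, on a hypothetical one-dimensional model; it illustrates the idea and is not necessarily the strategy used at Healthy.io.

```python
import math


def predict_proba(x):
    """Hypothetical binary model on a 1-D feature: logistic curve around 0."""
    p = 1 / (1 + math.exp(-x))
    return [1 - p, p]


def least_confident(pool, predict_proba, budget):
    """Return the `budget` pool items whose top class probability is lowest."""
    scored = sorted((max(predict_proba(x)), i) for i, x in enumerate(pool))
    return [pool[i] for _, i in scored[:budget]]


unlabeled_pool = [-3.0, -0.2, 0.1, 2.5, 4.0]
to_label = least_confident(unlabeled_pool, predict_proba, budget=2)
print(to_label)  # [0.1, -0.2]: the points closest to the decision boundary
```

In a full loop, the selected samples go to the expert for labeling, the model is retrained, and selection repeats until the labeling budget is spent or validation metrics stop improving.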

The last presentation I would like to mention is “Lean Recommendation Systems - The Road from “Hacky” PoCs to “Fancy” NNs”, presented by Stefan Vujović, Senior Data Scientist at Free Now. Building a recommendation system, especially for a large company, can be an extremely complicated and time-consuming task. Stefan explained how to approach the problem in a way that maximizes the chances of success in large machine learning projects. Key aspects include breaking the project down into smaller, more manageable units, structuring it properly and managing risk well. One technique that helps with these tasks is working according to the Build-Measure-Learn loop. This methodology is based on building the solution iteratively and cyclically verifying its performance, which maximizes our understanding of the problem and helps us solve it. The Mercedes Decomposition Blueprint can in turn be helpful for building the solution iteratively. It is another technique for developing a project in a structured way: by clearly prioritizing the tasks ahead, we can minimize redundant work and evolve the solution from the simplest heuristic rules to advanced systems.
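
The "simplest heuristic rules" end of that spectrum can be very simple indeed. As a hypothetical first Build-Measure-Learn iteration, the sketch below builds a most-popular-items baseline and measures it with hit rate on held-out interactions; the data and names are illustrative, not Free Now's. Any fancier model then has to beat this number to justify its cost.

```python
from collections import Counter


def most_popular_recommender(interactions, k):
    """Heuristic baseline: recommend the k most frequent items to every user."""
    counts = Counter(item for _, item in interactions)
    top_items = [item for item, _ in counts.most_common(k)]
    return lambda user: top_items


def hit_rate(recommend, holdout):
    """Measure: share of held-out (user, item) pairs found in the user's recommendations."""
    hits = sum(1 for user, item in holdout if item in recommend(user))
    return hits / len(holdout)


# Hypothetical (user, destination) interactions.
train = [("u1", "airport"), ("u2", "airport"), ("u3", "station"), ("u1", "station"), ("u2", "mall")]
holdout = [("u1", "mall"), ("u2", "airport"), ("u3", "station")]

baseline = most_popular_recommender(train, k=2)
print(f"hit rate @2: {hit_rate(baseline, holdout):.2f}")  # hit rate @2: 0.67
```
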

I had the pleasure of participating in the Big Data Technology Warsaw Summit, the biggest data conference in Poland and a must-attend event for specialists, enthusiasts and people who want to start in the (not so big) IT world. On the on-site day of the event, right after the conference opening, there was a very interesting panel about Big Data processing with cutting-edge technologies and a vision of the near future. The participants were representatives of Snowflake (Przemysław Greg Kantyka), Google Cloud (Łukasz Olejniczak) and Cloudera (Pierre Villard), and the debate was moderated by Marek Wiewiórka. Each panelist had a slightly different vision of how to work with Big Data, but there was a consensus that stream processing combined with machine learning is the current trend. Companies expect results immediately and want to be able to react as fast as possible.

The second buzzword during the debate was AI, with the famous ChatGPT. The IT world is continuously evolving, and specialists have to adapt to new technologies and trends. ChatGPT is the next milestone in that evolution. The panelists didn’t predict that it would replace engineers, but rather that it would simplify and speed up their work. A good example is Structured Query Language (SQL), which was created about half a century ago and is still used in (almost) the same form today. AI may change this and open IT up to non-technical people in the near future: it will be possible to query data just by asking a chatbot for it. In the future, AI may hide system complexity and become the next-generation API connecting humans and machines.

After the plenary sessions, I chose to attend the “Real-time Streaming” track. I’m personally a big fan of Flink and streaming solutions, so choosing a parallel session was easy. First of all, I would like to mention Pierre Villard from Cloudera and his talk ‘Control your data distribution like a pro with cloud-native NiFi architecture’. NiFi is a platform where you can build complex pipelines simply by dragging and dropping blocks. It's a very flexible platform, but Pierre recommends using it mainly for data distribution, format unification across multiple systems and simple ETL.

Let’s imagine that we have several systems, each generating logs in a different format, and based on them we need to build a fraud detection system. It sounds scary, but not for Pierre. His solution is composed of two parts. The first one collects and unifies the logs with NiFi. The second part, fraud detection, is a job for Flink. With stateful stream processing, it’s easy to track user activities and detect anomalies such as activity from IPs located in different parts of the world.
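
A toy version of the two-part design, assuming two hypothetical log formats and an in-process dictionary standing in for Flink's keyed state: `normalise` plays the NiFi role of unifying formats, and `CountryHopDetector` flags a user seen in two countries within an hour. It is a sketch of the idea only; it uses no NiFi or Flink API and ignores out-of-order events.

```python
import json


def normalise(source, raw):
    """Map two hypothetical log formats onto one schema (the NiFi step, in miniature)."""
    if source == "web":  # JSON lines: {"userId": ..., "ts": epoch_seconds, "geo": ...}
        record = json.loads(raw)
        return {"user": record["userId"], "ts": record["ts"], "country": record["geo"]}
    if source == "mobile":  # pipe-delimited: user|epoch_seconds|country
        user, ts, country = raw.split("|")
        return {"user": user, "ts": int(ts), "country": country}
    raise ValueError(f"unknown source: {source}")


class CountryHopDetector:
    """Keyed state per user (the Flink step, in miniature).

    Flags a user seen in two different countries within `window_seconds`.
    Assumes events arrive in timestamp order per user.
    """

    def __init__(self, window_seconds):
        self.window_seconds = window_seconds
        self.last_seen = {}  # user -> (ts, country)

    def process(self, event):
        previous = self.last_seen.get(event["user"])
        self.last_seen[event["user"]] = (event["ts"], event["country"])
        if previous is None:
            return None
        prev_ts, prev_country = previous
        elapsed = event["ts"] - prev_ts
        if prev_country != event["country"] and elapsed <= self.window_seconds:
            return f"{event['user']}: {prev_country} -> {event['country']} in {elapsed}s"
        return None


logs = [
    ("web", '{"userId": "u1", "ts": 1000, "geo": "PL"}'),
    ("mobile", "u1|1300|BR"),
    ("mobile", "u2|1000|DE"),
    ("web", '{"userId": "u2", "ts": 90000, "geo": "FR"}'),
]
detector = CountryHopDetector(window_seconds=3600)
alerts = [a for a in (detector.process(normalise(s, r)) for s, r in logs) if a]
print(alerts)  # ['u1: PL -> BR in 300s']
```
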

The moral of the story is simple: each technology has pros and cons. You should be aware of them and choose wisely.

Data Mesh is still a popular concept, and more and more companies are transforming their systems according to Data Mesh principles. One of them is Roche, which started its journey in this area two years ago. Katarzyna Królikowska and Mehmet Emin Oezkan presented their experiences in the talk ‘Data Mesh! Just a catchy concept or more than that?’. An important takeaway was that to successfully transform, or rather unify, systems, the whole company has to see and understand the benefits of Data Mesh.

Some people claim that Data Mesh is a data mess. To avoid that, you can use contract testing. This interesting methodology was explained by Arif Wider in his talk ‘Applying consumer-driven contract testing to safely compose data products in a data mesh’. If you work with microservices, you probably know that integration tests don’t solve every problem: they scale badly, are slow, may be unrepeatable and detect problems very late in the process. Moreover, they are hard to maintain in huge systems.

Contract testing can fill the gap between isolated and integrated tests. The goal of this kind of test is to ensure that systems are compatible and communicate correctly with one another. The methodology has many benefits, but most importantly, it can help when working with Data Mesh!
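
The core of consumer-driven contract testing is that the consumer states what it relies on and the provider verifies its output against that statement, typically in CI before a release. A minimal sketch with a hypothetical orders contract (dedicated tools such as Pact offer far more; this uses none of their APIs):

```python
# Contract published by the consuming data product: the fields it reads and their types.
ORDERS_CONTRACT = {"order_id": str, "amount": float, "currency": str}


def verify_contract(records, contract):
    """Check provider output against a consumer's contract; return violations."""
    violations = []
    for n, record in enumerate(records):
        for field, expected_type in contract.items():
            if field not in record:
                violations.append(f"record {n}: missing '{field}'")
            elif not isinstance(record[field], expected_type):
                actual = type(record[field]).__name__
                violations.append(f"record {n}: '{field}' is {actual}, expected {expected_type.__name__}")
    return violations


# Extra fields are fine: the consumer only constrains what it actually reads.
compatible = [{"order_id": "o1", "amount": 12.5, "currency": "EUR", "channel": "web"}]
breaking = [{"order_id": "o2", "amount": "12.50"}]

print(verify_contract(compatible, ORDERS_CONTRACT))  # []
print(verify_contract(breaking, ORDERS_CONTRACT))
```
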

GetInData, as a co-organizer of the event, put great effort into ensuring the smooth running of the conference. Its team not only gave talks during the event but also co-hosted some of the sessions. We were proud to have a significant representation from GetInData and Xebia NL, and their presentations received excellent feedback.

During the first day of the conference, Marcin Zabłocki demonstrated an innovative MLOps platform powered by Kedro, MLflow and Terraform, which can help data scientists be more productive and make their work easier, in the presentation titled 'Data Science meets engineering - the story of the MLOps platform that makes you productive, everywhere!'. Vadim Nelidov shared his expertise on effectively solving common time series problems, and Juan Manuel Perafan provided a unique perspective on building a data community in his presentation 'How to build a data community: The fine art of organizing Meetups'.

On the second day, Michał Rudko spoke about the GID Modern Data Platform, a self-service haven for analytics that can revolutionize how data is handled. Paweł Leszczyński and Maciej Obuchowski gave an amazing presentation on the foundations of column-level lineage using the OpenLineage standard, entitled 'Column-level lineage is coming to the rescue'.

The Big Data Technology Warsaw Summit conference is not our only event this year! We would love to see you on October 5th at the DataMass Summit - Cloud Against Data. The DataMass Conference will take place in Gdańsk and is aimed at professionals who use the cloud in their daily work to solve data engineering, data science, machine learning and AI problems. You can join us as a participant or a speaker, as the Call for Papers (CFP) is open until June 30th, 2023. Submit your proposals now!

Last but not least, in a few months we will start preparations for the jubilee 10th edition of the Big Data Tech conference! Stay up-to-date with our community activities by following us on LinkedIn.
