We recently took part in the Kaggle H&M Personalized Fashion Recommendations competition, where we were challenged to build a recommendation engine that would predict which articles a customer would buy in a particular week starting on September 23, 2020.

“Dataism points out that exactly the same mathematical laws apply to both biochemical and electronic algorithms. [...] You may not agree with the idea that organisms are algorithms but you should know that this is current scientific dogma.”

Homo deus by Yuval Noah Harari, chapter 11: The Data Religion

In line with the quote above, I will present the human decision-making process as if it were an algorithm that processes multidimensional input information and generates outputs in the form of decisions. I hope such an observation attracts your attention rather than scares you…

I will also take you on a tour of a data scientist’s mind and show you how to represent a decision-making process with numbers and how to match machine learning algorithms to real-life concepts.

Recommendations have become a part of our daily lives and it’s hard to imagine how the world functioned before them. Personally, I LOVE Spotify and the personalized playlists it creates based on what I like. I am an explorer and I crave new experiences: new songs that match my multi-genre music taste. But discovery takes time, which is why I need a finite number of songs that I can listen to every week, adding the ones I like most to my favorites. The same goes for Netflix: based on just a short history of what you watched and liked, you get a list of TV series or movies you will most probably enjoy as well. How much time does that save you?

So apparently H&M also wants to save you time when you are looking for new clothes. When I go to a store, I need to search through tens or hundreds of items of clothing I would never buy, just to find the ones that match my current style. I really like the idea of getting personalized clothing recommendations and choosing one or two out of the 10 that were recommended.

Before solving a problem with machine learning models, you really need to understand it. As a data scientist, I specialize in solving business problems involving people’s decisions, experiences, needs and feelings. At the beginning I always try to put myself in the customer's shoes, imagining their decision-making process. Then, I translate it into a numeric representation using feature engineering and model that process with machine learning algorithms (trying to imitate reality with numbers).

When I think about buying clothes in September, the first thing that comes to my mind is the weather change. Let’s travel back in time and place ourselves in New York in September 2020, which was the crucial time of the competition. To make it easier, let’s create a persona - Emily, who is in her 20s and goes to college.

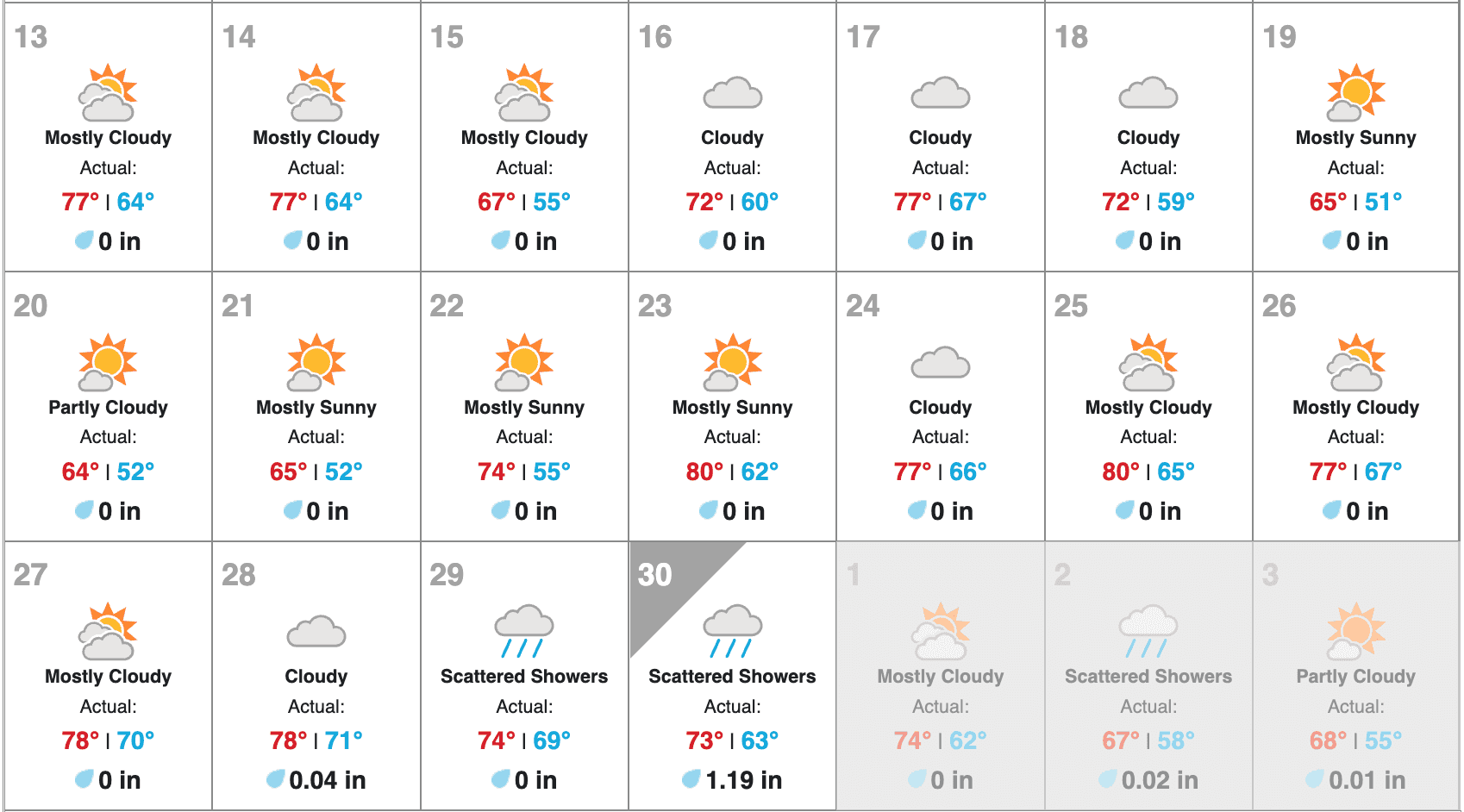

Summer has finished, autumn is about to make an appearance and the leaves are starting to change colour. It is mostly sunny with some cloudy days, still rather hot during the day (74°F ≈ 23°C), but cold at night (51°F ≈ 10°C).

“I am going out with friends for a drink on Friday and will be coming back late. It will be cold then, so I need a new jacket.”

Here we have captured the first moment when a NEED for a purchase has occurred. This is one thing that we will try to model - predicting what a customer needs at a particular moment.

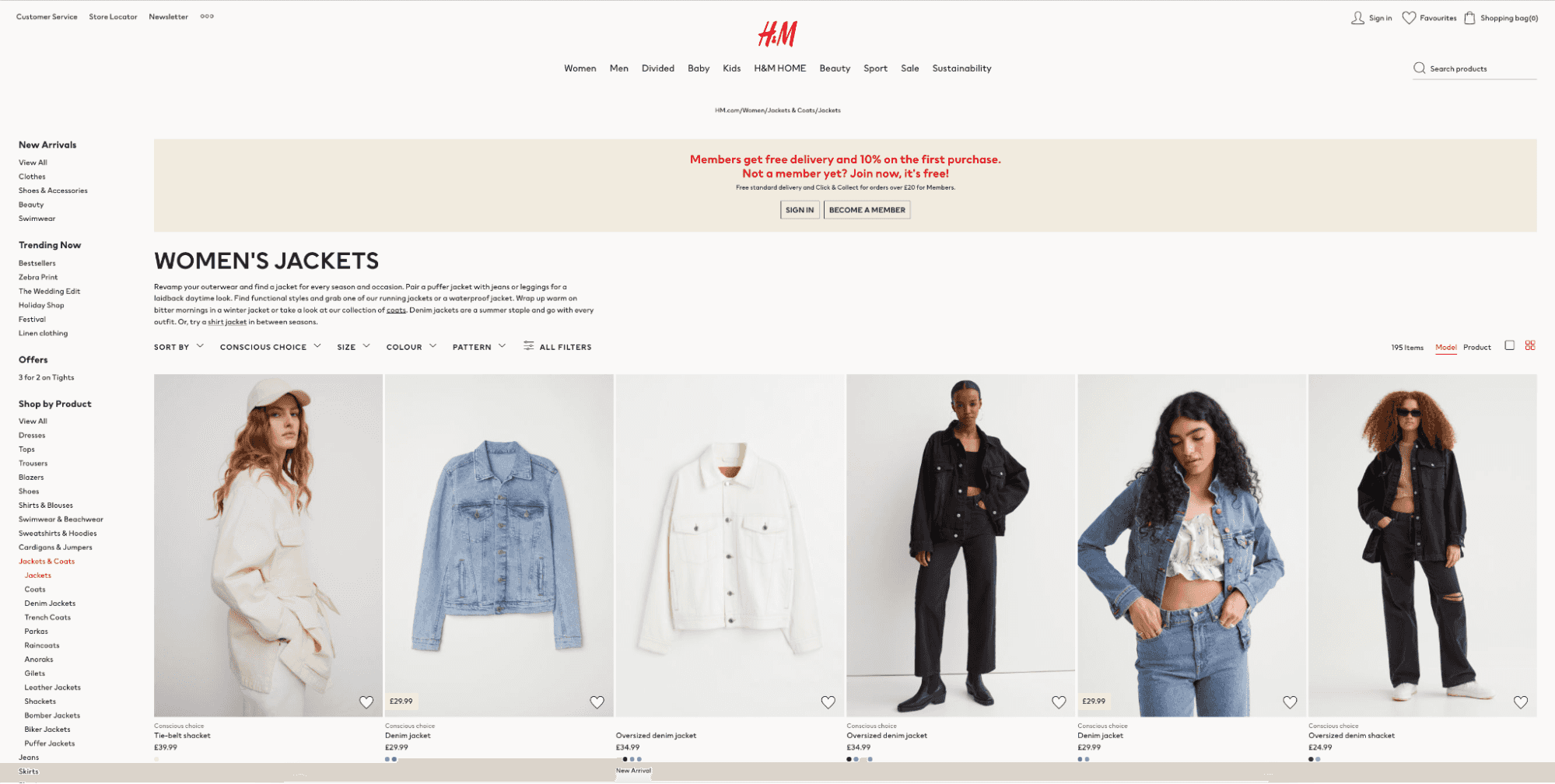

What she can immediately do is open the H&M online store and look for jackets she likes. She scrolls through the website and realizes that she would prefer a coat, which she will need for the whole of autumn.

This is another instance when a need was realized in Emily’s brain. She now knows she wants a coat and she will start looking for the one she likes the most.

Here I would like to distinguish another component that a final decision is influenced by - the customer’s style - what he or she likes, what would match their personality.

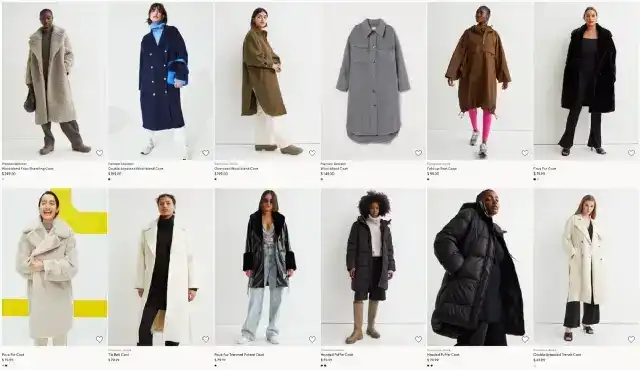

Finally, she goes through all the articles and concludes she wants to feel more elegant this season. She really likes the navy-coloured one, but it costs almost $200 and she can't afford it. So her second choice is the classic, cream coat which costs only $69.99.

This is the third dimension that we can look at - the money.

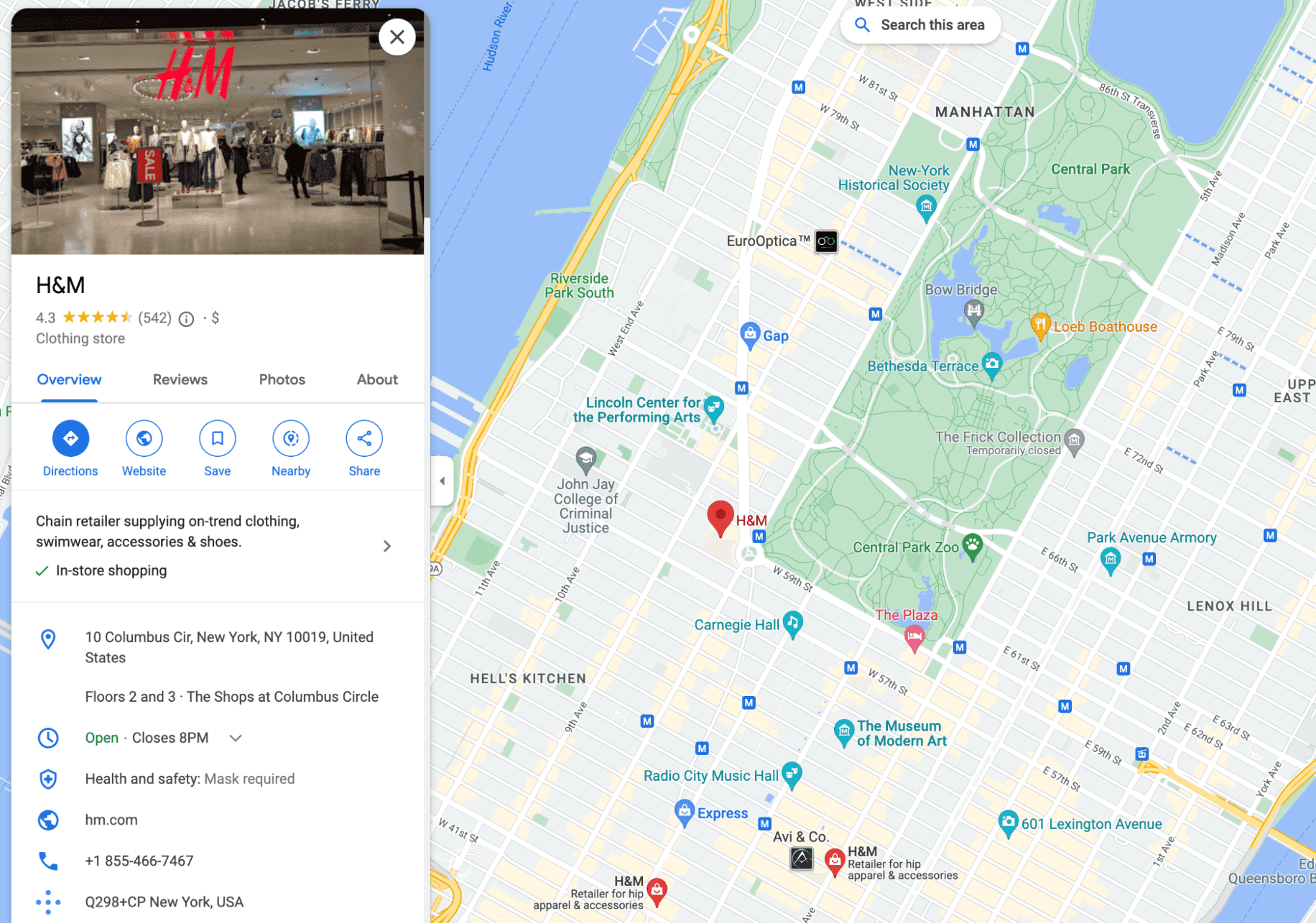

Once she is familiar with what H&M has to offer, she decides to go to a local store. She does not like buying clothes online without trying them on. She searches for the nearest H&M stores using Google Maps and finds out that there is a store close to Central Park, where she will be meeting her friends in the evening.

On her way, it starts raining. She forgot to check the weather forecast. So now she also needs an umbrella!

When she is in the store, on her way to the coats section, she notices black pants that would match her look. She grabs them on her way and takes them to the fitting room, together with the coat she has finally found.

She likes both things and decides to buy them. At the checkout, she grabs a classic black umbrella, which is currently at a reduced price. She does not pay much attention to the umbrella's style so she takes the cheapest one.

When I think about it, she was lucky, because the size of clothes she wanted was available at the store. It’s worth mentioning here that there are also external factors that influence our decisions and one of these is a particular store’s stock at a particular moment in time. If the coat was not available, she would need to:

The decision would depend on various factors:

In hindsight, the crucial factor in selecting THE coat was her visit to the H&M online store. Imagine that she had not done that and had gone straight to the store. There is a chance she would have missed the coat if she had not noticed it when passing by. Also, clothes can be presented in an appealing way in pictures, which also influences individual decisions. To conclude, a customer’s decisions also depend on the ways they do their shopping:

More factors that influence a customer’s decision that I can think of are:

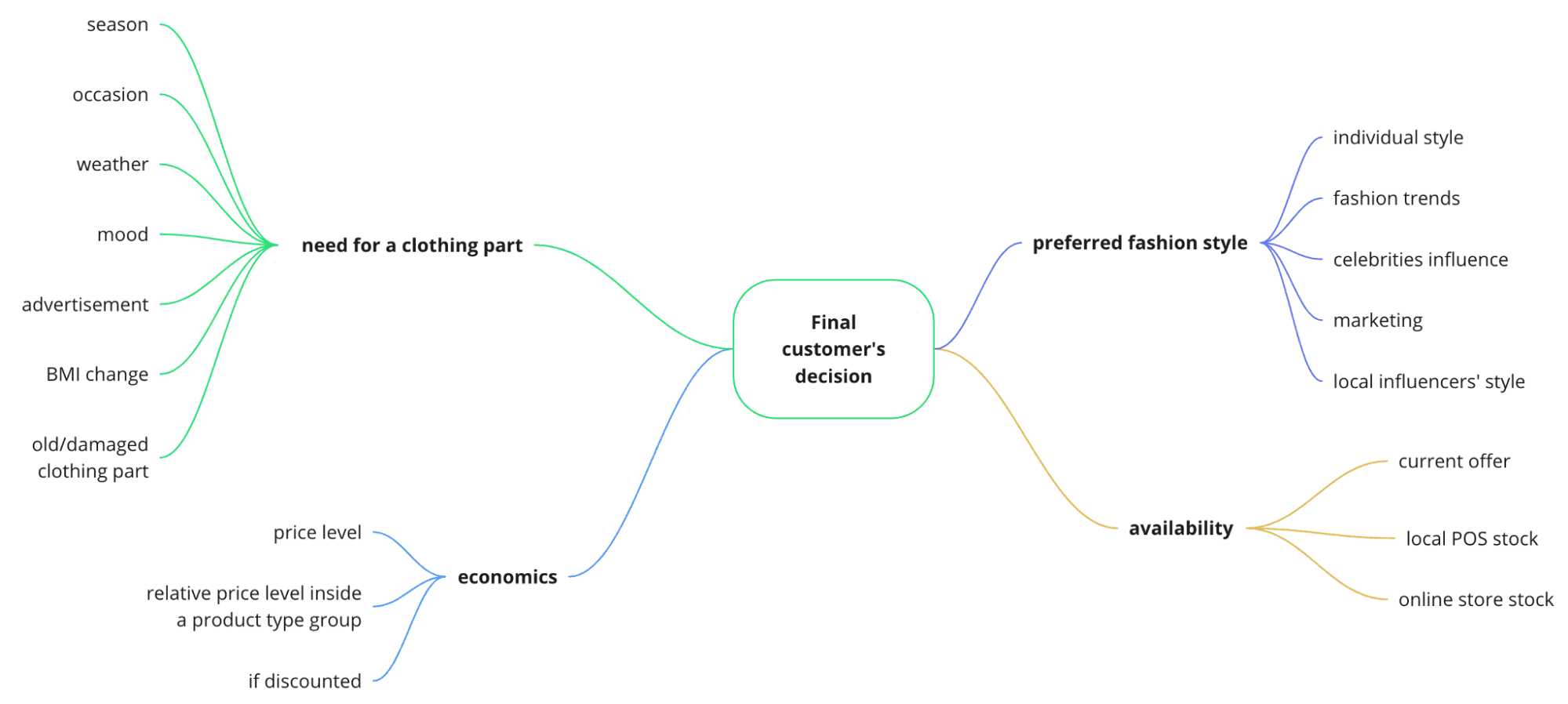

To sum up the section, I created a mind map which organizes the ideas about all of these factors in a much clearer way.

Before we get into the conceptualization part, I will generally describe data sources that were provided in the competition. Familiarizing yourself with the data is a necessary step when building Machine Learning Models, because:

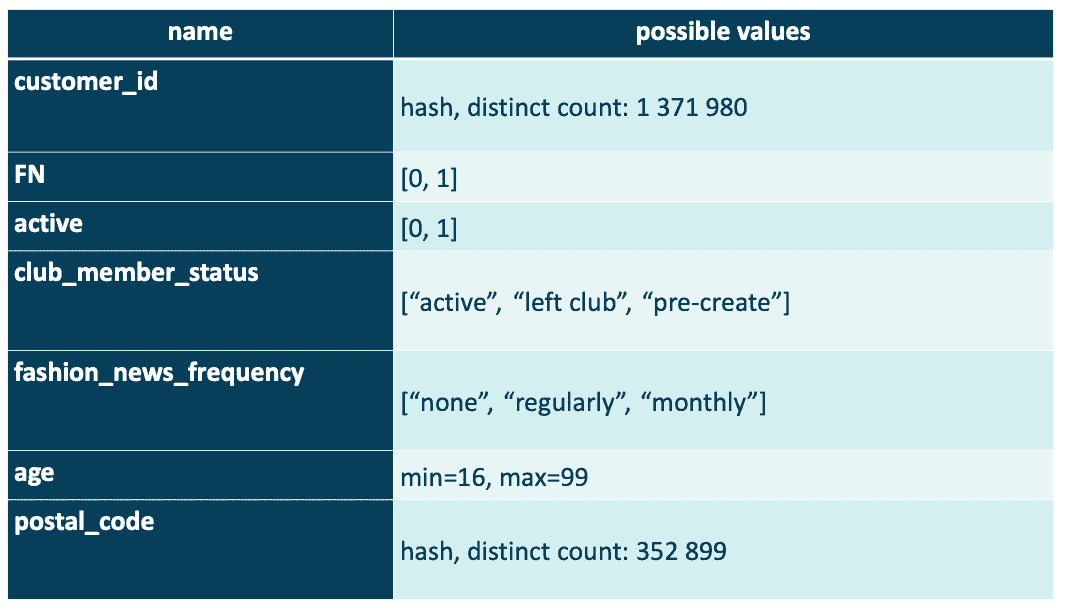

The customer table contains, for example, information about the customer's age, club membership status and newsletter frequency. There are over 1.3 million customers that we need to provide recommendations for.

Unfortunately, postal code information is hashed so we will not be able to calculate any features based on location, proximity to stores etc.

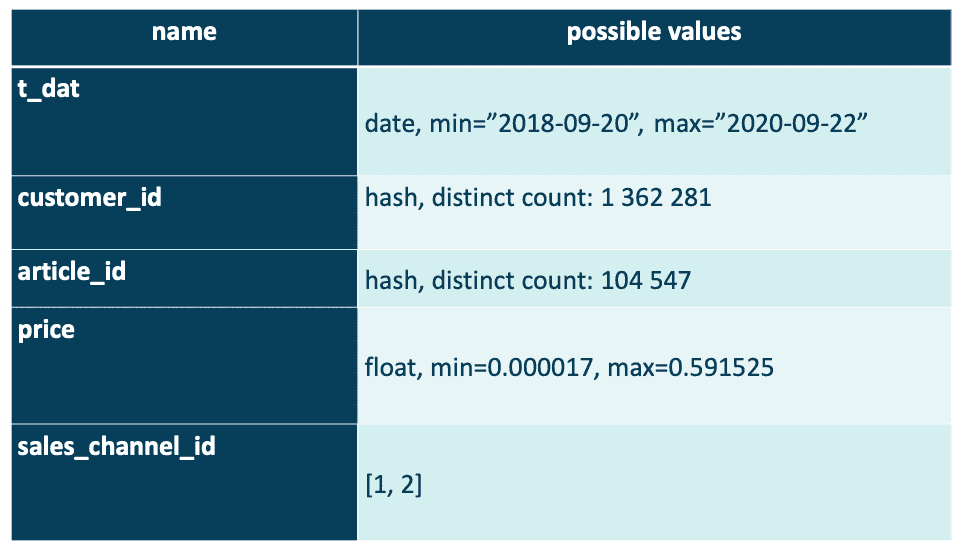

Transactional data is the representation of a customer's interests, needs and style. There are 31 788 324 transactions in the table. The schema is as follows:

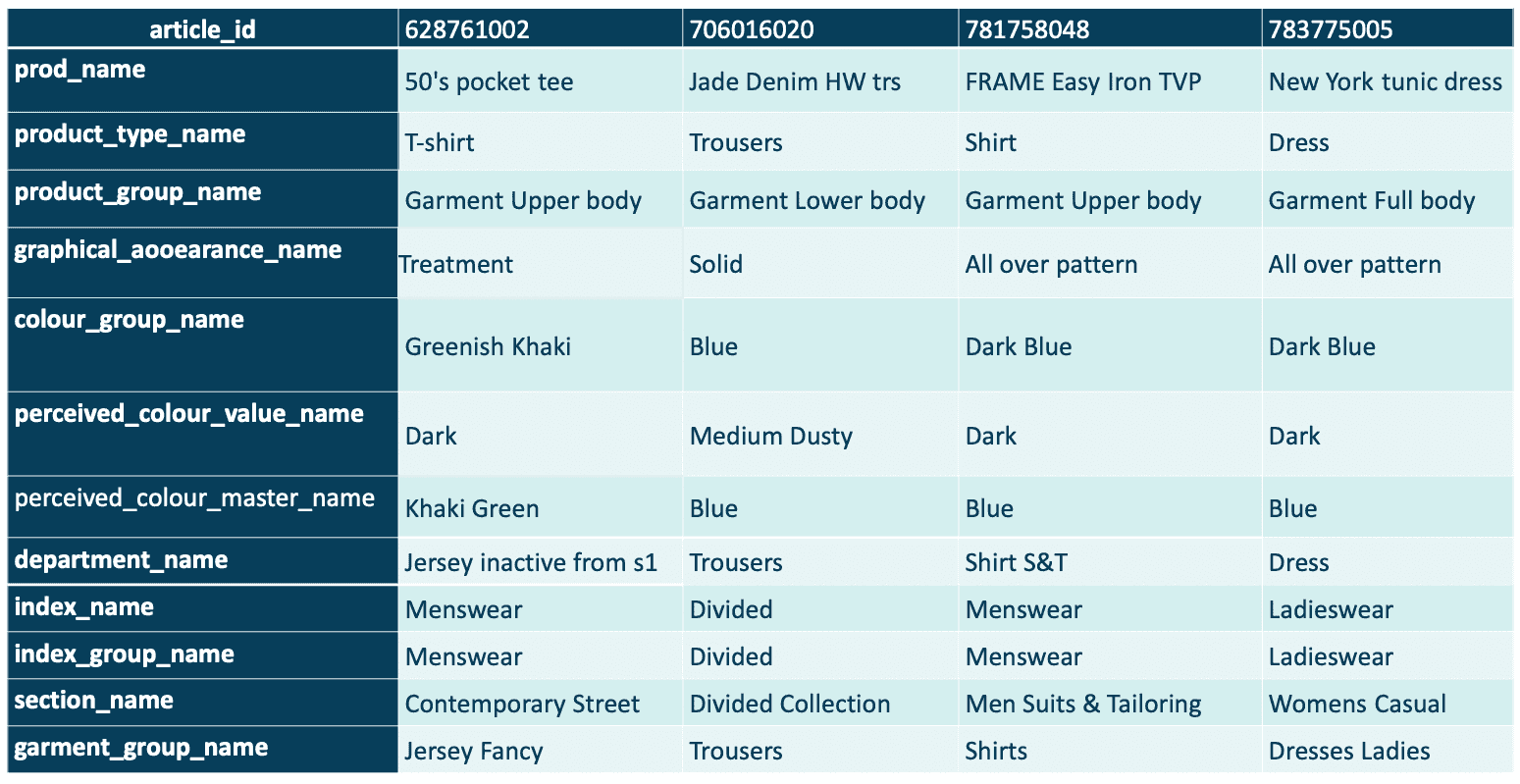

One of the datasets that I spent a lot of time analyzing was what I called the article hierarchy. All of the articles have been described using multiple dimensions - we can see which product group, department, section name or garment group the article has been assigned to. There is also information about the perceived color name and graphical appearance - whether the T-shirt appears to be treated or has an all-over pattern.

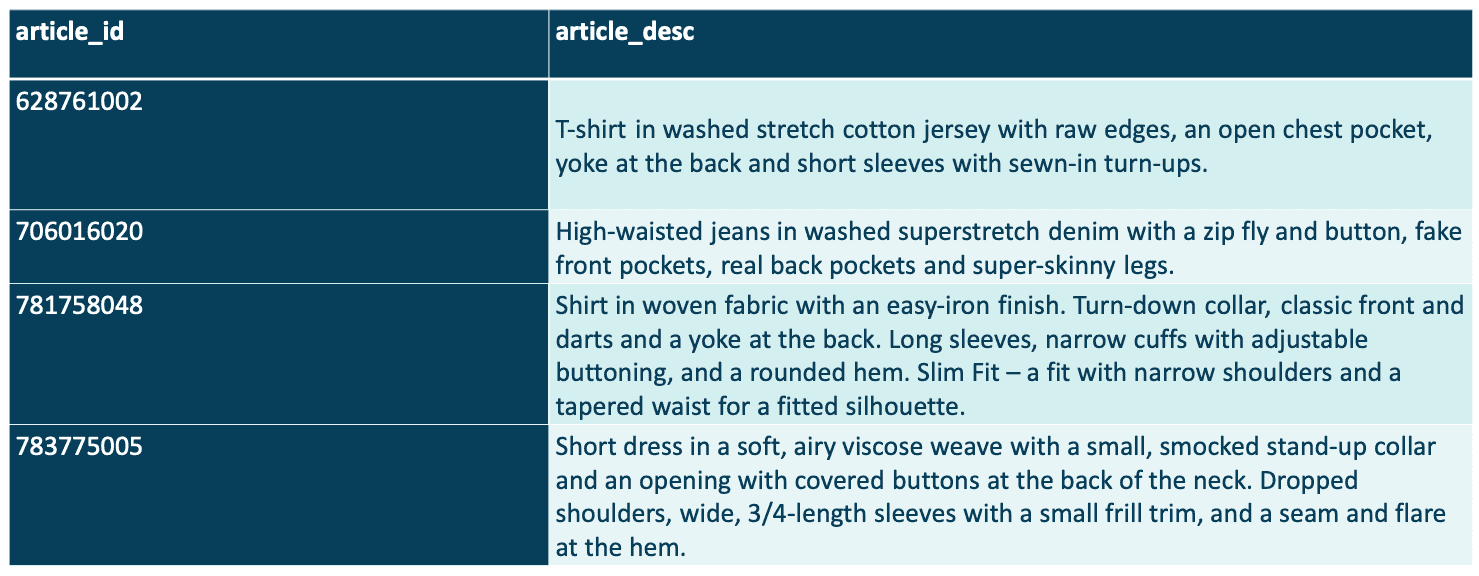

H&M also provides detailed descriptions of articles. Sometimes, based on the text alone, you can imagine what the product looks like, what materials it is made of and what style it represents.

Embeddings derived from the text can give a model more information about the product, and features built on them can tell us more about a customer's style. Thus, text embeddings could contribute to an ML model's performance because they can capture deeper, more nuanced features of clothes.

We also believe that the text description features should work even when a customer has not read the descriptions, because they describe features of clothing that the customer sees when browsing the store during their shopping.

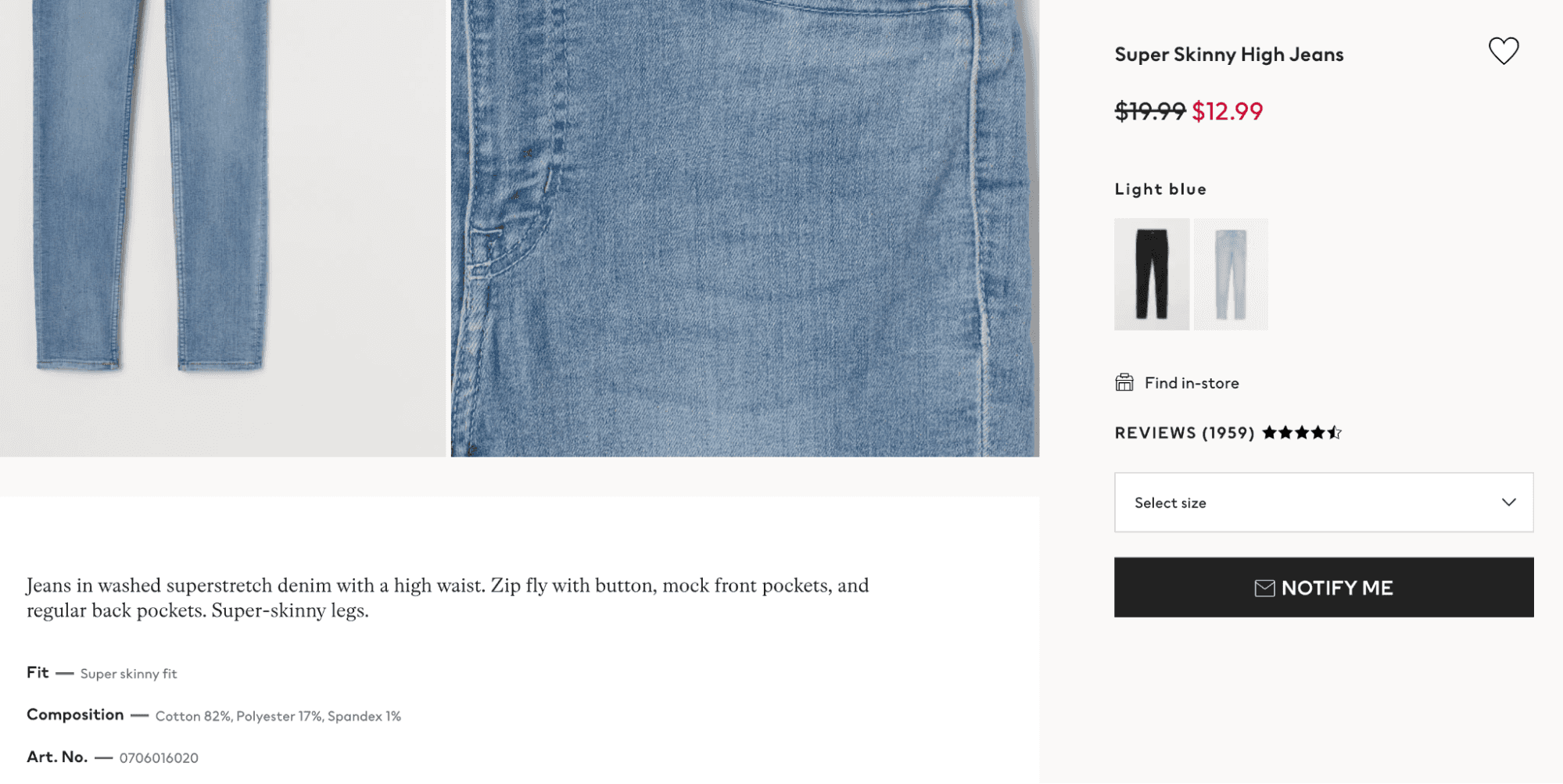

As you can see, these tell us a lot about the product. However, please note that there were also some examples with more generic descriptions.

It is worth adding that the description is shown on the product’s page in the online store. Also, the description is the same for different color variants of the same product.

What was really cool about the competition was that the dataset consisted of data of different types, including images, which gave the contestants a much wider range of methods they could use to make better-quality recommendations.

The images were vertical and rather standardized - the majority of them looked like the examples below. They were centered, with a light background and similar frame ratio.

With Emily’s story in mind, I will create a list of the decision’s components that will be included in the final model concept.

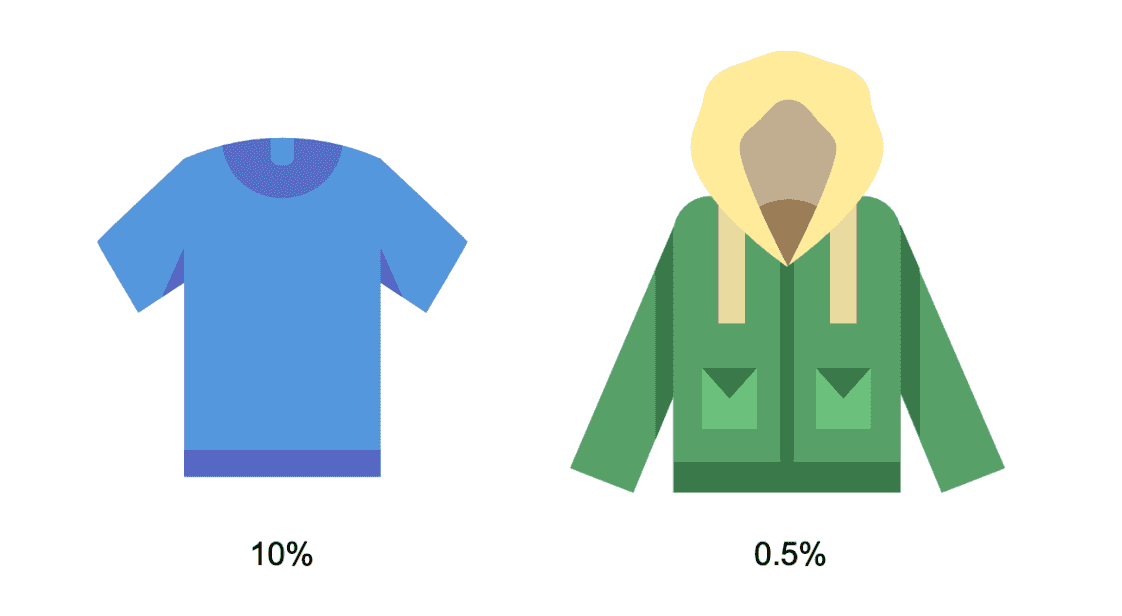

First and foremost, the model should include features representing a customer’s need to purchase a particular clothing type such as “a pair of jeans”, “a raincoat”, “a smart shirt”, etc. This component should be estimated for a particular time period, e.g. “during the next week”. So, I want the model to return a score, or ideally a probability estimate, e.g.

in the period between 10th and 17th June 2020 there is a 10% chance that the customer will buy a cotton T-shirt and 0.5% chance they will buy a warm jacket

*the chances are not 0%, because some customers may travel to a place where it’s cold or may need the jacket for a theater class at school, for example

What is the probability that a customer will buy a t-shirt or a warm jacket in the week starting 10th June 2020?

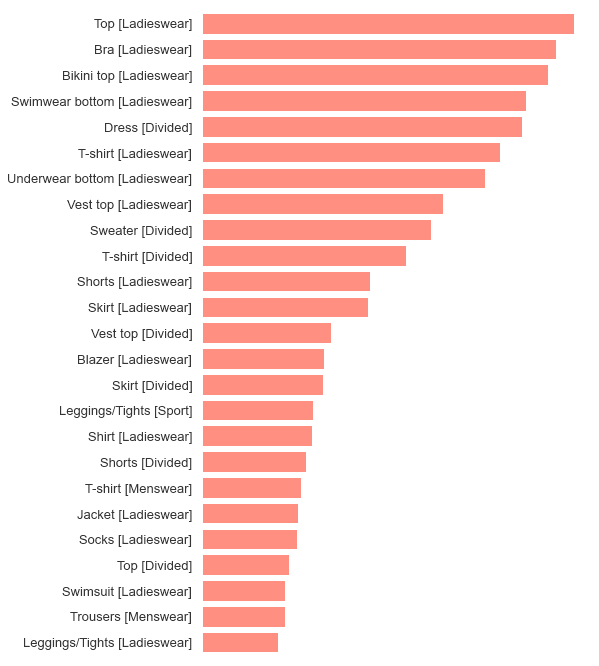

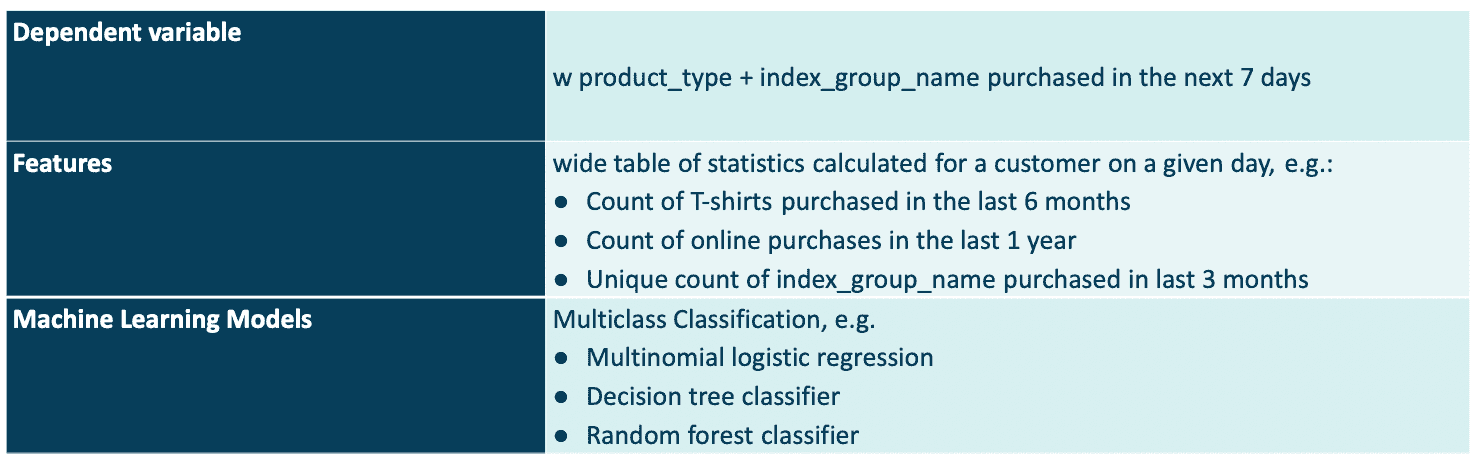

The first group of Machine Learning models that will be used to predict a customer’s need to buy a product in a particular time frame would be classic propensity-to-buy models. They should not try to predict article_id, but something more general e.g. product_type + index_group_name. Let’s see the top 25 products that we would be predicting.

It seems that such a grouping matches the general way of thinking about customers’ needs when it comes to clothing.
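To make the idea of a coarser target concrete, here is a minimal sketch of how propensity labels could be built from transactions. The toy rows, the `product_group` helper and the `week_id` field are assumptions made for illustration; only the `product_type` + `index_group_name` grouping comes from the text above.

```python
from collections import defaultdict

# Hypothetical toy rows; the real tables are far larger.
articles = {
    "a1": {"product_type": "Trousers", "index_group_name": "Ladieswear"},
    "a2": {"product_type": "T-shirt", "index_group_name": "Menswear"},
    "a3": {"product_type": "Trousers", "index_group_name": "Ladieswear"},
}
transactions = [
    # (customer_id, week_id, article_id)
    ("c1", 1, "a1"),
    ("c1", 1, "a3"),
    ("c2", 1, "a2"),
    ("c1", 2, "a2"),
]


def product_group(article_id):
    """Coarse target: product type combined with index group."""
    article = articles[article_id]
    return (article["product_type"], article["index_group_name"])


def build_labels(transactions):
    """Return {(customer_id, week_id): set of product groups bought that week}."""
    labels = defaultdict(set)
    for customer_id, week_id, article_id in transactions:
        labels[(customer_id, week_id)].add(product_group(article_id))
    return dict(labels)


labels = build_labels(transactions)
```

Two different article_ids bought in the same week collapse into one label here, which is the point of predicting a product group rather than a single article.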

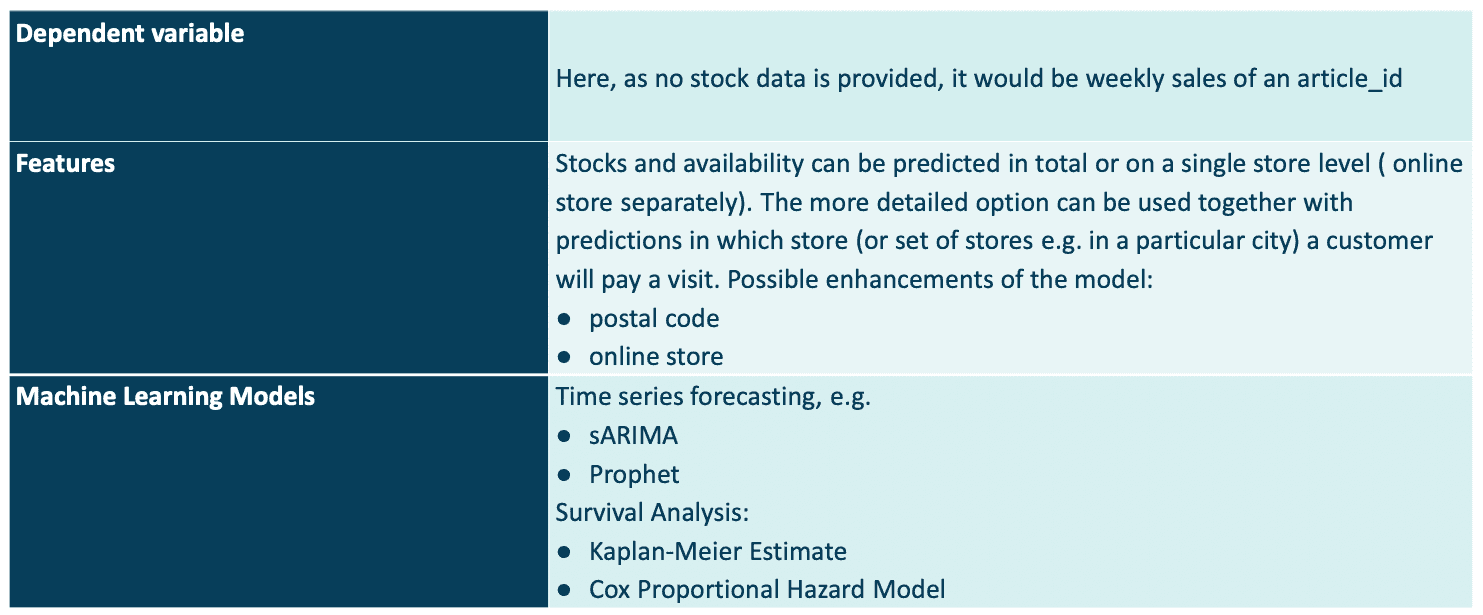

Now let’s choose Machine Learning models which will solve this subproblem.

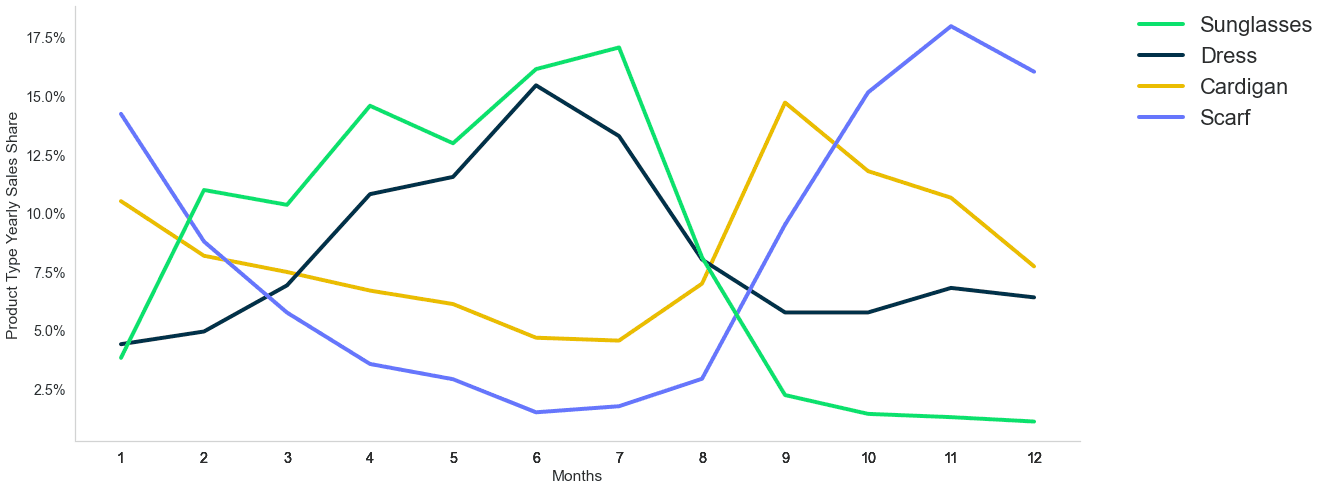

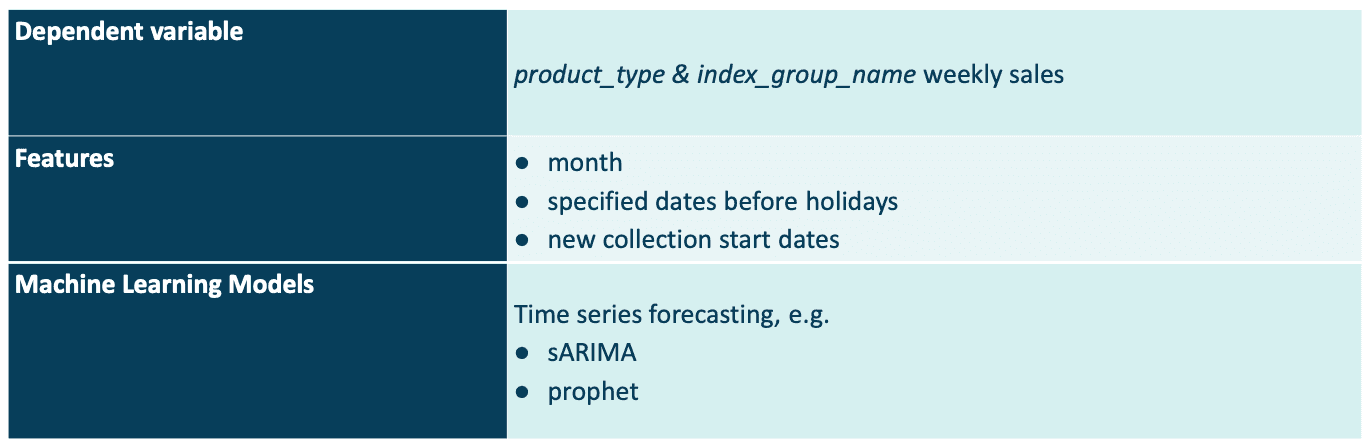

This was the way to predict a customer's need for a product purchase on an individual level. However, there are more general trends when it comes to people's purchasing patterns throughout the year. This is where a time-series seasonality forecasting model would come in handy. Let’s see the monthly shares of sales for a selection of 4 products: sunglasses, dress, cardigan and scarf.

It is quite obvious that not many people would be interested in buying a cardigan when it's warm outside. Its sales peak in September, when autumn starts. So, such a model for each product type and for each week of the year would definitely help with making better product recommendations.
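The monthly shares discussed above can be computed with a few lines of code. This is only a sketch on made-up records; the `sales` list and the `monthly_shares` function are hypothetical names, and a real seasonality model would go further than these raw shares.

```python
from collections import Counter, defaultdict

# Hypothetical (month, product_type) purchase records.
sales = [
    (6, "Sunglasses"), (6, "Sunglasses"), (7, "Sunglasses"), (9, "Sunglasses"),
    (9, "Cardigan"), (9, "Cardigan"), (10, "Cardigan"), (6, "Cardigan"),
]


def monthly_shares(sales):
    """For each product type, return {month: share of that product's total sales}."""
    counts = defaultdict(Counter)
    for month, product_type in sales:
        counts[product_type][month] += 1
    shares = {}
    for product_type, by_month in counts.items():
        total = sum(by_month.values())
        shares[product_type] = {m: n / total for m, n in by_month.items()}
    return shares


shares = monthly_shares(sales)
```

Normalizing within each product type makes products with very different sales volumes comparable on one chart.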

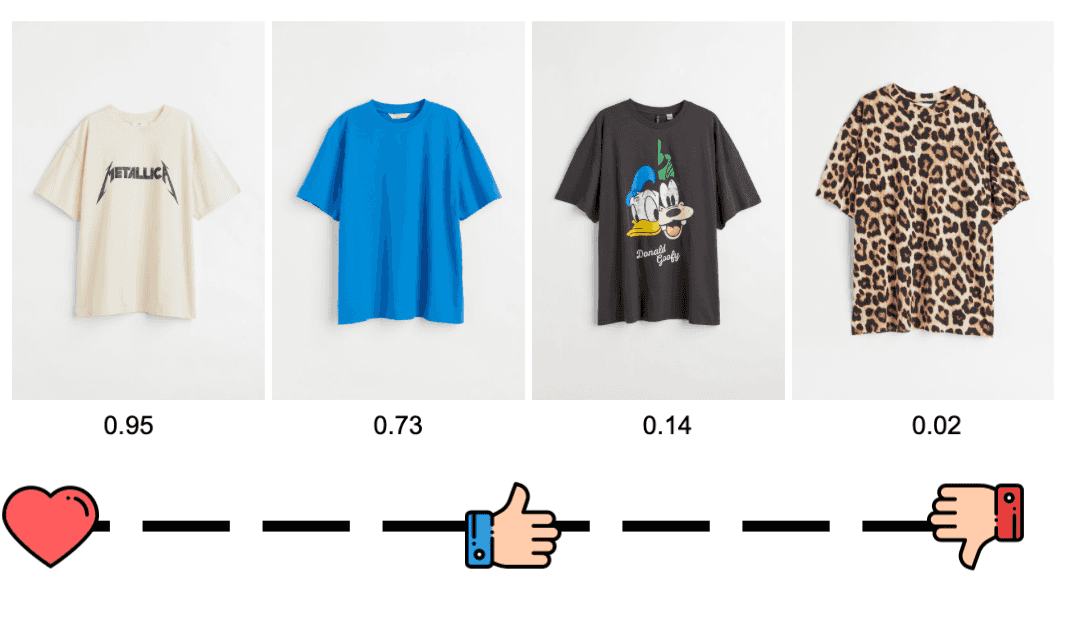

Secondly, we need a model whose results will tell us that a customer prefers one T-shirt over another. Ideally, it should sort all articles within a product category from the one that best matches the customer's style to the one that matches it least.

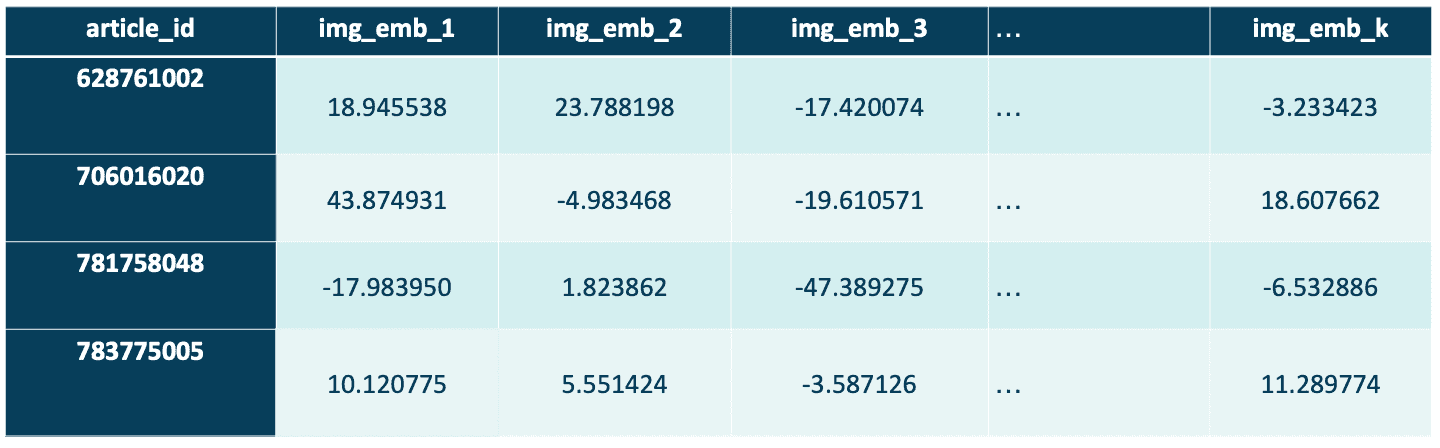

First, we plan to generate more features describing the clothes. There are statistical techniques that can translate images into a matrix in which each column is a vector representing a latent (hidden, not understandable at first sight) feature of the objects.

Take leopard print, for example. An algorithm could learn to detect such a pattern and create a separate feature for it. Values of the vector would range from 0 to 1, where 0 would mean no leopard print and 1 would mean leopard print covering the whole T-shirt.

Then, having such embeddings generated for all clothes, we can use them in classical machine learning algorithms, e.g. we could see “which values of embeddings” the customer buys. Imagine a lady who has recently been buying only leopard print clothes. She would have really high scores in the vector representing the print in her past purchases. Thus, the model would learn that and would start recommending similar products.

To achieve such a result, we will use a convolutional autoencoder. If you have never heard of it, there will be a separate blog post about it soon. In short, it is a neural network that is trained to reproduce its input image in the output layer; the compressed representation it learns in between serves as the image embedding.

The output of the algorithm will be structured like in the example below.

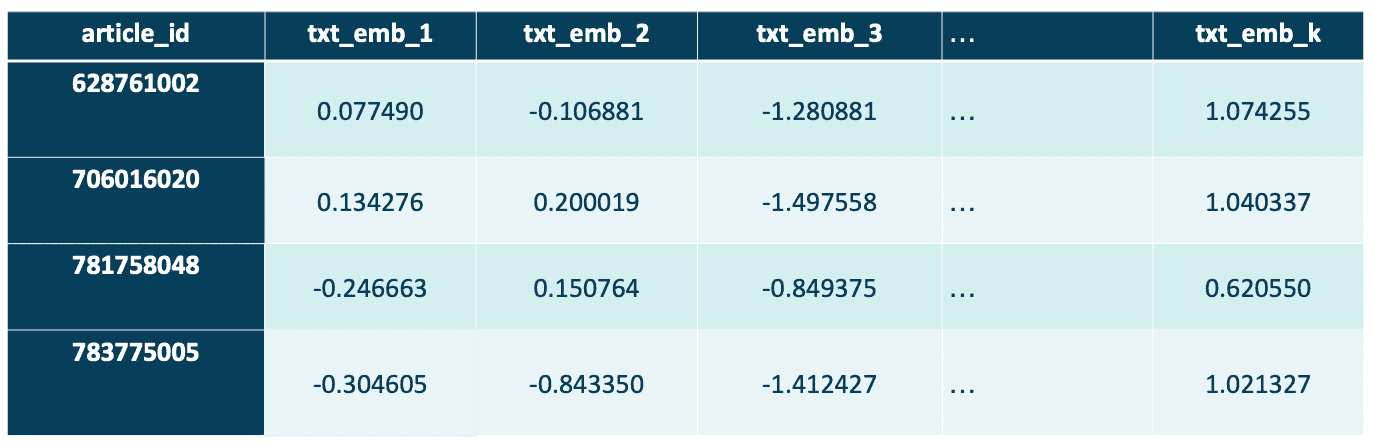
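Once such embeddings exist, one simple way to express “which values of embeddings” a customer buys is to average the embeddings of their past purchases. The sketch below assumes tiny, made-up 3-dimensional vectors in which the first dimension plays the role of the leopard-print feature; the names `article_embeddings` and `customer_profile` are hypothetical.

```python
# Hypothetical 3-dimensional article embeddings; the first dimension plays
# the role of the "leopard print" feature from the text.
article_embeddings = {
    "leopard_top": [1.0, 0.2, 0.0],
    "leopard_skirt": [0.8, 0.0, 0.4],
    "plain_tee": [0.0, 0.6, 0.2],
}


def customer_profile(purchased_ids, embeddings):
    """Average the embeddings of the articles a customer has bought."""
    vectors = [embeddings[article_id] for article_id in purchased_ids]
    n = len(vectors)
    # zip(*vectors) groups values by embedding dimension.
    return [sum(dimension) / n for dimension in zip(*vectors)]


profile = customer_profile(["leopard_top", "leopard_skirt"], article_embeddings)
```

A customer who keeps buying leopard print ends up with a high value in the first dimension of the profile, which a downstream model can then use as a feature.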

Similarly to image embeddings, detailed descriptions of articles can give the model another dimension to look at. Take this description for example:

Calf-length dress in crisp cotton poplin. Narrow, detachable, adjustable shoulder straps, wide smocking over bust, and softly draped A-line skirt. Unlined. Made from organic cotton, this dress is part of our hand-painted wildflowers collection. The pattern was developed by our print designers Kavita, Abigail, Holly, and Florentin, who picked their favorite wildflowers and recreated them in watercolor.

The description certainly adds new information that is not available anywhere else. Just based on the image, you could not have figured out that the dress is hand-painted.

However, in order to use this in Machine Learning models, we need to transform it into embeddings. For that we will use a pre-trained transformer which we will apply to tokenized, stemmed and lemmatized article descriptions.

The output of the algorithm will be structured like in the example below.

When you think about recommendations, content-based and collaborative filtering models come to mind, because these are the two most popular ML approaches in this field.

According to the definition found here:

Content-based filtering uses item features to recommend other items similar to what the user likes, based on their previous actions or explicit feedback.

So just like in the leopard-print example above, if a customer likes the pattern, let’s recommend her other products with the same pattern. It’s quite a simple solution, but may work in some cases. It will help to determine which products share a similar style. Then, we will be able to recommend the ones with the highest similarity to what the user bought before.
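A minimal sketch of this kind of content-based ranking, assuming each article and each customer is already described by an embedding vector. Cosine similarity is one common choice of similarity measure, not necessarily the one used in our solution, and all vectors and names below are made up.

```python
import math


def cosine_similarity(u, v):
    """Cosine of the angle between two vectors; 0.0 if either vector is all zeros."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    if norm_u == 0 or norm_v == 0:
        return 0.0
    return dot / (norm_u * norm_v)


def rank_candidates(profile, candidates):
    """Sort candidate article ids by similarity to the customer profile, best first."""
    scored = [
        (cosine_similarity(profile, vector), article_id)
        for article_id, vector in candidates.items()
    ]
    return [article_id for _, article_id in sorted(scored, reverse=True)]


# Hypothetical customer profile with a strong "leopard print" first dimension.
profile = [0.9, 0.1, 0.2]
candidates = {
    "leopard_dress": [0.9, 0.1, 0.1],
    "striped_shirt": [0.1, 0.9, 0.0],
    "plain_jeans": [0.0, 0.1, 0.9],
}
ranking = rank_candidates(profile, candidates)
```

The article whose vector points in nearly the same direction as the customer's profile comes first in the ranking.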

According to the definition found here:

collaborative filtering uses similarities between users and items simultaneously to provide recommendations. This allows for serendipitous recommendations; that is, collaborative filtering models can recommend an item to user A based on the interests of a similar user B. Furthermore, the embeddings can be learned automatically, without relying on the hand-engineering of features.

As you can see, this algorithm adds another level to the content-based filtering as it can recommend clothes that other customers purchased together, if you share similar products in your historical transactions.
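The intuition can be illustrated with a very simple neighborhood-based variant: score the articles you have not bought by how strongly their buyers' histories overlap with yours. This is a toy sketch on made-up data, not the embedding-based approach from the quoted definition; `histories` and `recommend` are hypothetical names.

```python
from collections import Counter

# Hypothetical purchase histories: customer_id -> set of article ids.
histories = {
    "A": {"coat", "black_pants"},
    "B": {"coat", "black_pants", "umbrella"},
    "C": {"t_shirt", "shorts"},
}


def recommend(customer_id, histories, top_n=3):
    """Score unseen articles by how much their buyers overlap with this customer."""
    own = histories[customer_id]
    scores = Counter()
    for other_id, other in histories.items():
        if other_id == customer_id:
            continue
        overlap = len(own & other)  # number of shared articles
        if overlap == 0:
            continue
        for article in other - own:
            scores[article] += overlap
    return [article for article, _ in scores.most_common(top_n)]


recommendations = recommend("A", histories)
```

Customer A shares two articles with customer B, so B's remaining purchase is suggested; customer C has nothing in common with anyone and gets no suggestions from this method alone.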

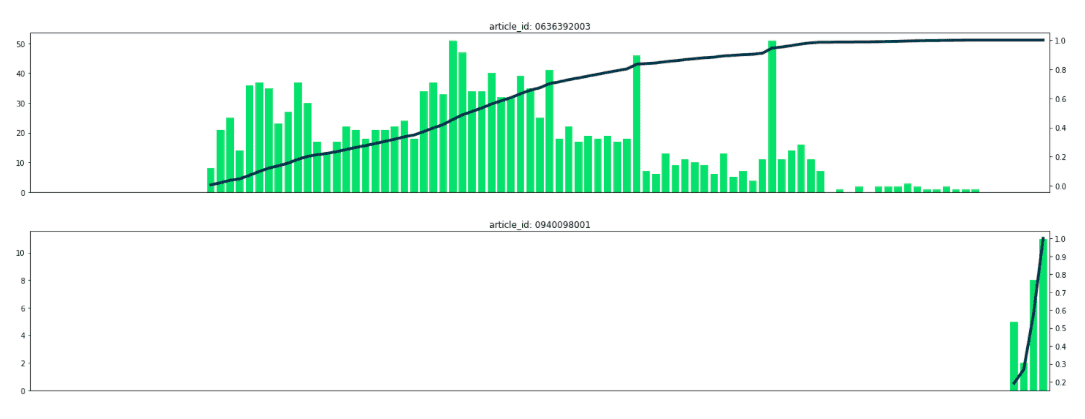

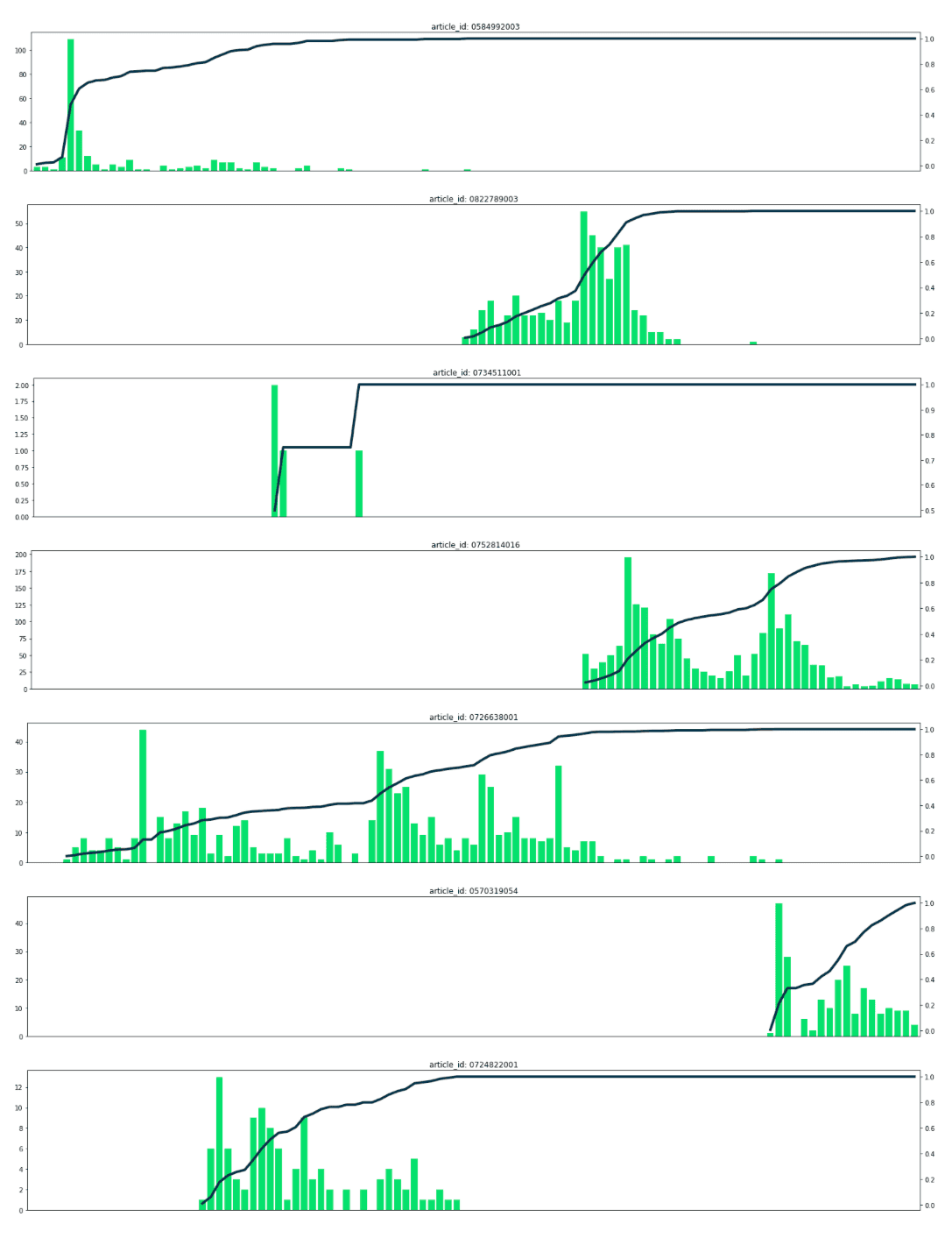

As there are 105 542 unique article_ids and we have to predict just 12 articles for each customer, we figured out that probably not all articles are offered at every point in time. In order to get a better understanding of what an article_id lifetime looks like, I visualized the weekly sales of a sample of articles, together with a line representing the cumulative share of the article's total sales.

You can see that a few patterns can be distinguished that can help us determine the probability that the article would be offered in a given week.
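The two series in such a chart, weekly sales and the cumulative share of total sales, can be derived as in the sketch below. The records are made up and `article_lifetime` is a hypothetical helper name.

```python
from collections import Counter
from itertools import accumulate

# Hypothetical (week_id, article_id) sales records.
sales = [(1, "a1"), (1, "a1"), (2, "a1"), (3, "a1"), (2, "a2"), (5, "a2")]


def article_lifetime(sales, article_id):
    """Return [(week, units, cumulative_share)] for one article, ordered by week."""
    weekly = Counter(week for week, a in sales if a == article_id)
    weeks = sorted(weekly)
    total = sum(weekly.values())
    running = accumulate(weekly[w] for w in weeks)
    return [(w, weekly[w], cum / total) for w, cum in zip(weeks, running)]


lifetime = article_lifetime(sales, "a1")
```

An article whose cumulative share is already close to 1 several weeks before the prediction week is unlikely to still be on offer.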

Estimated volume of sales per article_id per week (in total or per store).

Here you can see that there can be some overlap with the Time-Series model proposed before, but please note that the earlier model was based on a more general product type level, not on an article_id level. The “stock” model is better suited to short-term predictions than to seasonality calculations.

Once we have a list of all the factors that influence a customer's decision, we are able to create a final-decision model which will be an ensemble of all the solutions.

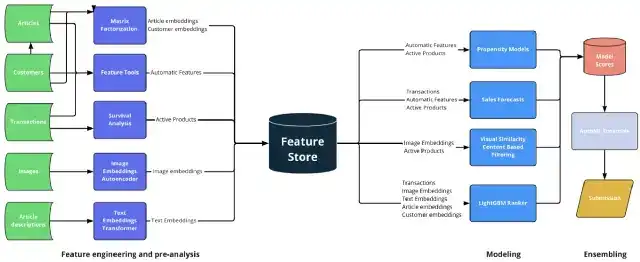

The final concept of the solution that we came up with is represented in the schema below.

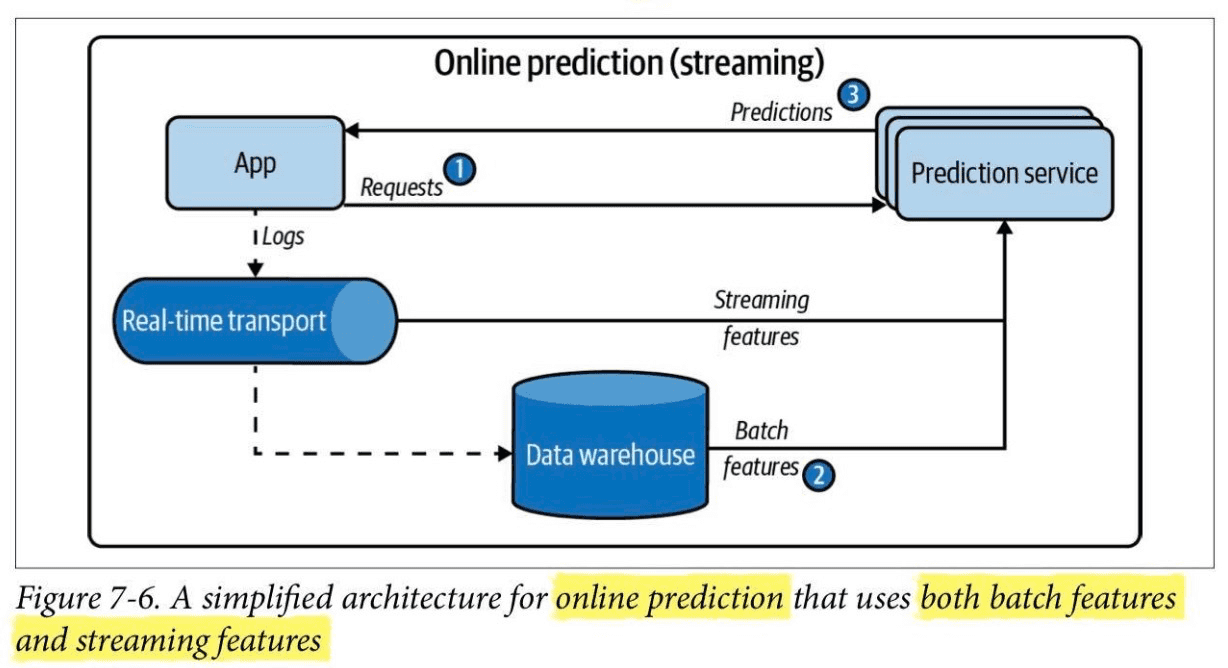

We start with data sources and generate features using multiple techniques, including embeddings generation with neural networks. All features are then stored in a Feature Store (see Feature Stores comparison blog post written by our colleague Jakub Jurczak). When all features are collected, multiple Machine Learning models can be estimated.

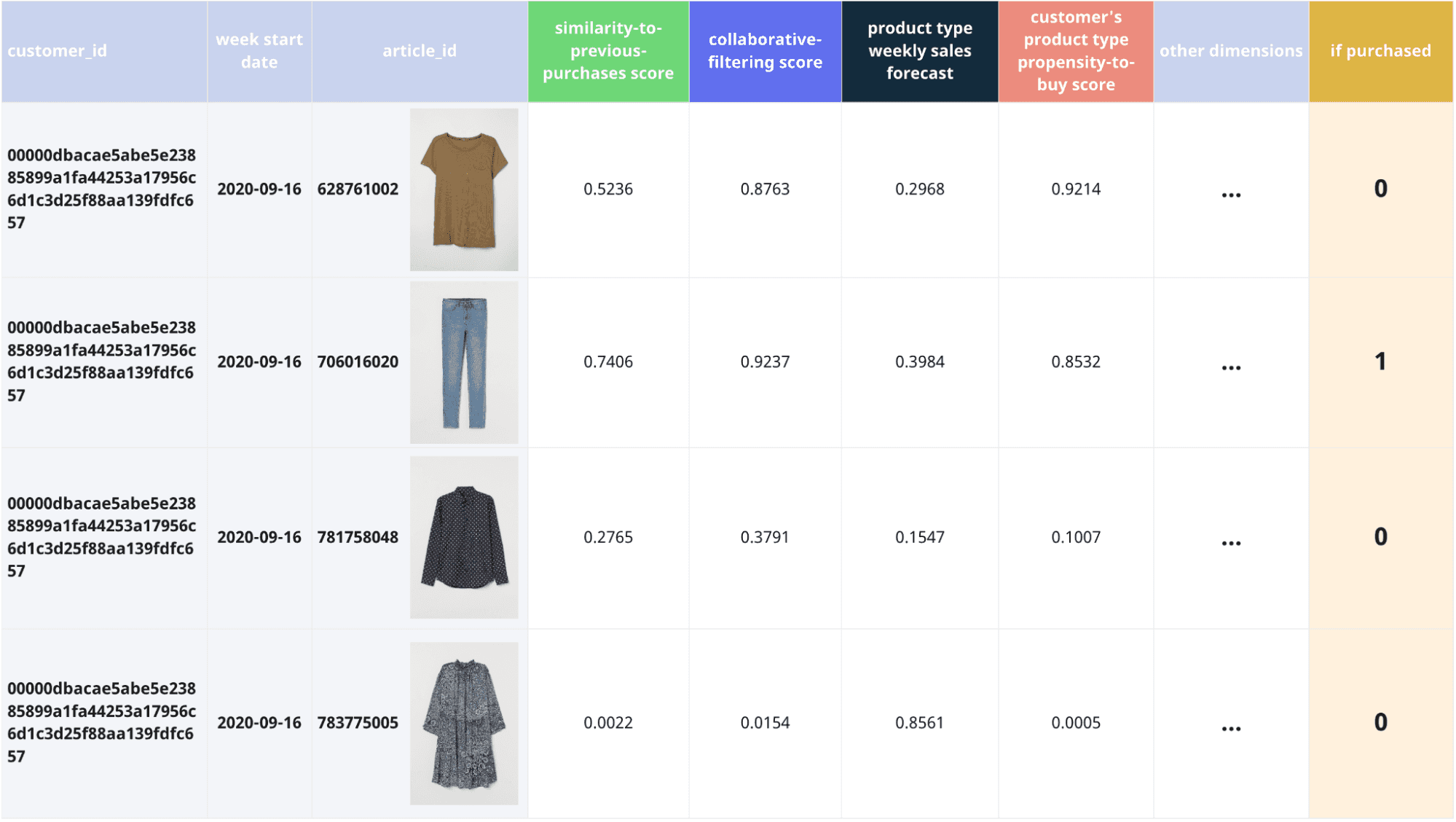

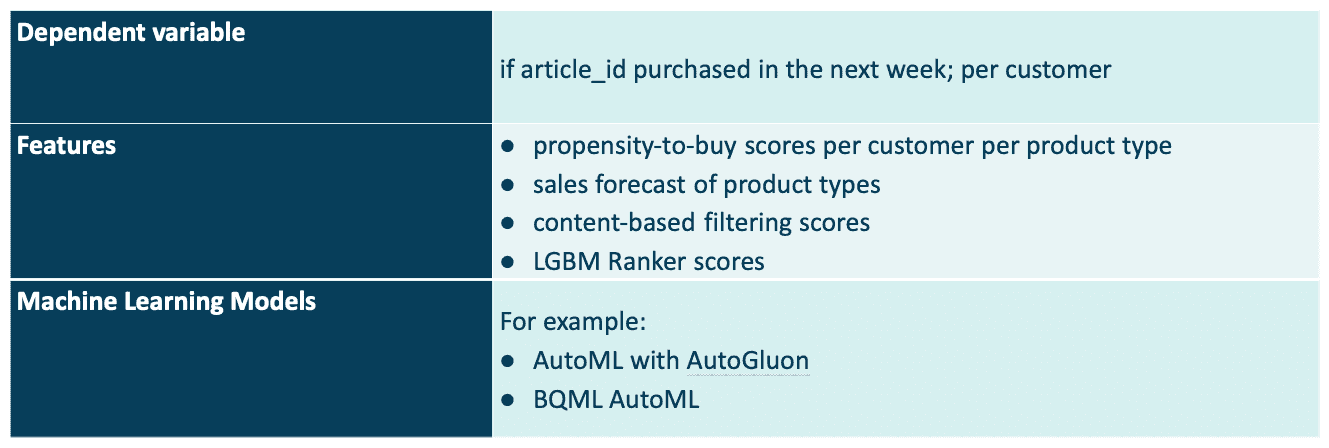

The expected input to a final Ensemble Model would be a table with an index of: customer_id, week_id, article_id. Columns would represent results from various ML models and possibly some other descriptive statistics and features concerning the customer or article itself.

With such a table of scores for each customer and each article, we have more than enough information to generate great recommendations. Adding properly designed model validation should create a powerful tool.

To generate the final scores with the model ensemble, we planned to use AutoML. However, it was not trivial… but more about that in the next blog posts.
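As a rough sketch of that input table, the snippet below joins the outputs of two hypothetical models on the (customer_id, week_id, article_id) index and picks the top articles. The plain average in `top_k` is only a stand-in for the real ensemble model, and every name and score is invented for illustration.

```python
# Hypothetical per-model scores keyed by (customer_id, week_id, article_id).
need_score = {("c1", 1, "a1"): 0.10, ("c1", 1, "a2"): 0.30, ("c1", 1, "a3"): 0.05}
style_score = {("c1", 1, "a1"): 0.90, ("c1", 1, "a2"): 0.20, ("c1", 1, "a3"): 0.40}


def build_ensemble_table(**model_scores):
    """Join model outputs into rows indexed by (customer_id, week_id, article_id)."""
    keys = set().union(*(scores.keys() for scores in model_scores.values()))
    return {
        key: {name: scores.get(key, 0.0) for name, scores in model_scores.items()}
        for key in sorted(keys)
    }


def top_k(table, customer_id, week_id, k=12):
    """Rank articles by the plain average of model scores (a stand-in for the ensemble)."""
    rows = [
        (sum(columns.values()) / len(columns), article_id)
        for (c, w, article_id), columns in table.items()
        if c == customer_id and w == week_id
    ]
    return [article_id for _, article_id in sorted(rows, reverse=True)[:k]]


table = build_ensemble_table(need=need_score, style=style_score)
top = top_k(table, "c1", 1, k=2)
```

The default `k=12` mirrors the 12 articles per customer required by the competition; `k=2` is used here only because the toy table has three articles.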

The table below presents a general concept of the final model.

This is the end of the conceptualization, the data science project part I like the most.

I hope you found the article interesting and after reading it you now have a different perspective on how you make decisions and how they can be quantified in a multidimensional space. I believe that it also gave you a sneak peek into a Data Scientist’s business problem solving process, where we need to match the worlds of business, psychology, emotions, marketing, math, statistics and machine learning. You definitely cannot say the job is not interesting :)

If you are more interested in the technical details, we will be publishing more detailed blog posts soon! So as not to miss them, subscribe to our newsletter. The articles will describe selected Machine Learning solutions that we used in the competition, e.g. How to create embeddings from images and text, What project management techniques are best for data science projects, and How to generate thousands of features using feature tools. And don’t worry if you think they can only be applied to apparel or e-commerce problems! They can be used to solve many more kinds of business problems than you think.

Interested in ML and MLOps solutions? How to improve ML processes and scale project deliverability? Watch our MLOps demo and sign up for a free consultation.
