A prototype is an early sample, model, or release of a product built to test a concept or process.

Wikipedia

What we have above is a nice, generic definition of the early stage of some development, one that is applicable in many areas. But let’s try to be more specific. What is a prototype in machine learning? A machine learning prototype is an overwhelming mix of spaghetti code, unversioned Jupyter Notebooks, and loose data samples that can be put together and run by no one but its creator. A machine learning prototype is something that causes MLOps engineers to suddenly wake up at 2 AM screaming “So what if it works on your machine?!”. A machine learning prototype is something that a data scientist adds to his pile of shame, seconds after hearing this from his manager: “Nice job, but now let’s build something deployable”. But does it have to be this way?

A few months ago, our GetInData Advanced Analytics team decided to participate in a Kaggle competition called “H&M Personalized Fashion Recommendations” (you can read more about this in one of our previous blog posts, “What drives your customer’s decisions? Find answers with Machine Learning Models! H&M’s Kaggle competition”). In short, as you can guess from its name, the competition was about developing a prototype of a recommendation system based on data provided by H&M, so not surprisingly, it was all about clothes. We had tabular data about customers, articles and transactions, along with article images and descriptions in natural language. Based on 2 years of history, we were supposed to predict, for each customer, the 12 articles they would buy in the following week. What we also had was a bunch of people in our team, eager to try a lot of different modeling approaches.

Nevertheless, we tried to be reasonable about all of this and, in addition to getting a decent score in the competition, we also aimed to organize our teamwork and structure our project as neatly as we could. So basically, we tried to create a machine learning prototype, but not a messy one. Although not everything went perfectly due to limited time and resources, we tried to approach the problem as if it were a real-life use case from a client, one that would eventually be productized, so that the transition from a PoC to a deployable, production-quality solution would take as little additional effort as possible.

That last idea resonated strongly in our team after the competition ended. What if we could generalize this concept of a well-organized way of prototyping that would make the results of the PoC phase of our data science projects clean, auditable and reproducible? What if we could have not only some guidelines on how to initiate and develop machine learning solutions, but also a practical set of solved use cases that we could partially reuse in real projects? What if we could effectively minimize the effort of productionizing our prototypes and scaling our local work into big data cloud environments? That’s how our GID ML Framework was born.

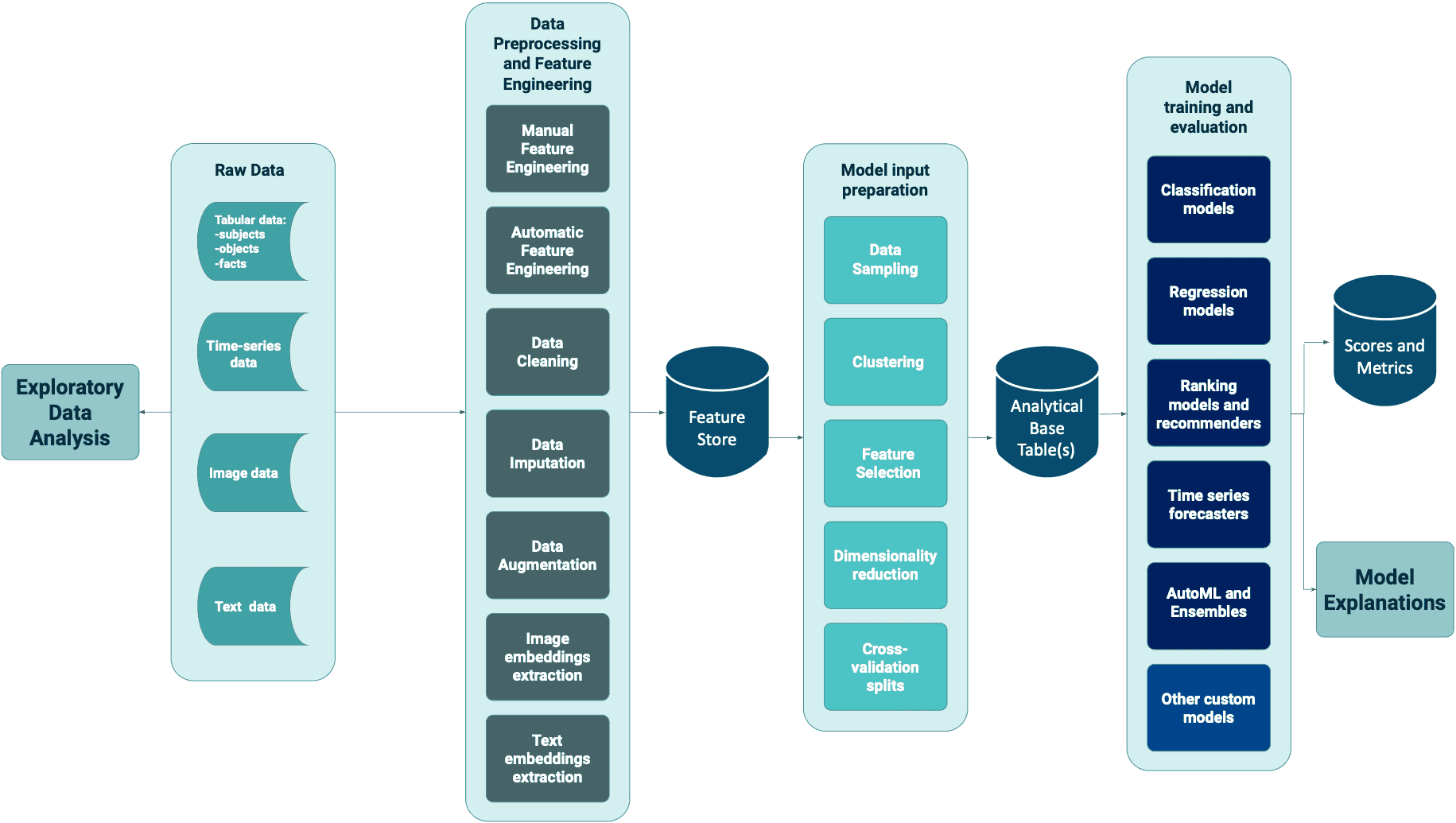

We started by looking at what we had created during the Kaggle competition and thinking about how we could improve and extend our approach to make it applicable to other machine learning use cases that we encounter in our work. We defined some not-too-specific, but also not-too-trivial modules which - if properly connected - could describe almost every typical machine learning pipeline (so far we are talking about core ML, but don’t worry, we didn’t forget that it eventually has to fit into the DataOps and MLOps landscapes). Then we went back to our Kaggle recommendation engine problem and, based on that specific use case, started implementing the codebase that would materialize a subset of modules in our predefined architecture. When we finished, we had not only an abstract scheme of a generic ML pipeline, but also a complete blueprint for solving a recommendation problem based on tabular, visual and text data using ranking models. This blueprint is not only a repository of well-documented, test-covered, adjustable and reusable chunks of code. It also gives us a clear example of how to:

And that is exactly what we want to achieve in the end.
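To make the idea of small, connectable modules more concrete, here is a minimal sketch in plain Python. It is not code from the GID ML Framework and it does not use any pipeline library: `Step`, `run_pipeline` and the three example functions are hypothetical names invented for this illustration. Each module is a function that declares which named datasets it reads and which one it writes, and a tiny runner wires them together.

```python
from typing import Any, Callable, Dict, List, NamedTuple


class Step(NamedTuple):
    """One pipeline module: a function plus the named data it reads and writes."""
    func: Callable[..., Any]
    inputs: List[str]
    output: str


def run_pipeline(steps: List[Step], data: Dict[str, Any]) -> Dict[str, Any]:
    """Run the steps in order, passing intermediate results between them by name."""
    store = dict(data)  # copy so the caller's dict is not mutated
    for step in steps:
        args = [store[name] for name in step.inputs]
        store[step.output] = step.func(*args)
    return store


# Hypothetical modules for a toy recommendation flow.
def filter_recent(transactions, min_week):
    return [t for t in transactions if t["week"] >= min_week]


def count_purchases(transactions):
    counts = {}
    for t in transactions:
        counts[t["article"]] = counts.get(t["article"], 0) + 1
    return counts


def top_k(counts, k):
    # Sort by count (descending), then by article id, so ties are deterministic.
    ranked = sorted(counts.items(), key=lambda item: (-item[1], item[0]))
    return [article for article, _ in ranked[:k]]


steps = [
    Step(filter_recent, ["transactions", "min_week"], "recent"),
    Step(count_purchases, ["recent"], "counts"),
    Step(top_k, ["counts", "k"], "recommendations"),
]

result = run_pipeline(
    steps,
    {
        "transactions": [
            {"week": 1, "article": "a"},
            {"week": 2, "article": "b"},
            {"week": 2, "article": "c"},
            {"week": 2, "article": "b"},
        ],
        "min_week": 2,
        "k": 2,
    },
)
print(result["recommendations"])
```

Because every step only talks to the others through named inputs and outputs, a single module (say, the ranking step) can be swapped or unit-tested without touching the rest, which is the property the modular architecture is after.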

The GID ML Framework is not a black-box AutoML problem solver, nor is it a tool that will automate prototype deployment. The GID ML Framework is a collection of best practices for developing machine learning solutions. But it’s not just a bunch of theoretical rules. It is a set of complete end-to-end use cases that are already implemented, documented and tested in both local and cloud setups. Those proven examples can serve as the basis for developing solutions for real use cases that are similar to some extent. Example uses would be:

And what’s best in all this - you won’t end up with an illegible tangle of loose code chunks or “Untitled.ipynb” files. If you just follow a little bit of discipline, which this framework is designed to help you maintain, you will get well-documented, modular, unit-tested, production-quality code and a complete record of your experimentation procedure and model versions. In other words, after the PoC you will be surprised by how many approaches you were able to compare. Your MLOps team, in turn, will be surprised by how little work is left for them to deploy your prototype.
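As a small illustration of what "documented, modular and unit-tested" can look like in practice, here is a hedged sketch of one helper with pytest-style tests. The function `pad_recommendations` is a hypothetical example written for this post, not part of the framework; the default of 12 items simply mirrors the competition task described earlier.

```python
from typing import List


def pad_recommendations(ranked: List[str], fallback: List[str], k: int = 12) -> List[str]:
    """Return up to k unique article ids: model output first, then fallback items.

    Fewer than k ids are returned only when there are not enough candidates.
    """
    result: List[str] = []
    for article in ranked + fallback:
        if article not in result:
            result.append(article)
        if len(result) == k:
            break
    return result


# pytest collects functions named test_*; plain asserts are all it needs.
def test_keeps_model_order_and_fills_from_fallback():
    assert pad_recommendations(["a", "b"], ["c", "d"], k=3) == ["a", "b", "c"]


def test_removes_duplicates():
    assert pad_recommendations(["a", "a"], ["a", "b"], k=3) == ["a", "b"]


def test_truncates_to_k():
    assert pad_recommendations(["a", "b", "c"], [], k=2) == ["a", "b"]
```

Saved in a file whose name starts with `test_`, these functions would be picked up by running `pytest` in the project directory.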

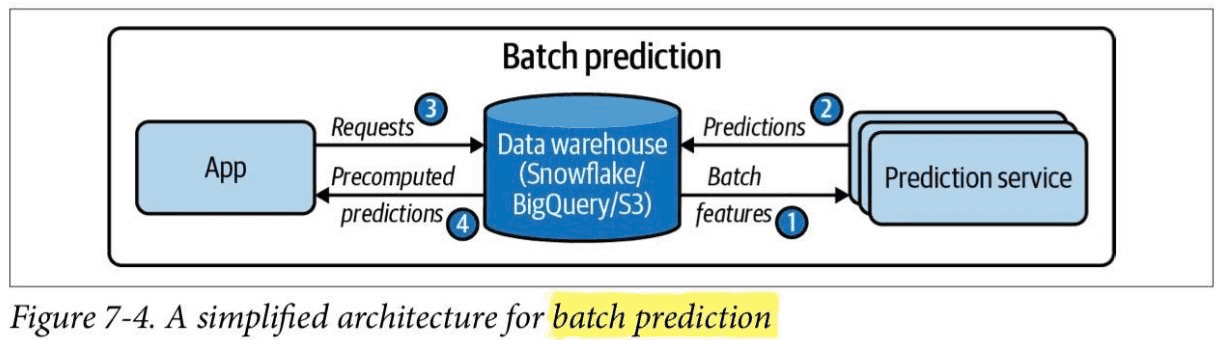

What makes the above idea feasible is the technology involved. Our main assumption is to stick to state-of-the-art, well-proven open source tooling. We want the GID ML Framework to be applicable and adjustable to any MLOps architecture, so we avoid using commercial or proprietary software for essential functionalities. We use Python as the main programming language. We use Poetry and pyenv to manage environments, although we have also verified other options using conda, pip-compile and other tools. We use Docker to containerize these environments and make them portable. We also use a set of modern linters and other code quality tools, such as Black, isort, flake8 and pre-commit. We put emphasis on properly covering the whole codebase with unit tests using pytest. For machine learning, exploratory data analysis and model explainability, we use or plan to use a wide variety of Python packages. Deep learning modules have so far been based mainly on our favorite, PyTorch, but nothing stands in the way of using TensorFlow or other frameworks. Experiment tracking and model versioning are done by default with MLflow, in keeping with the open source principle, although we have already tried other experiment tracking software whenever we needed more sophisticated capabilities, e.g. for visual data. The only non-open source piece of the stack used so far has been Google Cloud Platform, which we used to test the transferability and scalability of our locally developed solutions. However, we want to remain open to any cloud provider or, more generally, to any full-scale production environment. But combining all this would be a real challenge if it weren’t for the one essential piece at the very heart of our GID ML Framework architecture: Kedro.

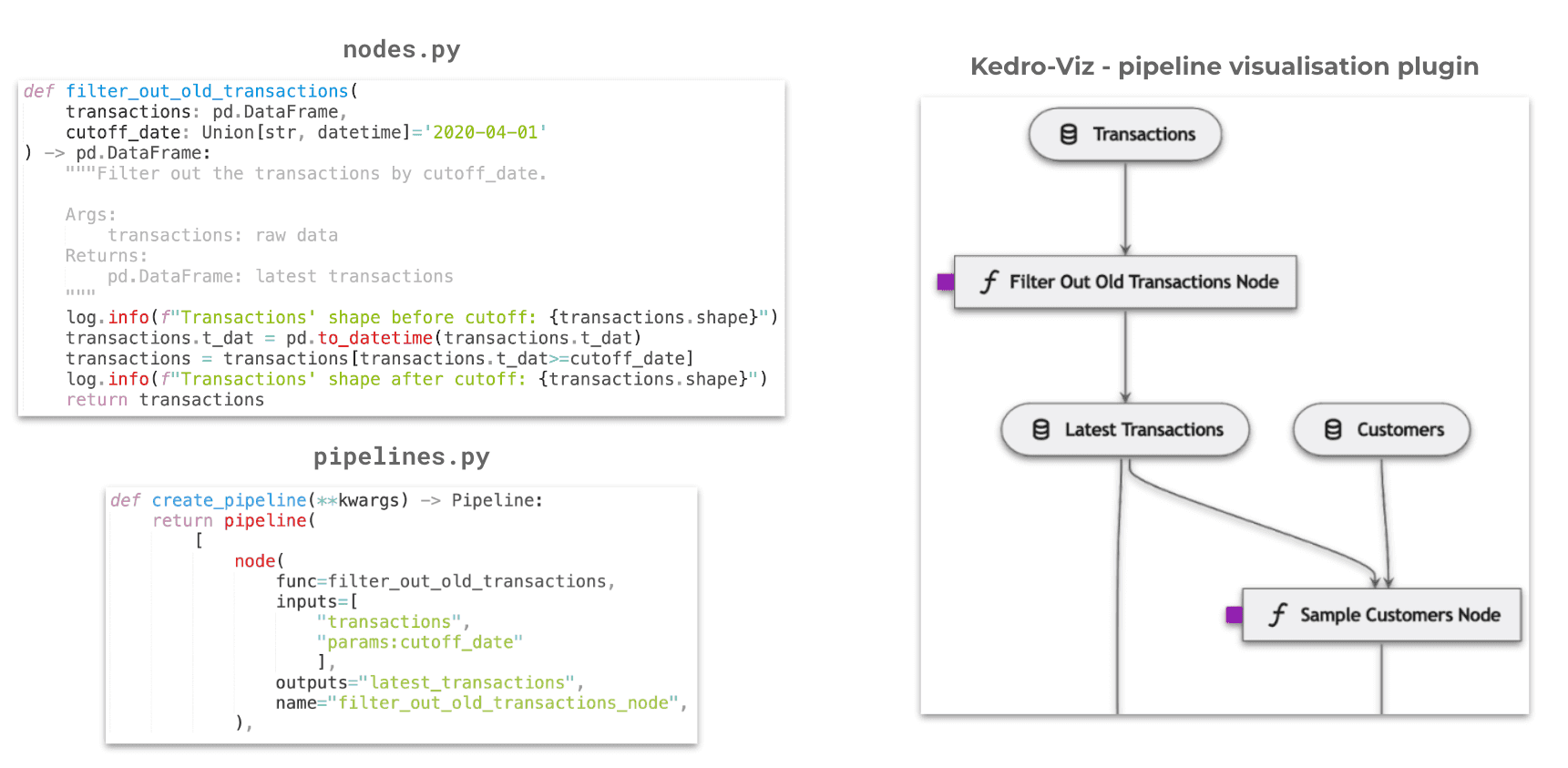

Kedro is an open source framework for developing data science code that borrows a lot of concepts and best practices from the software engineering world. Kedro has a number of features that exactly reflect our philosophy of creating a customizable, modular framework to be applied to certain classes of ML problems:

Having the core machine learning framework structure already developed, we are now focusing on creating new example use cases based on our real-life project experience and adding new common functionalities. What we already have can be summarized as follows:

We have either already started, or plan to start in the near future, work on the following:

Of course, we still feel like we're only getting started and we have many more ideas for the future, such as model ensembling, machine learning on streaming data, explainable AI modules, customizable data imputation and many more. Finally, it is worth adding that we plan to open source our work soon to collect direct feedback and, hopefully, gain contributors from outside our company.

Interested in ML and MLOps solutions? How to improve ML processes and scale project deliverability? Watch our MLOps demo and sign up for a free consultation.
